Posted 31 January 2023

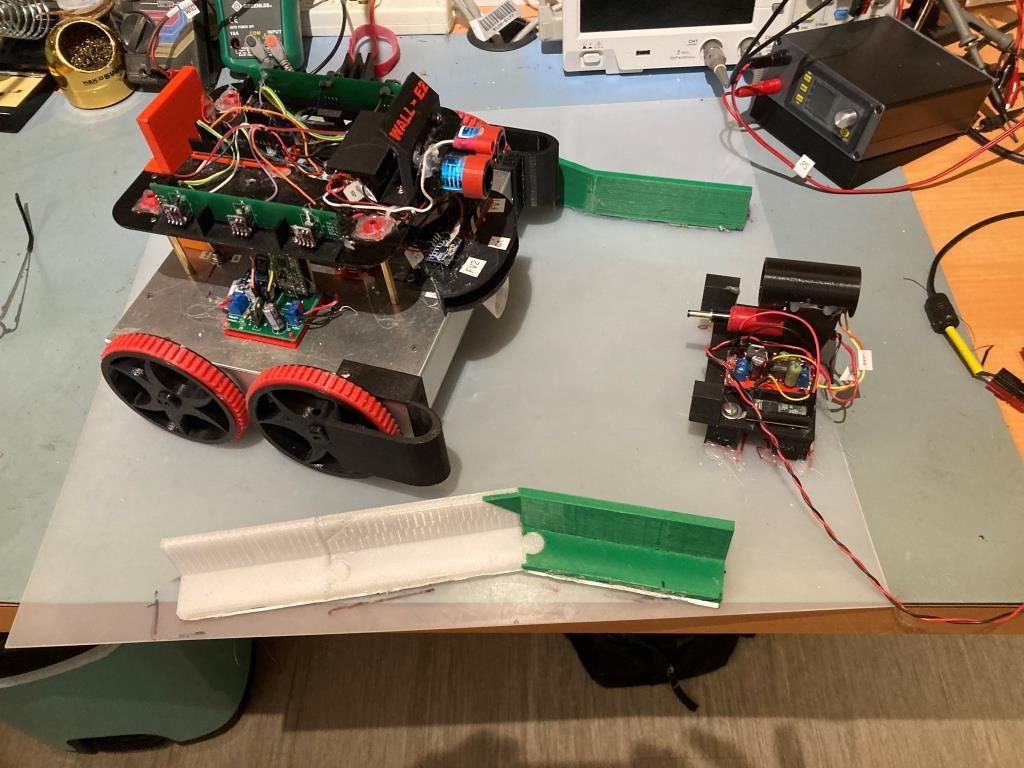

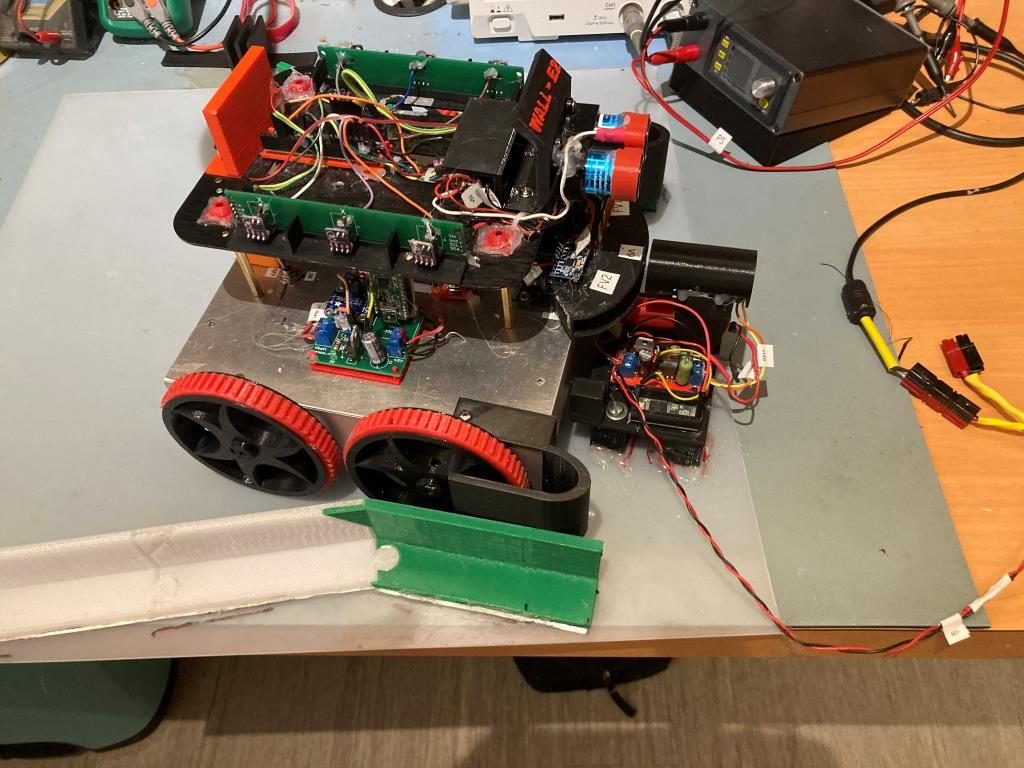

I think I have now arrived at the point where the major sub-systems in my autonomous wall-following robot program are working well. The last piece (I think) of the puzzle was the offset tracking algorithm (see https://www.fpaynter.com/2023/01/walle3-wall-track-tuning-review/). The major sub-systems and their status:

- Wall-Track Tuning – just finished (I hope)

- Move to Front/Rear/Left/Right Distance – These are generally working now.

- Detection of and tracking/homing to a charging station via IR beam.

- Error condition detection/handling – this is still an open question to me. The program handles a number of error conditions, but I’m sure there are some error conditions that it doesn’t handle, or doesn’t handle well (the ‘open doorway’ detection and handling issue, for one).

The last full program I see in my Arduino directory is ‘WallE3_WallTrack_V5’, although it appears that _V5 isn’t that much different than _V4. Below are the changes from V4 to V5:

- There are a number of changes in _V5’s #pragma OFFSET_CAPTURE section, but as I just discovered in this post, the new wall tracking configuration with PID(350,0,0) and ‘tweak’ divisor 50 (as described at the bottom of this post) means that I don’t need a separate ‘offset capture’ feature at all – the normal offset tracking routine handles the capture portion quite well, thank you.

- There are some very minor changes in _V5’s TrackLeftWallOffset(), but this section will be replaced in its entirety with the algorithm from the above post

- V5 has a function called OrientCorr() that doesn’t exist in _V4, but it was just for debugging support.

So, I think I will start by creating yet another Arduino project from scratch, with the intention of building up to a complete working program, with wall offset tracking, charging station detection/docking, and anomalous condition detection/handling. But what to call it? I think I will go with ‘WallE3_Complete_V1’ and see how that works. I think I will need to be careful during its construction, as I want to incorporate all the progress I have made in the various ‘part-task’ programs:

- WallE3_AnomalyRecovery_V1/V2

- WallE3_ChargingStn_V1/V2/V3

- WallE3_FrontBackMotionTuning_V1

- WallE3_ParallelFind_V1

- WallE3_RollingTurn_V1

- WallE3_SpinTurnTuning_V2

- WallE3_WallTrack_V1-V5

- WallE3_WallTrackTuning_V1-V5

I will start by creating WallE3_Complete_V1 as a new blank program, and then going carefully through each of the above ‘part-task’ programs (in alphabetical order each time, just to reduce the confusion factor) to pull in the relevant bits.

Includes:

It looks like the complete #includes section is:

```cpp
#pragma region INCLUDES
#include <Wire.h>
#include "FlashTxx.h" // TLC/T3x/T4x flash primitives
#include <elapsedMillis.h>
#include "MPU6050_6Axis_MotionApps612.h" //01/18/22 changed to use the \I2CDevLib\Arduino\MPU6050\ version
#include "I2C_Anything.h" //needed for sending float data over I2C
#include "timelib.h" //added 01/01/22 for charge monitoring support
#include <math.h>
#include "enums.h" //12/20/2022 added to avoid intellisense errors assoc with enums
#pragma endregion Includes
```

Oddly though, many of my programs don’t #include <wire.h>, but they compile and run fine – no idea why. OK, the reason is – “I2C_Anything.h” also includes <wire.h>. When I look at ‘wire.h’, I see it has a ‘#ifndef TwoWire_h’ statement at the top, so adding #include <wire.h> at the top won’t cause a problem, and I like it just for its informational value.
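As a rough illustration of why a second #include of a guarded header is harmless, here is a minimal single-file sketch. The guard name `DEMO_TWOWIRE_H` and the `DemoTwoWire` struct are hypothetical stand-ins, not the contents of the real Wire.h; the two guarded sections simply imitate the same header being pulled in twice.

```cpp
// First "inclusion": the guard macro is not defined yet, so this body is compiled.
#ifndef DEMO_TWOWIRE_H   // hypothetical guard name, standing in for TwoWire_h
#define DEMO_TWOWIRE_H
struct DemoTwoWire {
    int begin() { return 1; }
};
#endif

// Second "inclusion": the guard macro now exists, so the preprocessor skips
// this copy and no redefinition error occurs.
#ifndef DEMO_TWOWIRE_H
#define DEMO_TWOWIRE_H
struct DemoTwoWire {
    int begin() { return 2; }
};
#endif

// Uses the only definition that survived preprocessing (the first one).
int DemoBegin() {
    DemoTwoWire wire;
    return wire.begin();
}
```

Without the guard, the second struct definition would be a compile error, which is the problem the `#ifndef` line at the top of a header is there to prevent.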

After copying in the #includes, I right-clicked on the project name and selected ‘add->existing item…’ and added ‘enums.h’, ‘FlashTxx.h’ and ‘FlashTxx.cpp’ from the ‘…\Robot Common Files’ folder. Then I opened a CMD window and used mklink (see this link) to create hard links to ‘board.txt’ and ‘TeensyOTA1.ttl’. At this point, the minimalist program (only #defines and empty setup() and loop() functions) compiles without error – yay!

#Define Section:

Next in line are all the #Defines:

```cpp
#pragma region DEFINES
//02/29/16 hardware defines
//#define NO_MOTORS
//#define NO_MPU6050 //added 01/23/22
//#define IR_HOMING_ONLY
//#define NO_LIDAR
//#define NO_VL53L0X //01/08/22 now used for VL53L0X hardware
//#define NO_IRDET //added 04/05/17 for daytime in-atrium testing (too much ambient IR)
//#define DISTANCES_ONLY //added 11/14/18 to just display distances in infinite loop
//#define NO_STUCK //added 03/10/19 to disable 'stuck' detection
//#define BATTERY_DISCHARGE //added 03/04/20 to discharge battery safely
#define NO_POST //added 04/12/20 to skip all the POST checks

//11/07/2020 moved all I2C Address declarations here
#define IRDET_I2C_ADDR 0x08
#define MPU6050_I2C_ADDR 0x68
#pragma endregion Program #Defines
```

TIME INTERVALS Section:

```cpp
#pragma region TIME INTERVALS
const uint16_t MSEC_PER_IR_HOMING_ADJ = 150; //06/27/22 100ms is too fast for GetFrontDistCm()
const int MSEC_PER_DIST_UPDATE = 100; //10/02/22 rev to speed up rate indep of front LIDAR
const uint16_t WALL_TRACK_UPDATE_INTERVAL_MSEC = MSEC_PER_DIST_UPDATE;//10/02/22 rev to make these two intervals the same
const uint16_t FRONT_DISTANCE_UPDATE_INTERVAL_MSEC = 250; //10/02/22 added to separate out slow front LIDAR from faster VL53L0X sensors
const uint16_t TURN_RATE_UPDATE_INTERVAL_MSEC = 30; //30 mSec is as fast as it can go

elapsedMillis MsecSinceLastAdj; //added 05/24/22 for ParallelOrientation() routine
elapsedMillis MsecSinceLastIRHomingAdj; //01/07/22 used for #ifdef IR_HOMING_ONLY block
elapsedMillis MsecSinceLastDistUpdate; //01/07/22 used for local dist update loops
elapsedMillis MsecSinceLastTurnRateUpdate;//heading/rate based turn support
elapsedMillis mSecSinceLastWallTrackUpdate;
elapsedMillis MsecSinceLastFrontDistUpdate; //10/02/22 slower update rate needed for front LIDAR
#pragma endregion TIME INTERVALS
```

Note that the above const declarations for MSEC_PER_DIST_UPDATE and FRONT_DISTANCE_UPDATE_INTERVAL_MSEC could just as easily be in the DISTANCE_MEASUREMENT_SUPPORT section, but I decided to try and keep all the timing stuff together. I’ll also put these declarations in the DISTANCE_MEASUREMENT_SUPPORT section but commented out with a pointer to the timing section.
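To show how interval constants and elapsed-time counters of this kind typically work together, here is a minimal simulation in plain C++. `SimElapsedMillis` and `CountUpdates()` are hypothetical stand-ins so the sketch can run off the robot; on the Teensy an `elapsedMillis` variable advances on its own, and the `>=` comparison here is my assumption (the tracking loop later in this post compares with `>`).

```cpp
#include <cstdint>

// Hypothetical stand-in for an elapsedMillis-style counter. Here the
// simulated clock advances it by hand, one millisecond per loop pass.
struct SimElapsedMillis {
    uint32_t ms = 0;
};

const uint16_t MSEC_PER_DIST_UPDATE = 100;                // value from the post
const uint16_t FRONT_DISTANCE_UPDATE_INTERVAL_MSEC = 250; // value from the post

// Count how many times a task with the given interval fires in totalMs.
int CountUpdates(uint32_t totalMs, uint16_t intervalMs) {
    SimElapsedMillis sinceLast;
    int fired = 0;
    for (uint32_t t = 1; t <= totalMs; ++t) {
        ++sinceLast.ms;                  // simulated passage of 1 ms
        if (sinceLast.ms >= intervalMs) {
            sinceLast.ms -= intervalMs;  // subtract rather than zero, so overshoot is carried forward
            ++fired;
        }
    }
    return fired;
}
```

Over one simulated second, `CountUpdates(1000, MSEC_PER_DIST_UPDATE)` fires ten times while `CountUpdates(1000, FRONT_DISTANCE_UPDATE_INTERVAL_MSEC)` fires four times, which is the point of separating the slow front LIDAR interval from the faster side-sensor interval.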

TELEMETRYSTRINGS Section:

```cpp
#pragma region TELEMETRYSTRINGS
const char* AnomalyStrArray[] = { "NO_ANOMALIES", "STUCK_AHEAD", "STUCK_BEHIND", "OBSTACLE_AHEAD",
"WALL_OFFSET_DIST_AHEAD","OBSTACLE_BEHIND", "OPEN_CORNER", "DEAD_BATTERY", "CHARGER_CONNECTED" };//06/12/22 CHG_STN_AVAIL removed
//const char* TrkStrArray[] = { "None", "Left", "Left Capture", "Right", "Right Capture", "Neither" };
const char* TrkStrArray[] = { "None", "Left", "Right", "Neither" };//01/31/23 removed "_Capture" elements
const char* IRHomingTelemStr = "Time\tBattV\tFin1\tFin2\tSteer\tPID_Out\t\tLSpd\tRSpd\tFrontD\tRearD";
const char* IRHomingTelemStrNoPings = "Time\tBattV\tFin1\tFin2\tSteer\tPID_Out\t\tLSpd\tRSpd\n";
const char* LeftWallFollowTelemStr = "Msec\tLFront\tLCtr\tLRear\tFront\tFrontVar\tRear\tSteer\tOutput\tSetPt\tAdjDist\tLSpd\tRSpd";
const char* WallFollowTelemHdrStr = "Msec\tLF\tLC\tLR\t\tRF\tRC\tRR\t\tF\tFvar\tR\tRvar\tSteer\tSet\tOutput\tLSpd\tRSpd\tIRAvg\n";
const char* WallFollowTelemStr = "%lu\t%d\t%d\t%d\t\t%d\t%d\t%d\t\t%d\t%2.0f\t%d\t%2.0f\t%2.2f\t%2.2f\t%2.0f\t%d\t%d\t%lu\n";
//const char* ChargingTelemStr = "ChgSec\tBattV\tTotalI\tRunI\tChgI\tRearD\n"; //rev 01/30/21
const char* ChargingTelemStr = "ChgSec\tBattV\tTotalI\tRunI\tChgI\tbChging\n"; //rev 01/30/21
#pragma endregion Mode-specific telemetry header strings
```

I removed the “_Capture” elements from TrkStrArray[] as these are no longer needed (wall offset capture is now just part of TrackLeftRightOffset()). The rest should be OK for now.

DISTANCE_MEASUREMENT_SUPPORT Section:

```cpp
#pragma region DISTANCE_MEASUREMENT_SUPPORT
//misc LIDAR and Ping sensor parameters
const uint16_t MIN_FRONT_OBSTACLE_DIST_CM = 30; //rev 04/28/17 for better obstacle handling
const uint16_t CHG_STN_AVOIDANCE_DIST_CM = 40; //added 03/11/17 for charge stn avoidance
const uint16_t MAX_FRONT_DISTANCE_CM = 400;
const uint16_t MAX_LR_DISTANCE_CM = 200; //04/19/15 now using sep parameters for front and side sensors

//04/01/2015 added for 'stuck' detection support
const uint16_t FRONT_DIST_ARRAY_SIZE = 50; //11/22/20 doubled to acct for 10Hz update rate
const uint16_t FRONT_DIST_AVG_WINDOW_SIZE = 3; //moved here & renamed 04/28/19
const uint16_t LR_DIST_ARRAY_SIZE = 3; //04/28/19 added to reinstate l/r dist running avg
const uint16_t REAR_DIST_ARRAY_SIZE = 50; //02/28/22 bumped to 50
const uint16_t LR_AVG_WINDOW_SIZE = 3; //added 04/28/19 so front & lr averages can differ
const uint16_t STUCK_FRONT_VARIANCE_THRESHOLD = 50; //chg to 50 04/28/17
const uint16_t STUCK_REAR_VARIANCE_THRESHOLD = 50; //added 01/08/22
const uint16_t NO_LIDAR_FRONT_VAR_VAL = 10 * STUCK_FRONT_VARIANCE_THRESHOLD; //01/16/19

//04/28/19 added to reinstate l/r dist running avg
//06/28/20 chg to uint_16 to accommodate change from cm to mm
uint16_t aFrontDist[FRONT_DIST_ARRAY_SIZE]; //04/18/19 rev to use uint16_t vs byte
uint16_t aLeftDistCM[LR_DIST_ARRAY_SIZE];
uint16_t aRightDistCM[LR_DIST_ARRAY_SIZE];
uint16_t aRearDistCM[REAR_DIST_ARRAY_SIZE];

uint16_t curMinObstacleDistance = MIN_FRONT_OBSTACLE_DIST_CM;//added 03/11/17 for chg stn avoidance

//added 10/24/20
//const int STUCK_BACKUP_DISTANCE_CM = 25;
const uint16_t STUCK_BACKUP_DISTANCE_CM = 20; //11/21/28 shortened slightly
const uint16_t STUCK_FORWARD_DISTANCE_CM = 15;
const uint16_t MAX_REAR_DISTANCE_CM = 100; //03/01/22 rev to use actual experimental results
const uint16_t MIN_REAR_OBSTACLE_DIST_CM = 10;
const uint16_t STUCK_BACKUP_TIME_MSEC = 1000;
const uint16_t STUCK_FORWARD_TIME_MSEC = 1000;
//const uint16_t FRONT_DISTANCE_UPDATE_INTERVAL_MSEC = 250; //01/31/23 this const was moved to 'TIME_INTERVALS'
#pragma endregion Distance Measurement Support
```

Copied this from ‘WallE3_AnomalyRecovery_V2’. It looks pretty complete. Note that I left the ‘STUCK_FORWARD/BACKUP_TIME_MSEC’ declarations here rather than in the ‘Timing’ section. Didn’t seem to warrant the attention.
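The variance thresholds in this section suggest how a ‘stuck’ check over a distance history array might work: if the recent readings barely change, the robot probably isn’t moving. The sketch below is only my guess at that idea, not the code from IsStuckAhead(); `DistVariance()` and `LooksStuck()` are hypothetical helpers, and the use of population variance and a strict `<` comparison are assumptions.

```cpp
#include <cstddef>
#include <cstdint>

const uint16_t STUCK_FRONT_VARIANCE_THRESHOLD = 50; // value from the post

// Population variance of the stored distance readings (cm squared).
float DistVariance(const uint16_t* dists, size_t count) {
    if (count == 0) return 0.0f;
    float sum = 0.0f;
    for (size_t i = 0; i < count; ++i) sum += dists[i];
    const float mean = sum / count;
    float sumSq = 0.0f;
    for (size_t i = 0; i < count; ++i) {
        const float d = dists[i] - mean;
        sumSq += d * d;
    }
    return sumSq / count;
}

// Assumed test: a variance below the threshold means the readings are
// essentially constant, so the robot is treated as stuck.
bool LooksStuck(const uint16_t* dists, size_t count) {
    return DistVariance(dists, count) < STUCK_FRONT_VARIANCE_THRESHOLD;
}
```

For example, readings of 30, 30, 31, 30, 30 cm give a variance of about 0.16 and would count as stuck, while 100, 90, 80, 70, 60 cm give a variance of 200 and would not.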

PIN ASSIGNMENTS Section:

Copied from ‘WallE3_AnomalyRecovery_V2’ – looks pretty complete

MOVE TO DESIRED DISTANCE Section:

```cpp
//04/25/21 moved here from MoveToDesiredFront/BackDist() functions
//01/31/23 TODO: Globally change 'OffsetDistKx' to 'MoveToDist_Kx', 'OffsetDistOutput' to 'MoveToDistOutput', 'OffsetDistVal' to 'MoveToDistInput'
#pragma region MoveToDesiredDist MOVE TO DESIRED DIST SUPPORT
//12/26/22 updated per https://www.fpaynter.com/2022/12/move-to-a-specified-distance-revisited/
const float OffsetDistKp = 1.5f; //neg value creates PID in REVERSE mode
const float OffsetDistKi = 0.1f;
const float OffsetDistKd = 0.2f;

//float OffsetDistSetpointCm, OffsetDistOutput;//10/06/17 chg input variable name to something more descriptive
float OffsetDistOutput;//10/06/17 chg input variable name to something more descriptive
double OffsetDistVal = 0; //has to be 'double'
#pragma endregion MoveToDesiredFront/Back/Left/RightDist()
```

I changed the #pragma name from ‘FRONT_BACK OFFSET MOTION PID’ to ‘MOVE TO DESIRED DIST SUPPORT’ as that is a better description of what these parameters do. In addition, the ‘Offsetxxxx’ name is no longer relevant – it should be changed to ‘MoveToDistxxx’, but I don’t want to do that willy-nilly now. I’ll wait until the entire program compiles, and then (after one last check) I’ll make the change globally.

Charge Support Parameters Section:

Copied from ‘WallE3_AnomalyRecovery_V2’ – looks good.

MOTOR_PARAMETERS Section:

Copied from ‘WallE3_AnomalyRecovery_V2’ – looks good.

MPU6050_SUPPORT Section:

Copied from ‘WallE3_AnomalyRecovery_V2’ – looks good.

WALL_FOLLOW_SUPPORT Section:

```cpp
#pragma region WALL_FOLLOW_SUPPORT
float WALL_OFFSET_TRACK_Kp = 200;//07/09/22 from WallE3_WallTrackTuning.ino
float WALL_OFFSET_TRACK_Ki = 20;//07/09/22 from WallE3_WallTrackTuning.ino
float WALL_OFFSET_TRACK_Kd = 0;
float WallTrackSteerVal, WallTrackOutput, WallTrackSetPoint;
const uint16_t WALL_OFFSET_TGTDIST_CM = 40;
const uint16_t REAR_OBSTACLE_RECOVERY_DISTANCE_CM = 10; //added 09/03/22
const uint16_t WALL_OFFSET_APPR_ANGLE_MINDEG = 30;//added 09/08/21
const float WALL_TRACK_CAPTURE_MAX_STEERING_VAL = 0.8;//09/06/22 added for use in RunToDaylight()
//const float LEFT_WALL_PARALLEL_STEER_VALUE = -0.35; //added 10/08/22
//const float RIGHT_WALL_PARALLEL_STEER_VALUE = 0.25; //added 10/08/22
#pragma endregion WALL_FOLLOW_SUPPORT
```

Copied from ‘WallE3_AnomalyRecovery_V2’, except I commented out the ‘LEFT/RIGHT_WALL_PARALLEL_STEER_VALUE’ constants, as these are no longer used.

HEADING_AND_RATE_BASED_TURN_PARAMETERS Section:

Only the ‘TurnRate_Kx’ parameters, ‘HDG_NEAR_MATCH’, ‘HDG_FULL_MATCH’, ‘HDG_MIN_MATCH’ and ‘DEFAULT_TURN_RATE’ should be in this section. Everything else should be local to the ‘turn’ functions (I kept ‘Prev_HdgDeg’ and ‘TurnRatePIDOutput’ at global scope for now to avoid lots of compile errors, but they should also be removed).

PARALLEL_FIND_SUPPORT Section:

```cpp
#pragma region PARALLEL_FIND_SUPPORT
//05/24/22 added for new RotateToParallelOrientation() routine
//const int ParallelFindKp = 100;
const float ParallelFindKp = 50.f;
const float ParallelFindKi = 10.f;
const float ParallelFindKd = 0.f;
const float ParallelFindSetpoint = 0.0; //09/22/20 moved here
const float ParallelFindThreshold = 0.01; //05/24/22
float ParallelFindOutput = 0; //05/24/22
#pragma endregion PARALLEL_FIND_SUPPORT
```

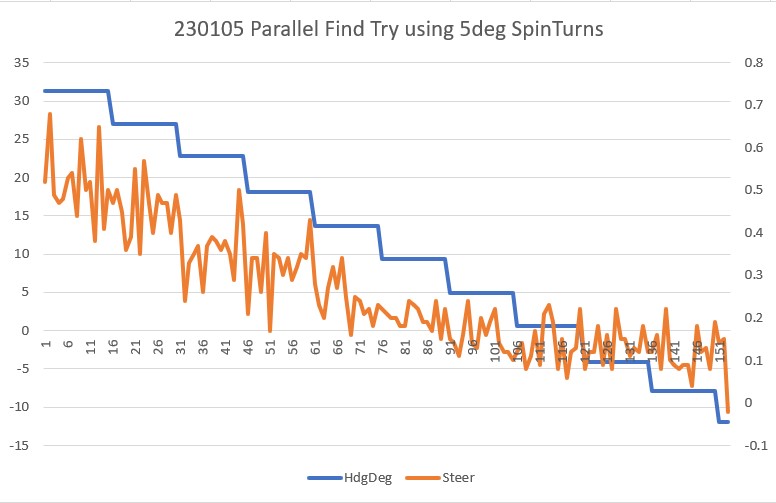

03 February 2023: The entire PARALLEL_FIND_SUPPORT section has been removed, as the new RotateToParallelOrientation() function no longer uses a PID engine

IR_HOMING_SUPPORT Section:


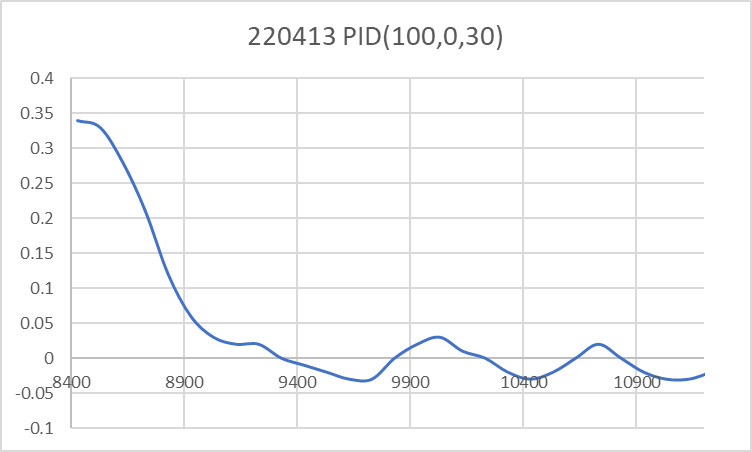

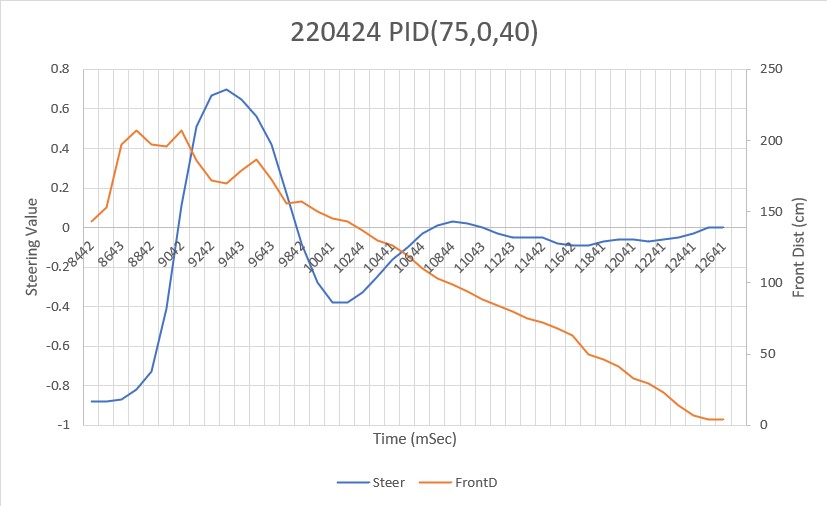

```cpp
#pragma region IR_HOMING_SUPPORT
//uint8_t IR_HOMING_MODULE_SLAVE_ADDR = 8; //uint8_t type reqd here for Wire.requestFrom() call //mvd to #define 02/02/23
float IRHomingSetpoint, IRHomingOutput;//10/06/17 chg input variable name to something more descriptive
double IRHomingLRSteeringVal = 0;//04/12/22 has to be double (8 bytes)

//these values all come from IR Homing Teensy via I2C calls
uint32_t IRFinalValue1 = 0;
uint32_t IRFinalValue2 = 0;
uint32_t IRHomingValTotalAvg = 0;

//01/11/22 started over again with WallE3 and home-grown PID algorithm
//01/15/22 reduce Kp to 150, keeping others the same
const float IRHomingKp = 75.f;
const float IRHomingKi = 00.f;
const float IRHomingKd = 40.f; //worse

//04/14/22 chg from const long to const uint32_t
const uint32_t IR_BEAM_DETECTION_CHANNEL_MAX = 2621440;
//const uint32_t IR_BEAM_DETECTION_THRESHOLD = 15000;
const uint32_t IR_BEAM_DETECTION_THRESHOLD = 25000;//05/08/22
const float IR_HOMING_STEERING_VAL_CAPTURE_THRESHOLD = 0.3; //added 01/30/21
const float IR_HOMING_STEERING_VAL_DETECTION_THRESHOLD = 0.8; //added 03/30/21
const uint16_t IRHOMING_VALUE_ARRAY_SIZE = 3; //added 03/16/21 for value average support
long int aIRHOMINGVALTOTALS[IRHOMING_VALUE_ARRAY_SIZE];//added 03/16/21 for value average support
const uint16_t IRHOMING_IAP_OFFSET_LOW_THRESHOLD_PCT = 75; //03/21/21 if wall offset is less than this relative to IAP offset, then adjust
const uint16_t IRHOMING_IAP_OFFSET_HIGH_THRESHOLD_PCT = 110; //03/23/21 if wall offset is more than this relative to IAP offset, then adjust
//const float IRHOMING_IAP_FINE_TUNE_STEERING_VALUE_THRESHOLD = 0.2; //03/21/21 fine-tune if more off-boresight than this
const float IRHOMING_IAP_STEERING_VALUE_THRESHOLD = 0.8; //05/28/22

//added 05/28/22 for better TurnToHomingBeacon() performance
const uint16_t IRHOMING_STEERVALUE_ARRAY_SIZE = 10;
float aIRHOMINGSTEERVALS[IRHOMING_STEERVALUE_ARRAY_SIZE];

const float IRHOMING_DISTANCE_OFFSET_RATIO = 0.3; //added 06/17/22
#pragma endregion IR_HOMING_SUPPORT
```

Copied these from WallE3_AnomalyRecovery_V2. At some point the ‘IRHomingSetpoint’ variable should be changed from global to local scope, and ‘IRHomingLRSteeringVal’ should be renamed to ‘gl_IRHomingLRSteeringVal’ to show global scope. Same with ‘IRFinalValue1’, ‘IRFinalValue2’ and ‘IRHomingValTotalAvg’

GLOBAL_VARIABLES Section:

```cpp
#pragma region GLOBAL_VARIABLES
float glBatteryVoltage;
uint16_t glLeftspeednum = MOTOR_SPEED_HALF;
uint16_t glRightspeednum = MOTOR_SPEED_HALF;

NavCases glNavCase = NavCases::NAV_WALLTRK;
WallTrackingCases glTrackingCase = WallTrackingCases::TRACKING_NEITHER; //added 01/05/16
WallTrackingCases glPrevTrackingCase = WallTrackingCases::TRACKING_LEFT; //only used decide which way to turn in the TRACKING_NEITHER case
OpModes glPrevOpMode = OpModes::MODE_NONE; //added 03/08/17, rev to MODE_NONE 04/04/17
OpModes glCurrentOpMode = OpModes::MODE_NONE; //added 10/13/17 so can use in motor speed setting routines

//04/10/20 added for experiment to port heading based wall tracking from two wheel robot
TrackingState glCurrentTrackingState = TrackingState::TRK_RIGHT_NONE;
TrackingState glPrevTrackingState = TrackingState::TRK_RIGHT_NONE;

int16_t glFinalLeftSpeed = 0;
int16_t glFinalRightSpeed = 0;

//02/15/22 added from FourWD_WallE2_V12.ino
uint16_t glFrontCm = 0;
float glFrontvar = 0;//chg to float 03/23/22
float glRearvar = 0;//chg to float 03/23/22
//float glFrontmean = 0;
//float glRearmean = 0;//06/12/22 not needed - local var is OK

//03/07/22 prepended 'gl_'
bool gl_bIRBeamAvail = false;
bool gl_bChgConnect = false;
bool gl_bObstacleAhead = false;
bool gl_bWallOffsetDist = false;//03/27/22 added
bool gl_bObstacleBehind = false;
bool gl_bStuckAhead = false;
bool gl_bStuckAhead_Slow = false;
bool gl_bStuckBehind = false;
bool gl_bDeadBattery = false;

//Sensor data values
//03/01/22 rev to store all dists in cm vs mm
uint16_t glRightFrontCm;
uint16_t glRightCenterCm;
uint16_t glRightRearCm;
uint16_t glRearCm; //added 10/24/20
float glRightSteeringVal; //added 08/06/20

uint16_t glLeftFrontCm;
uint16_t glLeftCenterCm;
uint16_t glLeftRearCm;
float glLeftSteeringVal; //added 08/06/20

bool bVL53L0X_TeensyReady = false; //11/10/20 added to prevent bad data reads during Teensy setup()

Stream* gl_pSerPort = 0; //09/26/22 made global so can use everywhere.

//02/05/22 ported from FourWD_WallTrackTest_V4
#pragma endregion GLOBAL_VARIABLES
```

It looks like most, if not all, of the global variables associated with TrackingCases, OpModes, and TrackingStates are no longer used. I left them in for now, but will go back through and remove unused vars when WallE3_Complete_V1 is finished.

SETUP():

SERIAL_PORTS Section:

Copied verbatim from ‘WallE3_AnomalyRecovery_V2’ – looks good

PIN_INITIALIZATION, SERIAL_PORTS, I2C_PORTS, MPU6050, VL53L0X_TEENSY Sections:

Copied all these verbatim from ‘WallE3_AnomalyRecovery_V2’ – these haven’t changed in literally years, so shouldn’t be an issue

LR_FRONT DISTANCE ARRAYS, #IFDEF DISTANCES_ONLY, IRDET_TEENSY, #IFDEF IR_HOMING_ONLY, IR_BEAM_STEERVAL_ARRAY, POST_CHECKS Sections:

Copied all these verbatim from ‘WallE3_AnomalyRecovery_V2’ – these haven’t changed in literally years, so shouldn’t be an issue. I did note, however, that the ‘POST_CHECKS’ section always runs, as the ‘NO_POST’ #define isn’t actually used anywhere. Will leave as it is for now. Thinking a bit more about this – it seems that what I originally thought would be a potentially long, onerous, and not very useful POST hasn’t turned out that way. It is long and onerous, but very necessary, as it initializes and connects to all the peripheral equipment. So I think I will simply remove the #define NO_POST line, and rename the section that ‘ripples’ the rear LEDs from ‘#pragma POST_CHECKS’ to ‘#pragma FLASH_REAR_LEDS’.
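For context, a #define like NO_POST only has an effect if some block of code is wrapped in a matching preprocessor test. The minimal sketch below shows what such a guard would look like; `FlashRearLedsDemo()` is a hypothetical stand-in that just reports whether the guarded section was compiled in, not the actual LED code.

```cpp
#define NO_POST // comment this line out to compile the guarded section back in

// Hypothetical stand-in for the LED 'ripple'; returns true if the section ran.
bool FlashRearLedsDemo() {
#ifndef NO_POST
    // ... ripple the rear LEDs here ...
    return true;
#else
    return false; // section was compiled out because NO_POST is defined
#endif
}
```

Since nothing in the program tests NO_POST this way, defining it changes nothing, which is why removing the line is harmless.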

This completes the ‘setup()’ function – on to ‘loop()’!

Obviously, the contents of the loop() function vary widely across all the ‘part-task’ programs, so this will probably require a lot of work to ‘harmonize’ all the part-task features into a complete program. I think I will try to go through the ‘part-task’ programs in alpha order to see if I can pick out the salient features that should be included.

WallE3_AnomalyRecovery_V2:

loop() has just three main sections – IR_HOMING, CHARGING, and WALL_TRACKING. The first two above are specific operations associated with homing and connecting to the charging station, and the last one is very simple – it just calls either TrackRightWallOffset() or TrackLeftWallOffset().

WallE3_ChargingStn_V2:

This one has the same three sections as WallE3_AnomalyRecovery_V2 and AFAICT, they are identical.

WallE3_FrontBackMotionTuning_V1:

In addition to the same three sections as WallE3_AnomalyRecovery_V2, this one has ‘PARAMETER CAPTURE’ and ‘FRONT_BACK_MOTION_TEST’ sections. The ‘PARAMETER CAPTURE’ section captures test parameter value input from the user, and the ‘FRONT_BACK_MOTION_TEST’ section actually performs the motion with the user-entered parameters. So, we need to make sure that the actual functions used for testing are copied over and the global front/back motion PID values as well. From the ‘Move to a Specified Distance’ post, I see that the final PID values are (1.5, 0.1, 0.2), and these values were incorporated into the ‘WallE3_WallTrackTuning_V5’ as follows:

```cpp
#pragma region FRONT_BACK OFFSET MOTION PID
//12/26/22 updated per https://www.fpaynter.com/2022/12/move-to-a-specified-distance-revisited/
const float OffsetDistKp = 1.5f; //neg value creates PID in REVERSE mode
const float OffsetDistKi = 0.1f;
const float OffsetDistKd = 0.2f;

float OffsetDistOutput;//10/06/17 chg input variable name to something more descriptive
double OffsetDistVal = 0; //has to be 'double'
#pragma endregion FRONT_BACK OFFSET MOTION PID
```

Uh-Oh, trouble ahead! When I look at the OffsetDistKx values copied into _Complete_V1 from WallE3_AnomalyRecovery_V2, I see:

```cpp
#pragma region FRONT_BACK OFFSET MOTION PID
const float OffsetDistKp = 2.f; //neg value creates PID in REVERSE mode
const float OffsetDistKi = 0.5f;
const float OffsetDistKd = 0.0f;
```

So, which set of values is correct? The WallE3_AnomalyRecovery_V2 project was created 9/26/22, while the Move to a Specified Distance, Revisited post is dated 24 December 2022, so it is much more recent. We’ll at least start with the later PID values of (1.5,0.1,0.2).

Copied the following functions from WallE3_FrontBackMotionTuning_V1 into WallE3_Complete_V1:

- bool MoveToDesiredFrontDistCm(uint16_t offsetCm)

- bool MoveToDesiredLeftDistCm(uint16_t offsetCm)

- bool MoveToDesiredRightDistCm(uint16_t offsetCm)

- bool MoveToDesiredRearDistCm(uint16_t offsetCm)

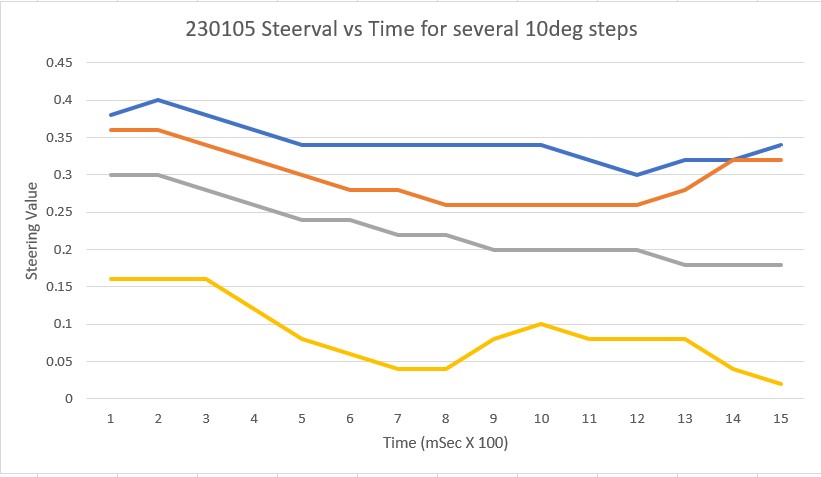

WallE3_ParallelFind_V1:

This program has the same structure in setup – with a PARAMETER CAPTURE section followed by the actual test code, all in setup(). However, when I tested this for functionality, I realized it a) only addressed the ‘left wall tracking’ case, and b) didn’t work even for that case.

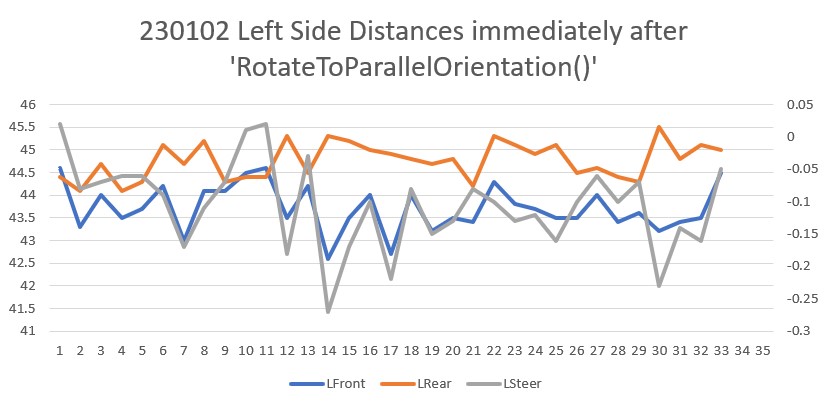

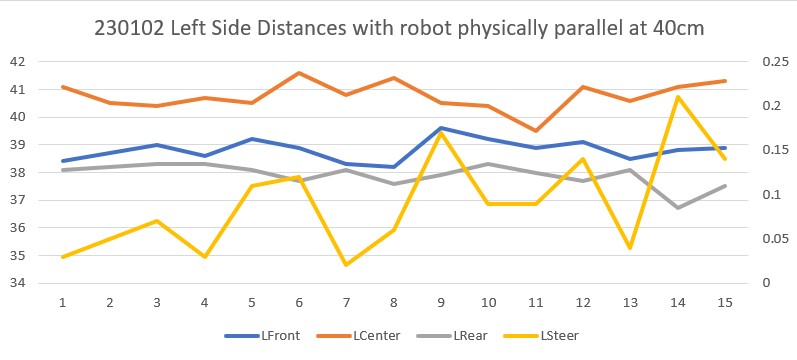

So, I spent some quality time with this ‘part-task’ program and got it working fairly well, for both cases. See this post for the details and testing results.

After getting everything working, I copied the RotateToParallelOrientation() function from ‘WallE3_ChargingStn_V2’ into ‘WallE3_Complete_V1’ (in the WALL_TRACK_SUPPORT section) and then replaced the actual code with the code from the latest ‘WallE3_ParallelFind_V1’ part-task program.

After making the copy, there were a number of compile errors due to needed utility functions not being present. From ‘WallE3_ChargingStn_V2’ I copied in the following functions:

- Entire HDG_BASED_TURN_SUPPORT section

- Entire DISTANCE_MEASUREMENT_SUPPORT section

- Entire MOTOR_SUPPORT section

- Entire IR_HOMING_SUPPORT section

- Entire VL53L0X_SUPPORT section

- UpdateAllEnvironmentParameters()

- Entire CHARGE_SUPPORT_FUNCTIONS section

- copied ‘float glLeftCentCorrCm;’ from WallE3_ParallelFind_V1

- IsStuckAhead(), IsStuckBehind(), IsIRBeamAvail(), GetWallOrientDeg(), CorrDistForOrient()

At this point the program still doesn’t compile, but I am going to stop and continue with looking at each part-task program in order, and then I’ll come back to the task of getting ‘Complete’ to compile

WallE3_RollingTurn_V1:

Based on the results described in ‘WallE3 Rolling Turn, Revisited’, I copied the ‘RollingTurn()’ function verbatim into ‘Complete’, and also added the ‘TURN_RATE_UPDATE_INTERVAL_MSEC’ constant to the ‘TIME INTERVALS’ section.

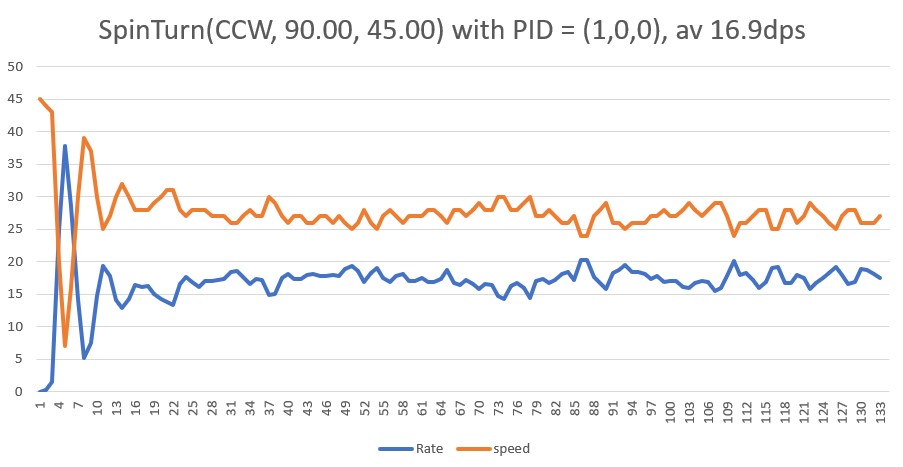

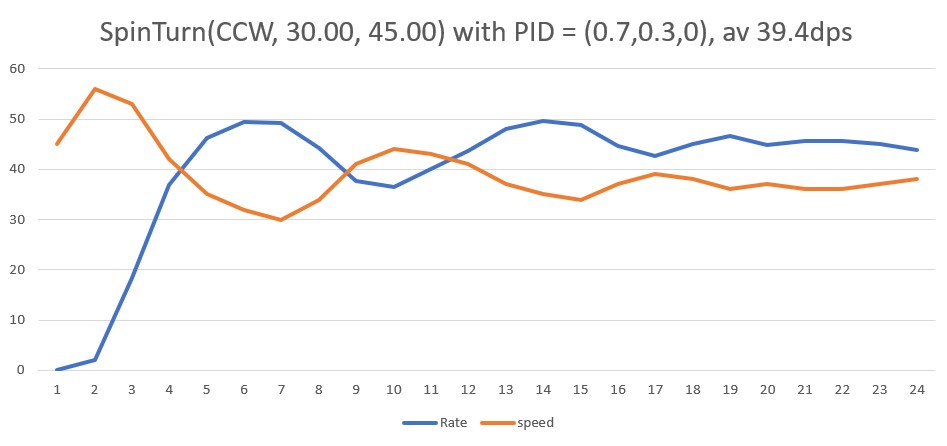

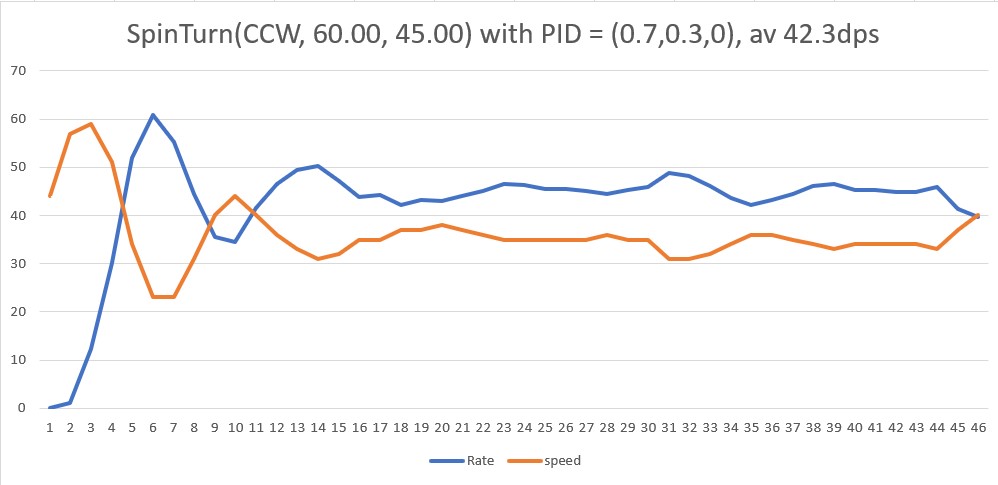

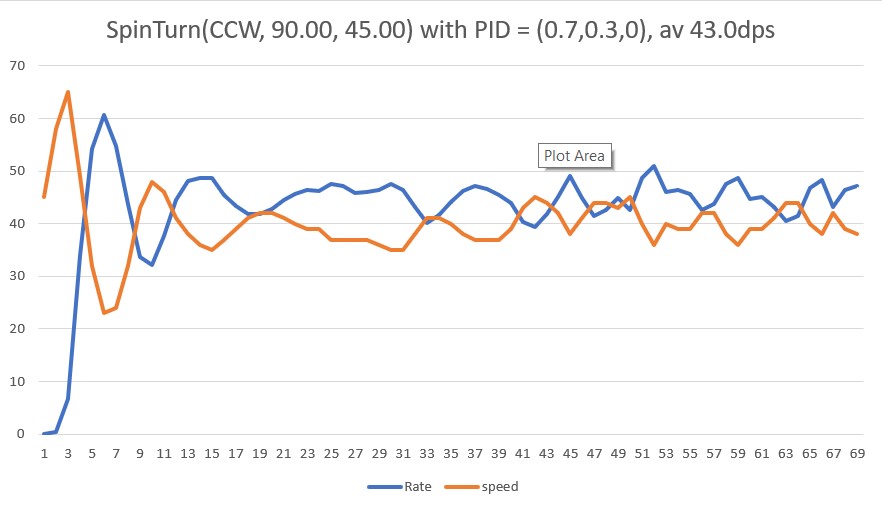

WallE3_SpinTurnTuning_V2:

Based on the ‘WallE3 Spin Turn, Revisited’ post, it looks like the original ‘SpinTurn()’ function is unaffected, but with a different set of PID values. The ‘final’ PID value set is (0.7,0.3,0). I did a file compare of the SpinTurn() functions between the SpinTurnTuning_V2 and ChargingStn_V2 programs, and found that they are functionally identical (ChargingStn_V2 uses TeePrint, and SpinTurnTuning_V2 uses gl_pSerPort). So, I copied the SpinTurnTuning_V2 versions of both the SpinTurn() functions (one with and one without Kp/Ki/Kd as input parameters) to ‘Complete_V1’, and copied the Kp, Ki, & Kd values from SpinTurnTuning to ‘Complete_V1’.
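The ‘one with and one without Kp/Ki/Kd’ arrangement is a common overload pattern: the short version simply forwards to the long one with the default gains. Here is a minimal sketch; the function name `SpinTurnDemo()`, its parameter list, the `TurnRate_Kx` constant names and the `g_lastGains` record are hypothetical, and the body is a stub that only records which gains it was given.

```cpp
// 'Final' spin-turn gains quoted in the post; the constant names are assumed.
const float TurnRate_Kp = 0.7f;
const float TurnRate_Ki = 0.3f;
const float TurnRate_Kd = 0.0f;

// Hypothetical record of the gains used by the most recent call (demo only).
struct GainsUsed { float kp, ki, kd; };
GainsUsed g_lastGains = { 0.0f, 0.0f, 0.0f };

// Tuning version: the caller supplies the PID gains explicitly.
bool SpinTurnDemo(bool bCCW, float numDeg, float degPerSec,
                  float kp, float ki, float kd) {
    (void)bCCW; (void)numDeg; (void)degPerSec; // the real turn logic would go here
    g_lastGains = { kp, ki, kd };
    return true;
}

// Normal version: forwards to the tuning version with the default gains.
bool SpinTurnDemo(bool bCCW, float numDeg, float degPerSec) {
    return SpinTurnDemo(bCCW, numDeg, degPerSec, TurnRate_Kp, TurnRate_Ki, TurnRate_Kd);
}
```

Keeping the turn logic in just one of the two functions means any future fix only has to be made once.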

WallE3_WallTrack_V1-V5:

From this post I see the following changes through the ‘WallE3_WallTrack_V1-V5’ series of programs:

WallE3_WallTrack_V2 vs WallE3_WallTrack_V1 (Created: 2/19/2022)

- V2 moved all inline tracking code into TrackLeft/RightWallOffset() functions (later ported back into V1 – don’t know why)

- V2 changed all ‘double’ declarations to ‘float’ due to change from Mega2560 to T3.5

WallE3_WallTrack_V3 vs WallE3_WallTrack_V2 (Created: 2/22/2022)

- V3 Chg left/right/rear dists from mm to cm

- V3 Concentrated all environmental updates into UpdateAllEnvironmentParameters();

- V3 No longer using GetOpMode()

WallE3_WallTrack_V4 vs WallE3_WallTrack_V3 (Created: 3/25/2022)

- V4 Added ‘RollingForwardTurn()’ function

WallE3_WallTrack_V5 vs WallE3_WallTrack_V4 (Created: 3/25/2022)

- No real changes between V5 & V4

I *think* that I copied most pieces in from WallE3_WallTrack_V2, so I’m going to go back through the entire program, looking for V2-V5 differences.

Loop():

I think I have everything above loop() accounted for. Now to try and make some sense of loop(). I need to be cognizant of ‘WallE3_ChargingStn_V2’, ‘WallE3_WallTrack_V5’ and ‘WallE3_AnomalyRecovery_V2’ versions of ‘loop()’. Going through all the programs, I see the following differences in loop().

- ChargingStn_V2 adds ‘UpdateAllEnvironmentParameters()’ compared to WallE3_WallTrack_V5.

- WallE3_AnomalyRecovery_V2 also adds ‘UpdateAllEnvironmentParameters()’ and in addition changes all ‘myTeePrint.’ to ‘gl_pSerPort->’ compared to ChargingStn_V2. So, I copied the WallE3_AnomalyRecovery_V2 versions of IR_HOMING, CHARGING, and WALL_TRACKING into Complete’s loop() function.

So, at this point I have all of the ‘pre-setup’, setup(), and loop() stuff in properly (I hope). This should compile, but probably won’t, so I’ll need to go through the PITA part of figuring out why, and fixing it – oh well.

I got IR_HOMING and CHARGING working (well, at least compiling), and now I’m working on WALL_TRACKING. For this section I need to decide which version of TrackRight/LeftWallOffset to use (or at least start with).

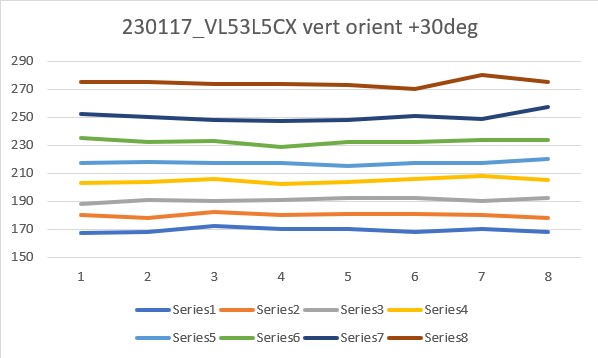

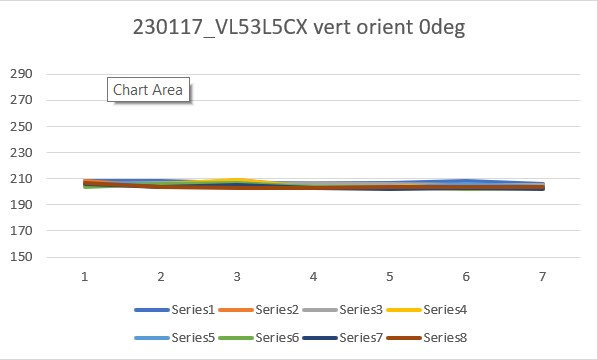

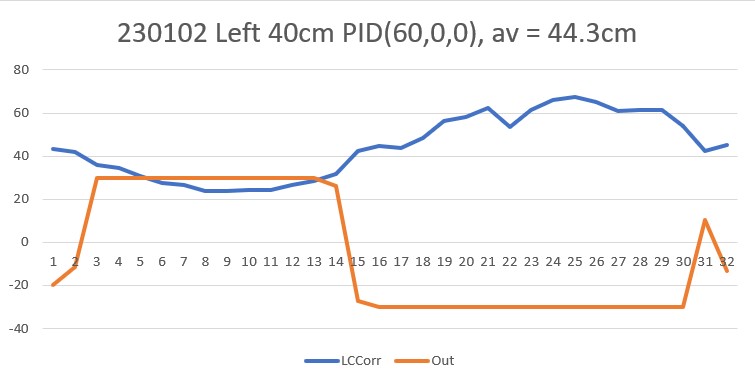

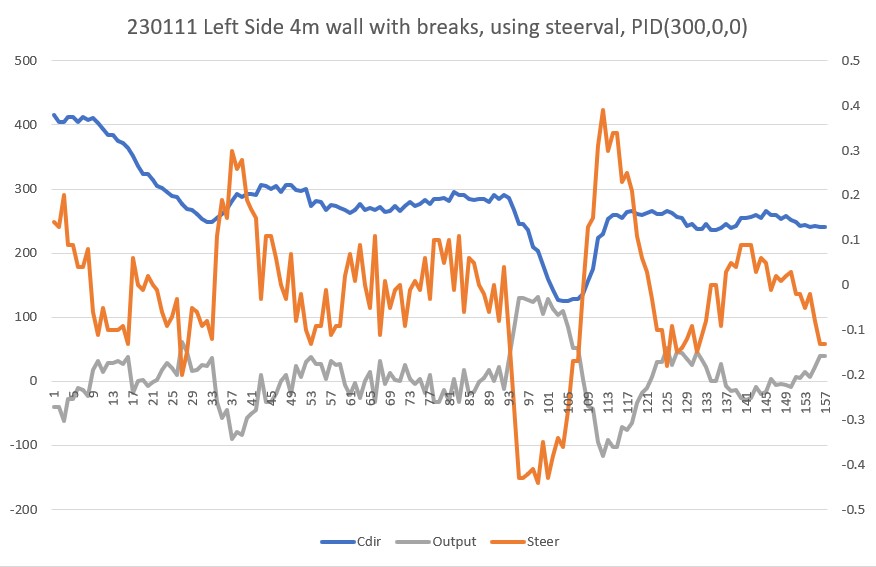

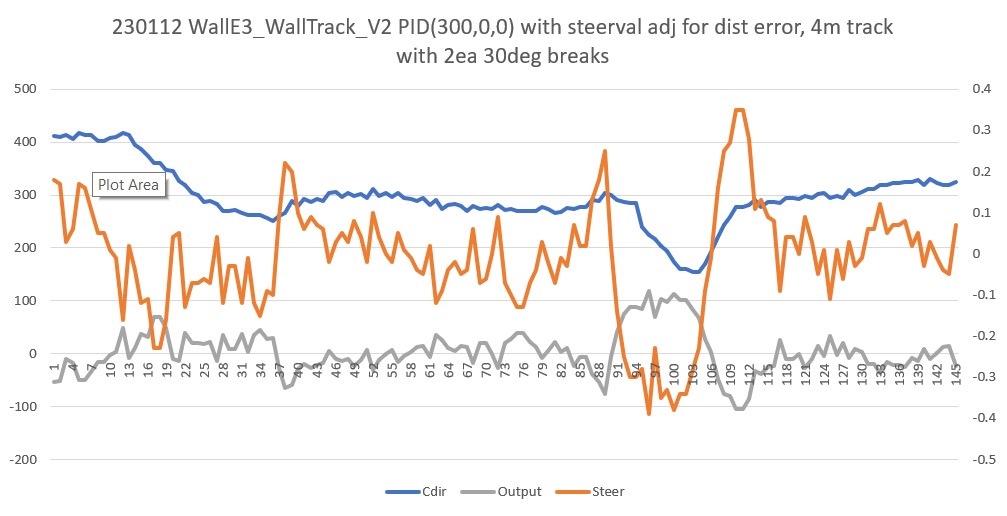

WallE3_WallTrackTuning_V5 ended up with a very successful setup with PID(350,0,0) and offset divisor of 50, but it only addressed left-side wall tracking, and didn’t use the actual ‘TrackLeftWallOffset()’ function. So first we need to move the test code into ‘TrackLeftWallOffset()’, and then port ‘TrackLeftWallOffset()’ into ‘TrackRightWallOffset()’.

Here’s the tracking code from WallE3_WallTrackTuning_V5:

```cpp
if (isRightWall)
{
    //TrackRightWallOffset(WallTrack_Kp, WallTrack_Ki, WallTrack_Kd, OffCm);
}
else
{
    float lastError = 0;
    float lastInput = 0;
    float lastIval = 0;
    float lastDerror = 0;

    AnomalyCode errcode = CheckForAnomalies();

    //WallTrackSetPoint = -0.35; //04/17/22 this value holds robot parallel to left wall
    WallTrackSetPoint = 0; //04/17/22 this value holds robot parallel to left wall

    //01/14/23 added to bypass offset capture section
    gl_pSerPort->printf("Press any key to start\n");
    gl_pSerPort->printf("Front\tCenter\tRear\tSteer\n");
    while (!gl_pSerPort->available())
    {
        gl_pSerPort->read(); //remove this character from stream
        GetRequestedVL53l0xValues(VL53L0X_LEFT);
        gl_pSerPort->printf("\n%2.2f\t%2.2f\t%2.2f\t%2.2f\n", glLeftFrontCm, glLeftCenterCm, glLeftRearCm, glLeftSteeringVal);
        delay(500);
    }

    gl_pSerPort->printf("\n%s: TrackLeftWallOffset: Start tracking offset of %2.1f cm with Kp/Ki/Kd = %2.2f\t%2.2f\t%2.2f\n", __FILENAME__, OffCm, WallTrack_Kp, WallTrack_Ki, WallTrack_Kd);

    gl_pSerPort->printf(LeftWallFollowTelemHdrStr);

    //Step4: Track the wall using PID algorithm
    mSecSinceLastWallTrackUpdate = 0; //added 09/04/21
    MsecSinceLastFrontDistUpdate = 0; //added 10/02/22 to slow front LIDAR update rate

    gl_pSerPort->printf("%s: In TrackLeftWallOffset: before while with errcode = %s\n", __FILENAME__, AnomalyStrArray[errcode]);

    //gl_pSerPort->printf("\nMsec\tLF\tLC\tLR\tLCCorr\tOffCm\tOffF\tSet\tSteer\tErr\tLastI\tLastD\tOut\tLspd\tRspd\n");
    gl_pSerPort->printf("\nMsec\tLF\tLC\tLR\tLCCorr\tSteer\tTweak\tOffCm\tErr\tLastI\tLastD\tOut\tLspd\tRspd\n");
    while (errcode == NO_ANOMALIES && !gl_bIRBeamAvail)
    {
        CheckForUserInput(); //this is a bit recursive, but should still work (I hope)

        if (mSecSinceLastWallTrackUpdate > WALL_TRACK_UPDATE_INTERVAL_MSEC)
        {
            mSecSinceLastWallTrackUpdate -= WALL_TRACK_UPDATE_INTERVAL_MSEC;

            //03/08/22 abstracted these calls to UpdateAllEnvironmentParameters()
            UpdateAllEnvironmentParameters();//03/08/22 added to consolidate sensor update calls

            errcode = CheckForAnomalies();

            //from Teensy_7VL53L0X_I2C_Slave_V3.ino: LeftSteeringVal = (LF_Dist_mm - LR_Dist_mm) / 100.f; //rev 06/21/20 see PPalace post

            glLeftCentCorrCm = CorrDistForOrient(glLeftCenterCm, glLeftSteeringVal);
            //float offset_factor = (glLeftCentCorrCm - OffCm) / 10.f;
            //float offset_factor = (glLeftCentCorrCm - OffCm) / 100.f; //01/28/23 from https://www.fpaynter.com/2023/01/walle3-wall-track-tuning-review/
            float offset_factor = (glLeftCentCorrCm - OffCm) / tweak_divisor; //01/28/23 from https://www.fpaynter.com/2023/01/walle3-wall-track-tuning-review/
            WallTrackSteerVal = glLeftSteeringVal + offset_factor;

            ////update motor speeds, skipping bad values
            ////if (!isnan(WallTrackSteerVal))
            //if (abs(WallTrackSteerVal) < maxSteerVal)
            //{
            //    WallTrackOutput = PIDCalcs(glLeftCentCorrCm, OffCm, lastError, lastInput, lastIval, lastDerror,
            //        WallTrack_Kp, WallTrack_Ki, WallTrack_Kd);

            if (!isnan(WallTrackSteerVal))
            //if (!isnan(glLeftCentCorrCm))
            {
                //gl_pSerPort->printf("just before PIDCalcs call: WallTrackSetPoint = %2.2f\n", WallTrackSetPoint);
                //WallTrackOutput = PIDCalcs(glLeftCentCorrCm, OffCm, lastError, lastInput, lastIval, lastDerror,
                //    WallTrack_Kp, WallTrack_Ki, WallTrack_Kd);
                WallTrackOutput = PIDCalcs(WallTrackSteerVal, 0, lastError, lastInput, lastIval, lastDerror,
                    WallTrack_Kp, WallTrack_Ki, WallTrack_Kd);

                //04/05/22 have to use local var here, as result could be negative
                int16_t leftSpdnum = MOTOR_SPEED_QTR + WallTrackOutput;
                int16_t rightSpdnum = MOTOR_SPEED_QTR - WallTrackOutput;

                //04/05/22 Left/rightSpdnum can be negative here - watch out!
                rightSpdnum = (rightSpdnum <= MOTOR_SPEED_HALF) ? rightSpdnum : MOTOR_SPEED_HALF; //result can still be neg
                glRightspeednum = (rightSpdnum > 0) ? rightSpdnum : 0; //result here must be positive
                leftSpdnum = (leftSpdnum <= MOTOR_SPEED_HALF) ? leftSpdnum : MOTOR_SPEED_HALF;//result can still be neg
                glLeftspeednum = (leftSpdnum > 0) ? leftSpdnum : 0; //result here must be positive

                MoveAhead(glLeftspeednum, glRightspeednum);

                //gl_pSerPort->printf(WallFollowTelemStr, millis(),
                //    glLeftFrontCm, glLeftCenterCm, glLeftRearCm,
                //    glLeftSteeringVal, orientdeg, orientcos, glLeftCentCorrCm,
                //    WallTrackSetPoint, lastError, WallTrackOutput,
                //    glLeftspeednum, glRightspeednum);
            }

            //gl_pSerPort->printf("\nMsec\tLF\tLC\tLR\tLCCorrtOffCm\tErr\tLastI\tLastD\tOut\tLspd\tRspd\n");
            //gl_pSerPort->printf("Msec\tLF\tLC\tLR\tLCCorr\tSteer\tTweak
            gl_pSerPort->printf("%lu\t%2.2f\t%2.2f\t%2.2f\t%2.2f\t%2.2f\t%2.2f",
                millis(),
                glLeftFrontCm, glLeftCenterCm, glLeftRearCm, glLeftCentCorrCm, glLeftSteeringVal, offset_factor);

            //\tOffCm\tOffF\tSet\tSteer\tErr
            gl_pSerPort->printf("\t%2.2f\t%2.2f",
                //OffCm, offset_factor, WallTrackSetPoint, WallTrackSteerVal, lastError);
                OffCm, lastError);

            //\tLastI\tLastD\tOut\Lspd\tRspd\n");
            gl_pSerPort->printf("\t%2.2f\t%2.2f\t%2.2f\t%d\t%d\n",
                lastIval, lastDerror, WallTrackOutput, glLeftspeednum, glRightspeednum);
        }
    }
}
```

Comparing the above to TrackLeftWallOffset() from WallE3_WallTrack_V5:

- WallE3_WallTrack_V5 has an extra ‘float spinRateDPS = 30;’ line for use in its now unneeded ‘OFFSET_CAPTURE’ section.

- WallE3_WallTrackTuning_V5 adds ‘MsecSinceLastFrontDistUpdate = 0;’ and I think this is needed for the ‘final’ version.

- WallE3_WallTrackTuning_V5 adds ‘&& !gl_bIRBeamAvail’ to the initial ‘while()’ statement

- WallE3_WallTrackTuning_V5 computes the ‘offset_factor’ and adds it to WallTrackSteerVal.

- The rest of the function is essentially the same, but WallE3_WallTrackTuning_V5 uses a custom inline telemetry output section rather than ‘PrintWallFollowTelemetry();’

- WallE3_WallTrackTuning_V5 doesn’t have the line ‘digitalToggle(DURATION_MEASUREMENT_PIN2);’ for debug purposes – I should probably keep this.

- WallE3_WallTrackTuning_V5 doesn’t have ‘HandleAnomalousConditions(errcode, TRACKING_LEFT);’ at the end. This should be kept as well.

- WallE3_WallTrack_V5 uses ‘myTeePrint.’ instead of ‘gl_pSerPort->’
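As a rough illustration of the ‘offset_factor’ and speed-limiting logic compared above, here is a minimal standalone sketch. The motor speed constants (75 and 127) and all of the function names are assumptions made up for this example, not values or names from the actual program; only the arithmetic mirrors the listing.

```cpp
#include <cstdint>

// Assumed values for illustration only; the real constants are not given in this post.
constexpr int16_t MOTOR_SPEED_QTR = 75;
constexpr int16_t MOTOR_SPEED_HALF = 127;

struct MotorSpeeds
{
    int16_t left;
    int16_t right;
};

// Orientation term plus a scaled distance-error term, as in the listing.
float SteerValSketch(float steeringVal, float centerCorrCm, float offsetCm, float tweakDivisor)
{
    float offset_factor = (centerCorrCm - offsetCm) / tweakDivisor;
    return steeringVal + offset_factor;
}

// Limit a speed to the range 0..MOTOR_SPEED_HALF.
int16_t ClampSpeedSketch(int32_t spd)
{
    if (spd > MOTOR_SPEED_HALF) return MOTOR_SPEED_HALF;
    if (spd < 0) return 0;
    return static_cast<int16_t>(spd);
}

// Apply the PID output differentially around the base speed.
MotorSpeeds SpeedsFromOutputSketch(float pidOutput)
{
    int32_t out = static_cast<int32_t>(pidOutput); // truncates toward zero
    return { ClampSpeedSketch(MOTOR_SPEED_QTR + out), ClampSpeedSketch(MOTOR_SPEED_QTR - out) };
}
```

With a tweak divisor of 50, a 5 cm offset error contributes 0.1 to the steering value, which is the same contribution as a 10 mm front/rear distance difference under the ‘/ 100.f’ scaling noted in the listing comment.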

So, I copied ‘TrackLeftWallOffset()’ to Complete_V1 (only the ‘parameterized’ version; the ‘parameterless’ version is no longer needed) and made the following changes:

- Removed ‘float spinRateDPS = 30;’

- Added ‘MsecSinceLastFrontDistUpdate = 0;’

- Removed the entire OFFSET_CAPTURE section

- Added ‘&& !gl_bIRBeamAvail’ to the initial ‘while()’ statement

- Edited the PIDCalcs line to match WallTrackTuning_V5, except with ‘kp,ki,kd’ symbols instead of ‘WallTrack_Kp, WallTrack_Ki, WallTrack_Kd’

- Replaced ‘PrintWallFollowTelemetry();’ with the custom telemetry printout

- Kept ‘digitalToggle(DURATION_MEASUREMENT_PIN2);’ for debug purposes

- Kept ‘HandleAnomalousConditions(errcode, TRACKING_LEFT);’ at the end, and copied in the function code from WallE3_WallTrack_V5.
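The PIDCalcs() function itself isn’t shown in this post, so the following is only a guess at what a function with that call pattern might look like, written as a standalone sketch. The name ‘PIDCalcsSketch’, the float reference parameters, and the ‘setpoint - input’ sign convention are all assumptions. That sign convention does at least agree with the listing: a positive steering value (robot angled away from, or too far from, the left wall) gives a negative output, which slows the left motor and turns the robot back toward the wall.

```cpp
// Hypothetical stand-in for PIDCalcs(); the real implementation may differ.
float PIDCalcsSketch(float input, float setpoint,
                     float& lastError, float& lastInput,
                     float& lastIval, float& lastDerror,
                     float kp, float ki, float kd)
{
    float error = setpoint - input;      // assumed sign convention
    float ival = lastIval + ki * error;  // accumulated integral term
    float derror = error - lastError;    // change in error since the last call

    float output = kp * error + ival + kd * derror;

    // Save state for the next call (and for telemetry printout)
    lastError = error;
    lastInput = input;
    lastIval = ival;
    lastDerror = derror;

    return output;
}
```

With PID(350,0,0) this reduces to a pure proportional controller, so a steering value of 0.2 against a setpoint of 0 would give an output of about -70.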

After finishing all this up and adding some required MISCELLANEOUS section functions, the ‘Complete_V1’ program compiles with only one error, as follows:

    WallE3_Complete_V1.ino: 983:114: error: 'TrackRightWallOffset' was not declared in this scope

This error is due to the fact that I haven’t yet ported the WallE3_WallTrackTuning_V5 code to the ‘right side wall’ case. I think I’ll comment this out for now, until I can confirm that the robot can actually track the left side wall.
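An alternative to commenting out the call would be a temporary placeholder function, so that the call site compiles unchanged until the right-side port is done. This is just a sketch: the parameter list is guessed from the commented-out call in the listing, and the bool return value is an assumption added here so the caller could tell that nothing was tracked.

```cpp
// Hypothetical placeholder; parameter list guessed from the commented-out call.
bool TrackRightWallOffset(float kp, float ki, float kd, float offsetCm)
{
    // Parameters are unused until the right-wall port exists
    (void)kp;
    (void)ki;
    (void)kd;
    (void)offsetCm;

    return false; // signals "right-wall tracking not available yet"
}
```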

04 February 2023 Update:

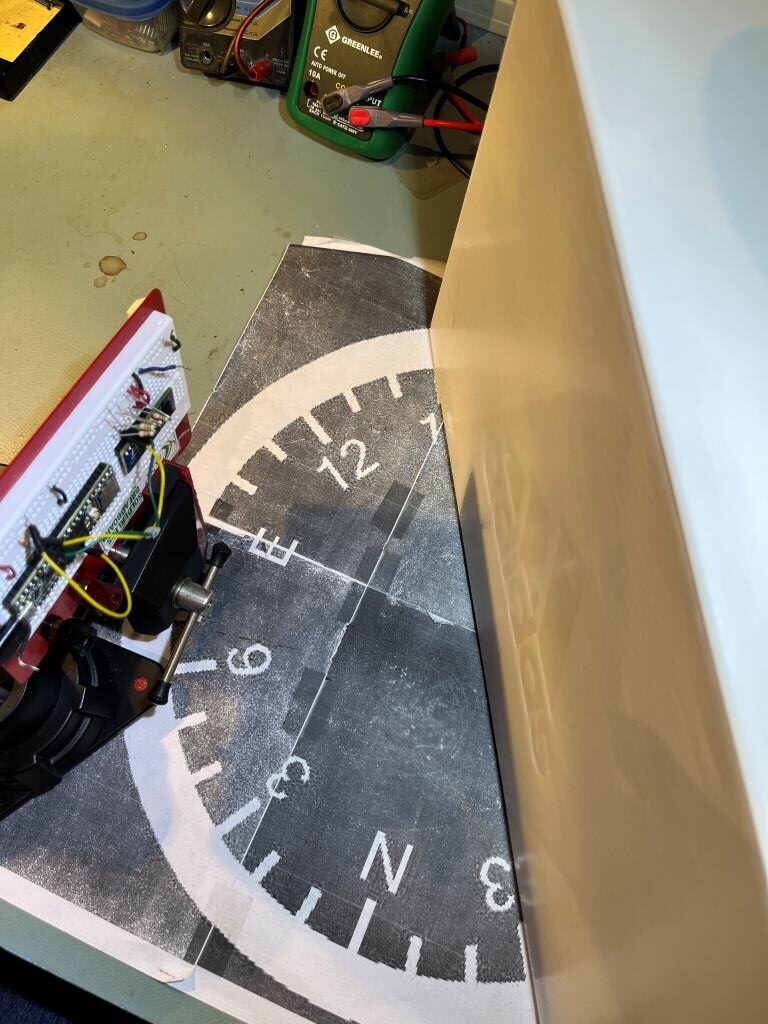

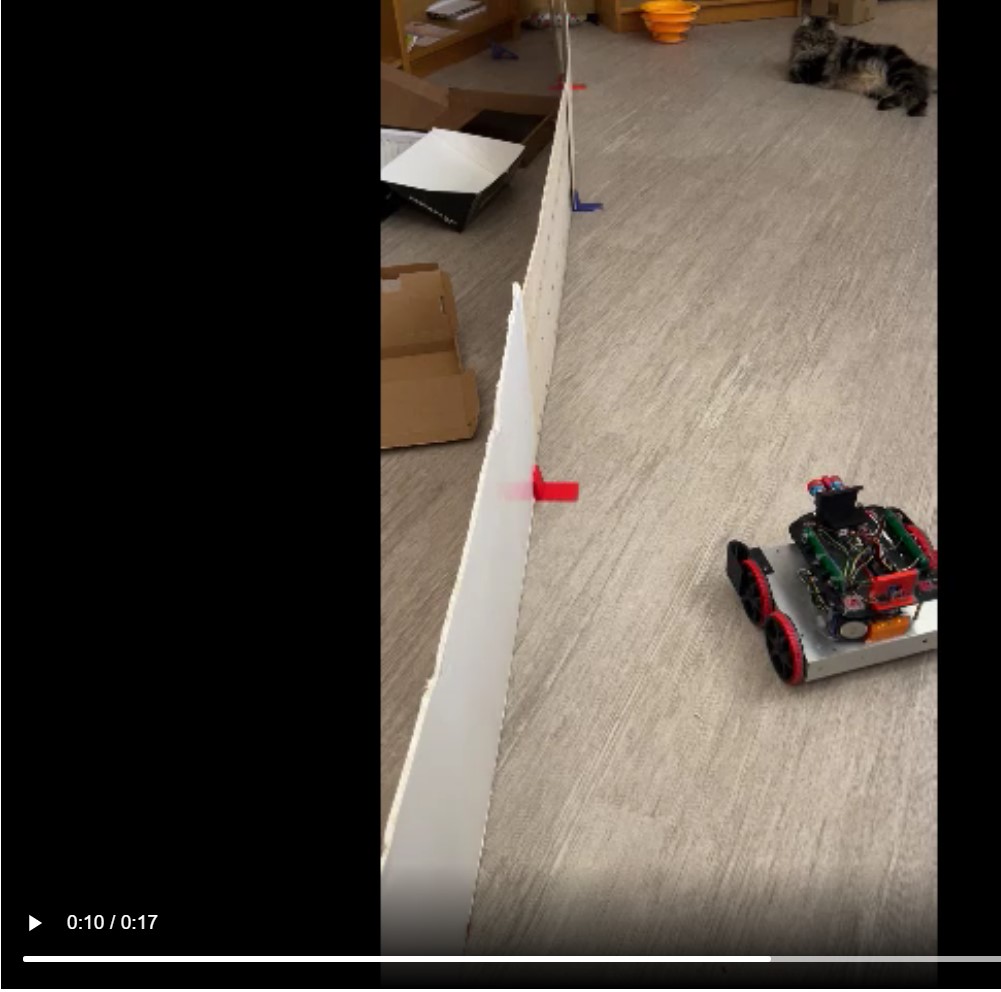

Well, what a miracle! I commented out the call to ‘TrackRightWallOffset’, compiled the program, ran the left-side tracking test on my ‘two-break’ wall configuration, and it actually worked – YAY!! Here’s a short video showing the run.

Stay tuned!

Frank