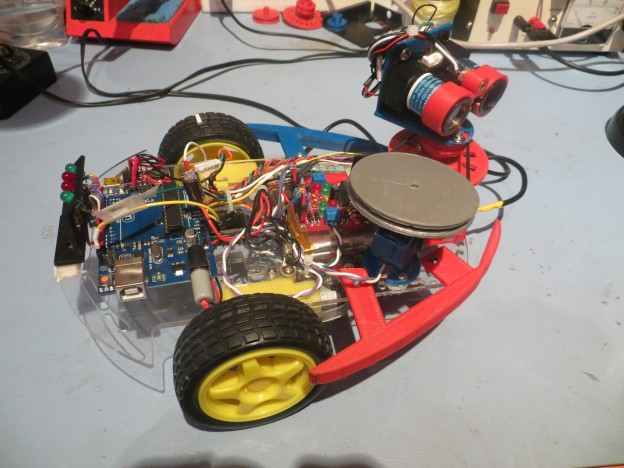

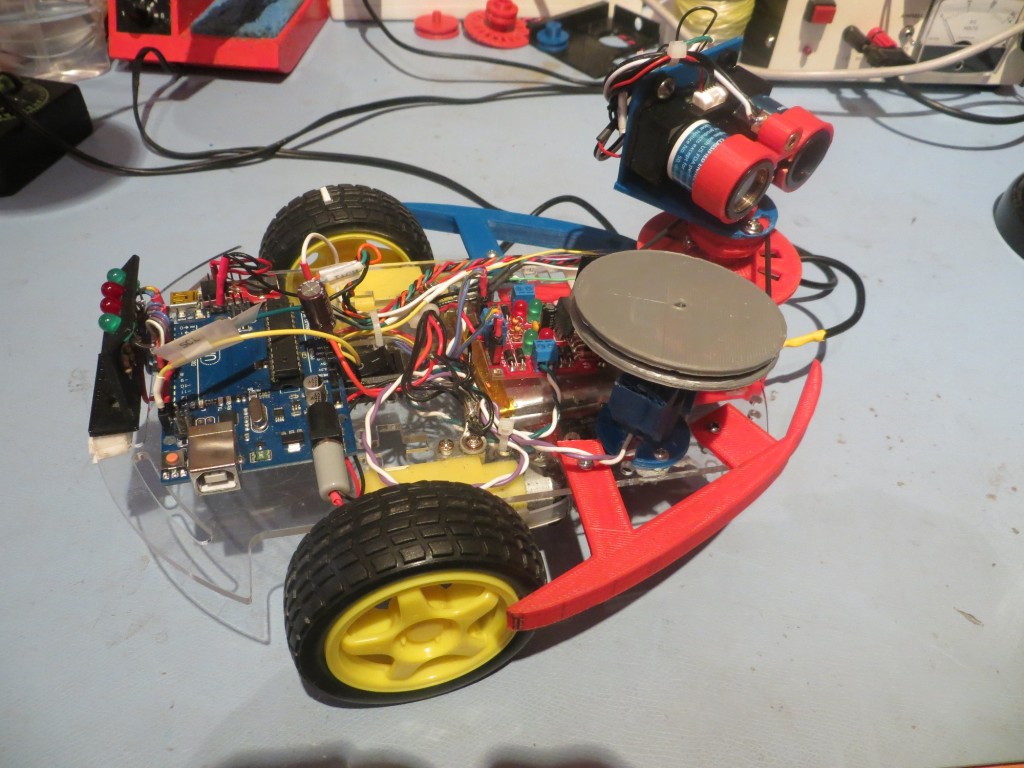

Back in May of this year I purchased a 4-wheel drive robot kit from DFRobot as a possible successor to my then-current Wall-E robot, a 3-wheeler (two drive motors and a small castering nose wheel). I didn’t have time to do more than assemble the basic kit (see this post), so it spent the intervening months gathering dust on my shelf. Coincidentally, my wife arranged with our son to kidnap her grandkids for a week (giving our son and his wife a much-needed break, and giving us some quality grandkid time). So I decided to dust off the 4WD robot kit as a fun project to do with them. In parallel, I am reworking Wall-E to remove its spinning LIDAR assembly and replace it with a hybrid LIDAR/sonar setup.

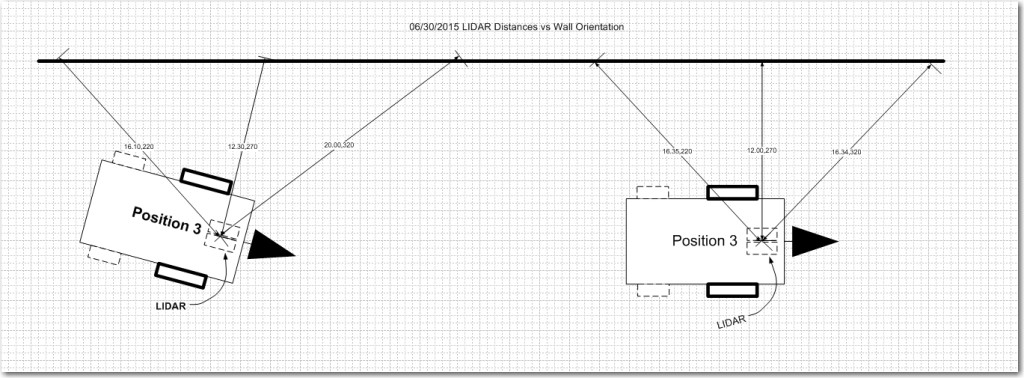

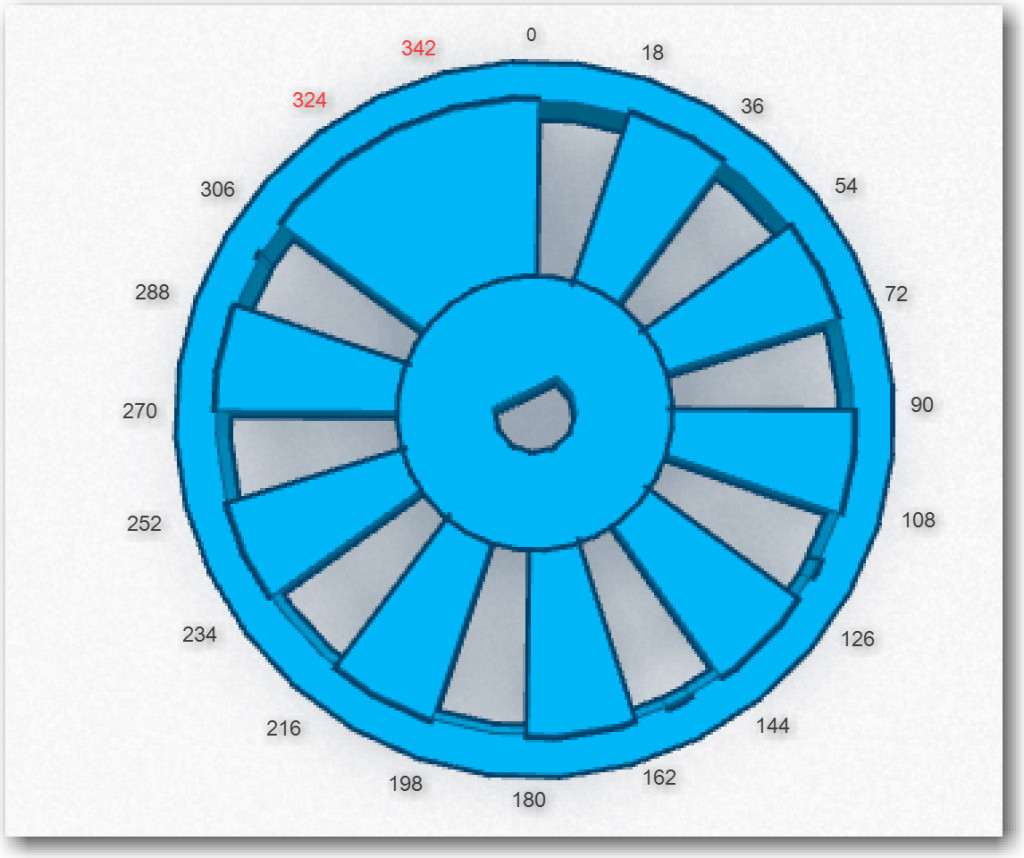

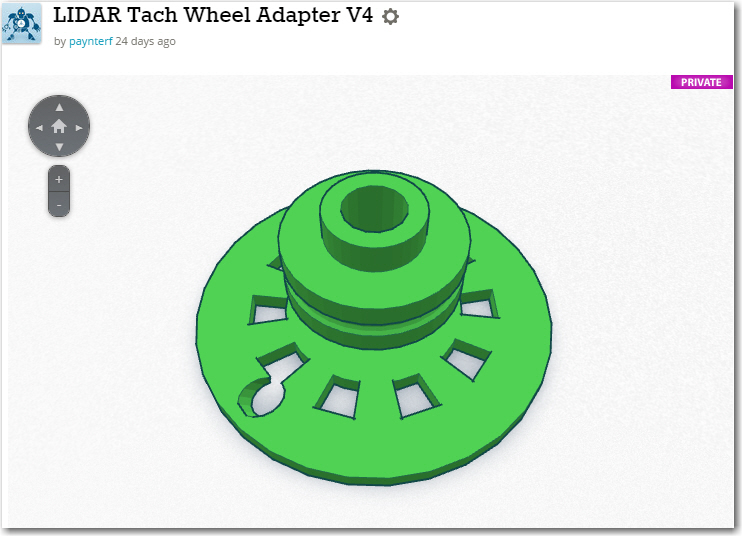

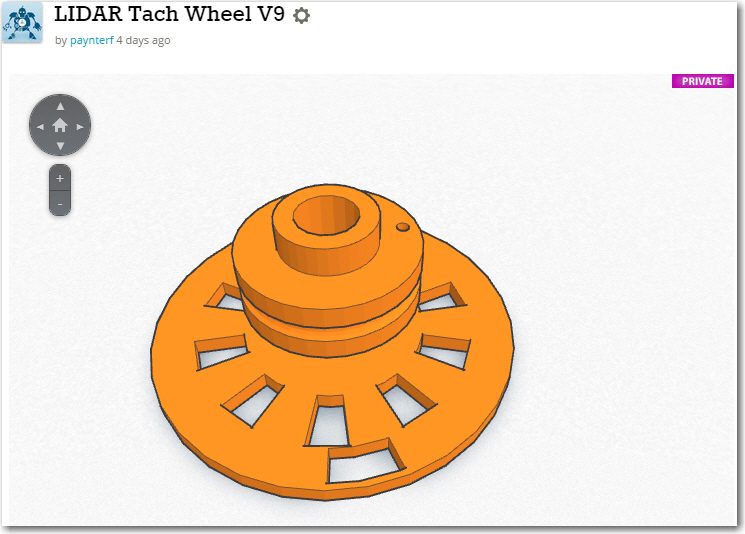

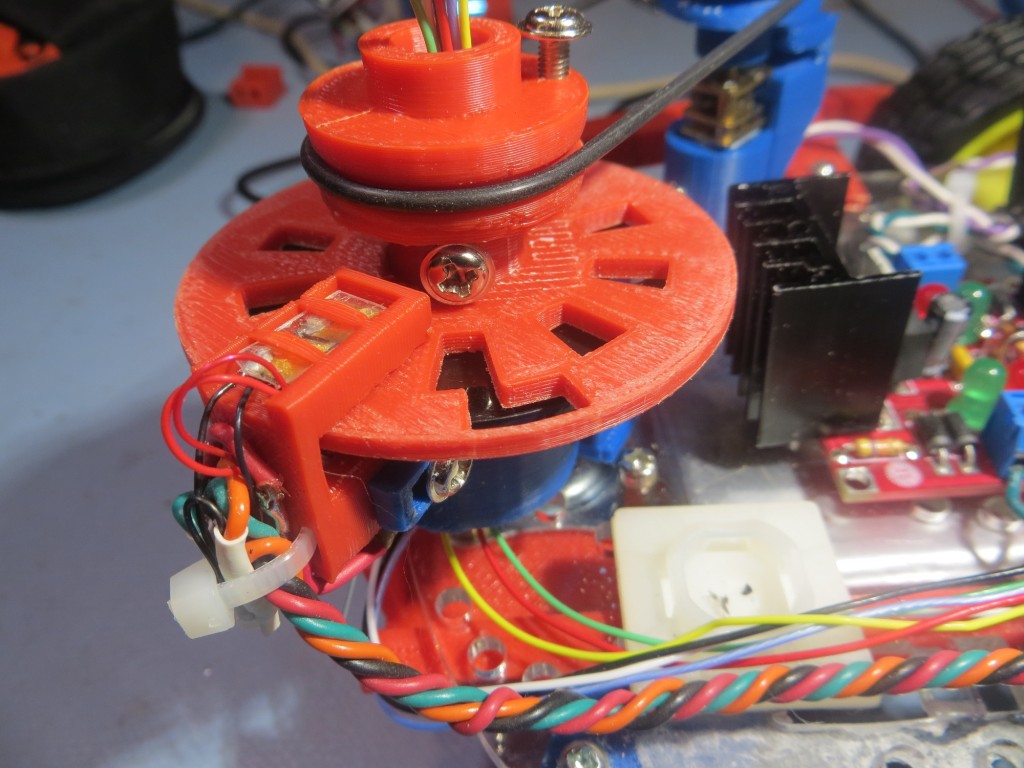

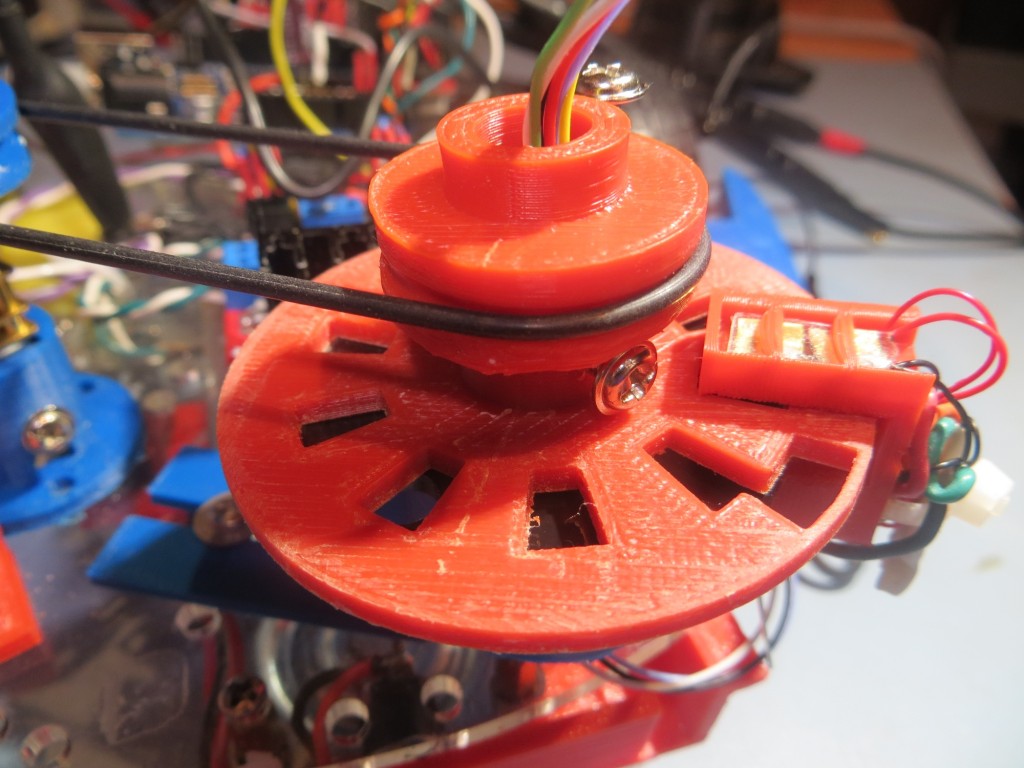

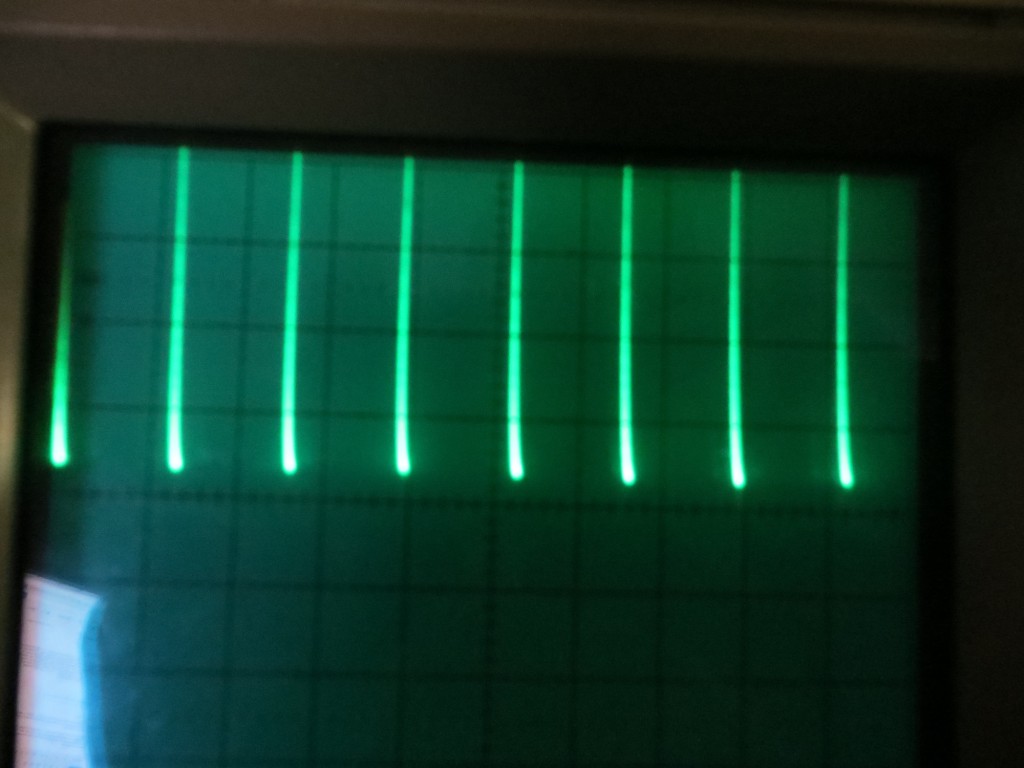

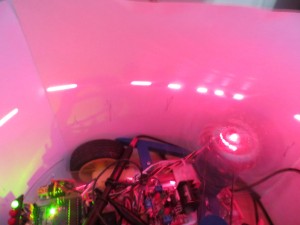

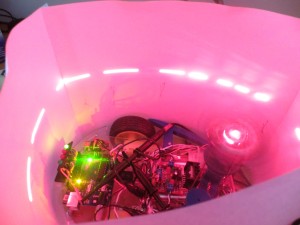

The plan with the 4WD robot is to incorporate another spinning-LIDAR setup – this one using the XV-11 spinning LIDAR system from the NEATO vacuum cleaner. This system rotates at approximately 300 RPM (5 RPS), so there is a decent chance it will be fast enough for effective wall navigation (the Wall-E LIDAR setup couldn’t manage more than about 200 RPM, and that just wasn’t good enough).
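To put the two rotation rates in perspective, here is a back-of-the-envelope calculation. It shows how long one full scan takes and how far the robot moves while that scan is being collected. The 300 mm/s robot speed is a hypothetical figure chosen only for illustration.

```python
# Hypothetical robot speed, for illustration only (not a measured value).
ROBOT_SPEED_MM_S = 300.0

def scan_period_s(rpm: float) -> float:
    """Seconds for one full 360-degree LIDAR revolution."""
    return 60.0 / rpm

def travel_per_scan_mm(rpm: float, speed_mm_s: float = ROBOT_SPEED_MM_S) -> float:
    """How far the robot moves while the LIDAR completes one revolution."""
    return speed_mm_s * scan_period_s(rpm)

for rpm in (200.0, 300.0):   # Wall-E's best vs. the XV-11's nominal speed
    print(f"{rpm:.0f} RPM: {scan_period_s(rpm) * 1000:.0f} ms per scan, "
          f"{travel_per_scan_mm(rpm):.0f} mm of travel per scan")
```

At that assumed speed, the robot covers 90 mm per revolution at 200 RPM versus 60 mm at 300 RPM. The faster XV-11 gives the navigation code a fresher picture of the walls.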

However, before we can determine whether the XV-11 LIDAR system will work, there is a LOT of work to be done. As I see it right now, there are four major subsystems to implement:

- Battery Supply and Charger

- Motor Controller integration

- XV-11 LIDAR controller

- Navigation controller

Battery Supply and Charger

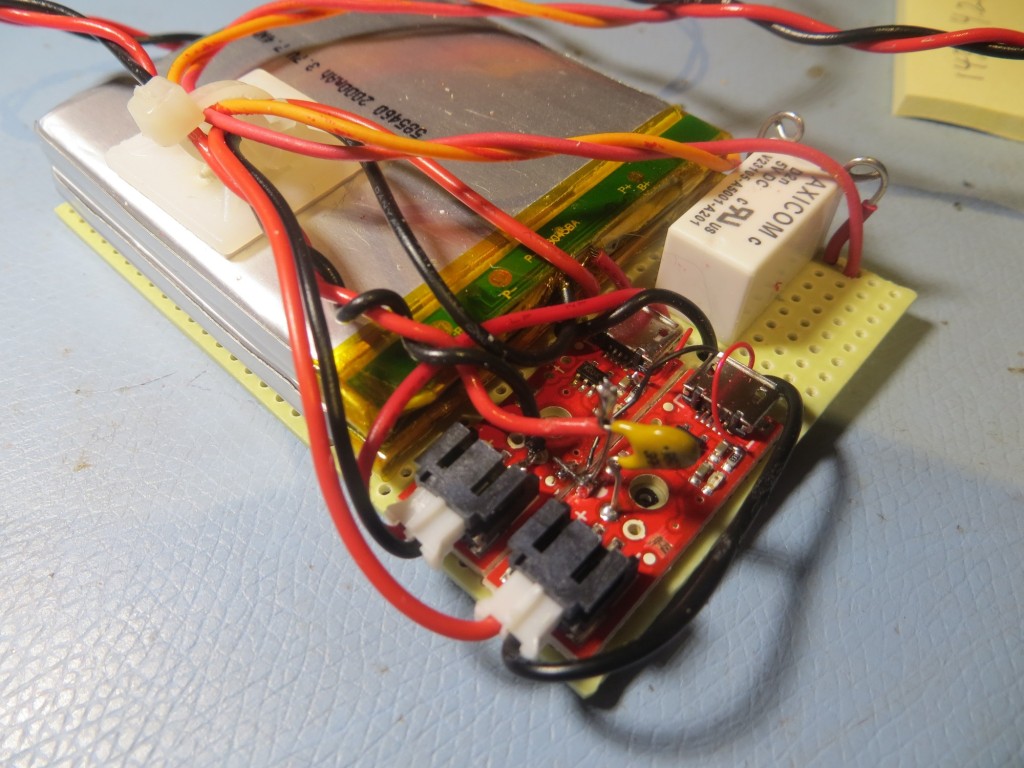

In preparation for the project, I purchased two 2000 mAh Li-Ion batteries and a supply of ‘basic Li-Ion charger’ modules from SparkFun. In my previous work with Wall-E, I had devised a pretty decent charge/run switching scheme. It uses a small double-pole, double-throw (DPDT) relay to switch the battery pack from a series connection for running the robot to an independent-parallel connection for charging, so I planned to use the same setup here. After the usual number of screwups, I wound up with a modular battery pack that tucks away in the motor compartment of the 4WD robot.
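A little arithmetic shows why the series/charge switch is worth the trouble. The sketch below models the two relay positions. The 3.7 V nominal and 4.2 V full-charge voltages are typical Li-Ion figures, not values measured on these particular cells. It also assumes that each cell is charged by its own single-cell charger module in the charge position.

```python
from dataclasses import dataclass

# Typical Li-Ion figures (assumptions; check the datasheet for the actual cells).
CELL_NOMINAL_V = 3.7   # average voltage over a discharge
CELL_FULL_V = 4.2      # charge termination voltage
CELL_CAPACITY_MAH = 2000

@dataclass
class PackState:
    mode: str           # "run" (cells in series) or "charge" (cells isolated)
    voltage_v: float    # voltage seen by the load (run) or by each charger (charge)
    capacity_mah: int   # capacity available at that voltage

    @property
    def energy_wh(self) -> float:
        # Whole-pack energy in run mode; per-cell energy in charge mode.
        return self.voltage_v * self.capacity_mah / 1000.0

def pack_state(charging: bool, cells: int = 2) -> PackState:
    """Model the two positions of the charge/run relay."""
    if charging:
        # Each cell is disconnected from its neighbour and sees only its own
        # charger, so a basic single-cell Li-Ion charger is sufficient.
        return PackState("charge", CELL_FULL_V, CELL_CAPACITY_MAH)
    # Series connection: voltages add, capacity stays that of a single cell.
    return PackState("run", CELL_NOMINAL_V * cells, CELL_CAPACITY_MAH)

run = pack_state(charging=False)
print(f"run: {run.voltage_v:.1f} V, {run.capacity_mah} mAh, {run.energy_wh:.1f} Wh")
```

In run mode the motors see roughly 7.4 V. In charge mode, each charger only ever deals with a single cell.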

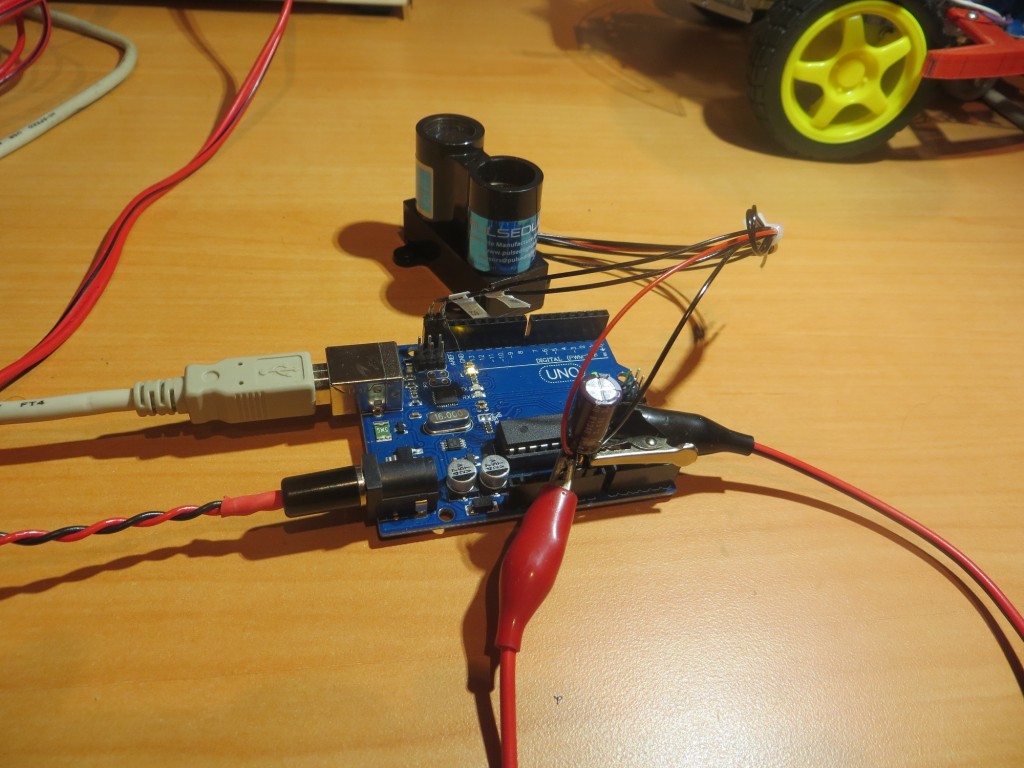

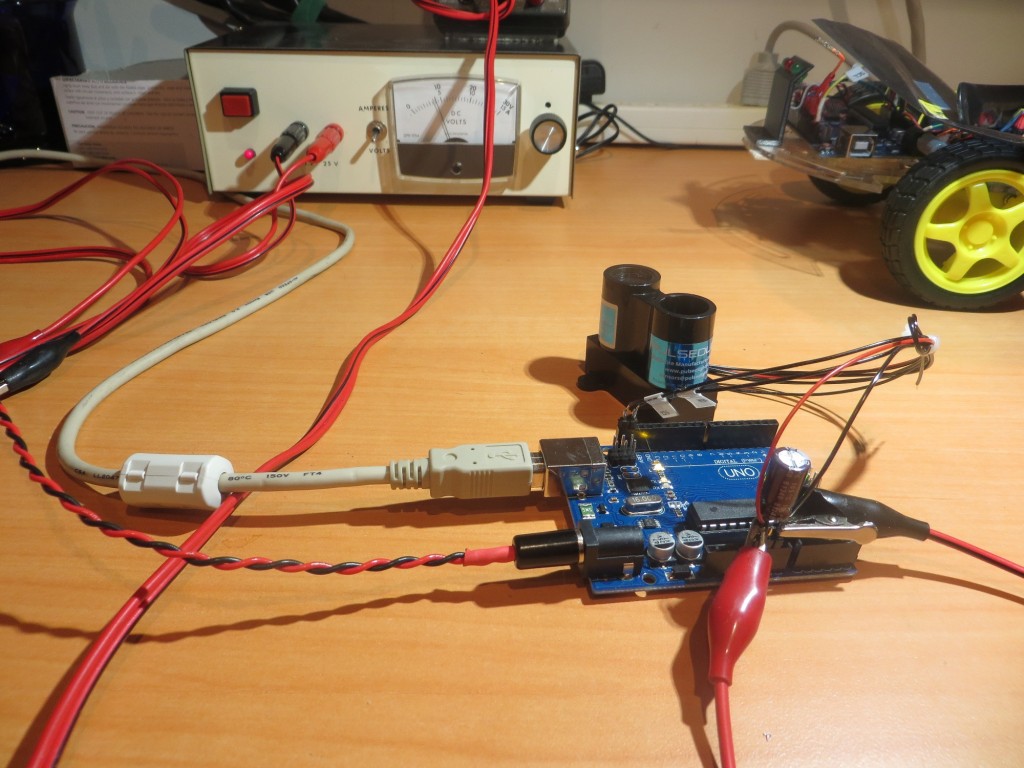

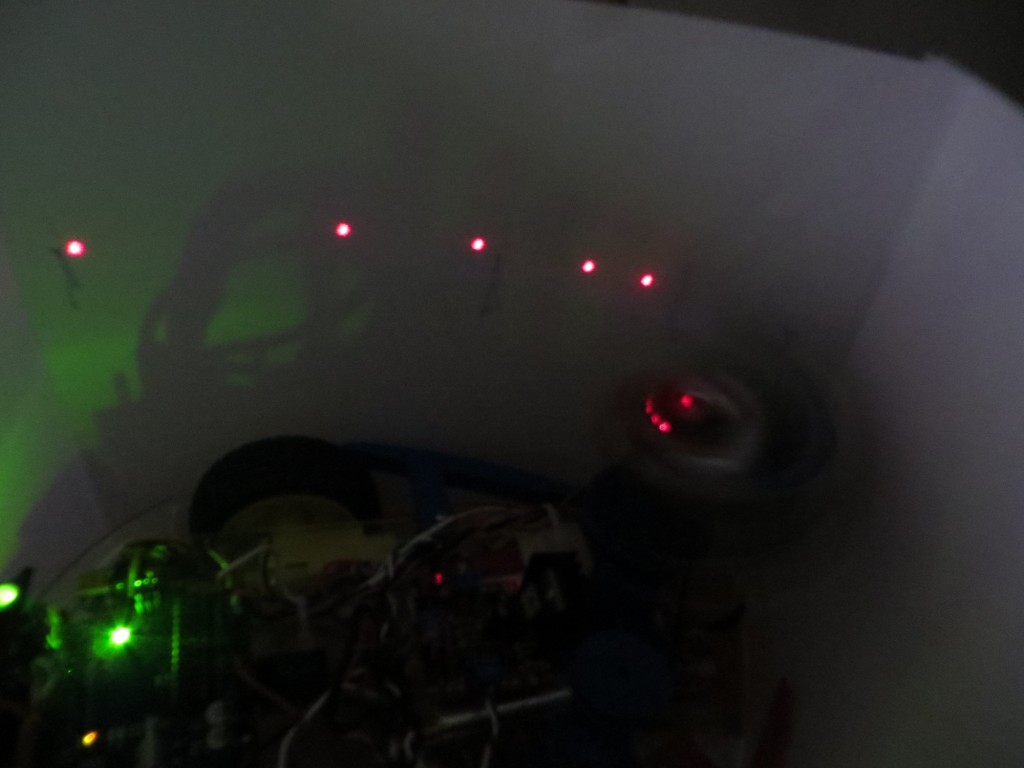

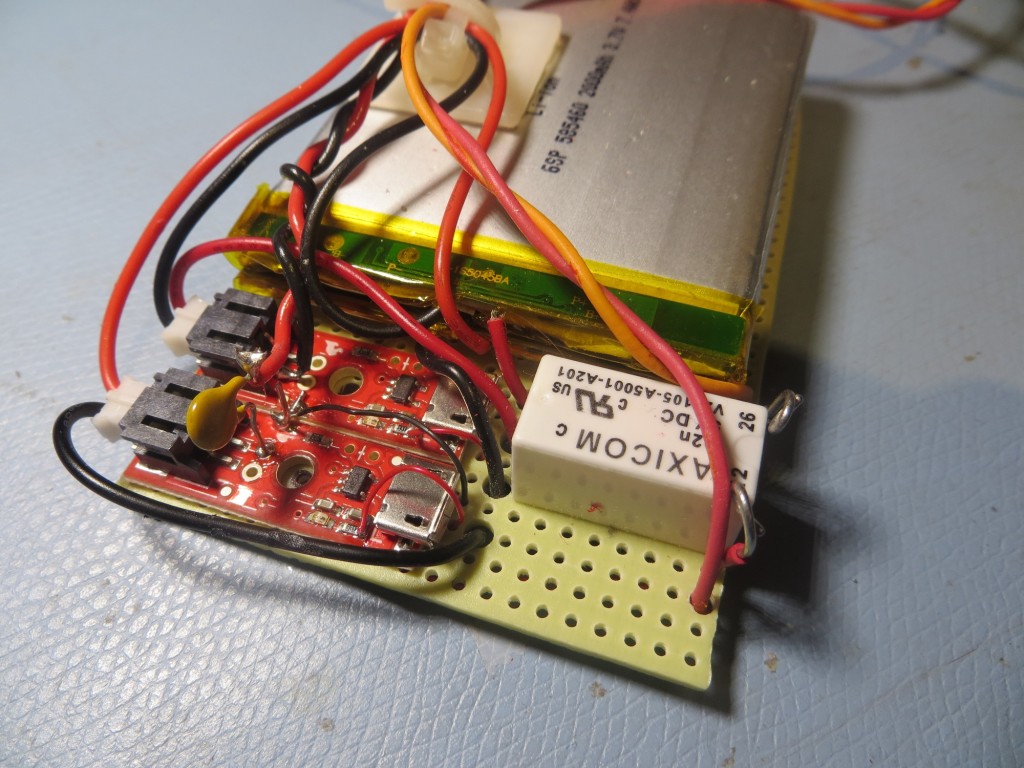

Battery pack showing charging modules and switching relay. The tan capacitor-looking component is actually a re-settable fuse


In the above photos, the tan component that looks very much like a non-polarized capacitor is actually a resettable fuse! I really did not want to tuck this battery pack away in a relatively inaccessible location without some way of preventing a short circuit from causing a fire or worse. After some quality time with Google, I found the Wikipedia entry for the polymeric positive temperature coefficient device (PPTC, commonly known as a resettable fuse, polyfuse, or polyswitch). These devices switch from a low-resistance to a high-resistance state when they get hot enough, i.e. when the current through them stays above a threshold level for long enough. They aren’t fast (switching times are on the order of seconds for a 5X current overload), but speed isn’t really a factor for this application. I don’t really care if I lose a controller board or two, as long as I don’t burn the house down. Even better, these devices reset some time after the overload condition disappears. So, assuming the batteries themselves weren’t toasted by the overload, recovery might be as simple as ‘don’t do that!’.
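The trip-and-reset behavior described above can be illustrated with a deliberately crude thermal model. In this model, ‘heat’ builds up in proportion to how far the squared current exceeds the hold current. The device trips when the heat crosses a threshold, and it resets only after it has cooled with the fault removed. The class name, the hold current, and the constants are all hypothetical. They were chosen so that a 5X overload trips in about two seconds, and they do not come from any PPTC datasheet.

```python
class ToyPolyfuse:
    """Very rough thermal model of a PPTC resettable fuse (illustrative only)."""

    def __init__(self, hold_a: float, trip_heat: float = 50.0, cool_rate: float = 1.0):
        self.hold_a = hold_a          # current the device carries indefinitely
        self.trip_heat = trip_heat    # accumulated heat at which it trips
        self.cool_rate = cool_rate    # heat units shed per second when not overloaded
        self.heat = 0.0
        self.tripped = False

    def step(self, demand_a: float, dt: float) -> float:
        """Advance dt seconds; return the current actually allowed to flow."""
        if self.tripped:
            # High-resistance state: treat the current as ~0. A real part stays hot
            # while the fault persists, so we only cool once the demand drops.
            if demand_a <= self.hold_a:
                self.heat = max(0.0, self.heat - self.cool_rate * dt)
                if self.heat == 0.0:
                    self.tripped = False   # cooled down: back to low resistance
            return 0.0
        overload = (demand_a / self.hold_a) ** 2 - 1.0   # I^2 heating above hold
        if overload > 0:
            self.heat += overload * dt
        else:
            self.heat = max(0.0, self.heat - self.cool_rate * dt)
        if self.heat >= self.trip_heat:
            self.tripped = True
            return 0.0
        return demand_a

fuse = ToyPolyfuse(hold_a=2.0)
t = 0.0
while not fuse.tripped:
    fuse.step(10.0, 0.1)   # dead short drawing 5x the hold current
    t += 0.1
print(f"tripped after ~{t:.1f} s")
```

Note the asymmetry that matters for the battery pack. Normal currents pass untouched, while a sustained short is cut off within seconds and stays cut off until the fault goes away.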

Motor Controller Integration

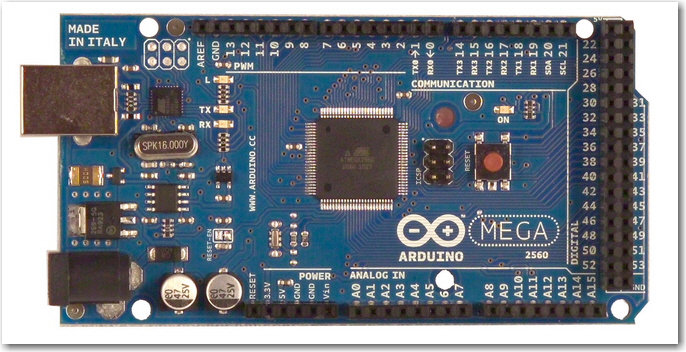

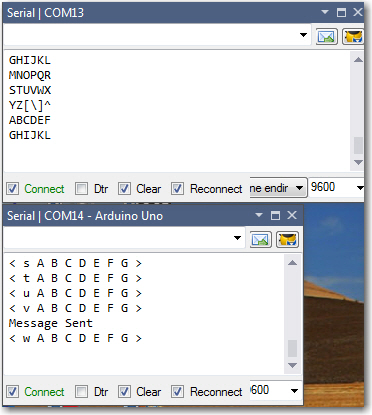

When I got the 4WD robot kit, I also purchased the DFRobot ‘Romeo’ controller module. This module combines an Arduino Uno-like microcontroller with dual H-bridge motor controllers that have outputs for all 4 drive motors. Unfortunately, the Romeo controller has only one hardware serial port, and I need two for this project: one for the PC connection, and one to receive data from the XV-11 LIDAR. So, I plan to use two Solarbotics dual-motor controllers, with an Arduino Mega as the controller.
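With two dual-channel controllers, one left/right speed command has to drive four motor channels. Below is a minimal sketch of the usual ‘arcade’ mixing plus a channel mapping. Several details are hypothetical and do not come from the Solarbotics documentation: the function and channel names, the front/rear split between the two boards, and the 0-255 PWM range.

```python
from typing import Dict, Tuple

def clamp(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))

def mix(throttle: float, turn: float) -> Tuple[float, float]:
    """'Arcade' mixing: throttle and turn in [-1, 1] -> (left, right) side commands."""
    left = clamp(throttle) + clamp(turn)
    right = clamp(throttle) - clamp(turn)
    # Scale both sides together so neither exceeds 1 and the turn ratio is kept.
    biggest = max(1.0, abs(left), abs(right))
    return left / biggest, right / biggest

def channel(cmd: float, pwm_max: int = 255) -> Tuple[int, bool]:
    """Convert a side command in [-1, 1] to (pwm duty, forward?) for one H-bridge channel."""
    cmd = clamp(cmd)
    return round(abs(cmd) * pwm_max), cmd >= 0

def motor_outputs(left: float, right: float) -> Dict[str, Tuple[int, bool]]:
    """Hypothetical wiring: board A drives the front pair, board B the rear pair.
    If the right-side motors are mounted mirror-image, their direction flag would
    need to be inverted here."""
    return {
        "A1_front_left": channel(left),
        "A2_front_right": channel(right),
        "B1_rear_left": channel(left),
        "B2_rear_right": channel(right),
    }

print(motor_outputs(*mix(0.5, 0.25)))
```

Both motors on a side always get the same command, which is what a skid-steer 4WD chassis needs. Splitting the boards front/rear rather than left/right is an arbitrary choice.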

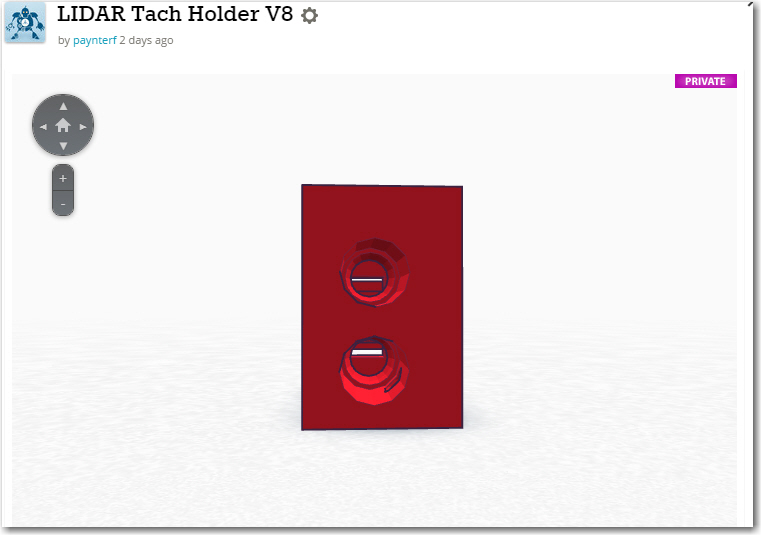

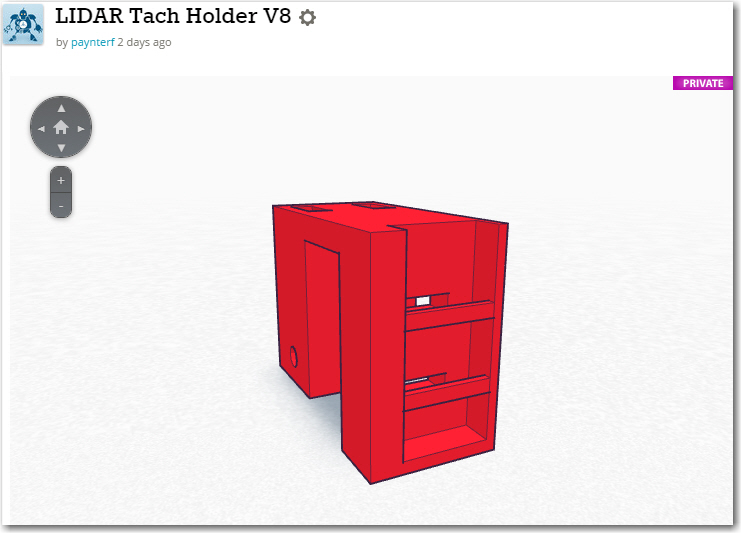

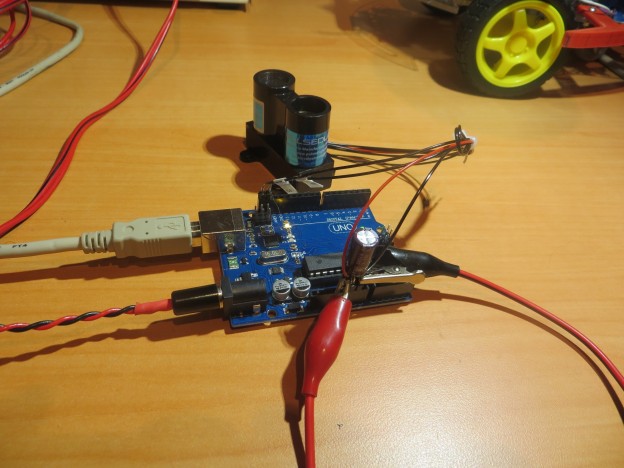

XV-11 LIDAR Controller

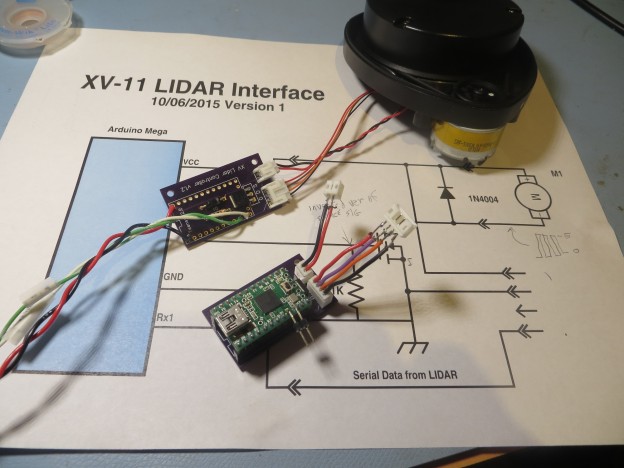

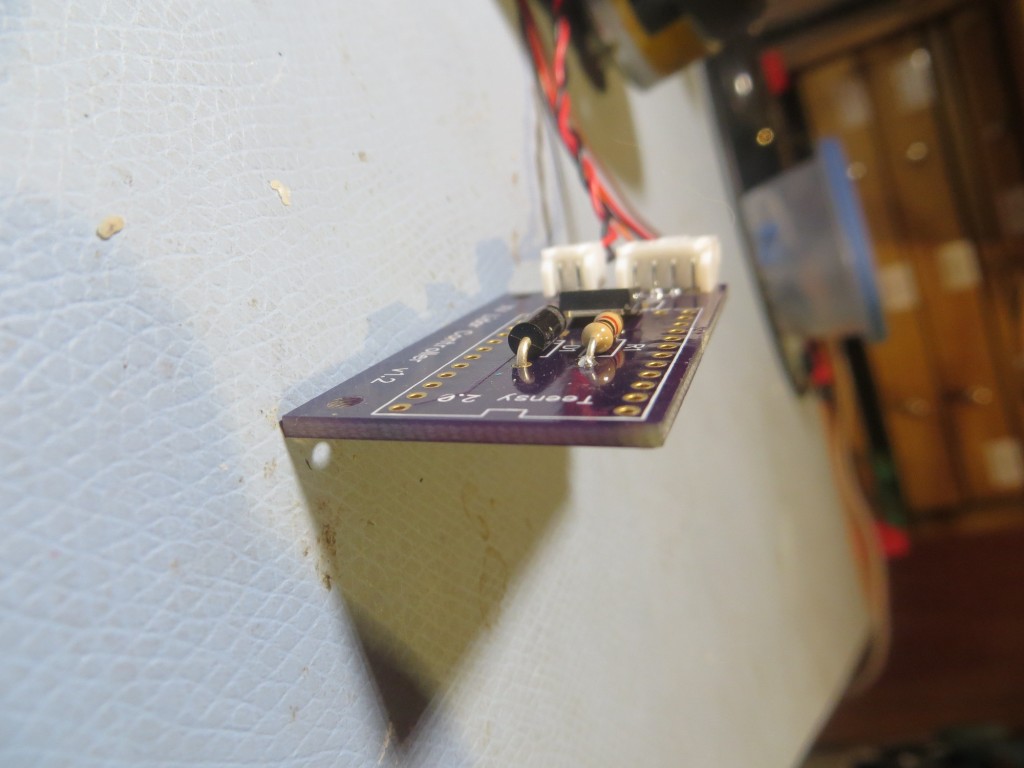

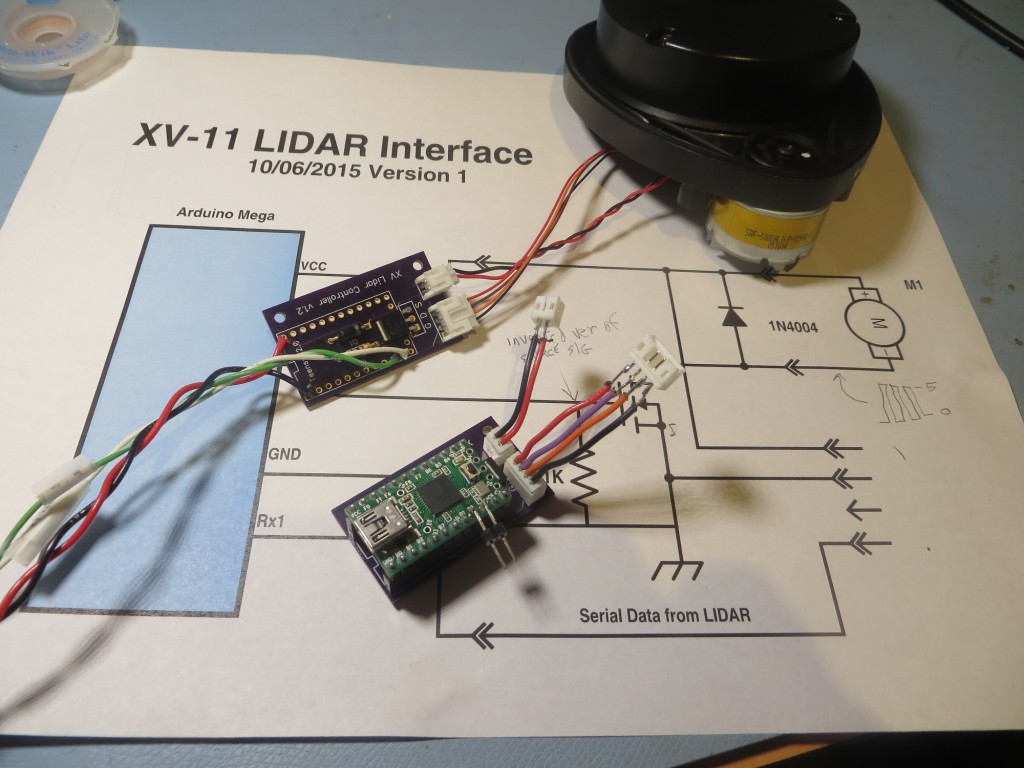

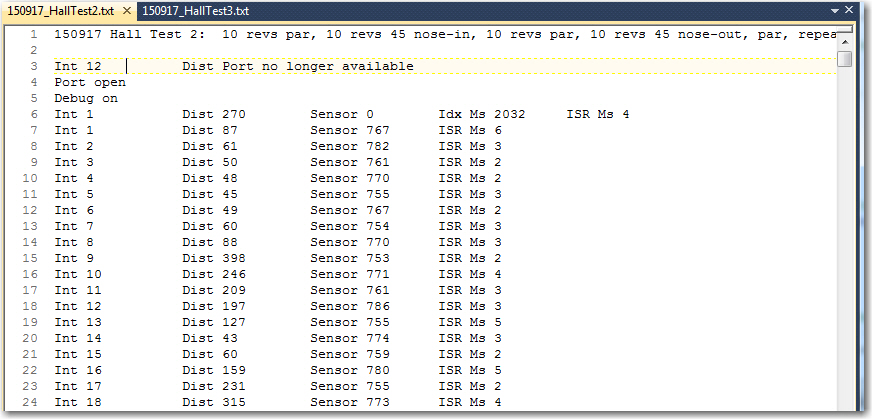

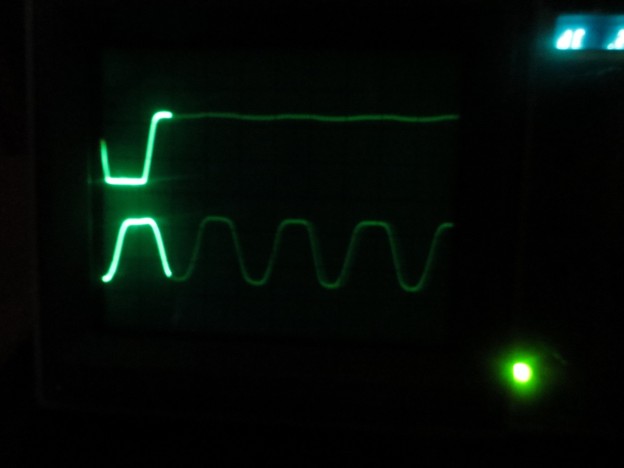

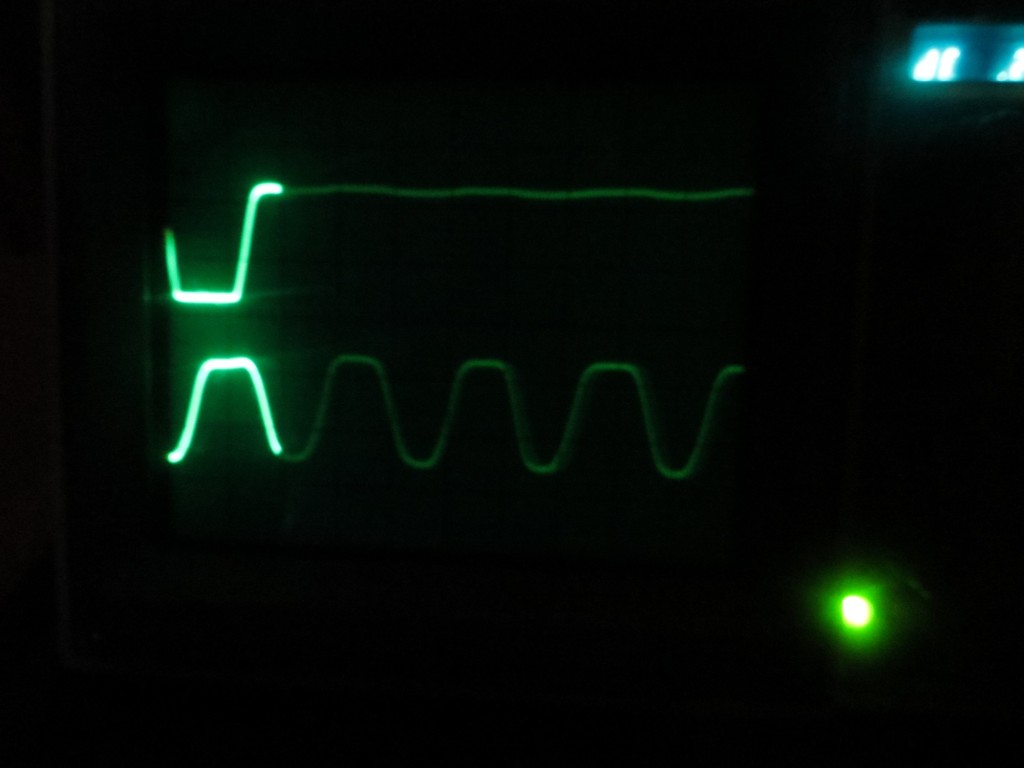

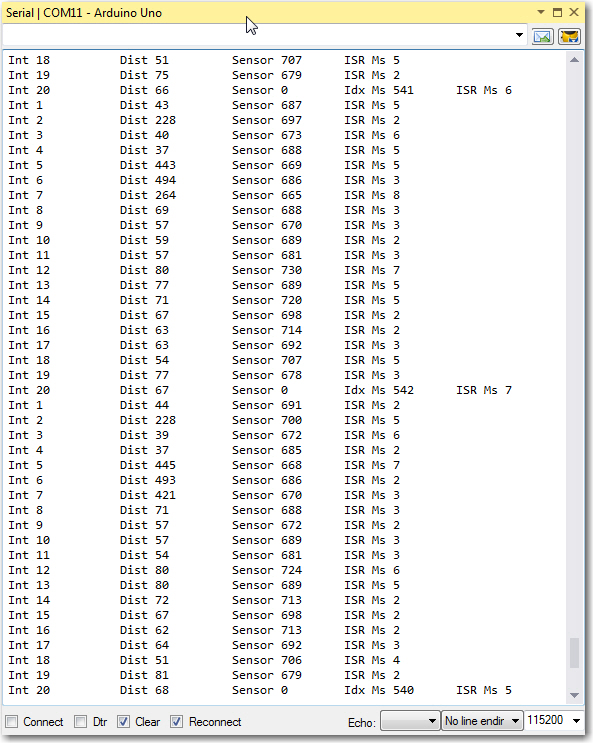

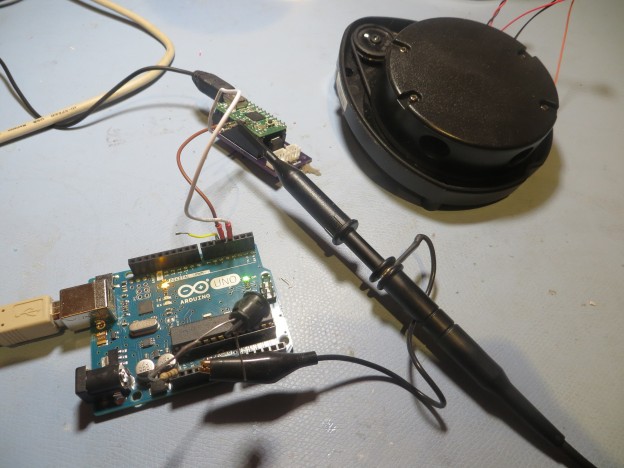

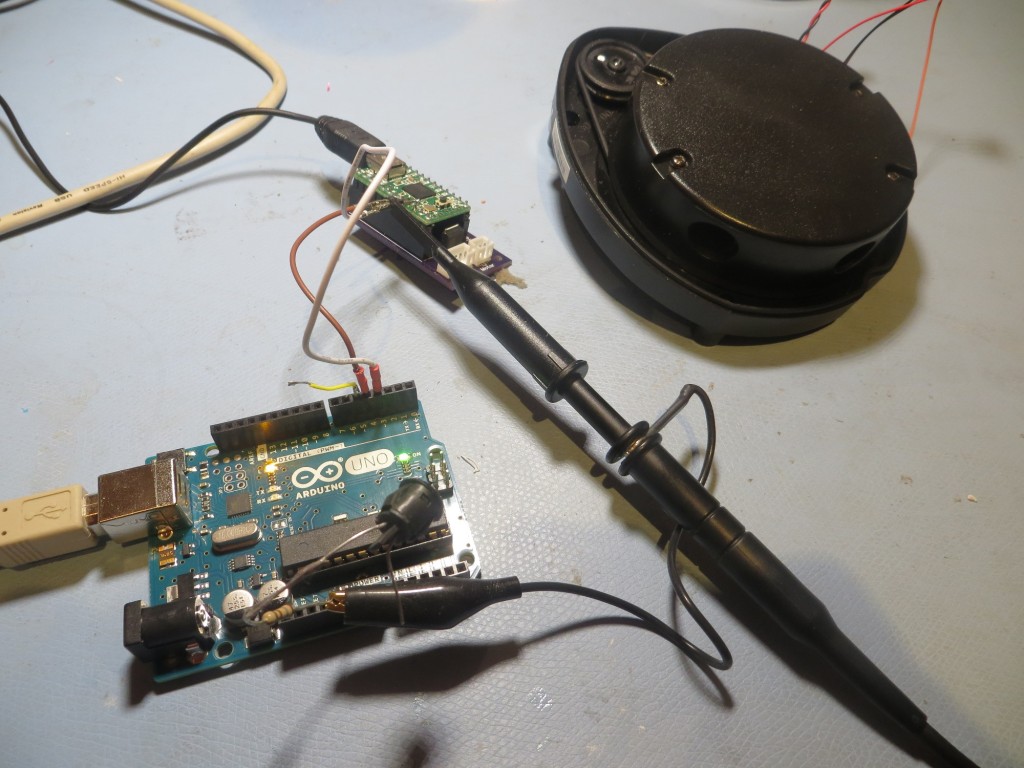

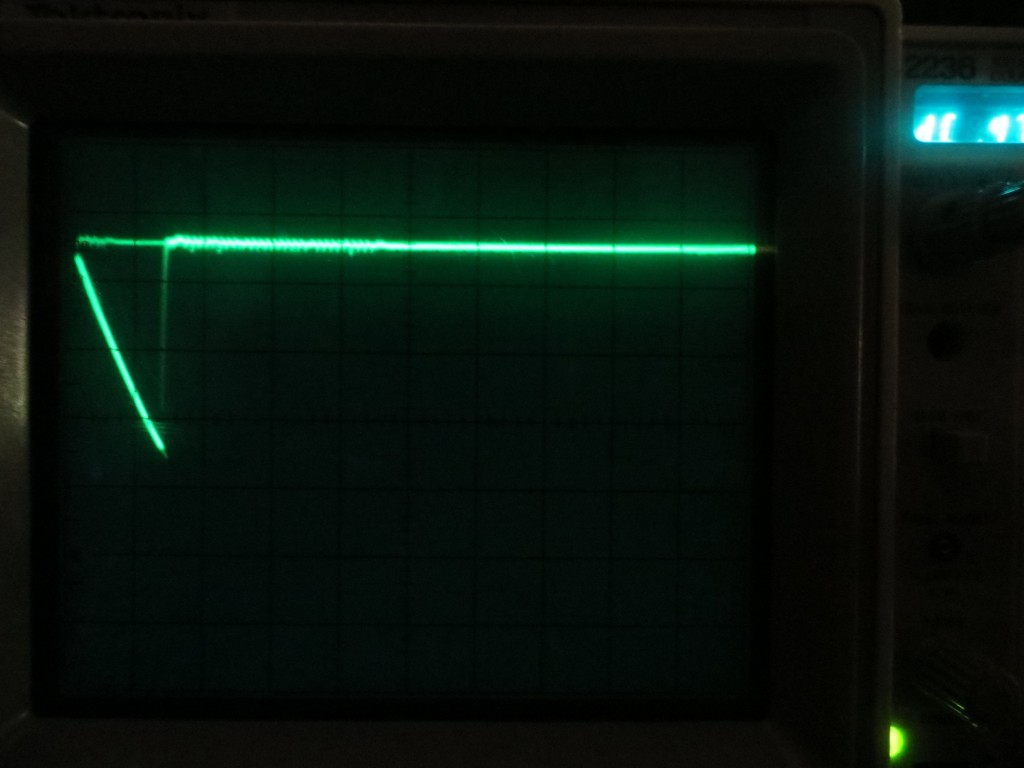

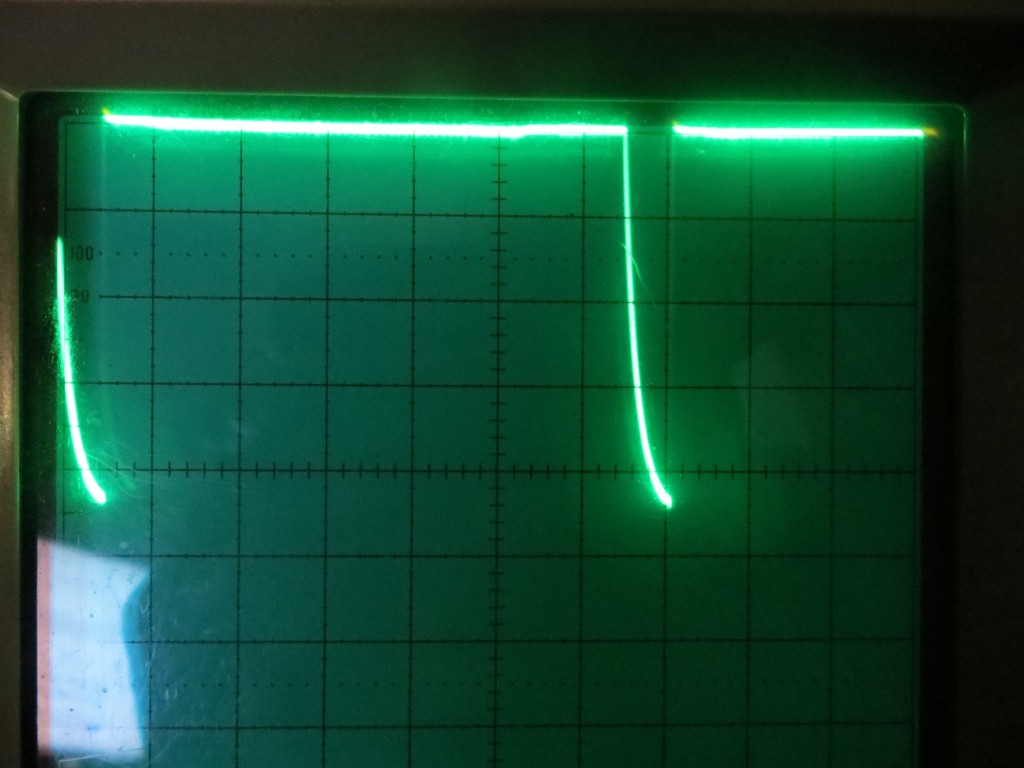

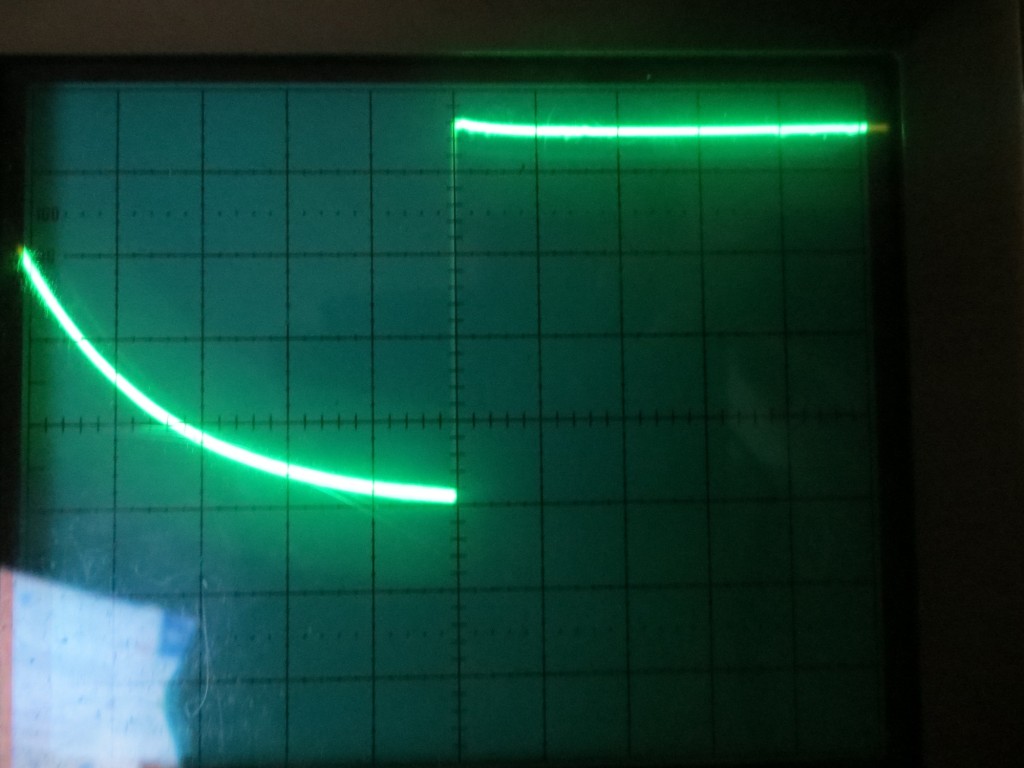

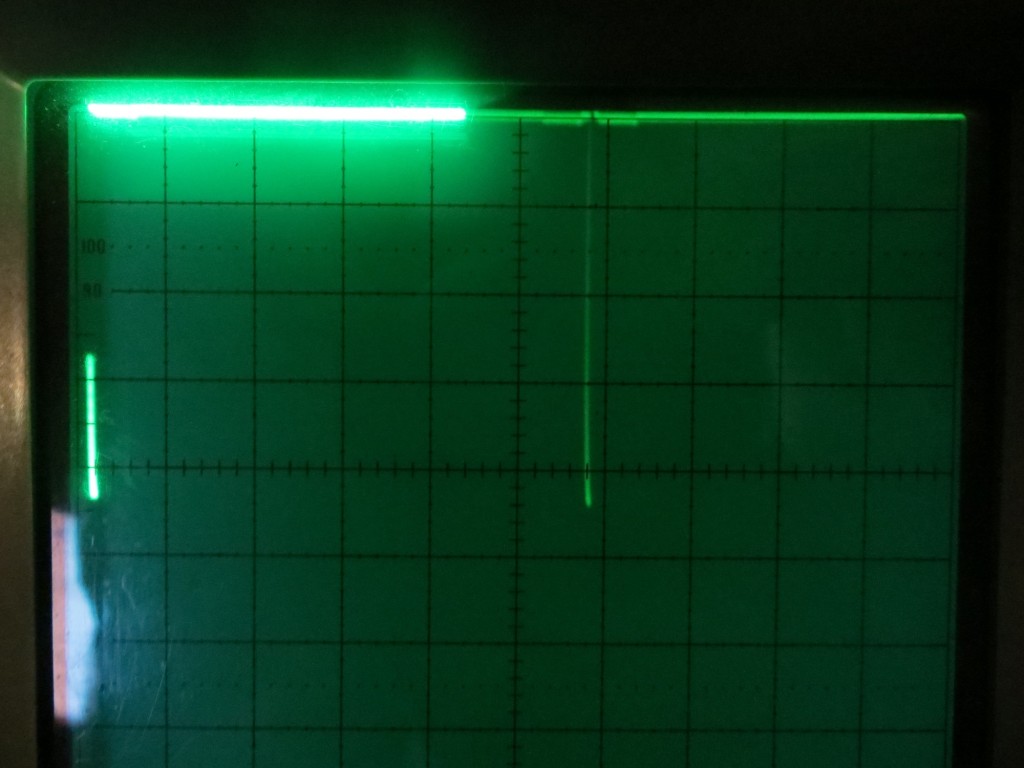

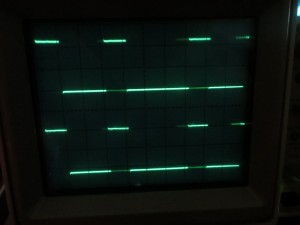

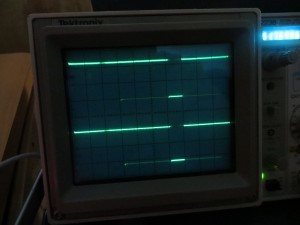

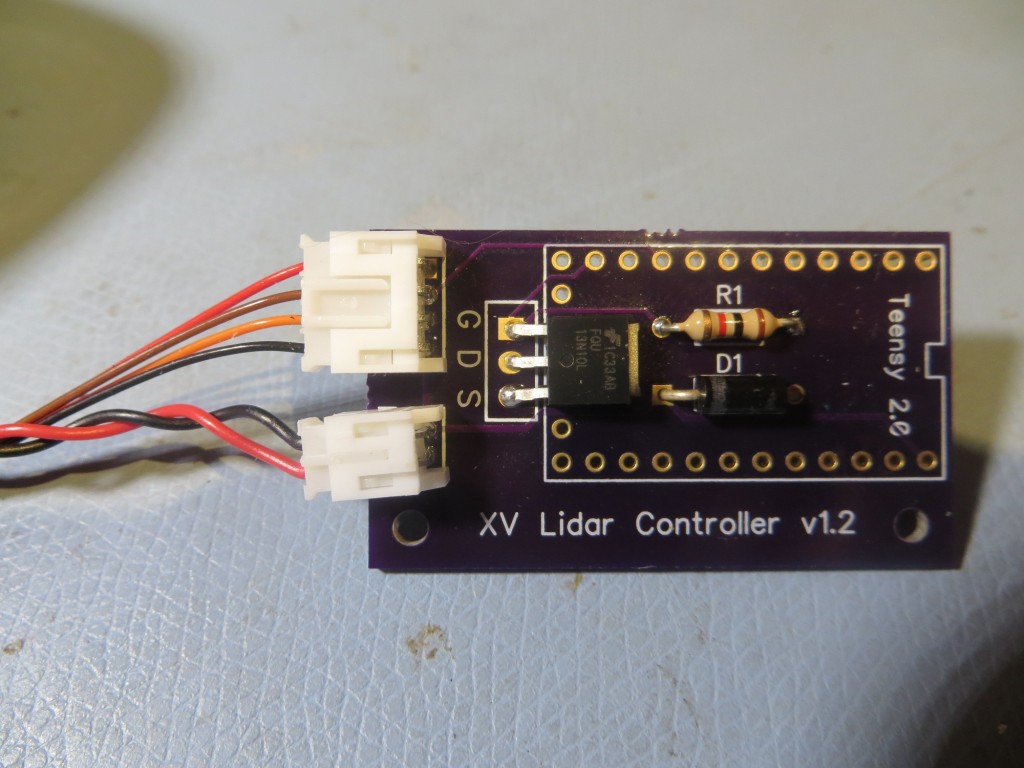

The XV-11 LIDAR from the NEATO vacuum has two interfaces. The first is a 2-wire motor drive, which expects a PWM signal through a FET driver. The second is a high-speed serial output that transfers packets containing RPM, status, and distance data. A company called ‘Get Surreal’ makes a really nice Teensy 2.0-based module and demo software that take all the guesswork out of controlling the XV-11, but its only output is to a serial monitor window on a PC. Somehow I have to get the data from the XV-11, decode it to extract RPM and distance data, and use that information for navigation. The answer I came up with was to use the Get Surreal PCB without the Teensy as a sort of ‘dongle’ that lets me control the XV-11 from an Arduino Mega. XV-11 serial data is re-routed from the Teensy PCB connector to Rx1 on the Arduino Mega. The Mega runs the same demo code as the Teensy. It decodes the serial packets, extracts the RPM data, and uses a PID loop to generate the motor drive signal for the driver FET on the Teensy PCB. Not particularly fancy, but it works!
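Decoding the XV-11 serial stream is mostly bit-twiddling. The sketch below follows the packet format documented by the XV-11 hacking community for later firmware:

- 22-byte packets starting with 0xFA
- an index byte from 0xA0 to 0xF9
- speed in 1/64 RPM units
- four distance readings with ‘invalid’ and ‘strength warning’ flags
- a 15-bit checksum

I have not checked this against the Get Surreal code, so treat the field layout as an assumption. Python is used here for readability; the same logic would carry over to the Mega’s C++ with little change.

```python
import struct
from typing import List, NamedTuple, Optional

PACKET_LEN = 22
START_BYTE = 0xFA
FIRST_INDEX = 0xA0   # index byte of the packet covering 0-3 degrees
LAST_INDEX = 0xF9    # 90 packets x 4 readings = 360 degrees

class Reading(NamedTuple):
    angle_deg: int
    distance_mm: Optional[int]   # None when the "invalid data" flag is set
    strength: int
    strength_warning: bool

class Packet(NamedTuple):
    rpm: float
    readings: List[Reading]

def xv11_checksum(packet: bytes) -> int:
    """15-bit checksum over the first 20 bytes, taken as 10 little-endian words."""
    chk32 = 0
    for i in range(10):
        word = packet[2 * i] | (packet[2 * i + 1] << 8)
        chk32 = (chk32 << 1) + word
    checksum = (chk32 & 0x7FFF) + (chk32 >> 15)   # fold the overflow back in
    return checksum & 0x7FFF

def decode_packet(packet: bytes) -> Optional[Packet]:
    """Decode one 22-byte packet; return None if it is malformed or fails the checksum."""
    if len(packet) != PACKET_LEN or packet[0] != START_BYTE:
        return None
    index = packet[1]
    if not FIRST_INDEX <= index <= LAST_INDEX:
        return None
    (expected,) = struct.unpack_from("<H", packet, 20)
    if xv11_checksum(packet) != expected:
        return None
    (speed_raw,) = struct.unpack_from("<H", packet, 2)
    rpm = speed_raw / 64.0                  # speed is reported in 1/64 RPM units
    base_angle = (index - FIRST_INDEX) * 4
    readings = []
    for n in range(4):
        b0, b1, s0, s1 = packet[4 + 4 * n: 8 + 4 * n]
        invalid = bool(b1 & 0x80)
        warning = bool(b1 & 0x40)
        distance = b0 | ((b1 & 0x3F) << 8)  # 14-bit distance in mm
        readings.append(Reading(base_angle + n, None if invalid else distance,
                                s0 | (s1 << 8), warning))
    return Packet(rpm, readings)
```

The RPM value from each packet is what feeds the motor speed loop. The readings, each tagged with its angle, are what the navigation code would eventually consume.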

XV-11 Controller V1.1 without the Teensy 2. The original connectors were replaced with right-angle versions
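The other half of the Mega’s XV-11 job is turning the decoded RPM into a motor drive level, which is a standard PID loop. Here is a minimal sketch that uses conditional integration as its anti-windup scheme. The 300 RPM target matches the figure quoted earlier. The gains, the 0-255 duty range, and the class name are placeholders of my own, not the values used in the Get Surreal demo code.

```python
from typing import Optional

class SpeedPid:
    """Minimal PID speed loop: measured RPM in, PWM duty (0-255) out."""

    def __init__(self, kp: float, ki: float, kd: float, target_rpm: float = 300.0,
                 out_min: float = 0.0, out_max: float = 255.0) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.target_rpm = target_rpm
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0                      # accumulated error x seconds
        self.prev_error: Optional[float] = None

    def update(self, measured_rpm: float, dt: float) -> float:
        """Return the new duty cycle given the latest RPM reading and elapsed time dt (s)."""
        error = self.target_rpm - measured_rpm
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        trial_integral = self.integral + error * dt
        output = self.kp * error + self.ki * trial_integral + self.kd * derivative
        # Anti-windup: only keep the new integral if the output is not saturated.
        if self.out_min < output < self.out_max:
            self.integral = trial_integral
        return min(self.out_max, max(self.out_min, output))
```

In use, each decoded packet’s RPM would be passed to `update()` along with the time since the previous call. The returned duty would then be written to whichever PWM pin drives the FET on the dongle board.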

During testing of the ‘XV-11 dongle’, I discovered an unintended consequence of the change from upright to right-angle connectors. As it turned out, the right-angle connectors caused the pin assignments to be reversed – yikes! My initial reaction to this was to simply pull the pins from the XV-11 connectors and re-insert them into the now-proper places. Unfortunately, this meant that I could no longer go back to controlling the XV-11 from the original controller module – bummer. So, I wound up constructing two short converter cables to convert the now-reversed XV-11 cables to the ‘normal’ sense for the Get Surreal controller module. Nothing is ever simple….

Navigation Controller

The navigation controller for the 4WD robot will be an Arduino Mega 2560. I chose this board because it has LOTS of digital/analog/PWM I/O and multiple hardware serial ports. You can’t have too many I/O ports, and I need at least two hardware serial ports: one for the USB connection to the host PC, and one to interface to the NEATO XV-11 spinning LIDAR. The downside to using the Mega is its size: about one and a half times the board area of an Arduino Uno (roughly 101.5 x 53.3 mm versus 68.6 x 53.4 mm).
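The XV-11 streams continuously, so the code reading its hardware serial port has to find packet boundaries in a byte stream that arrives in arbitrary chunks. Below is a minimal sketch of that framing step. It resynchronizes on the 0xFA start byte and uses the valid index-byte range (0xA0 to 0xF9) as a sanity check. The class name is hypothetical, and a real implementation should also verify the packet checksum before trusting a frame.

```python
from typing import List

class Xv11Framer:
    """Reassemble 22-byte XV-11 packets from an arbitrarily chunked byte stream."""
    PACKET_LEN = 22
    START_BYTE = 0xFA

    def __init__(self) -> None:
        self.buf = bytearray()

    def feed(self, chunk: bytes) -> List[bytes]:
        """Add newly received bytes; return any complete packets found so far."""
        self.buf += chunk
        packets = []
        while True:
            start = self.buf.find(self.START_BYTE)
            if start < 0:
                self.buf.clear()           # no start byte anywhere: discard noise
                break
            del self.buf[:start]           # drop garbage before the start byte
            if len(self.buf) < self.PACKET_LEN:
                break                      # wait for the rest of the packet
            candidate = bytes(self.buf[:self.PACKET_LEN])
            if 0xA0 <= candidate[1] <= 0xF9:
                packets.append(candidate)
                del self.buf[:self.PACKET_LEN]
            else:
                del self.buf[:1]           # 0xFA was just data; resync past it
        return packets
```

On the Mega, the same loop would be fed from whatever bytes `Serial1` has available on each pass through `loop()`. The PC link stays free on the other hardware serial port.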