Posted November 14, 2015

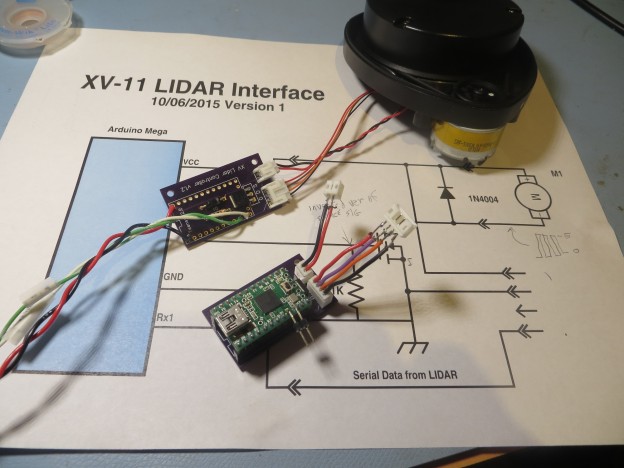

Back in the spring of this year I ran a series of experiments that convinced me that acoustic multipath problems made it impossible to reliably navigate and recover from ‘stuck’ conditions using only acoustic ‘ping’ sensors (see “Adventures with Wall-E’s EEPROM, Part VI”). At the end of that post I described some alternative sensors, including the LIDAR-Lite unit from Pulsed Light.

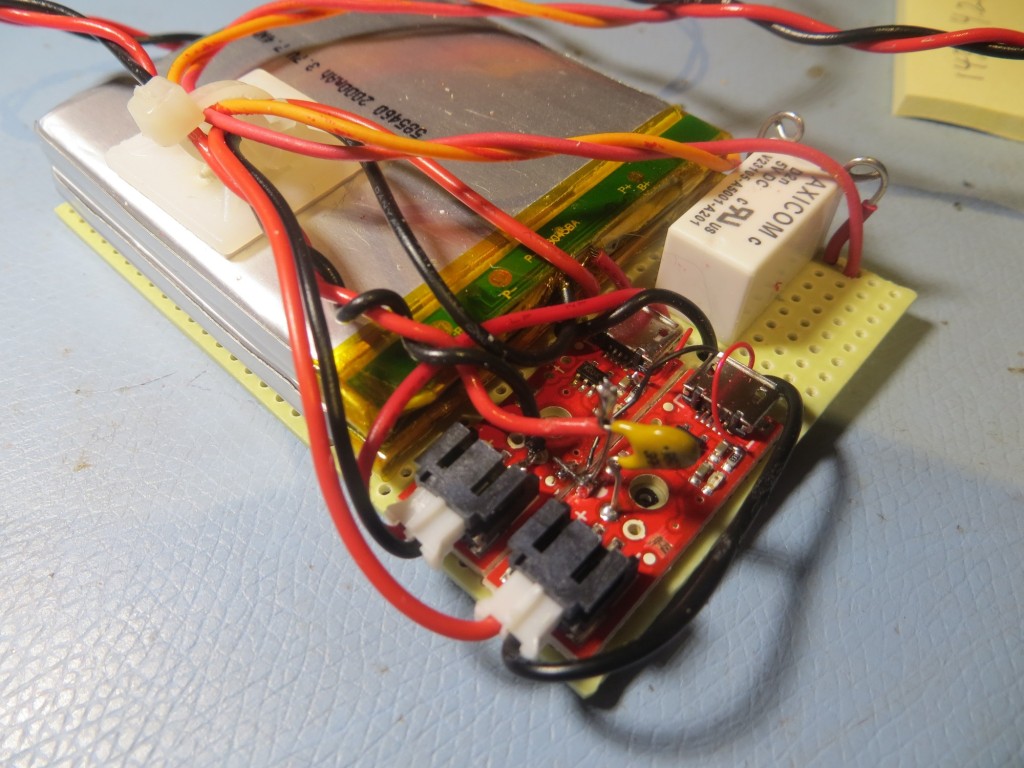

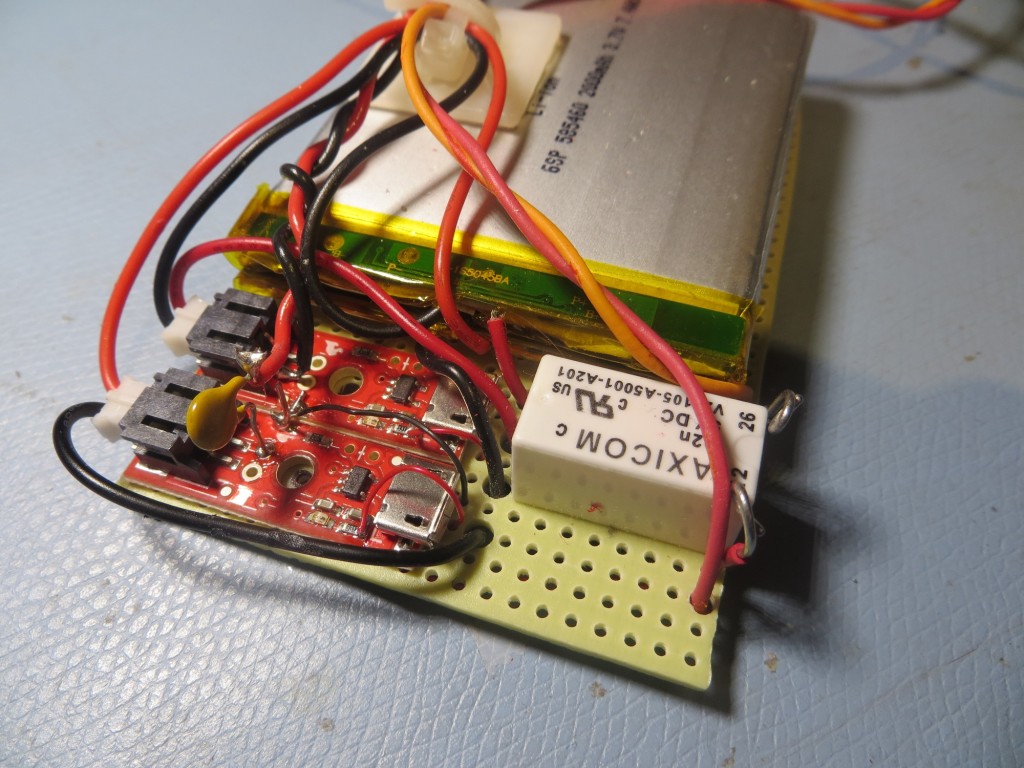

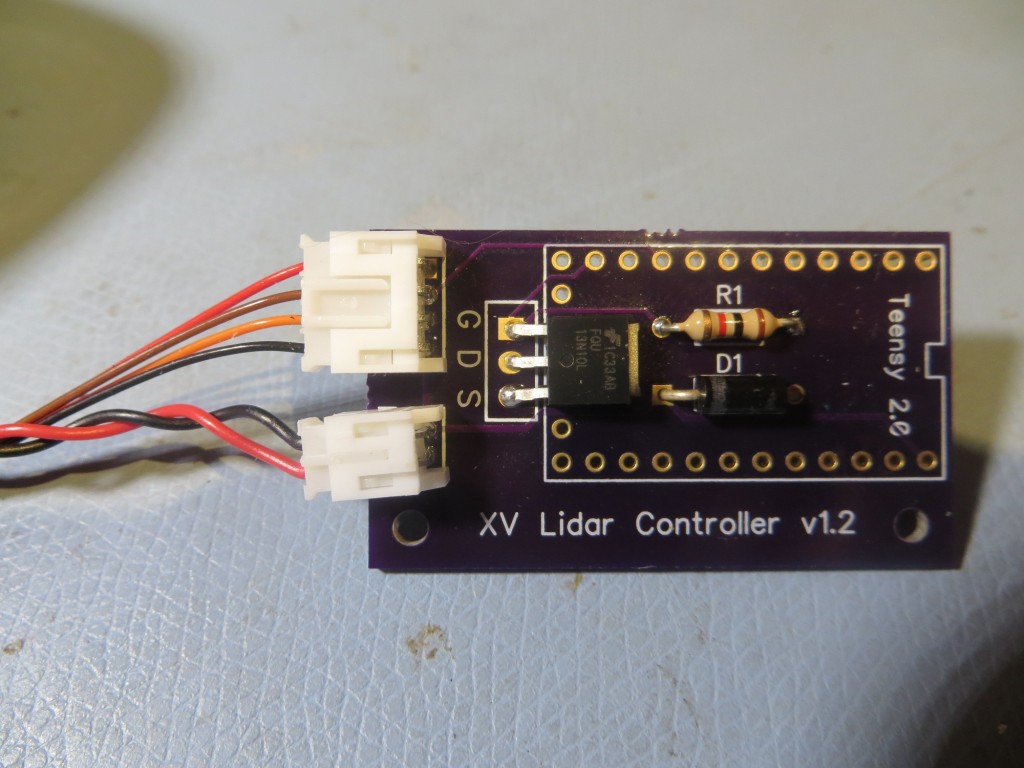

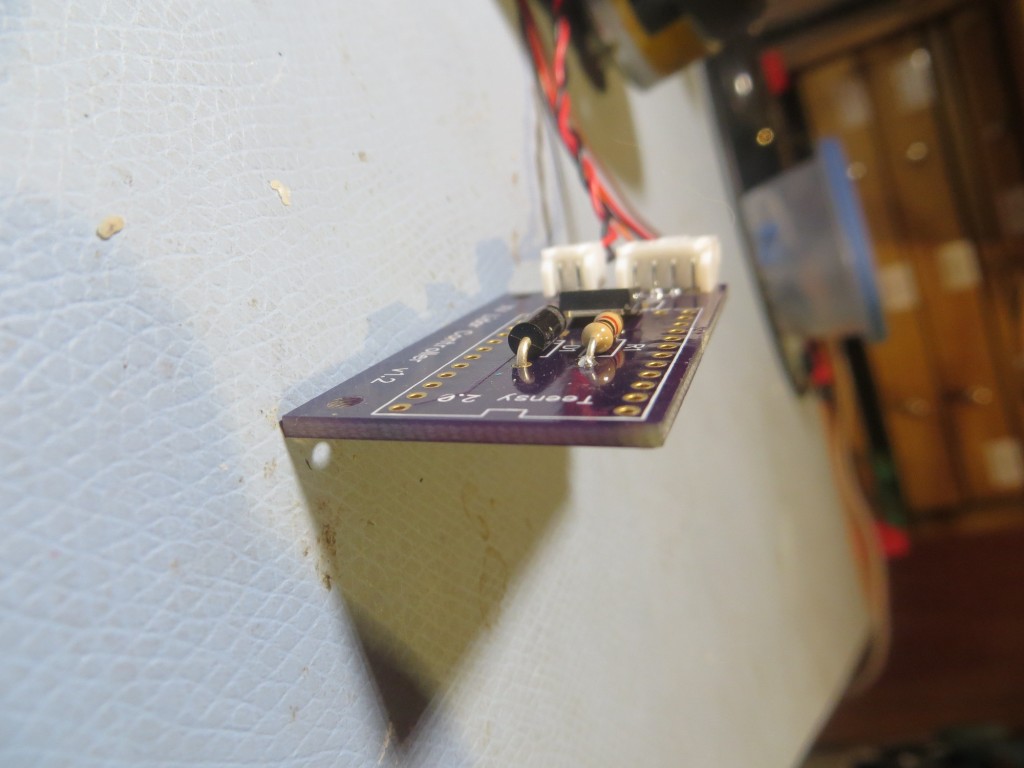

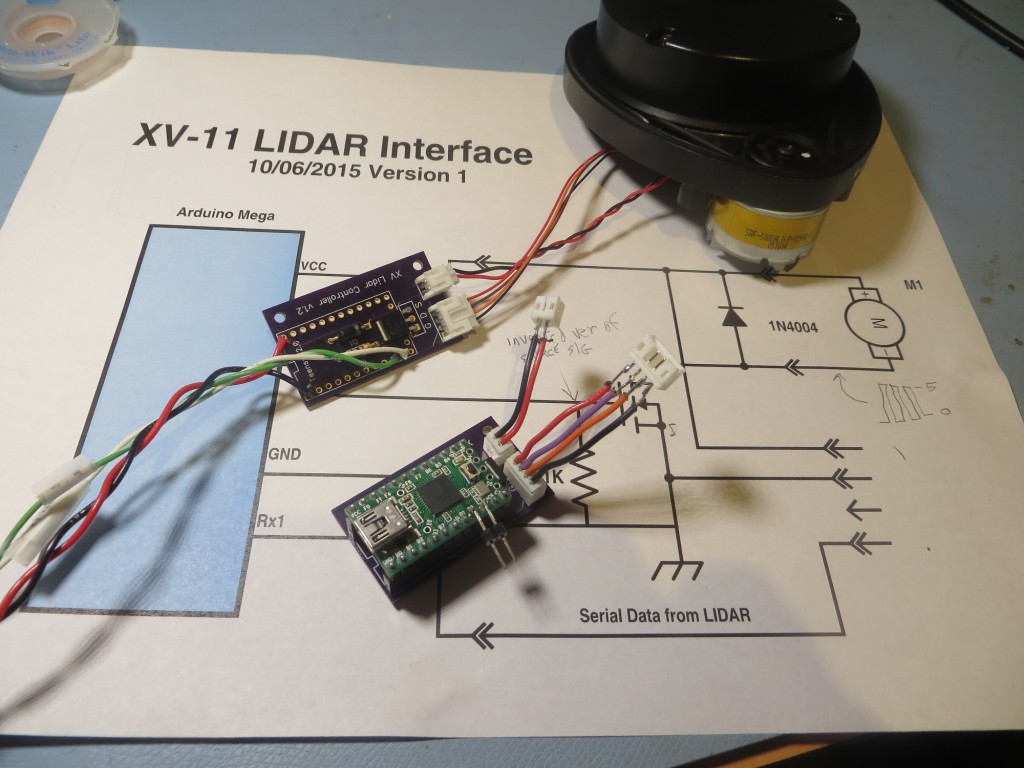

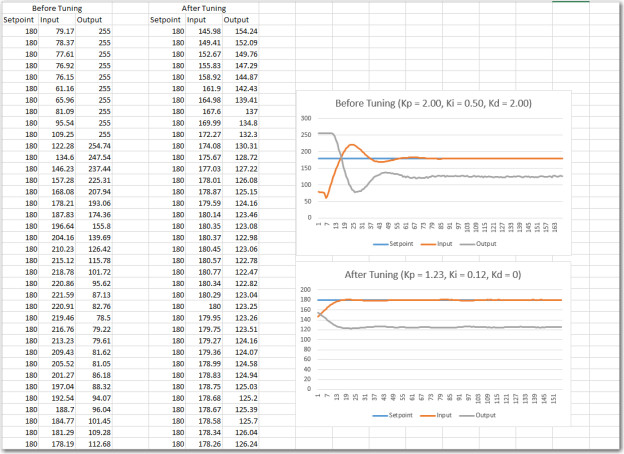

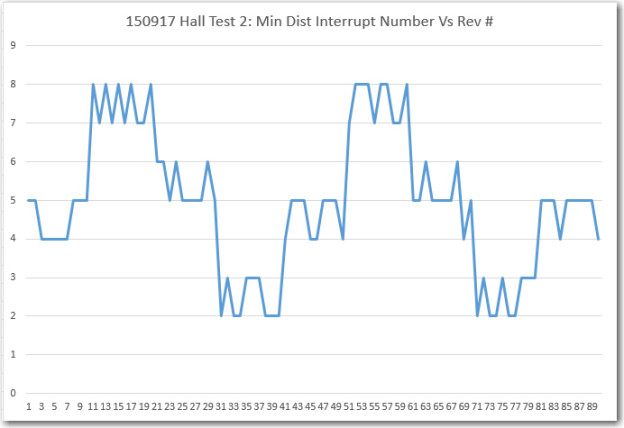

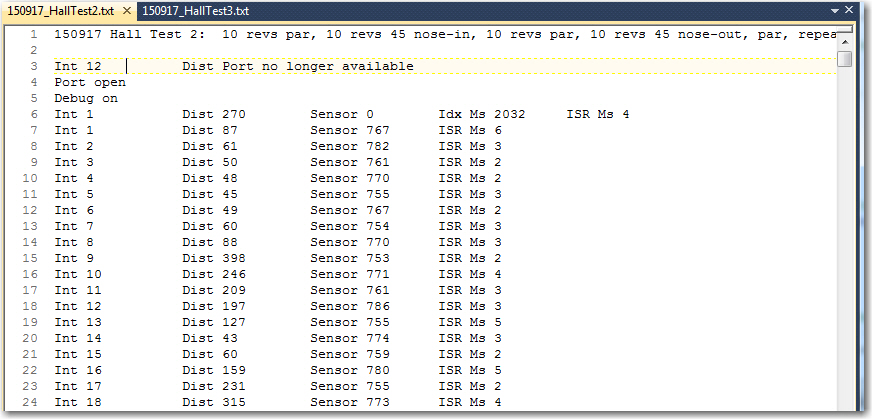

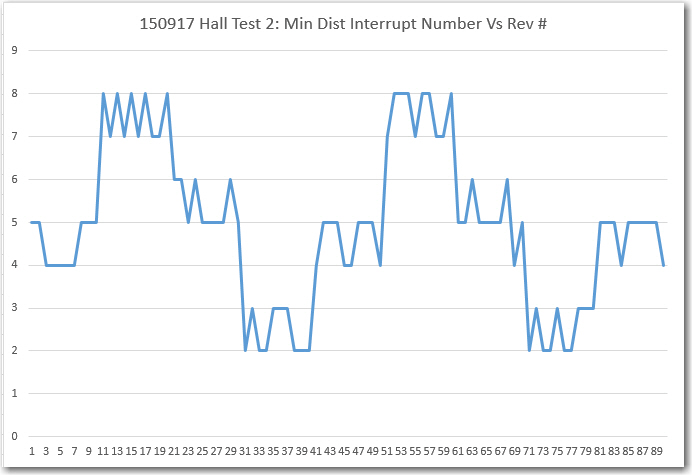

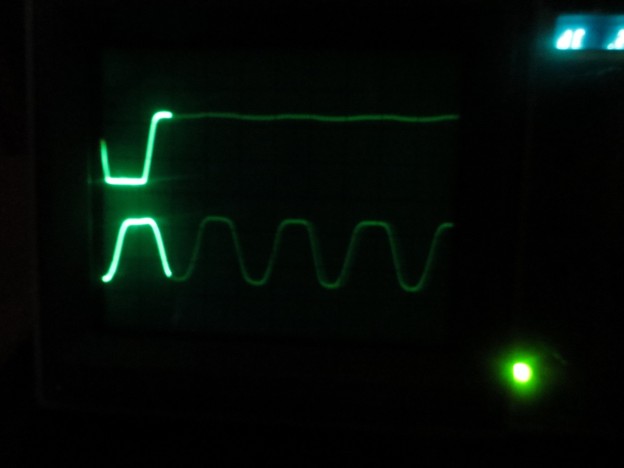

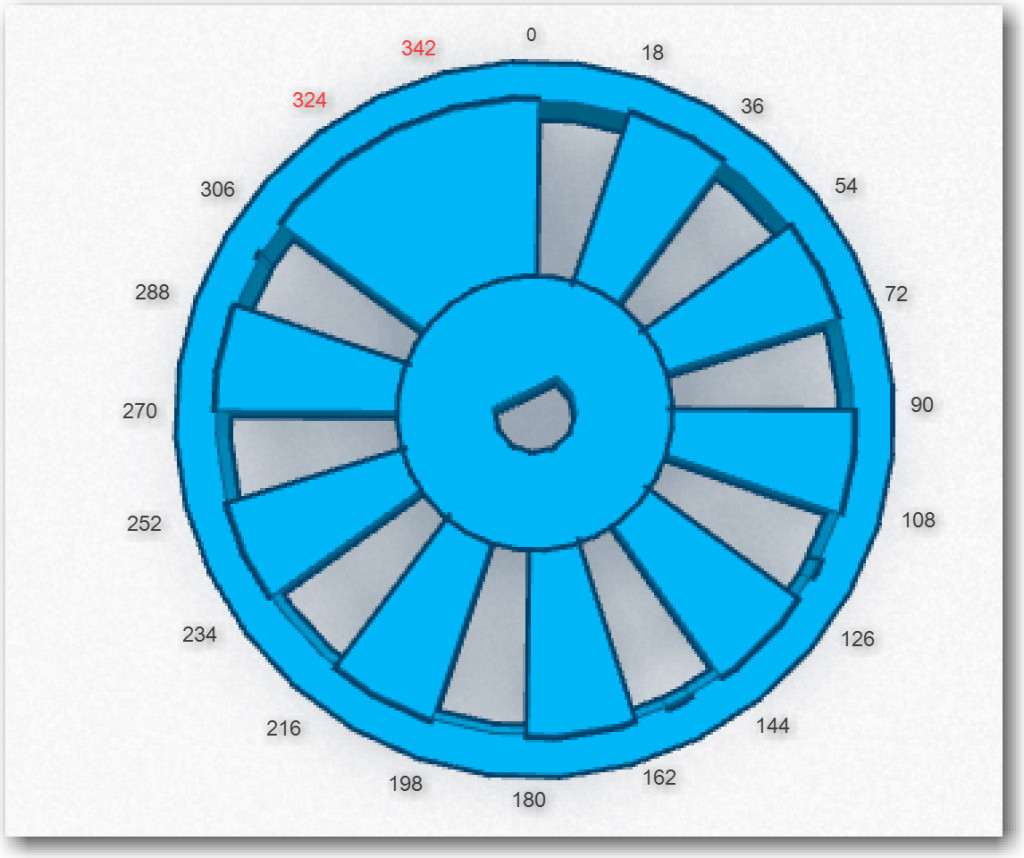

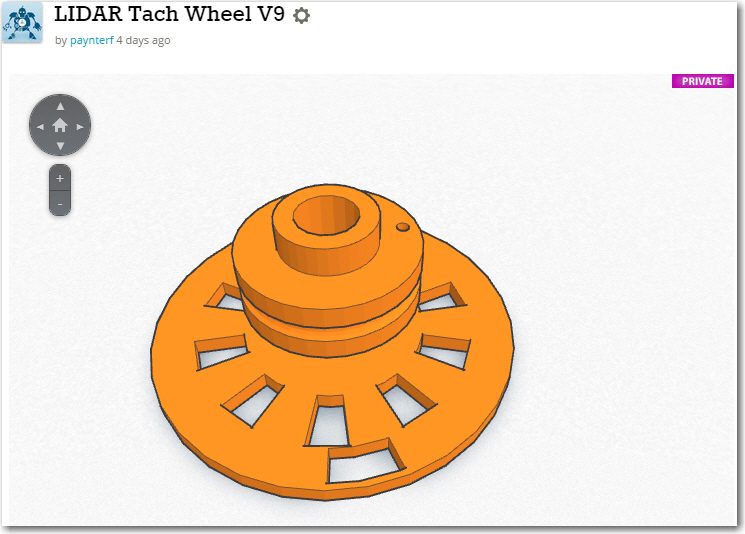

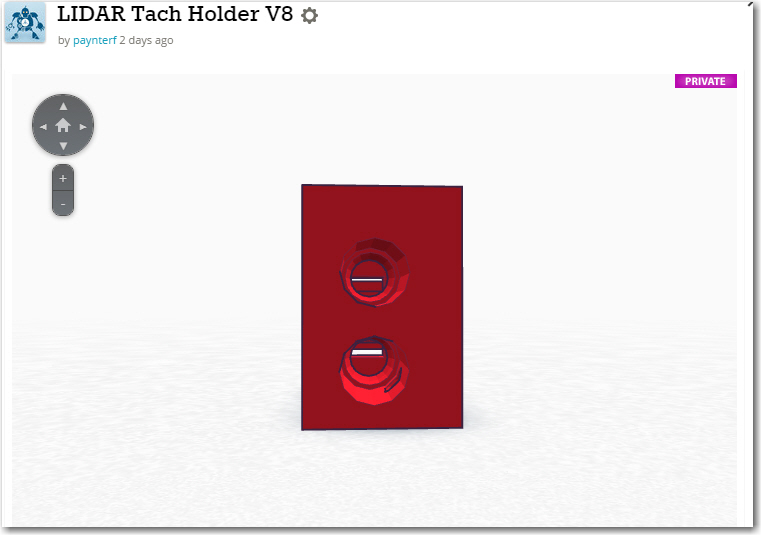

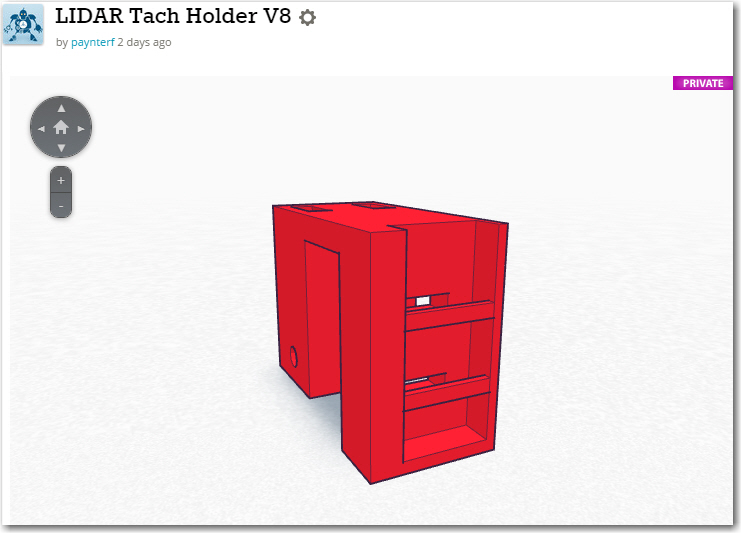

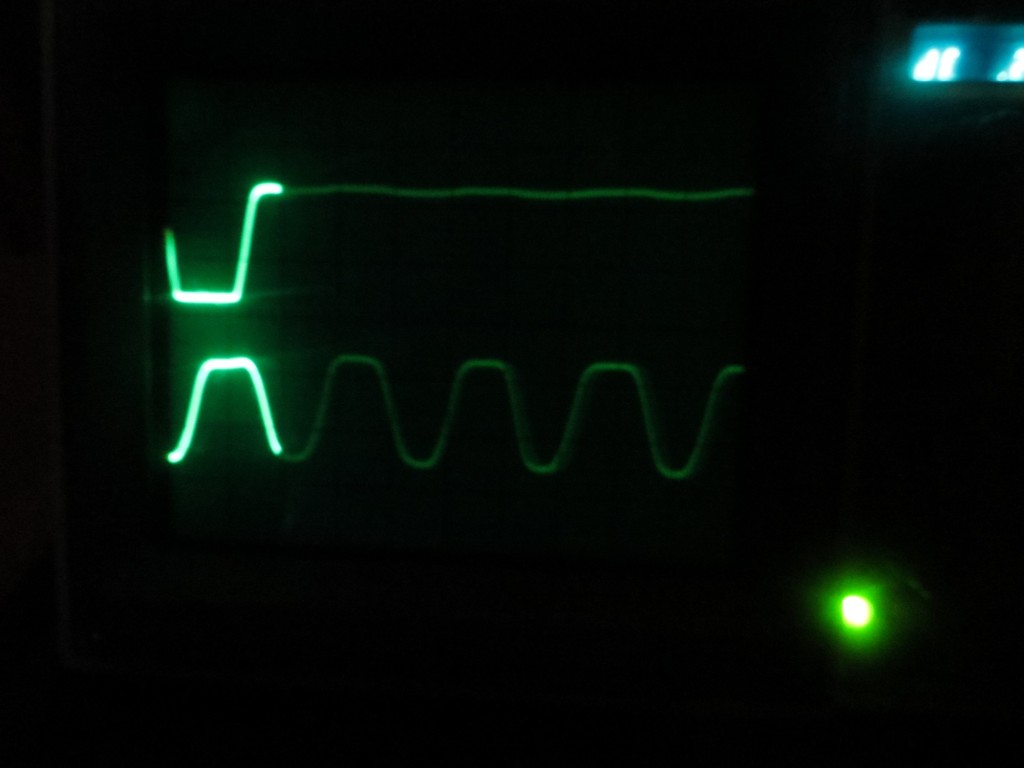

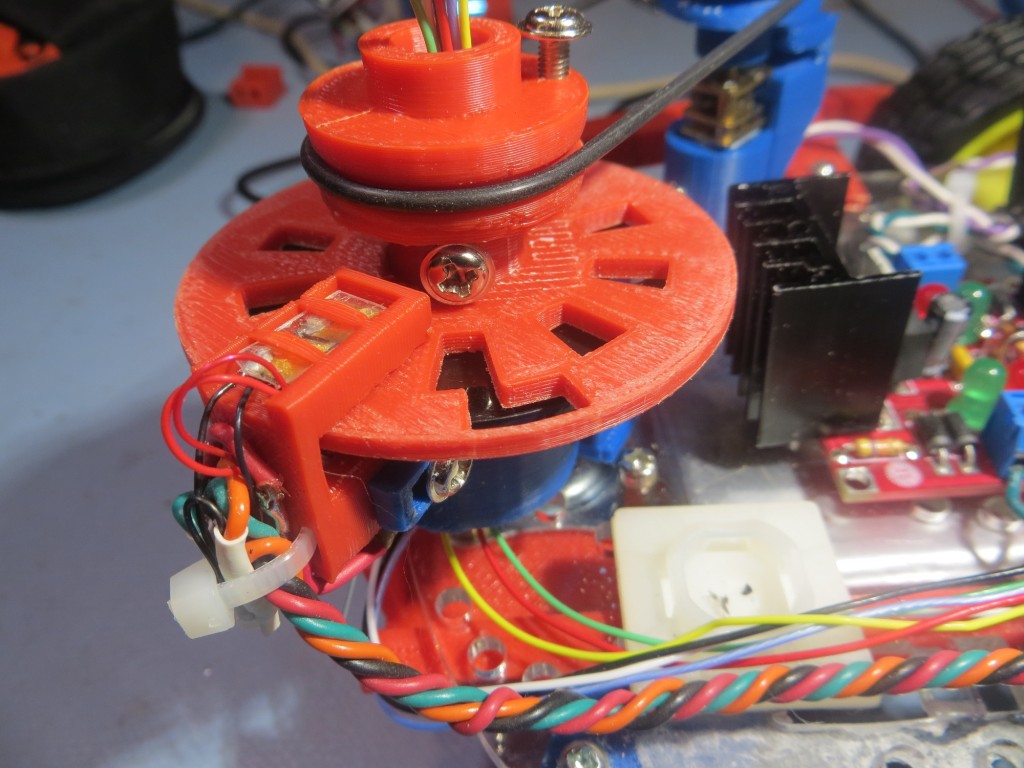

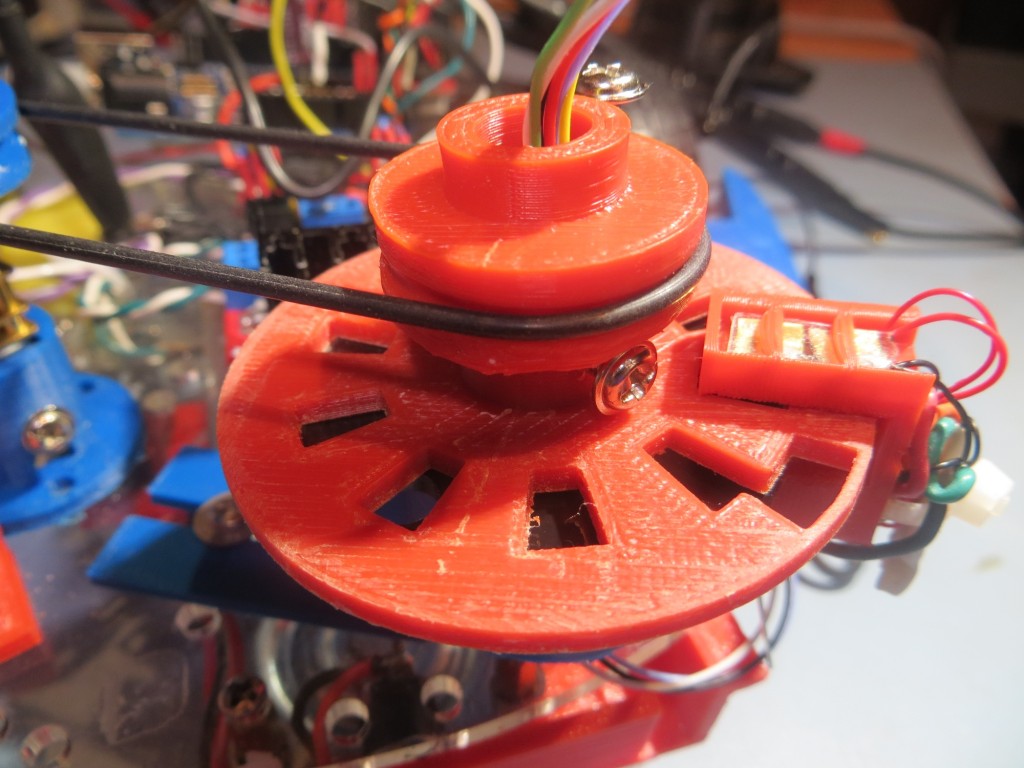

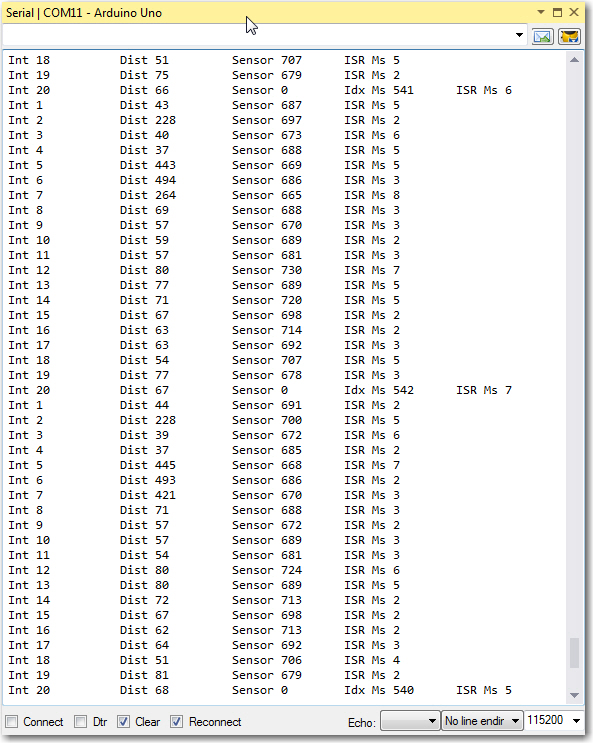

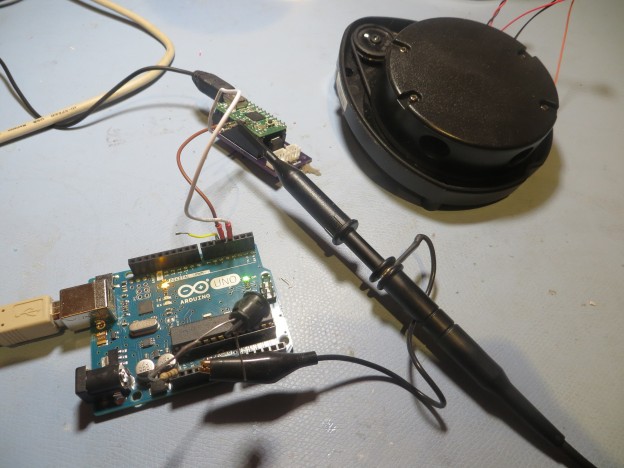

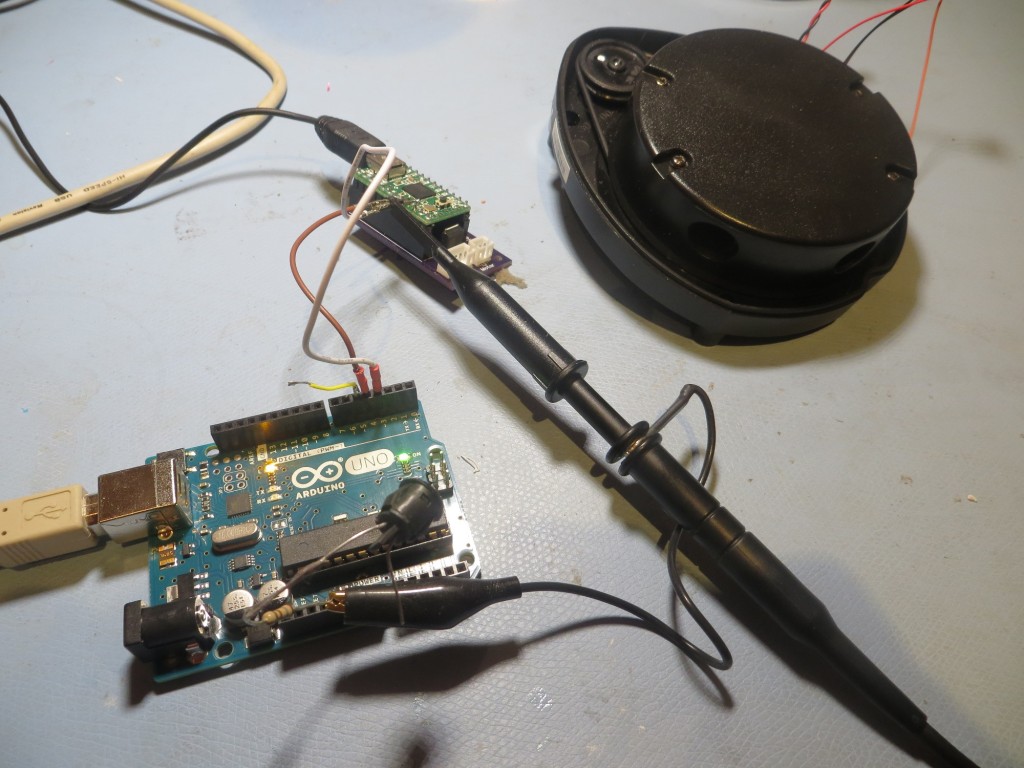

In that same article, I also hypothesized that I might be able to replace all the acoustic sensors with a single spinning-LIDAR system using another motor and a cool slip-ring part from Adafruit. In the intervening months between April and now, I have been working on implementing and testing this spinning-LIDAR idea, but just recently arrived at the conclusion that this idea too is a dead end (to paraphrase Thomas Edison: “I have not failed. I’ve just found 2 ways that won’t work.”). I simply couldn’t make the system work. In order to acquire ranging data fast enough to keep Wall-E from crashing into things, I had to get the rotation rate up to around 300 rpm (i.e. 5 rev/sec). However, when I did that, three problems showed up: I couldn’t process the data fast enough with my Arduino Uno processor, the data itself became suspect because the LIDAR was moving while each measurement was being taken, and the higher the rpm got, the faster the battery ran down. In the end, I realized I was throwing most of the LIDAR data away anyway, and was paying too high a price in terms of battery drain and processing for the data I did keep.
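To put rough numbers on the ‘LIDAR was moving during the measurement’ problem, here is a small back-of-the-envelope sketch in plain C++. The function names are made up for illustration, and any measurement time or reading rate you plug in is an assumption; I am not quoting LIDAR-Lite specifications here.

```cpp
// Back-of-the-envelope helpers for a spinning range sensor.
// All names are hypothetical; the inputs are whatever you assume or measure.

// Degrees the beam sweeps while one measurement is in progress.
constexpr double sweepDegrees(double rpm, double measurementMs) {
    const double revPerSec = rpm / 60.0;           // 300 rpm -> 5 rev/sec
    const double degPerSec = revPerSec * 360.0;    // 5 rev/sec -> 1800 deg/sec
    return degPerSec * (measurementMs / 1000.0);   // angle covered in one measurement
}

// Number of readings available per revolution at a given reading rate.
constexpr double readingsPerRev(double rpm, double readingsPerSec) {
    return readingsPerSec / (rpm / 60.0);
}
```

For example, at 300 rpm a purely hypothetical 10 ms measurement would smear each reading across 18 degrees of arc, and a hypothetical 100 readings/sec would yield only 20 readings per revolution. Slowing the rotation shrinks the smear, but then the data arrives too slowly to avoid obstacles, which is the bind described above.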

So, in my last post on the spinning-LIDAR configuration I summarized my findings to date, and described my plan to ‘go back to basics’: return to acoustic ‘ping’ sensors for left/right wall ranging, and replace the spinning-LIDAR system with a fixed forward-facing LIDAR. Having just two ‘ping’ sensors pointed in opposite directions should suppress or eliminate inter-sensor interference problems, and the fixed forward-facing LIDAR should be much more effective than an acoustic sensor for obstacle and ‘stuck’ detection.

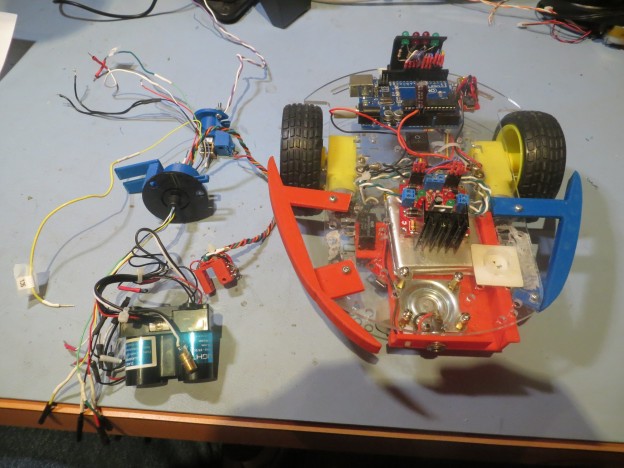

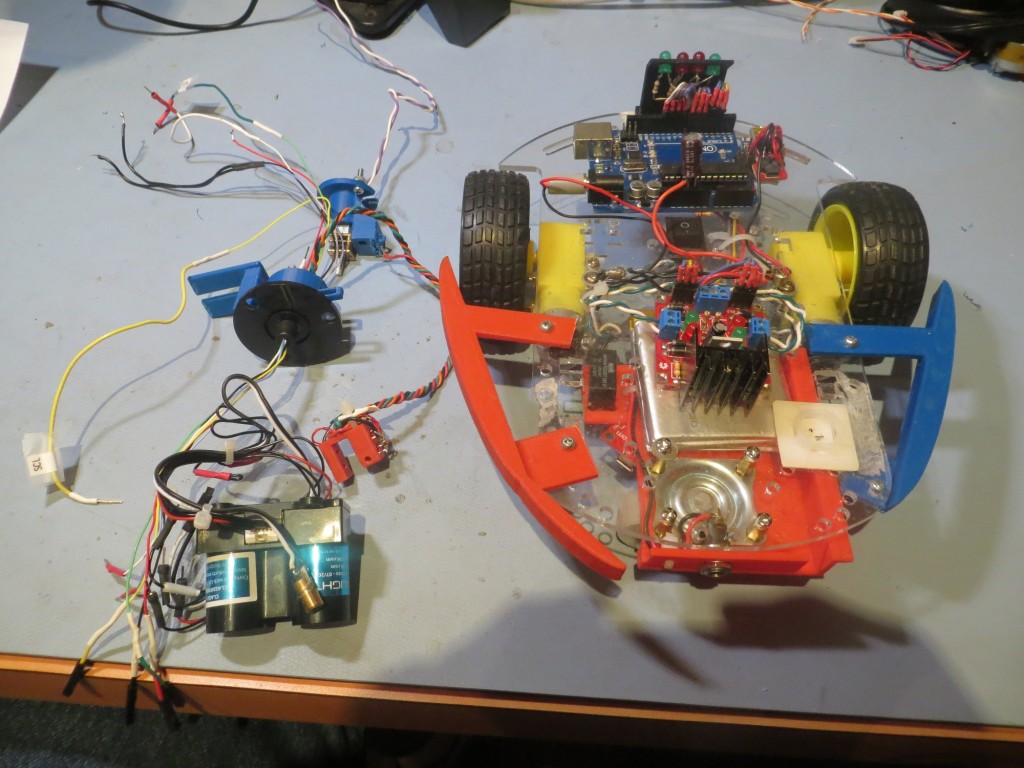

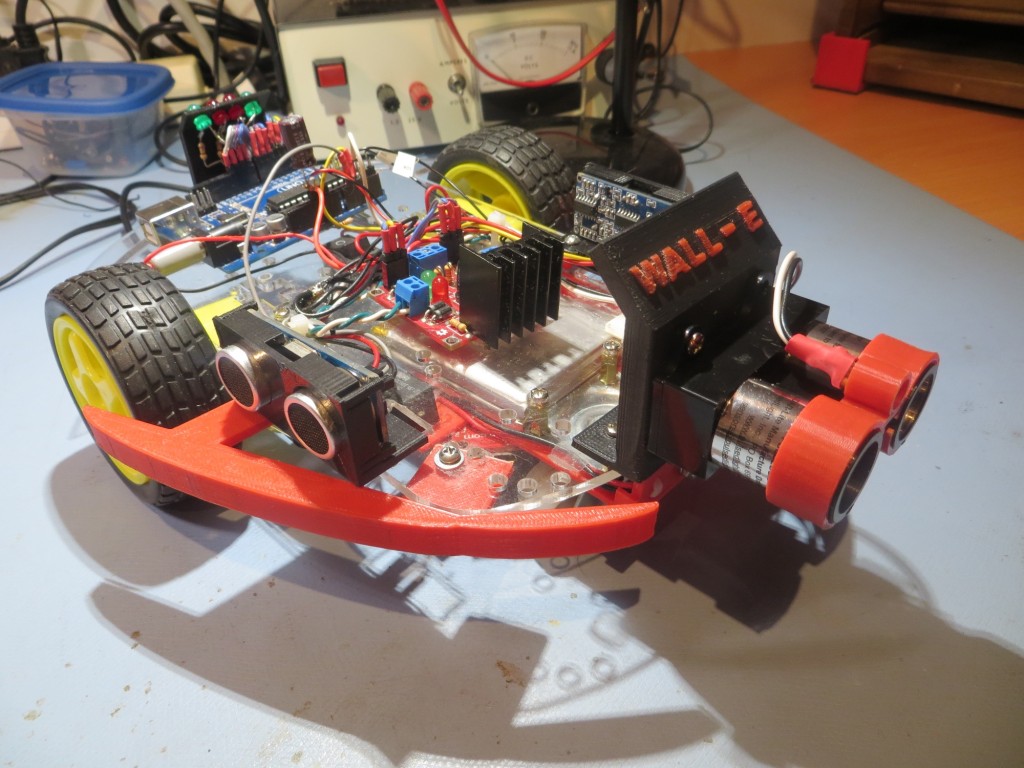

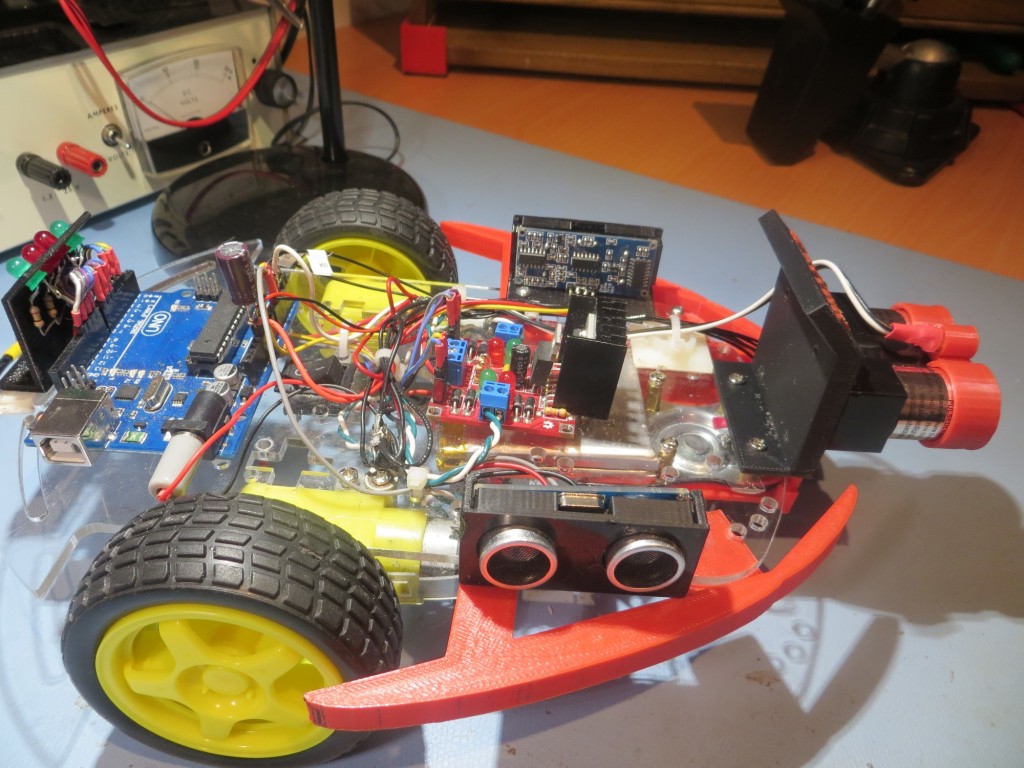

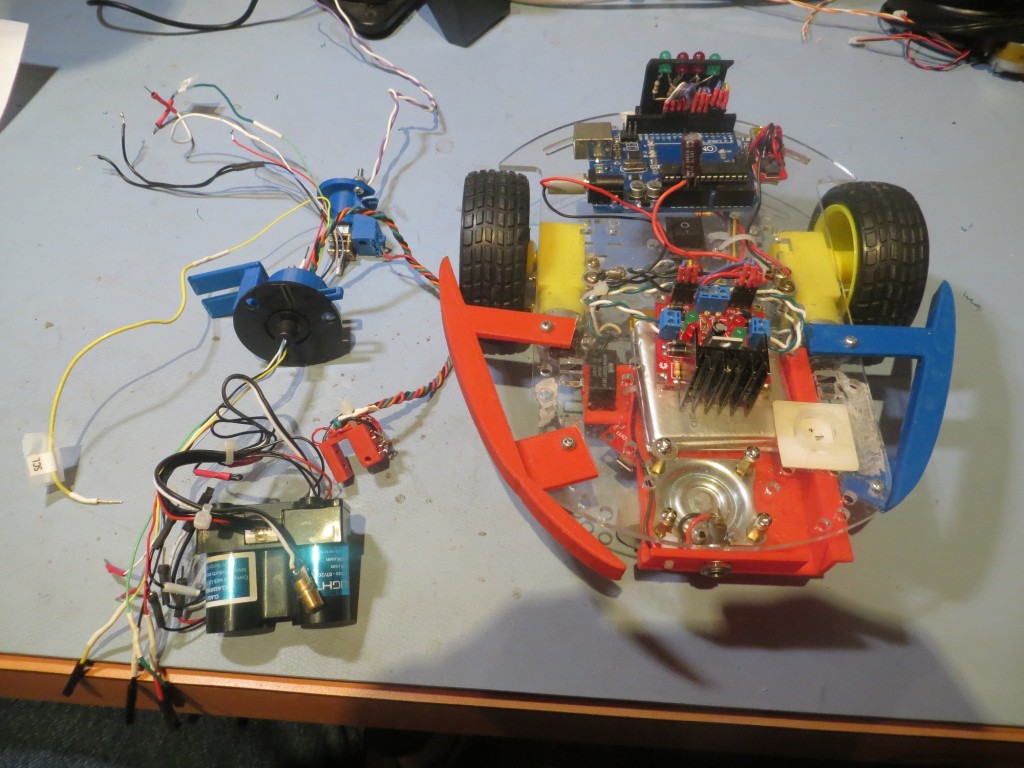

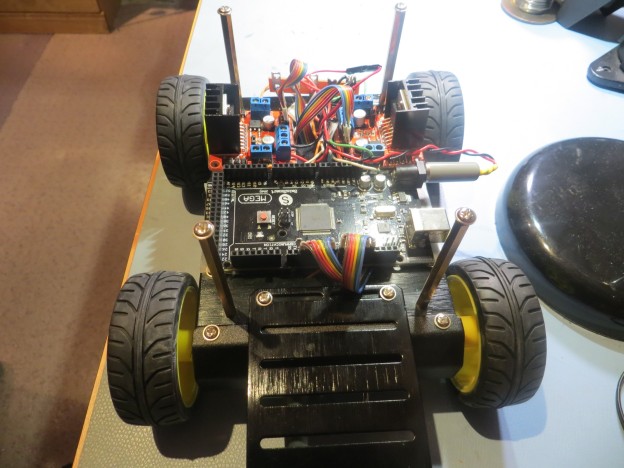

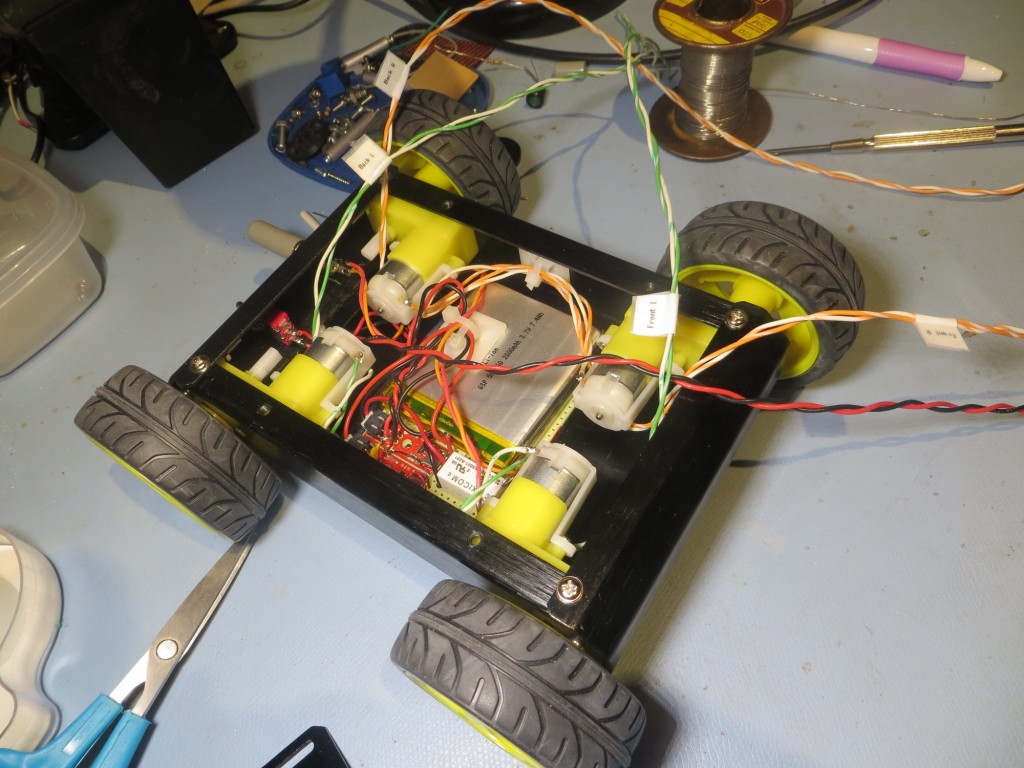

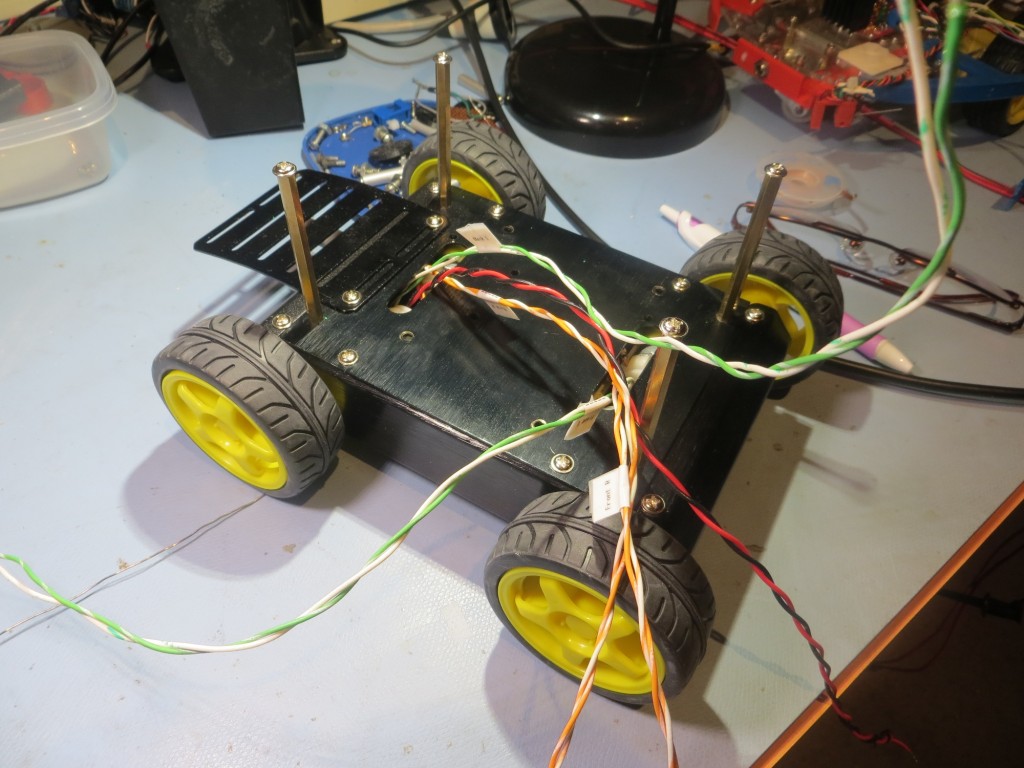

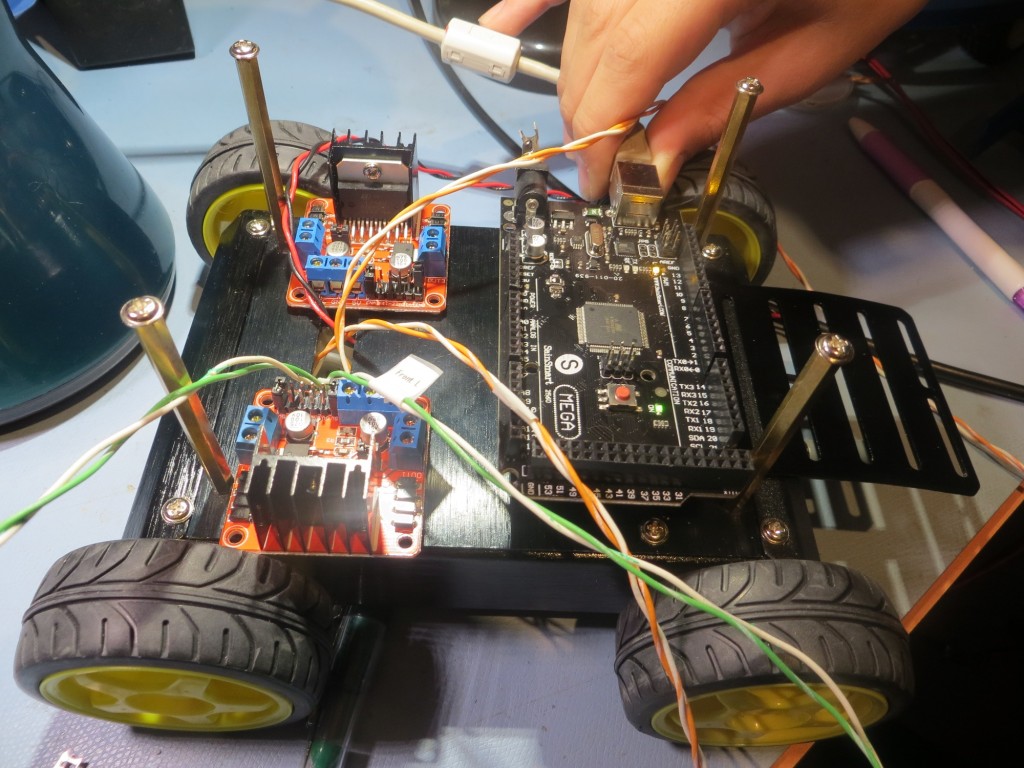

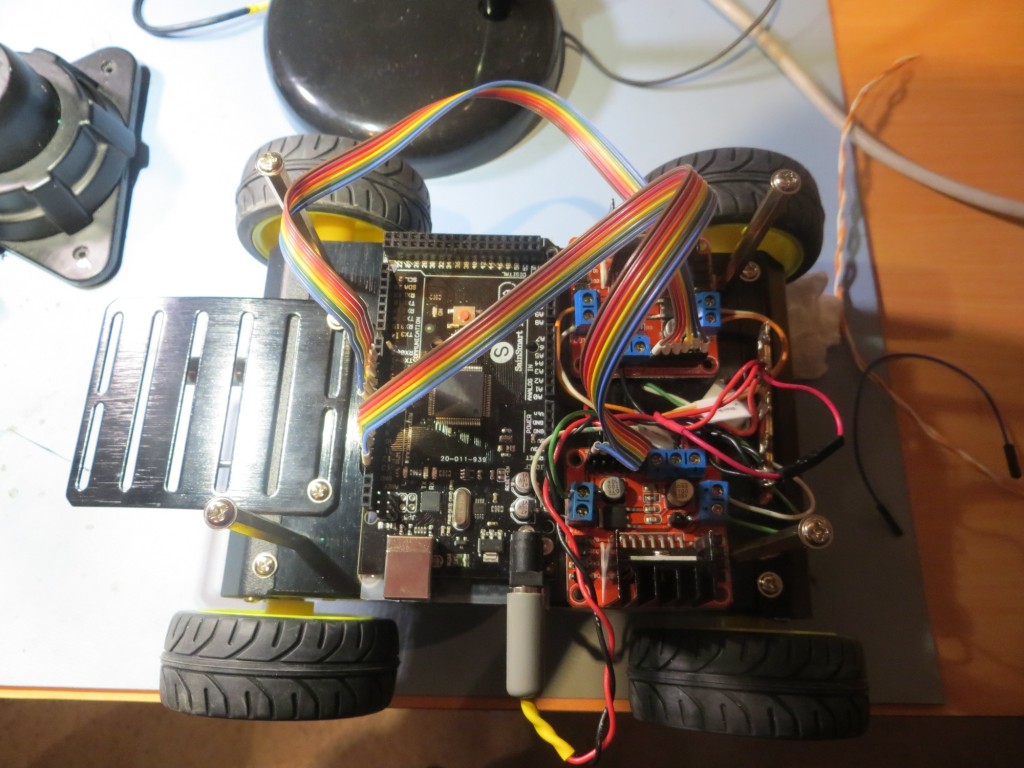

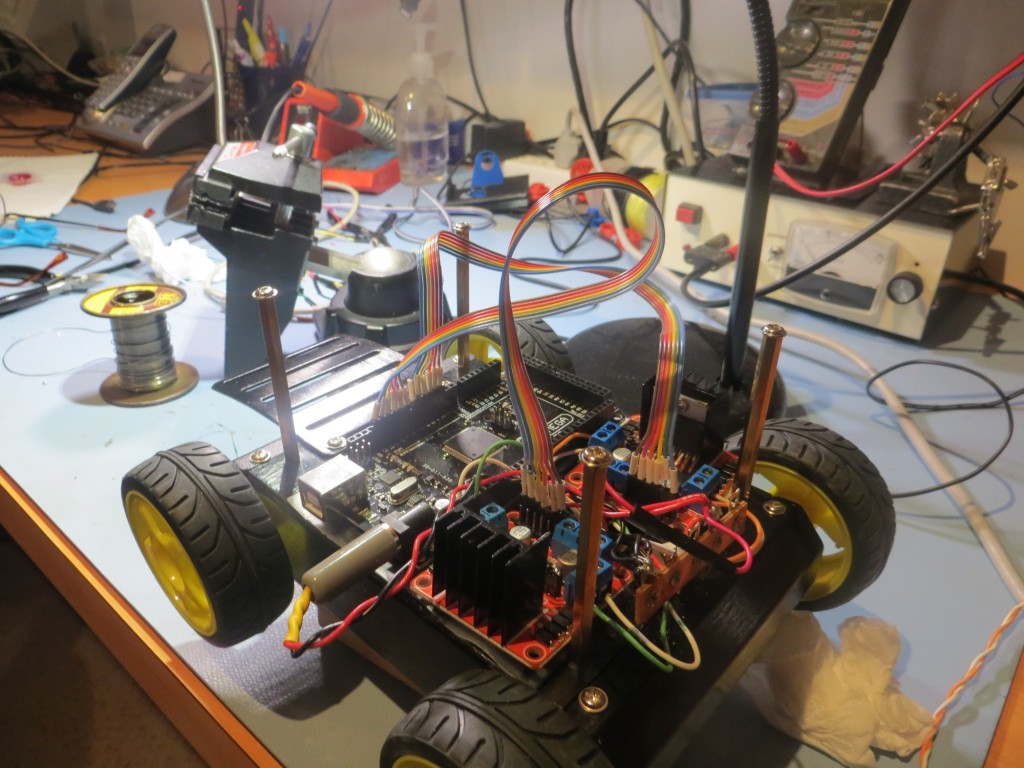

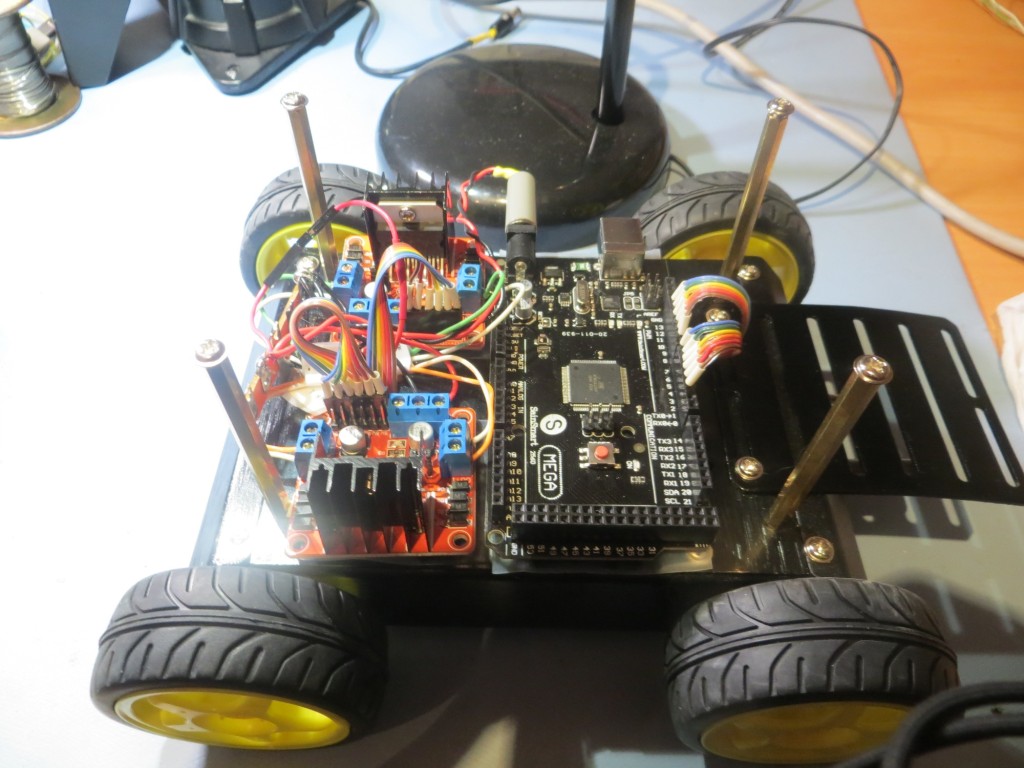

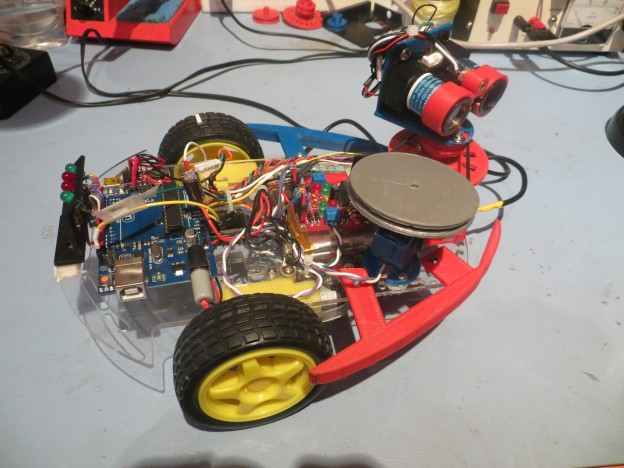

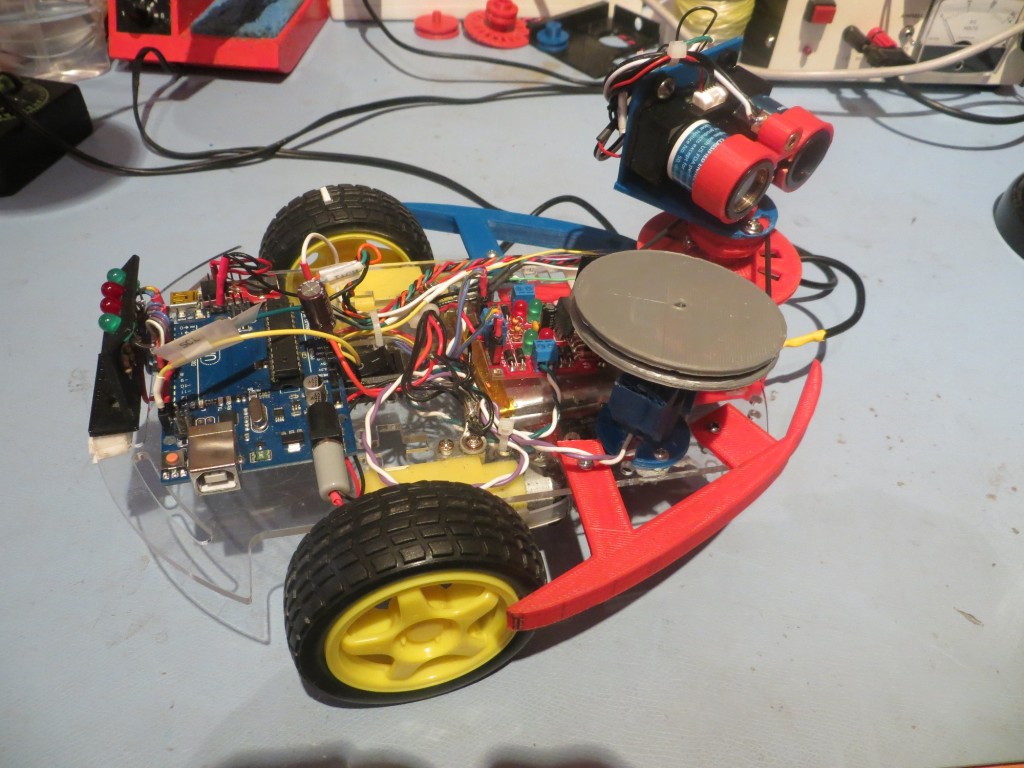

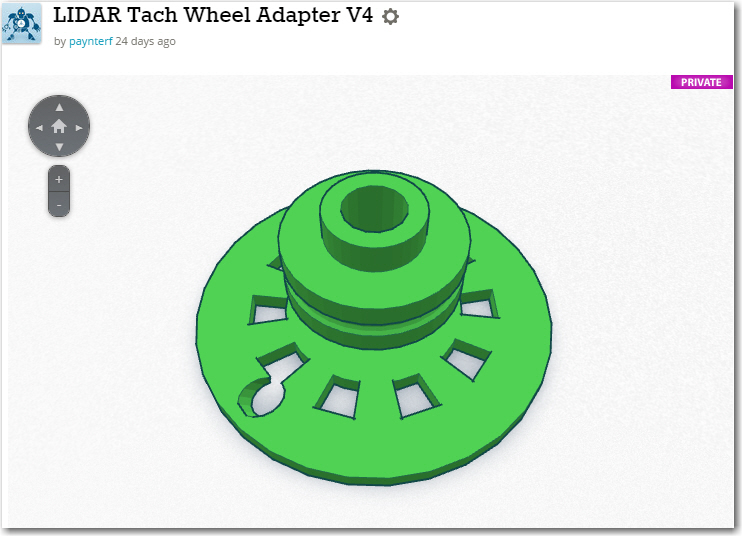

Over the last few weeks I have been reworking Wall-E into the new configuration. First I had to disassemble the spinning LIDAR system and all its support elements (Adafruit slip-ring, motors and pulleys, speed control tachometer, etc). Then I re-mounted left and right ‘ping’ sensors (fortunately I had kept the appropriate 3D-printed mounting brackets), and then designed and 3D-printed a front-facing bracket for the LIDAR unit. While I was at it, I 3D-printed a new left-side bumper to match the right-side one, and I also decided to retain the laser pointer from the previous version. The result is shown in the pictures below.

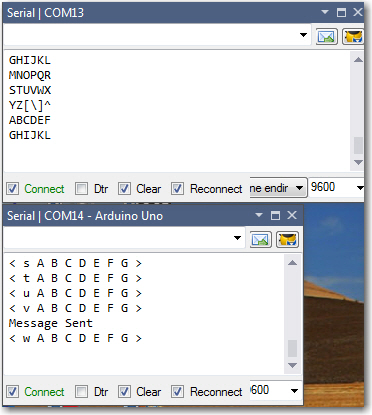

After getting all the physical and software rework done, I ran a series of bench tests to check the feasibility of using the LIDAR for ‘stuck’ detection. I was able to determine that by continuously calculating the mathematical variance of the last 50 LIDAR distance measurements, I could reliably detect the ‘stuck’ condition; while Wall-E was actually moving, this variance remained quite large, but it rapidly decreased to near zero when Wall-E stopped making progress. In addition, the instantaneous LIDAR measurements were found to be fast enough and accurate enough for obstacle detection (note here that I am currently using the much faster ‘Blue Label’ version of the LIDAR-Lite unit).
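The variance idea can be sketched in a few lines of plain C++. This is a minimal illustration, not the firmware running on Wall-E: the class name, method names, and the threshold value are all hypothetical, and only the 50-reading window comes from the description above. Refusing to declare ‘stuck’ until the window is full is also my assumption about a sensible default, not a documented part of the real code.

```cpp
#include <array>
#include <cstddef>

// Window length taken from the post; everything else here is illustrative.
constexpr std::size_t kWindowSize = 50;

class StuckDetector {
public:
    // varianceThreshold is an assumed tuning value (in distance units squared).
    explicit StuckDetector(double varianceThreshold)
        : threshold_(varianceThreshold) {}

    // Store one new distance reading, overwriting the oldest once the window is full.
    void addReading(double distance) {
        samples_[next_] = distance;
        next_ = (next_ + 1) % kWindowSize;
        if (count_ < kWindowSize) ++count_;
    }

    bool windowFull() const { return count_ == kWindowSize; }

    // Population variance of the readings stored so far (two-pass for clarity).
    double variance() const {
        if (count_ == 0) return 0.0;
        double sum = 0.0;
        for (std::size_t i = 0; i < count_; ++i) sum += samples_[i];
        const double mean = sum / static_cast<double>(count_);
        double sumSq = 0.0;
        for (std::size_t i = 0; i < count_; ++i) {
            const double diff = samples_[i] - mean;
            sumSq += diff * diff;
        }
        return sumSq / static_cast<double>(count_);
    }

    // 'Stuck' means: a full window of readings that have barely changed.
    bool isStuck() const { return windowFull() && variance() < threshold_; }

private:
    std::array<double, kWindowSize> samples_{};
    std::size_t next_ = 0;   // index of the slot to overwrite next
    std::size_t count_ = 0;  // number of valid readings, capped at kWindowSize
    double threshold_;
};
```

In use, the main loop would call `addReading()` with each new forward distance and check `isStuck()` afterwards. While the robot is closing on a wall the readings change steadily and the variance stays large; once it stalls, the window fills with nearly identical values and the variance collapses toward zero. On an Arduino Uno a real implementation would probably favor integer math or running sums over this two-pass floating-point version.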

Finally, I set Wall-E loose on the world (well, on the cats anyway) with some ‘field’ testing, with very encouraging results. The ‘stuck’ detection algorithm seems to work very well, with very few ‘false positives’, and the real-time obstacle detection scheme also seems to be very effective. Shown below is a video clip of one of the ‘field test’ runs. The significant events in the video are:

- 14 sec – 30 sec: Wall-E gets stuck on the coat rack, but gets away again. I *think* the reason it took so long (16 seconds) to figure out it was stuck is that the 50-element diagnostic array wasn’t fully populated with valid distance data in the 14 seconds from the start of the run to the point where it hit the coat rack.

- 1:14: Wall-E approaches the dreaded ‘stealth slippers’ and laughs them off. Apparently the ‘stealth slippers’ aren’t so stealthy to LIDAR ;-).

- 1:32: Wall-E backs up and turns around for no apparent reason. This may be an instance of a ‘false positive’ ‘stuck’ declaration, but I don’t really know one way or the other.

- 2:57: Wall-E gets stuck on a cat tree, but gets away again no problem. This time the ‘stuck’ declaration only took 5-6 seconds – a much more reasonable number.

- 3:29: Wall-E gets stuck on a pair of shoes. This one is significant because the LIDAR unit is shooting over the toe of the shoe, and Wall-E is wriggling around a bit. But, while it took a little longer (approx 20 sec), Wall-E did manage to get away successfully!

So, it appears that at least some of my original goals for a wall-following robot have been met. In my first post on the idea of a wall-following robot back in January of this year, I laid out the following ‘system requirements’:

- Follow walls and not get stuck

- Find and utilize a recharging station

- Act like a cat prey animal (i.e. a mouse or similar creature)

- Generate lots of fun and waste lots of time for the humans (me and my grandson) involved

So now Wall-E does seem to follow walls and not get stuck – check. It still cannot find or utilize a charging station, so that one has definitely not been met. With the laser pointer left over from the spinning-LIDAR version, it is definitely interesting to the cats, and one of them has had a grand time chasing the laser dot – check. Lastly, the Wall-E project has been hugely successful in generating lots of fun and wasting lots of time, so that’s a definite CHECK!! ;-).

Next Steps:

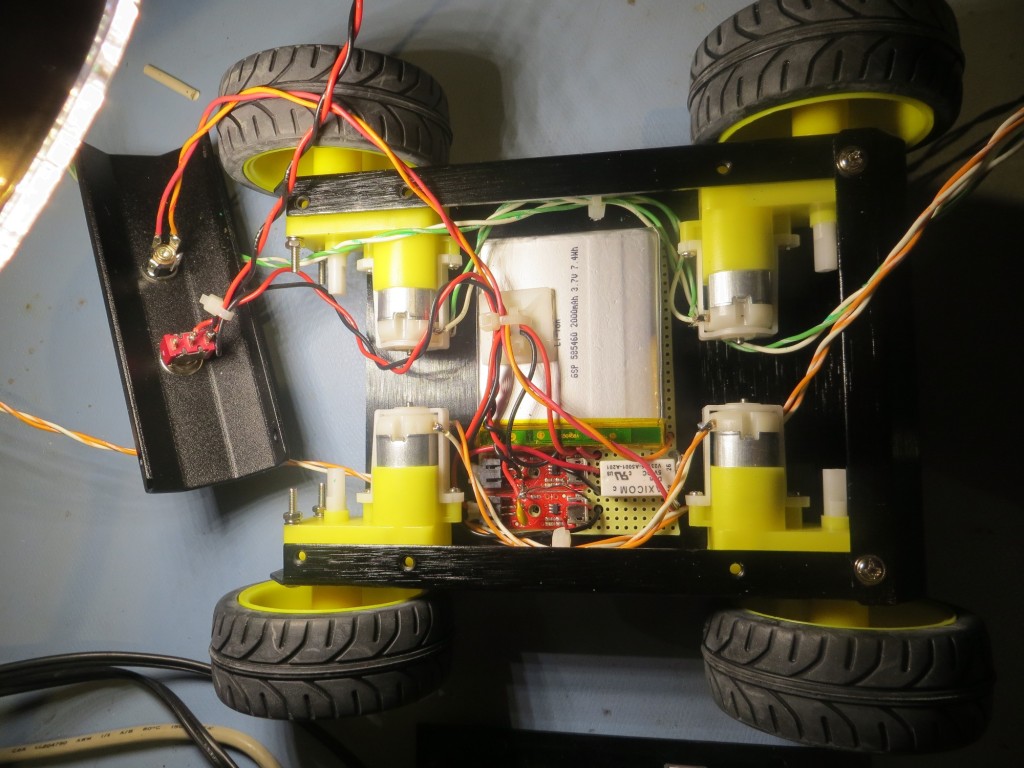

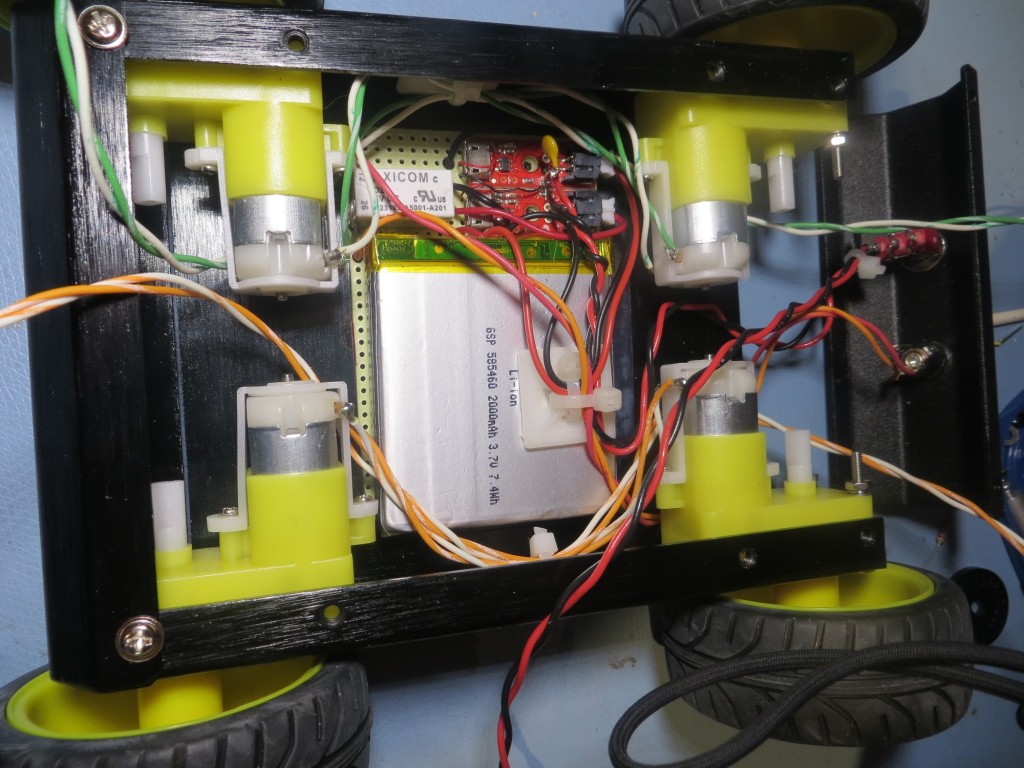

While I’d love to make some progress on the idea of getting Wall-E to find and utilize a charging station, I’m not sure that’s within my reach. However, I do plan to see if I can get my new(er) 4WD chassis up and running with the same sort of ping/LIDAR sensor setup, to see if it does a better job of navigating on carpet. Stay tuned!

Frank