/*

Name: FourWD_WallE2_V7.ino

Created: 9/24/2020 11:03:59 AM

Author: FRANKNEWXPS15\Frank

*/

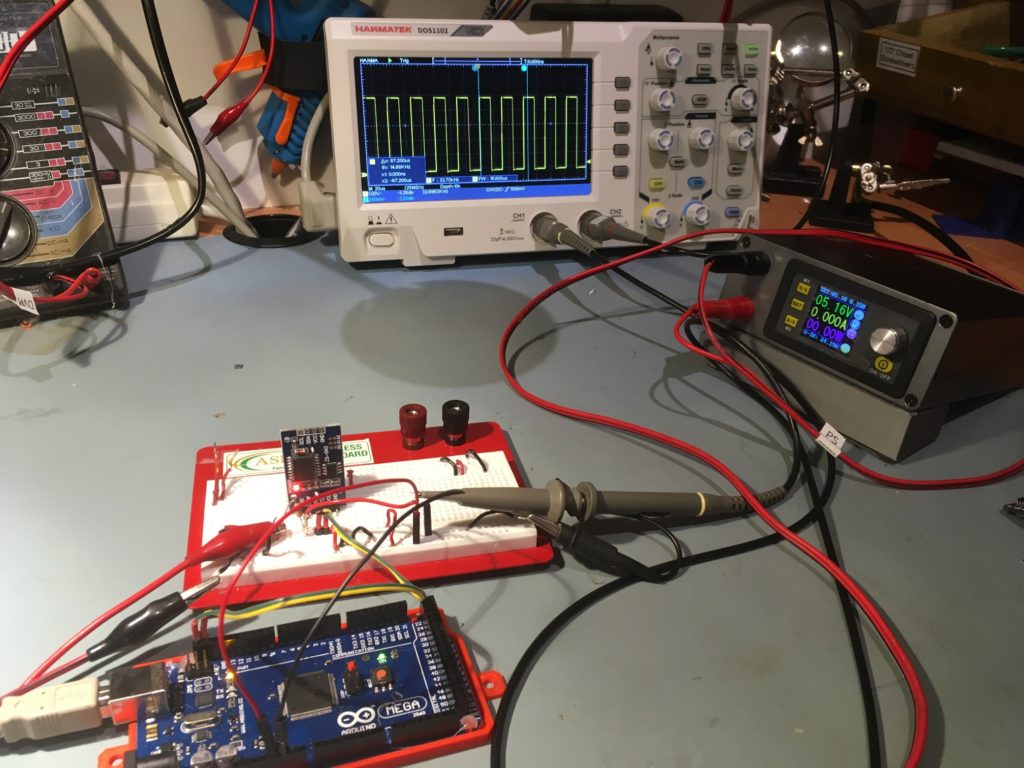

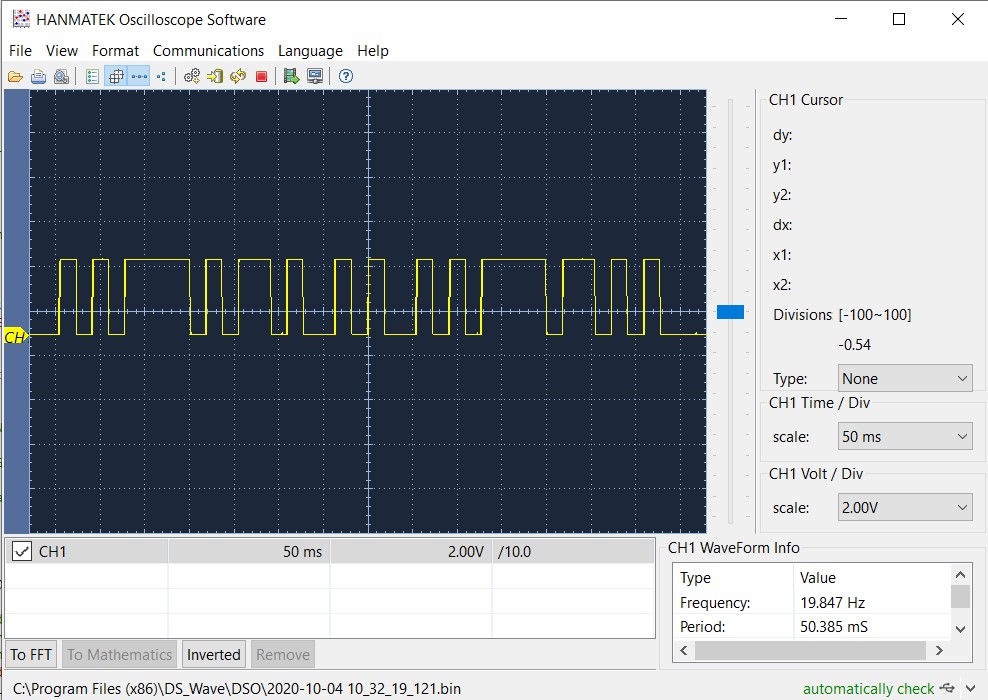

#define TIMER_INT_OUTPUT_PIN 51 //scope monitor pin

#pragma region INCLUDES

#include <avr/sleep.h> //needed for sleep_enable() & sleep_cpu() functions

#include <elapsedMillis.h>

#include <PrintEx.h> //allows printf-style printout syntax

#include "MPU6050_6Axis_MotionApps_V6_12.h" //changed to this version 10/05/19

#include "I2Cdev.h" //02/19/19: this includes SBWire.h

#include "I2C_Anything.h" //needed for sending float data over I2C

#include "Adafruit_FRAM_I2C.h"

#include "RTClib.h" //07/11/20 this MUST be the #include "file" form for the "Local files override.." option to work

#include <PID.h> //moved here 02/09/19

#include <limits.h> //added 04/19/19

#pragma endregion Includes

#pragma region DEFINES

//02/29/16 hardware defines

//#define NO_MOTORS

//#define NO_LIDAR

//#define NO_PINGS

//#define NO_IRDET //added 04/05/17 for daytime in-atrium testing (too much ambient IR)

//#define DISTANCES_ONLY //added 11/14/18 to just display distances in infinite loop

#define NO_FRAM_TELEMETRY //added 11/14/18

//#define FORCE_RTC_TO_LAST_COMPILE_TIME //added 02/18/19. Forces RTC to last compile time

#define NO_STUCK //added 03/10/19 to disable 'stuck' detection

//#define BATTERY_DISCHARGE //added 03/04/20 to discharge battery safely

#define NO_POST //added 04/12/20 to skip all the POST checks

#pragma endregion Program #Defines

#pragma region PRE_SETUP

StreamEx mySerial = Serial; //added 03/18/18 for printf-style printing

Adafruit_FRAM_I2C fram = Adafruit_FRAM_I2C(); //I2C addr = 0x50 (default)

//FramPacket.h holds 'inline' definition of CFRAMStatePacket class

/*this has to come after the following lines

#include "Adafruit_FRAM_I2C.h"

Adafruit_FRAM_I2C fram = Adafruit_FRAM_I2C();

StreamEx mySerial = Serial; //added 03/18/18 for printf-style printing

*/

#include "FramPacket.h"

//tri-sensor board

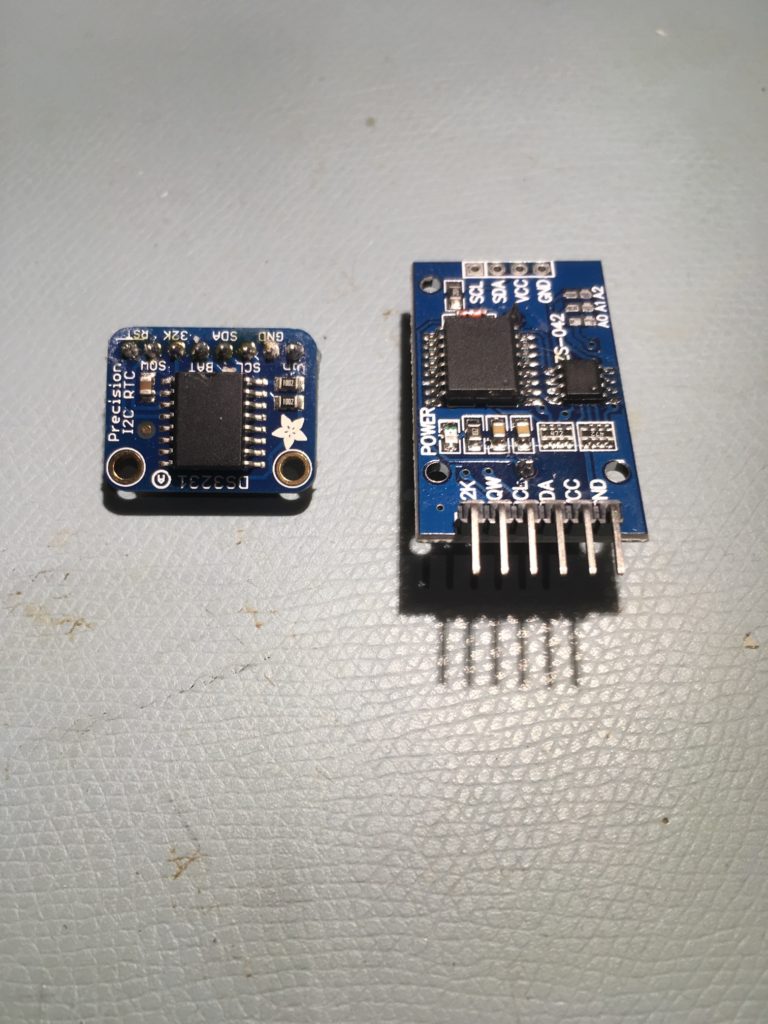

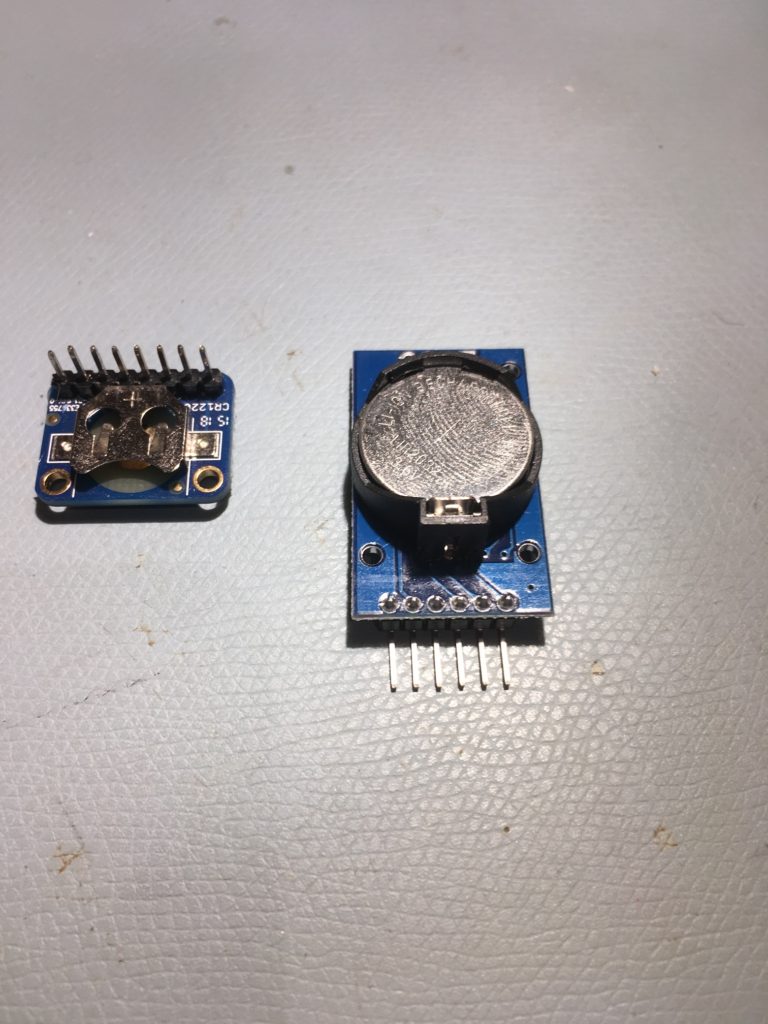

RTC_DS3231 rtc; //I2C addr = 0x68

MPU6050 mpu(0x69); //06/23/18 chg to AD0 high addr, using INT connected to Mega pin 2 (INT0)

CFRAMStatePacket FramPacket = CFRAMStatePacket(&fram); //this object is used for all FRAM transactions

const char daysOfTheWeek[7][12] = { "Sunday", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday" };//used for RTC date/time readouts

//added 01/30/17 for IR homing support

#pragma region IRHOMING

uint8_t IR_HOMING_MODULE_SLAVE_ADDR = 8; //uint8_t type reqd here for Wire.requestFrom() call

double IRHomingSetpoint, IRHomingLRSteeringVal, IRHomingOutput;//10/06/17 chg input variable name to something more descriptive

PID IRHomingPID(&IRHomingLRSteeringVal, &IRHomingOutput, &IRHomingSetpoint, 1000, 0.0, 200.0, REVERSE);//10/15/17 'final' setup

const long IR_BEAM_DETECTION_CHANNEL_MAX = 2621440;

const long IR_BEAM_DETECTION_THRESHOLD = 10000;

#pragma endregion IR Homing Parameters

#pragma region ENUMS

//01/05/16 enum types cannot be used as arguments or return types for functions due to Arduino pre-processor quirk

//12/20/15 added for navigation support

//01/15/16 revised to be consistent wtih nav modes identified in https://fpaynter.com/2016/01/making-wall-e2-smarter-using-karnaugh-maps/

enum NavCases

{

NAV_NONE = 0,

NAV_WALLTRK,

NAV_OBSTACLE,

NAV_STEPTURN,

NAV_STUCK,

NAV_OPENCNR

};

//04/10/20 Experiment with porting heading based tracking capability from two wheel robot

enum TrackingState

{

TRK_RIGHT_NONE,

TRK_RIGHT_CAPTURE,

TRK_RIGHT_MAINTAIN,

TRK_RIGHT_BACKUP_AND_TURN,

TRK_RIGHT_STEP_TURN

};

const char* NavStrArray[] = { "WallTrack", "Obstacle", "StepTurn", "Stuck", "OpenCorner" };

//03/08/17 added for new mode/state definitions; see https://fpaynter.com/2017/03/wall-e2-operating-mode-review/

enum OpModes

{

MODE_NONE = 0, //04/04/17 chg from MODE_DEFAULT and moved to top (zero) position

MODE_CHARGING,

MODE_IRHOMING,

MODE_WALLFOLLOW,

MODE_DEADBATTERY, //added 01/16/18 to handle dead battery case

MODE_DISCHARGE //added 03/04/20 to safely discharge the battery

};

const char* ModeStrArray[] = { "None", "Charge", "Home", "Wall", "DeadBatt", "Discharge" };

const char* LongModeStrArray[] = { "None", "Charging", "IR Homing", "Wall Following", "Dead Battery", "Discharging" };

enum WallTrackingCases

{

TRACKING_NONE = 0,

TRACKING_LEFT,

TRACKING_RIGHT,

TRACKING_NEITHER

};

const char* TrkStrArray[] = { "None", "Left", "Right", "Neither" };

#pragma endregion Tracking and Nav Case Enums

#pragma region BATTCONSTS

//03/10/15 added for battery charge level monitoring

//const int LOW_BATT_THRESH_VOLTS = 7.4; //50% chg per http://batteryuniversity.com/learn/article/lithium_based_batteries

const int LOW_BATT_THRESH_VOLTS = 8.4; //07/10/20 temp debug settingr//50% chg per http://batteryuniversity.com/learn/article/lithium_based_batteries

const int MAX_AD_VALUE = 1023;

const long BATT_CHG_TIMEOUT_SEC = 36000; //10 HRS, for test only

//const long BATT_CHG_TIMEOUT_MIN = 120; //2 hours. Chg to min vs sec 01/09/18

const float DEAD_BATT_THRESH_VOLTS = 6; //added 01/24/17

//const float DEAD_BATT_THRESH_VOLTS = 6.2; //chg to 6.2 03/02/19 per https://www.fpaynter.com/2019/03/better-battery-charging-for-wall-e2/

//const float FULL_BATT_VOLTS = 8.2; //added 03/17/18. Chg to 8.2 02/24/19

const float FULL_BATT_VOLTS = 8.4; //added 03/17/18. Chg to 8.4 03/05/20

const int MINIMUM_CHARGE_TIME_SEC = 10; //added 04/01/18

const float VOLTAGE_TO_CURRENT_RATIO = 0.75f; //Volts/Amp rev 03/01/19. Used for both 'Total' and 'Run' sensors

const float FULL_BATT_CURRENT_THRESHOLD = 0.5; //amps chg to 0.5A 03/02/19 per https://www.fpaynter.com/2019/03/better-battery-charging-for-wall-e2/

const int CURRENT_AVERAGE_NUMBER = 10; //added 03/01/19

const int VOLTS_AVERAGE_NUMBER = 5; //added 03/01/19

const int IR_HOMING_TELEMETRY_SPACING_MSEC = 200; //added 04/23/20

//battery fuel guage constants

const float _20PCT_BATT_VOLTS = DEAD_BATT_THRESH_VOLTS + 0.2f * (FULL_BATT_VOLTS - DEAD_BATT_THRESH_VOLTS);

const float _40PCT_BATT_VOLTS = DEAD_BATT_THRESH_VOLTS + 0.4f * (FULL_BATT_VOLTS - DEAD_BATT_THRESH_VOLTS);

const float _60PCT_BATT_VOLTS = DEAD_BATT_THRESH_VOLTS + 0.6f * (FULL_BATT_VOLTS - DEAD_BATT_THRESH_VOLTS);

const float _80PCT_BATT_VOLTS = DEAD_BATT_THRESH_VOLTS + 0.8f * (FULL_BATT_VOLTS - DEAD_BATT_THRESH_VOLTS);

const float ZENER_VOLTAGE_OFFSET = 5.84; //measured zener voltage

const float ADC_REF_VOLTS = 3.3; //03/27/18 now using analogReference(EXTERNAL) with Teensy 3.3V connected to AREF

#pragma endregion Battery Constants

#pragma region DISTANCE_MEASUREMENT_SUPPORT

//misc LIDAR and Ping sensor parameters

const int MIN_OBS_DIST_CM = 20; //rev 04/28/17 for better obstacle handling

const int CHG_STN_AVOIDANCE_DIST_CM = 30; //added 03/11/17 for charge stn avoidance

//const int STEPTURN_DIST_CM = 80; //rev 04/28/17 to be indep of MIN_OBS_DIST_CM

//const int STEPTURN_DIST_CM = 0; //rev 05/16/20 to temporarily disable

const int STEPTURN_DIST_CM = 50; //rev 06/29/20 to temporarily disable

const int MAX_FRONT_DISTANCE_CM = 400;

const int MAX_LR_DISTANCE_CM = 200; //04/19/15 now using sep parameters for front and side sensors

//const int MIN_PING_INTERVAL_MSEC = 200; //rev 03/12/16

//const int MIN_PING_INTERVAL_MSEC = 50; //rev 10/03/20

const int MIN_PING_INTERVAL_MSEC = 30; //rev 10/03/20

const int CHG_STN_FINAL_APPR_DIST_CM = 20; //added 03/11/17 for charge stn avoidance

//04/01/2015 added for 'stuck' detection support

const int FRONT_DIST_ARRAY_SIZE = 25; //04/28/19 - final value (I hope)

const int FRONT_DIST_AVG_WINDOW_SIZE = 3; //moved here & renamed 04/28/19

const int LR_PING_DIST_ARRAY_SIZE = 3; //04/28/19 added to reinstate l/r dist running avg

const int LR_PING_AVG_WINDOW_SIZE = 3; //added 04/28/19 so front & lr averages can differ

const int STUCK_FRONT_VARIANCE_THRESHOLD = 50; //chg to 50 04/28/17

//const int STUCK_FRONT_VARIANCE_THRESHOLD = 0; //chg to 0 09/15/18

const int NO_LIDAR_FRONT_VAR_VAL = 10 * STUCK_FRONT_VARIANCE_THRESHOLD; //01/16/19

uint16_t aFrontDist[FRONT_DIST_ARRAY_SIZE]; //04/18/19 rev to use uint16_t vs byte

//04/28/19 added to reinstate l/r dist running avg

//06/28/20 chg to uint_16 to accommodate change from cm to mm

//byte aLeftDist[LR_PING_DIST_ARRAY_SIZE];

//byte aRightDist[LR_PING_DIST_ARRAY_SIZE];

uint16_t aLeftDist[LR_PING_DIST_ARRAY_SIZE];

uint16_t aRightDist[LR_PING_DIST_ARRAY_SIZE];

//added 12/26/14 for wall following support

int prevleftdistval = 0;

int prevrightdistval = 0;

int prevfrontdistcm = 0;

int curMinObstacleDistance = MIN_OBS_DIST_CM;//added 03/11/17 for chg stn avoidance

//04/13/20 moved distance vars up here so can be initialized just before loop()

int leftdistval = 0;

int rightdistval = 0;

int frontdistval = 0;

//double frontvar = 0;

volatile double frontvar = 0; //08/11/20 now updated in timer1 ISR

#pragma endregion Distance Measurement Support

#pragma region MOTOR_PARAMETERS

//drive wheel speed parameters

const int MOTOR_SPEED_FULL = 200; //range is 0-255

const int MOTOR_SPEED_MAX = 255; //range is 0-255

const int MOTOR_SPEED_HALF = 127; //range is 0-255

const int MOTOR_SPEED_QTR = 75; //added 09/25/20

const int MOTOR_SPEED_LOW = 50; //added 01/22/15

const int MOTOR_SPEED_OFF = 0; //range is 0-255

const int MOTOR_SPEED_CAPTURE_OFFSET = 75; //added 06/21/20 for offset capture

//const int MOTOR_SPEED_CAPTURE_OFFSET = 125; //added 06/28/20 for offset capture

const int MOTOR_SPEED_ADJ_FACTOR = 40; //chg to 40 at 5:55pm

const int LEFT_SPEED_COMP_VAL_FWD = 15; //left speed compensation value

const int RIGHT_SPEED_COMP_VAL_FWD = -LEFT_SPEED_COMP_VAL_FWD; //right speed compensation value

const int LEFT_SPEED_COMP_VAL_REV = 5; //left speed compensation value

const int RIGHT_SPEED_COMP_VAL_REV = -LEFT_SPEED_COMP_VAL_REV; //right speed compensation value

//drive wheel direction constants

const boolean FWD_DIR = true;

const boolean REV_DIR = !FWD_DIR;

//Motor direction variables

boolean bLeftMotorDirIsFwd = true;

boolean bRightMotorDirIsFwd = true;

bool bIsForwardDir = true; //default is foward direction

#pragma endregion Motor Parameters

#pragma region HEADING_BASED_TURN_PARAMS

//09/11/18 new heading-based turn support constants

const int ESS_TURN_DEG = 45;

const int QUARTER_TURN_DEG = 90;

const float DEFAULT_HDG_JUMP_VAL = 20.f;

const int DEFAULT_TURN_DEG = QUARTER_TURN_DEG;

const float ROLLING_TURN_MAX_SEC_PER_DEG = 1 / 15.0; //used to limit time in rolling turns

//const int OFFSIDE_MOTOR_SPEED = MOTOR_SPEED_LOW;

//const int OFFSIDE_MOTOR_SPEED = 0; //03/04/20 debug

const int OFFSIDE_MOTOR_SPEED = 25; //03/04/20 turn debug - leave at this value for now

const int DRIVESIDE_MOTOR_SPEED_HIGH = MOTOR_SPEED_MAX;

const int DRIVESIDE_MOTOR_SPEED_HALF = MOTOR_SPEED_HALF;//added 02/16/20 for wall tracking support

const int DRIVESIDE_MOTOR_SPEED_LOW = MOTOR_SPEED_HALF;

const long MSEC_PER_HDG_CHECK = 100; //added 08/29/18

const float HDG_NEAR_MATCH_VAL = 0.9; //slow the turn down here

//const float HDG_FULL_MATCH_VAL = 0.98; //stop the turn here

const float HDG_FULL_MATCH_VAL = 0.99; //stop the turn here //rev 05/17/20

const float HDG_MIN_MATCH_VAL = 0.6; //added 09/08/18: don't start checking slope until turn is well started

elapsedMillis sinceLastTimeCheck; //used for rolling turn timeout

#pragma endregion Heading-based turn support parameters

#pragma region VL53L0X_TOF_LIDAR_SUPPORT

//const int ToFArray_PARALLEL_FIND_Kp = 800;

//const int ToFArray_PARALLEL_FIND_Kp = 80;

//const int ToFArray_PARALLEL_FIND_Kp = 120;

const int ToFArray_PARALLEL_FIND_Kp = 200;

const int ToFArray_PARALLEL_FIND_Ki = 20; //added 09/22/20

const int ToFArray_PARALLEL_FIND_Kd = 0; //added 09/22/20

//const float ToFArray_PARALLEL_FIND_SETPOINT = 0.05; //09/22/20 moved here

const float ToFArray_PARALLEL_FIND_SETPOINT = 0.01; //09/22/20 moved here

const int ToFArray_OFFSET_CAPTURE_Kp = 200;

//const int ToFArray_OFFSET_CAPTURE_Ki = 0; //06/28/20

const int ToFArray_OFFSET_CAPTURE_Ki = 50;

const int ToFArray_OFFSET_CAPTURE_Kd = 50;

const int ToFArray_OFFSET_TRACK_Kp = 10;

const int ToFArray_OFFSET_TRACK_Kd = 0;

//const int ToFArray_OFFSET_TRACK_Kd = 50;

double LeftSteeringVal, RightSteeringVal; //added 08/06/20

double ToFSetpoint, ToFSteeringVal, ToFOutput;//10/06/17 chg input variable name to something more descriptive

PID ToFArrayPID(&ToFSteeringVal, &ToFOutput, &ToFSetpoint, ToFArray_PARALLEL_FIND_Kp, 0.0, 0.0, DIRECT);//06/19/20 use this for now

const int ROTATE_TO_PARALLEL_TELEMETRY_SPACING_MSEC = 200;

const float PARALLEL_ORIENTATION_STEERING_VALUE_THRESHOLD = 0.1; //rev 06/21/20 - now using (F-R)/100

//const float CAPTURE_APPROACH_STEERING_VALUE = 0.2; //added 06/21/20 - now using (F-R)/100

const float CAPTURE_APPROACH_STEERING_VALUE = 0.4; //rev 06/28/20 - now using (F-R)/100

//const float WALL_OFFSET_CAPTURE_WINDOW_CM = 5.0; //just a guess

const float WALL_OFFSET_CAPTURE_WINDOW_CM = 2.0; //just a guess

const int VL53L0X_I2C_SLAVE_ADDRESS = 0x20; ////Teensy 3.5 VL53L0X ToF LIDAR controller

//Sensor data values

int Lidar_RightFront;

int Lidar_RightCenter;

int Lidar_RightRear;

int Lidar_LeftFront;

int Lidar_LeftCenter;

int Lidar_LeftRear;

elapsedMillis lastToFArrayTelemetryMsec; //used to space out telemetry prints

enum VL53L0X_REQUEST

{

VL53L0X_CENTERS_ONLY,

VL53L0X_RIGHT,

VL53L0X_LEFT,

VL53L0X_BOTH //added 08/06/20

};

#pragma endregion VL53L0X ToF LIDAR Support

//vvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvv//

//=================== START PIN ASSIGNMENTS ===================//

//vvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvv//

#pragma region MOTOR_PINS

//09/11/20 Now using two Adafruit DRV8871 drivers for all 4 motors

const int In1_Left = 10;

const int In2_Left = 11;

const int In1_Right = 8;

const int In2_Right = 9;

#pragma endregion Motor Pin Assignments

//Laser pointer

const int RED_LASER_DIODE_PIN = 7;

//LIDAR MODE pin (continuous mode)

const int LIDAR_MODE_PIN = 4; //mvd here 01/10/18

//BATT Monitor and IR Detector pin assignments added 01/30/17

const int BATT_MON_PIN = A1;

#pragma region CHG_SUPP_PINS

//04/22/20 bugfix - RUN & TOT input definitions were reversed

//const int RUN_CURR_PIN = A8; //02/25/19 bugfix 02/28/19 name chg

//const int TOT_CURR_PIN = A9; //02/24/19 now connected to 1NA619 charge current sensor

const int RUN_CURR_PIN = A9; //connected to 1NA619 between battery and rest of robot

const int TOT_CURR_PIN = A8; //connected to 1NA619 between charge plug and battery

const int CHG_CONNECT_PIN = 23; //goes HIGH when chg cable connected

//LED en/dis output pins

//03/15/18 revised for TP5100 module

const int FIN_LED_PIN = 35;

const int _80PCT_LED_PIN = 33;

const int _60PCT_LED_PIN = 31;

const int _40PCT_LED_PIN = 29;

const int _20PCT_LED_PIN = 27;

const int CHG_LED_PIN = 25; //pwr2 signal line repl by Batt2

long chgStartMsec;//added 02/24/17

#pragma endregion Charge Control/Status Pins

//05/03/17 moved below Chg supp LED defs so can re-use them

//03/15/18 revised for TP5100 module

#pragma region BACKUP_TURN_LED_PINS

//03/19/18 new plan - use 'full/half/qtr_left, full/half/qtr_right aliases

const int FULL_LEFT_LED_PIN = FIN_LED_PIN;

const int HALF_LEFT_LED_PIN = _80PCT_LED_PIN;

const int QTR_LEFT_LED_PIN = _60PCT_LED_PIN;

const int QTR_RIGHT_LED_PIN = _40PCT_LED_PIN;

const int HALF_RIGHT_LED_PIN = _20PCT_LED_PIN;

const int FULL_RIGHT_LED_PIN = CHG_LED_PIN;

#pragma endregion Rear Bumper Display LEDs

#pragma region SPEAKER_CONSTANTS

//const int SOS_PWM_PIN = 3;

const int SOS_PWM_PIN = 2; //chg to proper pin 09/13/2020

const int DOT_MS = 200;

const int DASH_MS = 800;

const int HIGHTONE = 1000;

const int LOWTONE = 500;

#pragma endregion Speaker Constants

//const int PWRDWN_INTERRUPT_PIN = 2; //added 03/27/18

//^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^//

//=================== END PIN ASSIGNMENTS ================//

//^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^//

//03/08/17 added Mode/state-specific telemetry header strings

#pragma region TELEMETRYSTRINGS

//const String IRHomingTelemStr = "Time\tBattV\tFin1\tFin2\tSteer\tPID_In\tPID_Out\tLSpd\tRSpd";

const String IRHomingTelemStr = "Time\tBattV\tFin1\tFin2\tSteer\tPID_Out\t\tLSpd\tRSpd\tFrontD";

//const String WallFollowTelemStr = "DateTime\tBatt\tMode\tTrack\tLeft\tRight\tFront\tVar\tLSpd\tRSpd";

//const char* WallFollowTelemStr = "DateTime\tBatt\tMode\tTrack\tLeft\tRight\tFront\tVar\tLSpd\tRSpd";

const char* WallFollowTelemStr = "Msec\tMode\tTrack\tFront\tCtr\tRear\tSteer\tOutput\tLSpd\tRSpd"; //09/01/20 PID tuning

//const String WallFollowTelemStr = "NewDist\tOldDist\tBmean\tBvar\tOldImean\tOldIvar\tOldD^2\tNewD^2\tImean\tIvar"; //04/17/19 added for Ivar debug

//const String WallFollowTelemStr = "New_D\tOld_D\tB_mean\tB_var\told_Im\told_Ivar\told_d^2\tnew_d^2\tI_mean\tIm_sq\toldIm_sq\tI_var\tB_uSec\tI_uSec\n";

//const String ChargingTelemStr = "ChgSec\tBattV\tTotalI\tRunI\tChgI\tATotI\tARunI\tChrging\n"; //rev 02/28/19 to chg 'BattI' to 'TotI', added 'RunI', ChgI

const String ChargingTelemStr = "ChgSec\tBattV\tTotalI\tRunI\tChgI\n"; //rev 05/02/20

#pragma endregion Mode-specific telemetry header strings

#pragma region LOOP_VARS

//loop() variables

double deltaD = 0;

double olddist = 0;

double newdist = 0;

boolean bStuck = false;

int leftspeednum = MOTOR_SPEED_HALF;

int rightspeednum = MOTOR_SPEED_HALF;

elapsedMillis sinceLastNavUpdateMsec; //added 10/15/18 to replace lastmillisec

NavCases NavCase = NAV_WALLTRK;

WallTrackingCases TrackingCase = TRACKING_NEITHER; //added 01/05/16

WallTrackingCases PrevTrackingCase = TRACKING_LEFT;

OpModes PrevOpMode = MODE_NONE; //added 03/08/17, rev to MODE_NONE 04/04/17

OpModes CurrentOpMode = MODE_NONE; //added 10/13/17 so can use in motor speed setting routines

//04/10/20 added for experiment to port heading based wall tracking from two wheel robot

TrackingState CurrentTrackingState = TRK_RIGHT_NONE;

TrackingState PrevTrackingState = TRK_RIGHT_NONE;

//02/13/16 added for 'pause' debug

int m_FinalLeftSpeed = 0;

int m_FinalRightSpeed = 0;

//11/03/18 added for new incremental variance calc

double last_incvar = 0;

double last_incmean = 0;

elapsedMillis lastHomingTelemetryMsec; //used to space out telemetry prints

#pragma endregion Loop Variables

#pragma region I2C_VARS

#pragma endregion I2C related parameters

#pragma region TRISENSOR PARAMS

//FRAM constants/params

const int NUM_FRAM_BYTES_TO_CLEAR = 2000;

const int FIRST_TIME_STORAGE_ADDR = 2;//addr 0/1 reserved for nextFramWriteAddr

const int NEXTFRAMWRITEADDR_FRAMSTORAGELOCATION = 0;

const int NUM_TIMES_TO_DISPLAY = 10;

//const unsigned long FRAM_WRITE_INTERVAL_MSEC = 60000; //one minute

const unsigned long FRAM_WRITE_INTERVAL_MSEC = 6000; //one minute

int nextFramWriteAddr = FIRST_TIME_STORAGE_ADDR;

elapsedMillis sinceLastFRAMWriteMsec; //added 09/19/18

#pragma endregion TRISENSOR

#pragma region MPU6050_SUPPORT

uint8_t mpuIntStatus; // holds actual interrupt status byte from MPU. Used in Homer's Overflow routine

uint8_t devStatus; // return status after each device operation (0 = success, !0 = error)

uint16_t packetSize; // expected DMP packet size (default is 42 bytes)

uint16_t fifoCount; // count of all bytes currently in FIFO

uint8_t fifoBuffer[64]; // FIFO storage buffer

// orientation/motion vars

Quaternion q; // [w, x, y, z] quaternion container

VectorInt16 aa; // [x, y, z] accel sensor measurements

VectorInt16 aaReal; // [x, y, z] gravity-free accel sensor measurements

VectorInt16 aaWorld; // [x, y, z] world-frame accel sensor measurements

VectorFloat gravity; // [x, y, z] gravity vector

float euler[3]; // [psi, theta, phi] Euler angle container

float ypr[3]; // [yaw, pitch, roll] yaw/pitch/roll container and gravity vector

int GetPacketLoopCount = 0;

int OuterGetPacketLoopCount = 0;

//MPU6050 status flags

bool bMPU6050Ready = true;

bool dmpReady = false; // set true if DMP init was successful

volatile float IMUHdgValDeg = 0; //updated by UpdateIMUHdgValDeg()

const uint16_t MAX_GETPACKET_LOOPS = 100; //10/30/19 added for backup loop exit condition in GetCurrentFIFOPacket()

uint8_t GetCurrentFIFOPacket(uint8_t* data, uint8_t length, uint16_t max_loops = MAX_GETPACKET_LOOPS); //prototype here so can define a default param

bool bFirstTime = true;

#define MPU6050_CCW_INCREASES_YAWVAL //added 12/05/19

#pragma endregion MPU6050 Support

#pragma region FRAM/RTC Support

//RTC/FRAM/MPU6050 status flags

bool RTC_Avail = true;

bool bRTCLostPower = false; //added 10/17/18

bool bFRAMReady = true;

#pragma endregion FRAM/RTC Support

#pragma region WALL_FOLLOW_SUPPORT

//12/05/19 defiine 'TURNDIR_CCW' & 'TURN_CW' identifiers for better readability. Now I can call

//RollingTurn(TURNDIR_CCW,...) and RollingTurn(TURNDIR_CW) instead of just RollingTurn(true/false)

const bool TURNDIR_CCW = true;

const bool TURNDIR_CW = false;

const int DESIRED_WALL_OFFSET_DIST_CM = 30;

const int ROLLING_TURN_RADIUS_CM = 5;

//const int NINETY_DEG_FUDGE_FACTOR_CM = 5; //added 04/17/20

const int NINETY_DEG_FUDGE_FACTOR_CM = 10; //added 04/17/20

const int WALL_APPR_ERR_WIN_MULTFACT = 2; //added 08/12/19

const int WALL_APPR_MAX_AGGREGATE_CUT = 30; //added 05/09/20

const int WALL_TRK_ERR_WIN_MULTFACT = 1; //added 08/12/19

const int VERY_FAR_AWAY_CM = 5;

const int FAR_AWAY_CM = 2;

const int CLOSE_CM = 1;

const int VERY_FAR_AWAY_TURN_DEG = 20;

const int FAR_AWAY_TURN_DEG = 10;

const int CLOSE_TURN_DEG = 5;

const int TURN_INCREMENT_DEG = 10;

int m_PrevHdgCutDeg = 0;

bool m_PrevHdgCutDir = TURNDIR_CW; //CW

int m_PrevLeftDistCm = 0;

int m_PrevRightDistCm = 0;

int m_AggregateCutDeg = 0; //added 05/09/20

elapsedMillis sinceLastHdgCutUpdateMsec; //added 10/15/18 to replace lastmillisec

int m_TrkErrWinMult = WALL_APPR_ERR_WIN_MULTFACT; //used to increase close in response

bool bIsFirst180 = true; //added 01/25/20 for boomerang mode tracking

elapsedMillis mSecSinceLastParDistChk = 0; //added 02/14/20 to steepen distance/measurement slope

#pragma endregion Wall Following Support

#pragma endregion PRE_SETUP

#pragma region TIMER_ISR

//08/10/20 added timer ISR

volatile bool bIsStuck = false;

volatile bool bIsStuck_Slow = false; //08/12/20 added for half-speed offset capture operations

volatile bool bObstacleAhead = false;

volatile uint32_t Last_ISR_Msec;

volatile bool bTimeForNavUpdate = false;

//DEBUG!!

//uint32_t elapsedUsec = micros()-Last_ISR_Msec;

//mySerial.printf("MSec\tUSec\tFdist\tFvar\n");

//mySerial.printf("%lu\t%lu\t%d\t%2.3f\n", millis(), elaspsed, frontdist, frontvar);

//DEBUG!!

//

ISR(TIMER5_COMPA_vect) //timer1 interrupt 1Hz toggles pin 13 (LED)

{

//digitalWrite(TIMER_INT_OUTPUT_PIN, HIGH);

//delayMicroseconds(30);

//digitalWrite(TIMER_INT_OUTPUT_PIN, LOW);

bTimeForNavUpdate = true;

#ifndef NO_STUCK

uint16_t frontdist = GetFrontDistCm();

frontvar = CalcDistArrayVariance(frontdist, aFrontDist);

bIsStuck = frontvar < STUCK_FRONT_VARIANCE_THRESHOLD;

bIsStuck_Slow = frontvar < STUCK_FRONT_VARIANCE_THRESHOLD / 2;

bObstacleAhead = frontdist < MIN_OBS_DIST_CM;

#endif // !NO_STUCK

}

#pragma endregion TIMER_ISR

#pragma region WALL_OFFSET_TRACKING

const int WALL_OFFSET_TRACK_Kp = 100;

const int WALL_OFFSET_TRACK_Ki = 0;

const int WALL_OFFSET_TRACK_Kd = 0;

const double WALL_OFFSET_TRACK_SETPOINT_LIMIT = 0.3;

double WallTrackSteerVal, WallTrackOutput, WallTrackSetPoint;

PID WallTrackPID(&WallTrackSteerVal, &WallTrackOutput, &WallTrackSetPoint, WALL_OFFSET_TRACK_Kp, 0.0, 0.0, REVERSE);

#pragma endregion Wall Offset Tracking Support

#pragma region SETUP

void setup()

{

Serial.begin(115200);

delay(1000);

FramPacket = CFRAMStatePacket(&fram); //09/27/18 comes after Serial.begin(). Calls Wire.begin() & fram.begin()

#pragma region RTC

if (rtc.begin()) //02/19/19 this now returns FALSE if RTC doesn't respond

{

Serial.println("Found RTC...");

delay(100);

bRTCLostPower = rtc.lostPower(); //added 10/17/18

mySerial.printf("rtc.lostPower() reports %d\n", bRTCLostPower);

if (rtc.lostPower())

{

Serial.println("RTC lost power. Setting RTC to last compile time");

rtc.adjust(DateTime(F(__DATE__), F(__TIME__)));// sets RTC to last compile date/time

}

#ifdef FORCE_RTC_TO_LAST_COMPILE_TIME

Serial.println("Forcing RTC to last compile time");

rtc.adjust(DateTime(F(__DATE__), F(__TIME__)));// sets RTC to last compile date/time

#endif

DateTime now = rtc.now();

char buffer[100];

memset(buffer, '\0', 100);

mySerial.printf("Retrieving Date/Time from RTC with rtc.now() = %ld\n", now.unixtime());

GetDayDateTimeStringFromDateTime(now, buffer);

Serial.print("Date/Time: "); Serial.println(buffer);

RTC_Avail = true;

}

else

{

Serial.println("Couldn't find RTC. Real-time clock functions won't be available");

RTC_Avail = false; //use this instead

}

if (RTC_Avail && rtc.lostPower())

{

DateTime Comp_dt = DateTime(F(__DATE__), F(__TIME__)); //__DATE__ & __TIME__ are environment variables)

uint8_t mydayofweek = Comp_dt.dayOfTheWeek(); //returns 0 for Sunday, 6 for Saturday dayOfTheWeek

int mymonth = Comp_dt.month();

int myday = Comp_dt.day();

int myyear = Comp_dt.year();

int myhour = Comp_dt.hour();

int mymin = Comp_dt.minute();

int mysec = Comp_dt.second();

long unixtime = Comp_dt.unixtime();

char* dayofweek = (char*)daysOfTheWeek[mydayofweek];

Serial.print("day of week value = "); Serial.println(mydayofweek);

Serial.print("day of week value = "); Serial.println(Comp_dt.dayOfTheWeek());

//mySerial.printf("RTC lost power - setting to datetime of last compile %ld (%s %4d/%02d/%02d at %02d:%02d:%02d)\n",

// dt.unixtime(), daysOfTheWeek[dt.dayOfTheWeek()], dt.year(), dt.month(), dt.day(),

// dt.hour(), dt.minute(), dt.second());

mySerial.printf("RTC lost power - setting to datetime of last compile %ld (%s %4d/%02d/%02d at %02d:%02d:%02d)\n",

unixtime, dayofweek, myyear, mymonth, myday, myhour, mymin, mysec);

rtc.adjust(Comp_dt);

}

#pragma endregion RTC

#pragma region FRAM

if (fram.begin())// you can stick a non-default i2c addr in here, e.g. begin(0x51);

{

Serial.println("Found I2C FRAM");

bFRAMReady = true;

}

else

{

Serial.println("I2C FRAM not identified ... check your connections?\r\n");

Serial.println("Will continue in case this processor doesn't support repeated start\r\n");

bFRAMReady = false;

}

// Read the stored nextFramWriteAddr value from the first two bytes, and the last stored packet

if (bFRAMReady)

{

fram.FRAM_I2C_readAnything(NEXTFRAMWRITEADDR_FRAMSTORAGELOCATION, nextFramWriteAddr);

mySerial.printf("Read %d (nextFramWriteAddr) from FRAM address %d\n",

nextFramWriteAddr, NEXTFRAMWRITEADDR_FRAMSTORAGELOCATION);

//if any packets have been written since last FRAM clear, display last-written one

if (nextFramWriteAddr > FIRST_TIME_STORAGE_ADDR)

{

int FramPacketSize = CFRAMStatePacket::packet_size;

mySerial.printf("printing packet written to FRAM at %d\n", nextFramWriteAddr - FramPacketSize);

FramPacket.Read(nextFramWriteAddr - FramPacketSize);

FramPacket.Print();

}

}

#pragma endregion FRAM

#pragma region MPU6050

Serial.println(F("Initializing MPU6050 ..."));

mpu.initialize();

// verify connection

Serial.println(F("Testing device connections..."));

Serial.println(mpu.testConnection() ? F("MPU6050 connection successful") : F("MPU6050 connection failed"));

float StartSec = 0; //used to time MPU6050 init

Serial.println(F("Initializing DMP..."));

devStatus = mpu.dmpInitialize();

// make sure it worked (returns 0 if successful)

if (devStatus == 0)

{

//12/06/19 don't need to do this every time. Above cal constants should work.

//// Calibration Time: generate offsets and calibrate our MPU6050

//mpu.CalibrateAccel(6);

//mpu.CalibrateGyro(6);

//Serial.println();

//mpu.PrintActiveOffsets();

// turn on the DMP, now that it's ready

Serial.println(F("Enabling DMP..."));

mpu.setDMPEnabled(true);

// set our DMP Ready flag so the main loop() function knows it's okay to use it

Serial.println(F("DMP ready! Waiting for MPU6050 drift rate to settle..."));

dmpReady = true;

// get expected DMP packet size for later comparison

packetSize = mpu.dmpGetFIFOPacketSize();

bMPU6050Ready = true;

StartSec = millis() / 1000.f;

mySerial.printf("\nMPU6050 Ready at %2.2f Sec\n", StartSec);

}

else

{

// ERROR!

// 1 = initial memory load failed

// 2 = DMP configuration updates failed

// (if it's going to break, usually the code will be 1)

Serial.print(F("DMP Initialization failed (code "));

Serial.print(devStatus);

Serial.println(F(")"));

bMPU6050Ready = false;

}

#pragma endregion MPU6050

//DEBUG!!

//print out Homing PID parameters

mySerial.printf("IRHomingPID Parameters (Kp,Ki,Kd,DIR) = (%2.2f,%2.2f,%2.2f,%d)\n",

IRHomingPID.GetKp(), IRHomingPID.GetKi(), IRHomingPID.GetKd(), IRHomingPID.GetDirection());

//DEBUG!!

#pragma region L/R/FRONT DISTANCE ARRAYS

InitFrontDistArray(); //08/12/20 code extracted to fcn so can call elsewhere

//04/28/19 put aLeftDist[], aRightDist[] arrays back in

for (int i = 0; i < LR_PING_DIST_ARRAY_SIZE; i++)

{

aLeftDist[i] = random(0, 200);

aRightDist[i] = random(0, 200);

////DEBUG!!

// mySerial.printf("aLeft/RightDist[%d] = %d, %d\n",i, aLeftDist[i], aRightDist[i]);

////DEBUG!!

}

#pragma endregion L/R/FRONT DISTANCE ARRAYS

#pragma region I/O PIN SETUP

//09/11/20 now using two DRV8871 motor drivers for all four motors

pinMode(In1_Left, OUTPUT);

pinMode(In2_Left, OUTPUT);

pinMode(In1_Right, OUTPUT);

pinMode(In2_Right, OUTPUT);

//Laser pointer

pinMode(RED_LASER_DIODE_PIN, OUTPUT);

//01/30/17 added for chg stn supp

pinMode(BATT_MON_PIN, INPUT);//Battery voltage monitor input

analogReference(EXTERNAL); //03/18/18 now using external 3.3V ref with 5.84V zener voltage shifter

//02/28/19 revised to repurpose CHG_SIG_PIN AND CHG_FIN_PIN for current measurement

pinMode(RUN_CURR_PIN, INPUT); //02/24/19 now connected to 'Run Current' 1NA619 charge current sensor

digitalWrite(RUN_CURR_PIN, LOW); //turn off the internal pullup resistor

pinMode(TOT_CURR_PIN, INPUT);//02/24/19 now connected to 'Total Current' 1NA619 charge current sensor

digitalWrite(TOT_CURR_PIN, LOW); //turn off the internal pullup resistor

pinMode(CHG_CONNECT_PIN, INPUT_PULLUP); //goes HIGH when chg cable connected

//Charge status LED en/dis pins

pinMode(FIN_LED_PIN, OUTPUT);

pinMode(_80PCT_LED_PIN, OUTPUT);

pinMode(_60PCT_LED_PIN, OUTPUT);

pinMode(_40PCT_LED_PIN, OUTPUT);

pinMode(_20PCT_LED_PIN, OUTPUT);

pinMode(CHG_LED_PIN, OUTPUT);

//added 03/27/18

//pinMode(SOS_PWM_PIN, OUTPUT);

//LIDAR mode pin

pinMode(LIDAR_MODE_PIN, INPUT); // Set LIDAR input monitor pin

#pragma endregion I/O PIN SETUP

//11/14/18 added this section for distance printout only

//08/06/20 revised for VL53L0X support

#ifdef DISTANCES_ONLY

sinceLastNavUpdateMsec = 0;

digitalWrite(RED_LASER_DIODE_PIN, HIGH); //enable the front laser dot

mySerial.printf("\n------------ DISTANCES ONLY MODE!!! -----------------\n\n");

int i = 0; //added 09/20/20 for in-line header display

mySerial.printf("Msec\tLFront\tLCenter\tLRear\tRFront\tRCenter\tRRear\n");

while (true)

{

//if (sinceLastNavUpdateMsec > MIN_PING_INTERVAL_MSEC)

//{

// sinceLastNavUpdateMsec -= MIN_PING_INTERVAL_MSEC;

//09/20/20 re-display the column headers

//if (i % 20 == 0)

//{

//}

GetRequestedVL53l0xValues(VL53L0X_BOTH);

//mySerial.printf("Both: %lu\t%d\t%d\t%d\t%d\t%d\t%d\n",

// millis(), Lidar_LeftFront, Lidar_LeftCenter, Lidar_LeftRear,

// Lidar_RightFront, Lidar_RightCenter, Lidar_RightRear);

mySerial.printf("%lu\t%d\t%d\t%d\t%d\t%d\t%d\n",

millis(), Lidar_LeftFront, Lidar_LeftCenter, Lidar_LeftRear,

Lidar_RightFront, Lidar_RightCenter, Lidar_RightRear);

//i++;

//}

}

#else

prevleftdistval = GetAvgLeftDistCm();

prevrightdistval = GetAvgRightDistCm();

prevfrontdistcm = GetFrontDistCm();

#endif // DISTANCES_ONLY

#pragma region TIMER_INTERRUPT

//set timer5 interrupt at (1000/MIN_PING_INTERVAL_MSEC)Hz 5Hz a/o 08/10/20

//09/18/20 can't use Timer1 cuz doing so conflicts with PWM on pins 10-12

cli();//stop interrupts

TCCR5A = 0;// set entire TCCR5A register to 0

TCCR5B = 0;// same for TCCR5B

TCNT5 = 0;//initialize counter value to 0

// set compare match register for 5hz increments

OCR5A = 3124;// = (16*10^6) / (5*1024) - 1 (must be <65536)

TCCR5B |= (1 << WGM52);// turn on CTC mode

TCCR5B |= (1 << CS52) | (1 << CS50);// Set CS10 and CS12 bits for 1024 prescaler

TIMSK5 |= (1 << OCIE5A);// enable timer compare interrupt

sei();//allow interrupts

#pragma endregion TIMER_INTERRUPT

pinMode(TIMER_INT_OUTPUT_PIN, OUTPUT);

#pragma region POST

//Check battery voltage

mySerial.printf("Checking Battery Voltage...\n");

mySerial.printf("Battery voltage = %2.3f\n", GetBattVoltage());

#ifndef NO_POST

//Power On Self-Test (POST)

//Check speaker

mySerial.printf("Checking Speaker...\n");

tone(SOS_PWM_PIN, HIGHTONE, DOT_MS); //returns immediately

delay(DOT_MS); //delay for LED viewing

tone(SOS_PWM_PIN, LOWTONE, DASH_MS); //returns immediately

delay(DOT_MS); //delay for LED viewing

tone(SOS_PWM_PIN, HIGHTONE, DOT_MS); //returns immediately

delay(DOT_MS); //delay for LED viewing

tone(SOS_PWM_PIN, LOWTONE, DASH_MS); //returns immediately

//Turn on LASER

mySerial.printf("Checking Laser pointer\n");

digitalWrite(RED_LASER_DIODE_PIN, HIGH);

delay(1000);

digitalWrite(RED_LASER_DIODE_PIN, LOW);

delay(1000);

digitalWrite(RED_LASER_DIODE_PIN, HIGH);

delay(1000);

digitalWrite(RED_LASER_DIODE_PIN, LOW);

//check for reasonable LIDAR value

#ifndef NO_LIDAR

mySerial.printf("LIDAR reports %d cm\n", GetFrontDistCm());

#else

mySerial.printf("LIDAR Disabled\n");

#endif // !NO_LIDAR

//

// mySerial.printf("Checking Left & Right Distance Sensors...\n");

//#ifndef NO_PINGS

// mySerial.printf("Left\tRight\n");

// for (size_t i = 0; i < 10; i++)

// {

// mySerial.printf("%d\t%d\n", GetLeftDistCm(), GetRightDistCm());

// }

//

//#else

// mySerial.printf("Distance Sensors Disabled\n");

//#endif // !NO_LIDAR

//

//set all chg status LEDs OFF (HIGH)

digitalWrite(FIN_LED_PIN, HIGH);

digitalWrite(_80PCT_LED_PIN, HIGH);

digitalWrite(_60PCT_LED_PIN, HIGH);

digitalWrite(_40PCT_LED_PIN, HIGH);

digitalWrite(_20PCT_LED_PIN, HIGH);

digitalWrite(CHG_LED_PIN, HIGH);

delay(1000);

//set all chg status LEDs ON (LOW)

digitalWrite(_40PCT_LED_PIN, LOW);

digitalWrite(_20PCT_LED_PIN, LOW);

digitalWrite(CHG_LED_PIN, LOW);

digitalWrite(_60PCT_LED_PIN, LOW);

digitalWrite(_80PCT_LED_PIN, LOW);

digitalWrite(FIN_LED_PIN, LOW);

//Disable LEDs in sequence from center out

delay(500);

digitalWrite(_40PCT_LED_PIN, HIGH);

digitalWrite(_60PCT_LED_PIN, HIGH);

delay(100);

digitalWrite(_20PCT_LED_PIN, HIGH);

digitalWrite(_80PCT_LED_PIN, HIGH);

delay(100);

digitalWrite(CHG_LED_PIN, HIGH);

digitalWrite(FIN_LED_PIN, HIGH);

delay(500);

//Enable LEDs in sequence from outside in

digitalWrite(CHG_LED_PIN, LOW);

digitalWrite(FIN_LED_PIN, LOW);

delay(100);

digitalWrite(_20PCT_LED_PIN, LOW);

digitalWrite(_80PCT_LED_PIN, LOW);

delay(100);

digitalWrite(_40PCT_LED_PIN, LOW);

digitalWrite(_60PCT_LED_PIN, LOW);

delay(500);

//Disable LEDs in sequence from center out

digitalWrite(_40PCT_LED_PIN, HIGH);

digitalWrite(_60PCT_LED_PIN, HIGH);

delay(100);

digitalWrite(_20PCT_LED_PIN, HIGH);

digitalWrite(_80PCT_LED_PIN, HIGH);

delay(100);

digitalWrite(CHG_LED_PIN, HIGH);

digitalWrite(FIN_LED_PIN, HIGH);

//10/08/17 make sure motors control lines are all in STOP state

StopLeftMotors();

StopRightMotors();

//run through motor test sequence

//10/06/17 added check for charger connection

//if (!IsChargerConnected())

//{

// //fwd 1 sec

// SetLeftMotorDir(FWD_DIR);

// SetRightMotorDir(FWD_DIR);

// RunBothMotorsMsec(1000, MOTOR_SPEED_HALF, MOTOR_SPEED_HALF); //run for 1 sec

// //reverse 1 sec

// SetLeftMotorDir(REV_DIR);

// SetRightMotorDir(REV_DIR);

// RunBothMotorsMsec(1000, MOTOR_SPEED_HALF, MOTOR_SPEED_HALF); //run for 1 sec

// StopBothMotors();

//}

//08/09/20 modified to use DRV8871 motor driver

if (!IsChargerConnected())

{

//fwd 1 sec

RunBothMotorsMsec(true, 1000, MOTOR_SPEED_HALF, MOTOR_SPEED_HALF); //run for 1 sec

//reverse 1 sec

RunBothMotorsMsec(false, 1000, MOTOR_SPEED_HALF, MOTOR_SPEED_HALF); //run for 1 sec

StopBothMotors();

}

#else

mySerial.printf("\n*********** POST checks Skipped!!********************\n\n");

#endif // !NO_POST

#pragma endregion POST

//09/20/20 have to do this for parallel finding to go the right way

mySerial.printf("Initializing Left & Right Distance Arrays...\n");

#ifndef NO_PINGS

mySerial.printf("Left\tRight\n");

for (size_t i = 0; i < LR_PING_DIST_ARRAY_SIZE; i++)

{

int leftdist = GetLeftDistCm();

int rightdist = GetRightDistCm();

mySerial.printf("%d\t%d\n", leftdist, rightdist);

UpdateLRDistanceArrays(leftdist, rightdist);

}

#else

mySerial.printf("Distance Sensors Disabled\n");

#endif // !NO_LIDAR

////01/26/15 start the robot going straight

MoveAhead(MOTOR_SPEED_LOW, MOTOR_SPEED_LOW);

//04/13/20 initialize just before loop()

leftdistval = GetLeftDistCm();

rightdistval = GetRightDistCm();

frontdistval = GetFrontDistCm();

sinceLastNavUpdateMsec = 0; //added 10/15/18

sinceLastFRAMWriteMsec = 0; //added 09/19/18

mySerial.printf("sinceLastNavUpdateMsec in setup() = %lu\n", (uint32_t)sinceLastNavUpdateMsec);

#pragma endregion Setup

//09/24/20 set WallOffsetTrackingPID parameters here

WallTrackPID.SetTunings(WALL_OFFSET_TRACK_Kp, WALL_OFFSET_TRACK_Ki, WALL_OFFSET_TRACK_Kd); //try more aggresive than capture, but less than parallel rotate

WallTrackPID.SetOutputLimits(-MOTOR_SPEED_QTR, MOTOR_SPEED_QTR);

WallTrackSetPoint = DESIRED_WALL_OFFSET_DIST_CM * 10; //now track the desired offset distance, in mm

mySerial.printf("WallTrackPID Parameters (Kp,Ki,Kd) = (%2.f,%2.f,%2.f)\n",

WallTrackPID.GetKp(), WallTrackPID.GetKi(), WallTrackPID.GetKd());

WallTrackPID.SetMode(AUTOMATIC);

mySerial.printf("mSec\tFront\tCenter\tRear\tSteer\tSetPt\tOut\tleftSpd\tRightSpd\n");

MoveAhead(MOTOR_SPEED_QTR, MOTOR_SPEED_QTR);

CaptureWallOffset(TRACKING_LEFT, DESIRED_WALL_OFFSET_DIST_CM + ROLLING_TURN_RADIUS_CM);

//delay for 2 sec, but check for user input during delay.

unsigned long now = millis();

while (millis()-now < 2000)

{

CheckForUserInput();

}

TrackLeftWall(DESIRED_WALL_OFFSET_DIST_CM); //infinite loop

} //setup

int ComputeCount = 0;

const int ComputeSkipsPerHeaderPrint = 20;

void loop()

{

CheckForUserInput();

GetRequestedVL53l0xValues(VL53L0X_LEFT);

WallTrackSteerVal = LeftSteeringVal;

//09/25/20 need a positive offset when ctr dist < desired. 4cm diff ->> 0.4 ->> approx 20 deg

WallTrackSetPoint = (double)(10*DESIRED_WALL_OFFSET_DIST_CM - Lidar_LeftCenter) / 100.f;

//update motor speeds, skipping bad values

if (!isnan(WallTrackSteerVal))

{

//By default, computes new output approx 10 times/sec (use SetSampleTime() to change)

if (WallTrackPID.Compute())//if Compute returns TRUE, IRHomingOutput has new value

{

digitalWrite(TIMER_INT_OUTPUT_PIN, HIGH);

delayMicroseconds(10);

digitalWrite(TIMER_INT_OUTPUT_PIN, LOW);

leftspeednum = MOTOR_SPEED_QTR + WallTrackOutput;

rightspeednum = MOTOR_SPEED_QTR - WallTrackOutput;

mySerial.printf("%lu\t%d\t%d\t%d\t%2.1f\t%2.1f\t%2.1f\t%d\t%d\n", millis(),

Lidar_LeftFront, Lidar_LeftCenter, Lidar_LeftRear, WallTrackSteerVal,

WallTrackSetPoint, WallTrackOutput, leftspeednum, rightspeednum);

MoveAhead(leftspeednum, rightspeednum);

ComputeCount++;

if (ComputeCount > ComputeSkipsPerHeaderPrint)

{

mySerial.printf("mSec\tFront\tCenter\tRear\tSteer\tSetPt\tOut\tleftSpd\tRightSpd\n");

ComputeCount = 0;

}

}

}

}

//06/18/20 Rewritten to utilize VL53L0X ToF sensor array

void TrackLeftWall(int tgt_offset)

{

WallTrackPID.SetTunings(WALL_OFFSET_TRACK_Kp, WALL_OFFSET_TRACK_Ki, WALL_OFFSET_TRACK_Kd); //try more aggresive than capture, but less than parallel rotate

WallTrackPID.SetOutputLimits(-MOTOR_SPEED_QTR, MOTOR_SPEED_QTR); //give PID full rein on motor speeds

mySerial.printf("WallOffsetTrackPID Parameters (Kp,Ki,Kd) = (%2.f,%2.f,%2.f)\n",

WallTrackPID.GetKp(), WallTrackPID.GetKi(), WallTrackPID.GetKd());

while (true)

{

GetRequestedVL53l0xValues(VL53L0X_LEFT);

WallTrackSteerVal = LeftSteeringVal; //computed by Teensy 3.5

//at 20mm from tgt offset, setpoint will be +/-0.2

WallTrackSetPoint = (float)(10 * tgt_offset - Lidar_LeftCenter) / 100.f; //10/04/20 positive value drives robot toward wall

if (WallTrackSetPoint > WALL_OFFSET_TRACK_SETPOINT_LIMIT) WallTrackSetPoint = WALL_OFFSET_TRACK_SETPOINT_LIMIT;

if (WallTrackSetPoint < -WALL_OFFSET_TRACK_SETPOINT_LIMIT) WallTrackSetPoint = -WALL_OFFSET_TRACK_SETPOINT_LIMIT;

//update motor speeds, skipping bad values

if (!isnan(WallTrackSteerVal))

{

//By default, computes new output approx 10 times/sec (use SetSampleTime() to change)

if (WallTrackPID.Compute())//if Compute returns TRUE, IRHomingOutput has new value

{

leftspeednum = MOTOR_SPEED_QTR - WallTrackOutput;

rightspeednum = MOTOR_SPEED_QTR + WallTrackOutput;

MoveAhead(leftspeednum, rightspeednum);

mySerial.printf("%lu\t%d\t%d\t%d\t%2.2f\t%2.2f\t%2.2f\t%d\t%d\n", millis(),

Lidar_LeftFront, Lidar_LeftCenter, Lidar_LeftRear, WallTrackSteerVal, WallTrackOutput,

WallTrackSetPoint, leftspeednum, rightspeednum);

}

}

//01/30/17 added for manual remote control via Wixel

CheckForUserInput();

}

}

#pragma region TIME/DATE FUNCTIONS

//02/18/19 copied from RTC Test project, and used to remove dependence on Time/TimeLib libraries

void GetDayDateTimeStringFromDateTime(DateTime dt, char* bufptr)

{

int mydayofweek = dt.dayOfTheWeek();

int myday = dt.day();

int mymonth = dt.month();

int myyear = dt.year();

int myhour = dt.hour();

int mymin = dt.minute();

int mysec = dt.second();

char* dayofweek = (char*)daysOfTheWeek[mydayofweek];

//now concatenate everything into the provided buffer

sprintf(bufptr, "%s %4d/%02d/%02d at %02d:%02d:%02d", dayofweek, mymonth, myday, myyear, myhour, mymin, mysec);

}

#pragma endregion Time & Date Support Functions

#pragma region DISTANCE_SUPPORT

//08/12/20 Extracted inline FRONT_DIST_ARRAY init code so can be called from anywhere

void InitFrontDistArray()

{

//04/01/15 initialize 'stuck detection' arrays

//06/17/20 re-wrote for better readability

//to ensure var > STUCK_FRONT_VARIANCE_THRESHOLD for first FRONT_DIST_ARRAY_SIZE loops

//array is initialized with sawtooth from 0 to MAX_FRONT_DISTANCE_CM

int newval = 0;

int bumpval = MAX_FRONT_DISTANCE_CM / FRONT_DIST_ARRAY_SIZE;

bool bgoingUp = true;

for (int i = 0; i < FRONT_DIST_ARRAY_SIZE; i++)

{

aFrontDist[i] = newval;

//DEBUG!!

//mySerial.printf("i = %d, newval = %d, aFrontdist[%d] = %d\n", i, newval, i, aFrontDist[i]);

//DEBUG!!

if (bgoingUp)

{

if (newval < MAX_FRONT_DISTANCE_CM - bumpval) //don't want newval > MAX_FRONT_DISTANCE_CM

{

newval += bumpval;

}

else

{

bgoingUp = false;

}

}

else

{

if (newval > bumpval) //don't want newval < 0

{

newval -= bumpval;

}

else

{

bgoingUp = true;

}

}

}

//04/19/19 init last_incmean & last_incvar to mean/var respectively

long sum = 0;

for (int i = 0; i < FRONT_DIST_ARRAY_SIZE; i++)

{

sum += aFrontDist[i]; //adds in rest of values

}

last_incmean = (float)sum / (float)FRONT_DIST_ARRAY_SIZE;

// Step2: calc new 'brute force' variance

float sumsquares = 0;

for (int i = 0; i < FRONT_DIST_ARRAY_SIZE; i++)

{

sumsquares += (aFrontDist[i] - last_incmean) * (aFrontDist[i] - last_incmean);

}

last_incvar = sumsquares / FRONT_DIST_ARRAY_SIZE;

mySerial.printf("aFrontDist Init: last_incmean = %3.2f, last_incvar = %3.2f\n", last_incmean, last_incvar);

}

float GetAvgFrontDistCm()

{

int dist = 0;

for (size_t i = 0; i < 3; i++)

{

dist += GetFrontDistCm();

delay(50);

//delay(10);

}

return dist / 3;

}

float GetAvgRightDistCm()

{

//Notes:

// 04/09/20 revised to compute proper running average of

// latest LR_PING_AVG_WINDOW_SIZE ping measurements

//DEBUG!!

//int totdist = 0;

//for (size_t i = 0; i < LR_PING_AVG_WINDOW_SIZE; i++)

//{

// totdist += aRightDist[i];

// //mySerial.printf("dist/total = %d\t%d\n", aRightDist[i], totdist);

//}

//float avg = (float)totdist / (float)LR_PING_AVG_WINDOW_SIZE;

//return avg;

//DEBUG!!

int rightavgdist_cm = 0;

for (int validx = 0; validx < LR_PING_AVG_WINDOW_SIZE; validx++)

{

rightavgdist_cm += aRightDist[LR_PING_DIST_ARRAY_SIZE - 1 - validx];

}

float avg = (float)rightavgdist_cm / (float)LR_PING_AVG_WINDOW_SIZE;

return avg;

}

float GetAvgLeftDistCm()

{

//Notes:

// 04/09/20 revised to compute proper running average of

// latest LR_PING_AVG_WINDOW_SIZE ping measurements

//int totdist = 0;

//for (size_t i = 0; i < LR_PING_AVG_WINDOW_SIZE; i++)

//{

// totdist += aLeftDist[i];

//}

//float avg = (float)totdist / (float)LR_PING_AVG_WINDOW_SIZE;

int leftavgdist_cm = 0;

for (int validx = 0; validx < LR_PING_AVG_WINDOW_SIZE; validx++)

{

leftavgdist_cm += aLeftDist[LR_PING_DIST_ARRAY_SIZE - 1 - validx];

}

float avg = (float)leftavgdist_cm / (float)LR_PING_AVG_WINDOW_SIZE;

return avg;

}

//11/05/15 added to get LIDAR measurement

int GetFrontDistCm()

{

//Notes:

// 12/05/15 chg to MODE line vs I2C

// 01/06/16 rev to return avg of prev distances on error

#ifndef NO_LIDAR

unsigned long pulse_width;

int LIDARdistCm;

pulse_width = pulseIn(LIDAR_MODE_PIN, HIGH); // Count how long the pulse is high in microseconds

LIDARdistCm = pulse_width / 10; // 10usec = 1 cm of distance for LIDAR-Lite

//mySerial.printf("Front Dist = %d at %lu mSec\n", LIDARdistCm, millis());

//chk for erroneous reading

if (LIDARdistCm == 0)

{

mySerial.printf("%lu: Error in GetFrontDistCm()\n", millis());

//replace with average of last three readings from aFrontDist

int avgdist = 0;

for (int i = 0; i < FRONT_DIST_AVG_WINDOW_SIZE; i++)

{

avgdist += aFrontDist[FRONT_DIST_ARRAY_SIZE - 1 - i];

}

avgdist = avgdist / FRONT_DIST_AVG_WINDOW_SIZE;

LIDARdistCm = avgdist;

}

//04/30/17 added limit detection/correction

LIDARdistCm = (LIDARdistCm > 0) ? LIDARdistCm : MAX_FRONT_DISTANCE_CM;

return LIDARdistCm;

#else

return 10; //safe number, I hope

#endif

}

//08/09/20 added no_param version f/u/by blocking functions like IRHomeToChgStn() and RotateToParallelOrientation()

double CalcDistArrayVariance()

{

frontdistval = GetFrontDistCm();

return CalcDistArrayVariance(frontdistval, aFrontDist);

}

double CalcDistArrayVariance(unsigned long newdistval, uint16_t* aDistArray)

{

//Purpose: Calculate Variance of input array

//Inputs: aDistArray = FRONT_DIST_ARRAY_SIZE array of integers representing left/right/front distances

//Outputs: Variance of selected array

//Plan:

// Step1: Calculate mean for array

// Step2: Sum up squared deviation of each array item from mean

// Step3: Divide squared deviation sum by number of array elements

//Notes:

// 11/01/18 this function takes about 1.8mSec - small compared to 200mSec loop interval

// 11/02/18 added distval to sig to facilitate incremental calc algorithm

// 11/12/18 re-wrote incr alg

// see C:\Users\Frank\Documents\Arduino\FourWD_WallE2_V1\Variance.xlsm

// and C:\Users\Frank\Documents\Arduino\VarianceCalcTest.ino

// 01/16/19 added 'return inc_var'

// 04/21/19 copied number overflow corrections from VarianceCalcTest.ino

// 04/28/19 commented out the 'brute force' sections - now using incr var exclusively

//unsigned long funcStartMicrosec = micros();

//11/03/18 update distance array, saving oldest for later use in incremental calcs

unsigned long oldestDistVal = aFrontDist[0];

for (int i = 0; i < FRONT_DIST_ARRAY_SIZE - 1; i++)

{

aFrontDist[i] = aFrontDist[i + 1];

}

aFrontDist[FRONT_DIST_ARRAY_SIZE - 1] = newdistval;

//11/02/18 now re-do the calculation using the incremental method, and compare the times

//mu_t = mu_(t-1) - dist_(t-N)/N + dist_t/N

//Example: mu_7 = mu_(6) - dist_(2)/N + dist_7/N

//var^2_t = var^2_(t-1) + dist^2_(t) - dist^2_(t-N) + mu^2_(t-1) - mu^2_t

//Example: var^2_7 = var^2_(6) + dist^2_(7) - dist^2_(t-N) + mu^2_(6) - mu^2_7

//DEBUG!!

//for (int i = 0; i < FRONT_DIST_ARRAY_SIZE; i++)

//{

// Serial.print("aDistArray["); Serial.print(i); Serial.print("] = "); Serial.println(aDistArray[i]);

//}

//DEBUG!!

double inc_mean = last_incmean - (double)oldestDistVal / (double)FRONT_DIST_ARRAY_SIZE + (double)newdistval / (double)FRONT_DIST_ARRAY_SIZE;

unsigned long olddist_squared = oldestDistVal * oldestDistVal;

unsigned long newdist_squared = newdistval * newdistval;

double last_incmean_squared = last_incmean * last_incmean;

double inc_mean_squared = inc_mean * inc_mean;

double inc_var = last_incvar + ((double)newdist_squared / FRONT_DIST_ARRAY_SIZE) - ((double)olddist_squared / FRONT_DIST_ARRAY_SIZE)

+ last_incmean_squared - inc_mean_squared;

//long uSecI = micros() - funcStartMicrosec - uSecB;

//DEBUG!!

//display results:

//mySerial.printf("%lu\t%lu\t%lu\t%4.2f\t%4.2f\t%4.2f\n", millis(),

// newdistval, oldestDistVal, last_incmean, last_incvar, inc_var);

//DEBUG!!

last_incvar = inc_var; //save for next time

last_incmean = inc_mean; //save for next time

return inc_var; //added 01/16/19

}

//04/28/18 added to update left/right dist arrays, so can reinstate incr l/r dist avg

void UpdateLRDistanceArrays(int leftdistval, int rightdistval)

{

//Purpose: Update the L/R distance arrays with the latest values, shifting all other values down 1

//Inputs:

// Latest left/right values from sensors

//Outputs:

// latest value placed at Array[LR_PING_DIST_ARRAY_SIZE - 1], all other values moved down one

//Plan:

// Step 1: For each array, shift all values down one (the 0th value drops into the bit bucket)

// Step 2: Place the latest reading at [LR_PING_DIST_ARRAY_SIZE - 1].

//Notes:

//DEBUG!!

mySerial.printf("UpdateLRDistanceArrays(left = %d, right = %d)", leftdistval, rightdistval);

//DEBUG!!

//Step 1: For each array, shift all values down one (the 0th value drops into the bit bucket)

for (int i = 0; i < LR_PING_DIST_ARRAY_SIZE - 1; i++)

//for (int i = 0; i < DIST_ARRAY_SIZE; i++)

{

aRightDist[i] = aRightDist[i + 1];

aLeftDist[i] = aLeftDist[i + 1];

}

//Step 2: Place the latest reading at [DIST_ARRAY_SIZE - 1].

aRightDist[LR_PING_DIST_ARRAY_SIZE - 1] = rightdistval;

aLeftDist[LR_PING_DIST_ARRAY_SIZE - 1] = leftdistval;

}

#pragma endregion Distance Measurement Support

#pragma region MOTOR SUPPORT

//09/08/20 modified for DRV8871 motor driver

void MoveReverse(int leftspeednum, int rightspeednum)

{

//Purpose: Move in reverse direction continuously - companion to MoveAhead()

//ProvEnA_Pinnce: G. Frank Paynter 09/08/18

//Inputs:

// leftspeednum = integer denoting speed (0=stop, 255 = full speed)

// rightspeednum = integer denoting speed (0=stop, 255 = full speed)

//Outputs: both drive Motors energized with the specified speed

//Plan:

// Step 1: Set reverse direction for both wheels

// Step 2: Run both Motors at specified speeds

//Notes:

// 01/22/20 now using Adafruit DRV8871 drivers

//Step 1: Set reverse direction and speed for both wheels

SetLeftMotorDirAndSpeed(REV_DIR, leftspeednum);

SetRightMotorDirAndSpeed(REV_DIR, rightspeednum);

}

//09/08/20 modified for DRV8871 motor driver

void MoveAhead(int leftspeednum, int rightspeednum)

{

//Purpose: Move ahead continuously

//ProvEnA_Pinnce: G. Frank Paynter and Danny Frank 01/24/2014

//Inputs:

// leftspeednum = integer denoting speed (0=stop, 255 = full speed)

// rightspeednum = integer denoting speed (0=stop, 255 = full speed)

//Outputs: both drive Motors energized with the specified speed

//Plan:

// Step 1: Set forward direction for both wheels

// Step 2: Run both Motors at specified speeds

//Notes:

// 01/22/20 now using Adafruit DRV8871 drivers

//mySerial.printf("InMoveAhead(%d,%d)\n", leftspeednum, rightspeednum);

//Step 1: Set forward direction and speed for both wheels

SetLeftMotorDirAndSpeed(true, leftspeednum);

SetRightMotorDirAndSpeed(true, rightspeednum);

}

//09/08/10 modified for DRV8871 motor driver

void StopLeftMotors()

{

analogWrite(In1_Left, MOTOR_SPEED_OFF);

analogWrite(In2_Left, MOTOR_SPEED_OFF);

}

void StopRightMotors()

{

analogWrite(In1_Right, MOTOR_SPEED_OFF);

analogWrite(In2_Right, MOTOR_SPEED_OFF);

}

//09/08/20 added bool bisFwd param for DRV8871 motor driver

void RunBothMotors(bool bisFwd, int leftspeednum, int rightspeednum)

{

//Purpose: Run both Motors at left/rightspeednum speeds

//Inputs:

// speednum = speed value (0 = OFF, 255 = full speed)

//Outputs: Both Motors run for timesec seconds at speednum speed

//Plan:

// Step 1: Apply drive to both wheels

//Notes:

// 01/14/15 - added left/right speed offset for straightness compensation

// 01/22/15 - added code to restrict fast/slow values

// 01/24/15 - revised for continuous run - no timing

// 01/26/15 - speednum modifications moved to UpdateWallFollowmyMotorspeeds()

// 12/07/15 - chg args from &int to int

//Step 1: Apply drive to both wheels

////DEBUG!!

// mySerial.printf("In RunBothMotors(%d,%d)\n", leftspeednum, rightspeednum);

////DEBUG!!

SetLeftMotorDirAndSpeed(bisFwd, leftspeednum);

SetRightMotorDirAndSpeed(bisFwd, rightspeednum);

}

//09/08/20 added bool bisFwd param for DRV8871 motor driver

void RunBothMotorsMsec(bool bisFwd, int timeMsec, int leftspeednum, int rightspeednum)

{

//Purpose: Run both Motors for timesec seconds at speednum speed

//Inputs:

// timesec = time in seconds to run the Motors

// speednum = speed value (0 = OFF, 255 = full speed)

//Outputs: Both Motors run for timesec seconds at speednum speed

//Plan:

// Step 1: Apply drive to both wheels

// Step 2: Delay timsec seconds

// Step 3: Remove drive from both wheels.

//Notes:

// 01/14/15 - added left/right speed offset for straightness compensation

// 01/22/15 - added code to restrict fast/slow values

// 11/25/15 - rev to use motor driver class object

// 09/08/20 added bool bisFwd param for DRV8871 motor driver

RunBothMotors(bisFwd, leftspeednum, rightspeednum);

//Step 2: Delay timsec seconds

delay(timeMsec);

}

//11/25/15 added for symmetry ;-).

void StopBothMotors()

{

StopLeftMotors();

StopRightMotors();

}

//01/10/18 reverted to regular ping(). median distance function takes too long

int GetLeftDistCm()

{

//Serial.print("LeftPing\t"); Serial.println(millis());

//Notes:

// 04/30/17 added limit detection/correction

// 07/20/20 rewritten for VL53L0X vs 'ping' sensors

GetRequestedVL53l0xValues(VL53L0X_LEFT);

int distCm = round((float)Lidar_LeftCenter / 10.f); //try to make this accurate

return distCm;

}

//06/17/20 rewritten for VL53L0X sensor

int GetRightDistCm()

{

//Purpose: Get right center VL53L0X distance in Cm

//Notes:

// 06/17/20: Copied from 'ping' version and adapted for VL53L0X

//DEBUG!!

//unsigned long now = millis();

//DEBUG!!

GetRequestedVL53l0xValues(VL53L0X_RIGHT);

int distCm = round((float)Lidar_RightCenter / 10.f); //try to make this accurate

//DEBUG!!

//mySerial.printf("GetRightDistCm() returned %d in %lu Msec\n", distCm, millis() - now);

//DEBUG!!

return distCm;

}

void SetLeftMotorDirAndSpeed(bool bIsFwd, int speed)

{

//mySerial.printf("SetLeftMotorDirAndSpeed(%d,%d)\n", bIsFwd, speed);

#ifndef NO_MOTORS

if (bIsFwd)

{

digitalWrite(In1_Left, LOW);

analogWrite(In2_Left, speed);

//mySerial.printf("In SetLeftMotorDirAndSpeed(%s, %d)\n",

// (bIsFwd == true) ? "true" : "false", speed);

}

else

{

digitalWrite(In2_Left, LOW);

analogWrite(In1_Left, speed);

}

#endif // !NO_MOTORS

}

void SetRightMotorDirAndSpeed(bool bIsFwd, int speed)

{

//mySerial.printf("In SetRightMotorDirAndSpeed(%s, %d)\n",

// (bIsFwd == true) ? "true" : "false", speed);

#ifndef NO_MOTORS

if (bIsFwd)

{

digitalWrite(In1_Right, LOW);

analogWrite(In2_Right, speed);

}

else

{

digitalWrite(In2_Right, LOW);

analogWrite(In1_Right, speed);

}

#endif // !NO_MOTORS

}

#pragma endregion Motor Support Functions

#pragma region CHARGE SUPPORT

bool IsIRBeamAvail()

{

//Purpose: Determine whether or not an IR beam is available for homing

//Inputs: call from loop()

//Outputs: true if an IR beam is detected, false otherwise

//Plan:

// Step1: Get analog levels from all 4 IR detectors

// Step2: return true if any of the 4 show detection level

//Notes:

// Might need some hysteresis here to avoid toggling in and out of the TRACKING_IRBEAM mode

// 02/21/17 read each det 3 times. If any sum is less than 3 X threshold, return true. Otherwise return false

// 10/15/17 rewritten to use info from IR Demod Module

// 04/05/20 revised to incorporate changes from 'I2C_Master_Tut5.ino'

//get latest info from IR Demod Module

long Fin1, Fin2;//04/05/20 needs to be 'long int' (4 bytes) here to match Teensy int (4 bytes)

float SteeringValue;

Wire.requestFrom(IR_HOMING_MODULE_SLAVE_ADDR, sizeof(Fin1) + sizeof(Fin2) + sizeof(SteeringValue));

I2C_readAnything(Fin1);

I2C_readAnything(Fin2);

I2C_readAnything(SteeringValue);

float total = Fin1 + Fin2;

////DEBUG!!

// mySerial.printf("Fin1 = %ld, Fin2 = %ld, SteeringValue = %3.2f\n", Fin1, Fin2, SteeringValue);

////DEBUG!!

return (total > IR_BEAM_DETECTION_THRESHOLD);

}

float GetTotalAmps()

{

//Purpose: Get total current in amps

//Inputs:

// Voltage on TOT_CURR_PIN is approximately Ichg*2 Amps

// VOLTAGE_TO_CURRENT_RATIO = measured voltage to current ratio

//Outputs:

// returns total robot current (chg current plus running current)

//Notes:

// 02/28/18 chg name from GetBattChgAmps() to GetTotalAmps()

int ITotAnalogReading = GetAverageAnalogReading(TOT_CURR_PIN, CURRENT_AVERAGE_NUMBER); //range is 0-1023

float ITotVolts = ((float)ITotAnalogReading / (float)MAX_AD_VALUE) * ADC_REF_VOLTS;

float ITotAmps = ITotVolts * VOLTAGE_TO_CURRENT_RATIO;

////DEBUG!!

//mySerial.printf("GetTotalAmps(): Areading, ITotVolts, ITotAmps = %d, %3.2f, %3.2f\n",

// ITotAnalogReading, ITotVolts, ITotAmps);

////DEBUG!!

return ITotAmps;

}

//added 02/28/19 to service 2nd 1Na169 current sensor

float GetRunningAmps()

{

//Purpose: Get robot running current in amps

//Inputs:

// Voltage on RUN_CURR_PIN is approximately IRun Amps

// VOLTAGE_TO_CURRENT_RATIO = measured voltage to current ratio for running current

//Outputs:

// returns robot running current

//Notes:

// 02/28/18 copied from GetTotalAmps() and adapted

//int IRunAnalogReading = analogRead(RUN_CURR_PIN); //range is 0-1023

int IRunAnalogReading = GetAverageAnalogReading(RUN_CURR_PIN, CURRENT_AVERAGE_NUMBER); //range is 0-1023

float IRunVolts = ((float)IRunAnalogReading / (float)MAX_AD_VALUE) * ADC_REF_VOLTS;

float IRunAmps = IRunVolts * VOLTAGE_TO_CURRENT_RATIO;

////DEBUG!!

// mySerial.printf("GetRunningAmps(): Areading, IRunvolts, IRunAmps = %d, %3.2f, %3.2f\n",

// IRunAnalogReading, IRunVolts,IRunAmps);

////DEBUG!!

return IRunAmps;

}

bool IsStillCharging()

{

//Purpose: Determine battery charge status

//Inputs:

// Battry charging current in amps from GetBattChgAmps()

// Battery voltage from GetBattV()

//Outputs:

// returns TRUE if battery voltage is below full charge voltage threshold

// AND charging current is above full charge current threshold. Otherwise returns FALSE

float BattV = GetBattVoltage();

float TotI = GetTotalAmps();

float RunI = GetRunningAmps();

////DEBUG!!

// mySerial.printf("IsStillCharging(): BattV = %2.3f, TotI = %2.3f, RunI = %2.3f\n",

// BattV, TotI, RunI);

////DEBUG!!

return (BattV < FULL_BATT_VOLTS&& TotI - RunI > FULL_BATT_CURRENT_THRESHOLD);

}

bool IsChargerConnected()

{

bool bConnect = digitalRead(CHG_CONNECT_PIN); //goes HIGH when chg cable connected

////DEBUG!!

// mySerial.printf("IsChargerConnected() returns %d\n", bConnect);

////DEBUG!!

return bConnect;

}

bool MonitorChargeUntilDone()

{

//Purpose: Monitor charging status until charge is complete

//Inputs: startMsec = millis() at the time of the function call

//Outputs:

// returns TRUE if charging completes successfully, FALSE otherwise

// provides mode-specific telemetry to PC via Wixel

//Plan:

// Step1: Blink charger display LEDs

// Step1: Get current time check for sufficiently elapsed time

// Step2: Get charger status signals, and echo them to display LEDs

// Step2: Send telemetry to PC via Serial port (Wixel)

// Step2: Check for end-of-charge or failure (don't know what this would be yet...)

//Notes:

// 03/11/17 for testing, rev to return as soon as connection dropped

// 05/21/17 rev to xmit telemetry before loop & then delay a bit before entering loop

// 05/21/17 abstracted status reporting code to separate function

// 10/16/17 removed startMsec from call sig

// 03/15/18 revised for TP5100 charger module

// 04/01/18 rev to always stay on charge for at least MINIMUM_CHARGE_TIME_SEC sec

// 02/24/19 rev to use new 1NA169 current sensor output

// 02/28/19 moved ChargeTelemetryString printout here from MODE_CHARGING case

//Step1: Get current time & check for sufficient elapsed time

int ElapsedChgTimeSec = 0;

float ElapsedChgTimeMin = 0; //added 05/02/20

bool bChgConn = digitalRead(CHG_CONNECT_PIN); //goes HIGH when chg cable connected

Serial.println(ChargingTelemStr); //moved here from main loop MODE_CHARGING case

bool bStillCharging = true;

//04/27/20 re-arranged for clarity

while (ElapsedChgTimeSec < MINIMUM_CHARGE_TIME_SEC ||

(bStillCharging

&& ElapsedChgTimeSec < BATT_CHG_TIMEOUT_SEC

&& bChgConn)

)

{

delay(1000); //one-second loop

ElapsedChgTimeSec++;

bStillCharging = IsStillCharging(); //02/24/19 - now using 1NA169 current sensor

bChgConn = digitalRead(CHG_CONNECT_PIN); //goes HIGH when chg cable connected

//05/02/20 rev to only print out 10 times/min

if (ElapsedChgTimeSec % 6 == 0)

{

ElapsedChgTimeMin = (float)ElapsedChgTimeSec / 60.f;

float BattV = GetBattVoltage();

float TotalI = GetTotalAmps(); //rev 02/28/19: chg name from 'Batt' to 'Total'

float RunI = GetRunningAmps();

//mySerial.printf("%d\t%2.4f\t%2.4f\t%2.4f\t%2.4f\n",

// ElapsedChgTimeSec, BattV, TotalI, RunI, TotalI - RunI); //rev 02/24/19 for 1Na169 sensor

mySerial.printf("%3.1f\t%2.4f\t%2.4f\t%2.4f\t%2.4f\n",

ElapsedChgTimeMin, BattV, TotalI, RunI, TotalI - RunI); //rev 02/24/19 for 1Na169 sensor

UpdateChgStatusLEDs(BattV, bStillCharging); //updates 'fuel guage' LEDs 04/22/20 added bStillCharging to sig

}

}

//Step2: Check for end-of-charge or failure (don't know what this would be yet...)

//if charging ran over time, something went wrong

//10/16/17 revised for better telemetry

//if (bChgConn == LOW) //charger unplugged

if (!bChgConn) //charger unplugged

{

Serial.print("Charge connection dropped after "); Serial.print(ElapsedChgTimeSec / 60);

Serial.println(" minutes");

return false;

}

else if (ElapsedChgTimeSec < BATT_CHG_TIMEOUT_SEC)

{

Serial.print("Charging Completed Successfully in "); Serial.print(ElapsedChgTimeSec / 60);

Serial.print(" minutes at "); Serial.println(millis());

return true;

}

else

{

Serial.print("Charging timout value of "); Serial.print(BATT_CHG_TIMEOUT_SEC);

Serial.print(" secs expired at "); Serial.println(millis());

return false;

}

}

float GetBattVoltage()

{

//02/18/17 get corrected battery voltage. Voltage reading is 1/3 actual Vbatt value

int analog_batt_reading = GetAverageAnalogReading(BATT_MON_PIN, VOLTS_AVERAGE_NUMBER);//rev 03/01/19 to average readings

float calc_volts = ZENER_VOLTAGE_OFFSET + ADC_REF_VOLTS * (double)analog_batt_reading / (double)MAX_AD_VALUE;

////DEBUG!!

// mySerial.printf("a/d = %d, calc = %2.2f\n", analog_batt_reading,calc_volts);

////DEBUG!!

return calc_volts;

}

bool ExecDisconManeuver()

{

//Purpose: Disconnect from charging station

//Inputs: Call from Charging Mode case block

//Outputs: Robot disconnects from charging station, backs up, and turns 90 away from near wall

//Plan:

// Step1: Turn OFF charger status LEDs (added 04/28/17)

// Step2: Determine which side wall is closer

// Step3: Back straight up for long enough to clear side rails

// Step4: Turn 90 away from near side wall

//Notes:

// 02/15/18 rev to use full speed to disengage, and new rolling turn routines

// 03/27/18 rev for TP5100 charging module

float batv = GetBattVoltage();

mySerial.printf("in ExecDisconManeuver() with BattV = %2.4f\n", batv);

//Step1: Turn OFF charger status LEDs (added 04/28/17)

//chg status LEDs are all enabled via a LOW digital output

//03/15/18 rev for TP5100

digitalWrite(FIN_LED_PIN, HIGH); //output is LOW (active) when Pw1_IN is HIGH/TRUE

digitalWrite(_80PCT_LED_PIN, HIGH); //output is LOW (active) when Pw2_IN is HIGH/TRUE

digitalWrite(_60PCT_LED_PIN, HIGH); //output is LOW (active) when Chg1_IN is LOW/FALSE

digitalWrite(_40PCT_LED_PIN, HIGH); //output is LOW (active) when Chg2_IN is LOW/FALSE

digitalWrite(_20PCT_LED_PIN, HIGH); //output is LOW (active) when Fin1_IN is LOW/FALSE

digitalWrite(CHG_LED_PIN, HIGH); //output is LOW (active) when Fin2_IN is LOW/FALSE

//Step2: Determine which side wall is closer. Ping sensors on 2nd deck can see over charger side rails

int leftdist = GetAvgLeftDistCm();

int rightdist = GetAvgRightDistCm();

Serial.print("leftdist = "); Serial.print(leftdist); Serial.print(", ");

Serial.print("rightdist = "); Serial.println(rightdist);

//Step3: Back straight up for long enough to clear side rails

//09/08/20 modified for DRV8871 motor driver

//SetLeftMotorDir(REV_DIR);

//SetRightMotorDir(REV_DIR);

//RunBothMotorsMsec(2000, MOTOR_SPEED_FULL, MOTOR_SPEED_FULL);

RunBothMotorsMsec(false, 2000, MOTOR_SPEED_FULL, MOTOR_SPEED_FULL);

//

//Step4: Turn 90 away from near side wall

RollingTurn(rightdist < leftdist, true, 90); //turn 90 deg away from nearest wall

////DEBUG!!

// MoveAhead(0, 0);

// delay(500);

// cli();

// sleep_enable();

// sleep_cpu();

////DEBUG!!

return true; //can't think of anything else at the moment

}

long GetBatRunDurationSec()

{

return 1; //dummy for now

}

bool IRHomeToChgStn(int avoidancedistCm, int initleftspeed, int initrightspeed)

{

//Purpose: Home in to charging station with optional avoidance manuever

//Inputs:

// avoidancedistCm = int denoting how far away to start avoidance maneuver

//Outputs:

// either connected to charging station or turn away at avoidancedistCm

//Plan:

// Step1: Initialize PID for homing

// Step2: If front distance < avoidancedistCm, turn 90 deg away from near wall

// otherwise continue homing until connected or stuck

//Notes:

// 03/19/17 rev to add initial left/right speed vals to calling sig

// 08/09/20 added IsStuck() call, limited to 5 calls/sec to avoid false positives

// 08/10/20 now using timer ISR for this so only need to check bIsStuck state

String trackstr = "IR"; //used for telemetry printouts

String str = ""; //telemetry string

bool result = true; //added 01/16/19 to supress warning

//elapsedMillis IsStuckCallElapsedMillis = 0; //added 08/09/20

//Step1: Initialize PID for homing

//set the target value

//IRHomingSetpoint = 0.05; //10/15/17 this seems to be the best value for now

//IRHomingSetpoint = -0.05; //07/12/20 new wheels, new motors

//IRHomingSetpoint = -0.15; //07/12/20 new wheels, new motors

//IRHomingSetpoint = -0.25; //07/12/20 new wheels, new motors

//IRHomingSetpoint = 0.25; //07/12/20 new wheels, new motors

//IRHomingSetpoint = 0.20; //07/12/20 new wheels, new motors

IRHomingSetpoint = 0.15; //07/12/20 new wheels, new motors

//turn the PID on

IRHomingPID.SetMode(AUTOMATIC);

//set the limits

IRHomingPID.SetOutputLimits(-MOTOR_SPEED_HALF, MOTOR_SPEED_HALF);

//11/02/18 added dist var to CalcDistArrayVariance sig

//08/09/20 now using no_param version of IsStuck();

int frontdist = GetFrontDistCm();

int bChgConn = LOW; //for testing

lastHomingTelemetryMsec = 0; //used to space out telemetry prints

//Step2: If front distance < avoidancedistCm, turn 90 deg away from near wall

// otherwise continue homing until connected or stuck

mySerial.printf("front dist = %d, lastHomingTelemetryMsec = ", frontdist);

Serial.println(lastHomingTelemetryMsec);

Serial.println(IRHomingTelemStr); //header for chg telemetry data

//08/10/20 now using ISR for bIsStuck state

while (bChgConn == LOW && !bIsStuck && frontdist > avoidancedistCm)

{

//05/02/20 turn on Laser

digitalWrite(RED_LASER_DIODE_PIN, HIGH);

//01/30/17 added to kill motors remotely using Wixel & serial port

CheckForUserInput();

//10/06/17 get new homing data from Teensy 3.2 IR Beacon Homing Module at address 8

//2nd param in Wire.requestFrom not used by slave - but its val % 32 must be in range of 1-32

//float tempfloat = 0; //IRHomingLRSteeringVal is a double, so use a temp float and then convert on assignment

long FinalValue1, FinalValue2;

Wire.requestFrom(IR_HOMING_MODULE_SLAVE_ADDR, sizeof(FinalValue1) + sizeof(FinalValue1) + sizeof(IRHomingLRSteeringVal));

I2C_readAnything(FinalValue1);

I2C_readAnything(FinalValue2);

I2C_readAnything(IRHomingLRSteeringVal);

//skip bad values

if (!isnan(IRHomingLRSteeringVal))

{

//By default, computes new output approx 10 times/sec (use SetSampleTime() to change)

if (IRHomingPID.Compute())//if Compute returns TRUE, IRHomingOutput has new value

{

leftspeednum = initleftspeed + IRHomingOutput;

rightspeednum = initrightspeed - IRHomingOutput;

//DEBUG!!

//print out telemetry every 400 msec or so

if (lastHomingTelemetryMsec > IR_HOMING_TELEMETRY_SPACING_MSEC) //c/o to get more resolution on steering dynamics

{

lastHomingTelemetryMsec -= IR_HOMING_TELEMETRY_SPACING_MSEC;

mySerial.printf("%lu\t%2.2f\t%lu\t%lu\t%2.2f\t%2.2f\t\t%d\t%d\t%d\n",

millis(), GetBattVoltage(), FinalValue1, FinalValue2, IRHomingLRSteeringVal, IRHomingOutput,

leftspeednum, rightspeednum, frontdist);

}

//DEBUG!!

//for testing, use charging LEDs for visualization of IRHomingLRSteeringVal

int FINout = (IRHomingLRSteeringVal > -1.0 && IRHomingLRSteeringVal < -0.5) ? LOW : HIGH;

int _80PCTout = (IRHomingLRSteeringVal >= -0.5 && IRHomingLRSteeringVal < -0.25) ? LOW : HIGH;

int _60PCTout = (IRHomingLRSteeringVal >= -0.25 && IRHomingLRSteeringVal < 0) ? LOW : HIGH;

int _40PCTout = (IRHomingLRSteeringVal >= 0 && IRHomingLRSteeringVal <= 0.25) ? LOW : HIGH;

int _20PCTout = (IRHomingLRSteeringVal > 0.25 && IRHomingLRSteeringVal < 0.5) ? LOW : HIGH;

int CHGout = (IRHomingLRSteeringVal > 0.5 && IRHomingLRSteeringVal < 1.0) ? LOW : HIGH;

digitalWrite(FIN_LED_PIN, FINout);

digitalWrite(_20PCT_LED_PIN, _20PCTout);

digitalWrite(_40PCT_LED_PIN, _40PCTout);

digitalWrite(_60PCT_LED_PIN, _60PCTout);

digitalWrite(_80PCT_LED_PIN, _80PCTout);

digitalWrite(CHG_LED_PIN, CHGout);

//mySerial.printf("FIN/20/40/60/80/CHG = %d/%d/%d/%d/%d/%d\n", FINout, _20PCTout, _40PCTout, _60PCTout, _80PCTout, CHGout);

//0424/20 experiment with going to full speed when near charge plug

if (frontdist <= CHG_STN_FINAL_APPR_DIST_CM)

{

leftspeednum = rightspeednum = MOTOR_SPEED_MAX;

mySerial.printf("Accelerating to Contact with frontdist = %d\n", frontdist);

}

MoveAhead(leftspeednum, rightspeednum);

}

}

//11/11/18 moved outside 'if (!isnan(IRHomingLRSteeringVal))' block so will always get executed

frontdist = GetFrontDistCm(); //this is also a loop exit condition

bChgConn = digitalRead(CHG_CONNECT_PIN); //goes HIGH when chg cable connected

//05/02/20 turn off Laser

digitalWrite(RED_LASER_DIODE_PIN, LOW);