Posted 13 March 2023

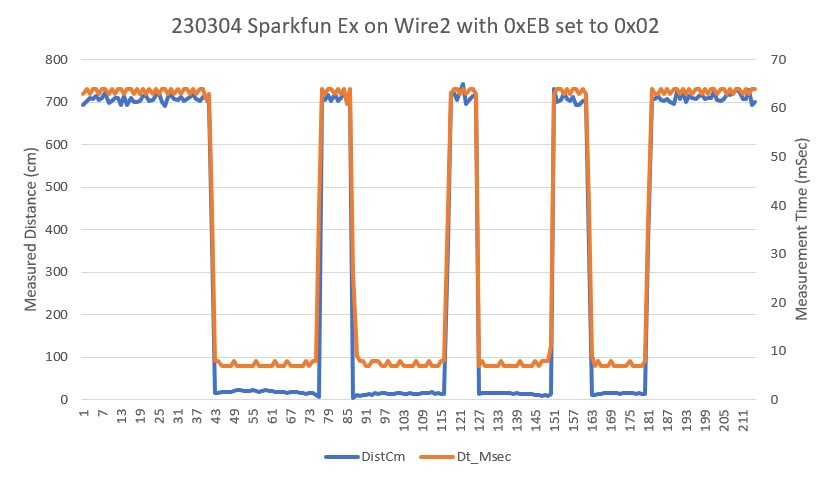

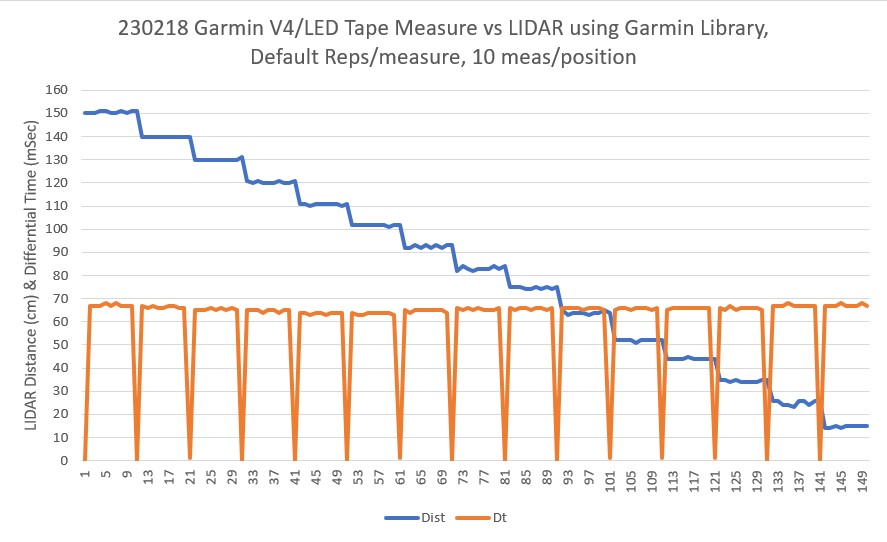

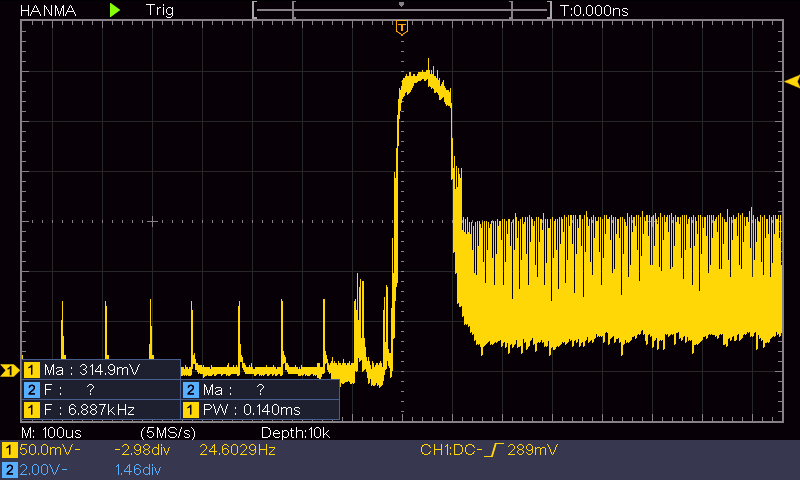

Now that I have the new Garmin LIDAR installed, it’s time to return to ‘real world’ testing. Here’s a short video of a run starting in our entry hallway and proceeding past two open doorways into our dining/living area:

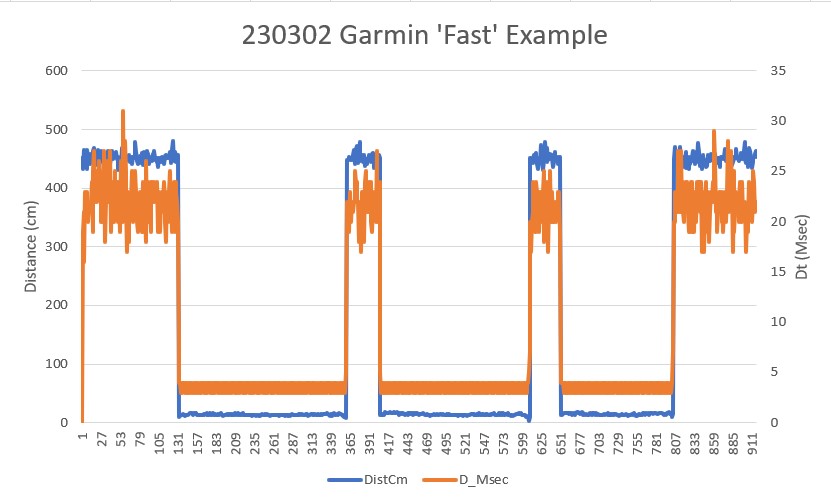

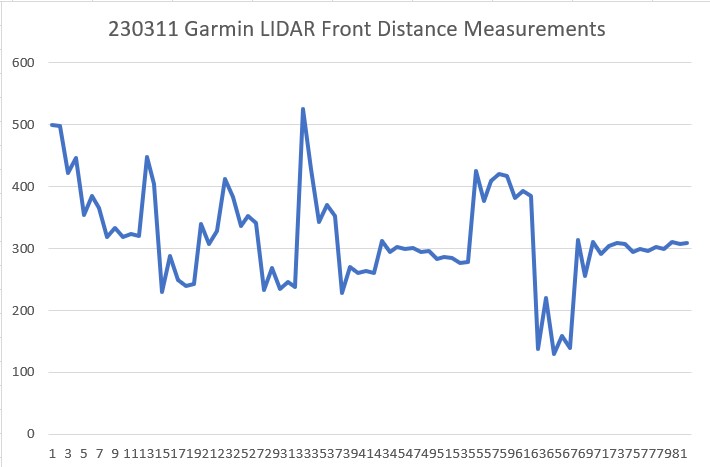

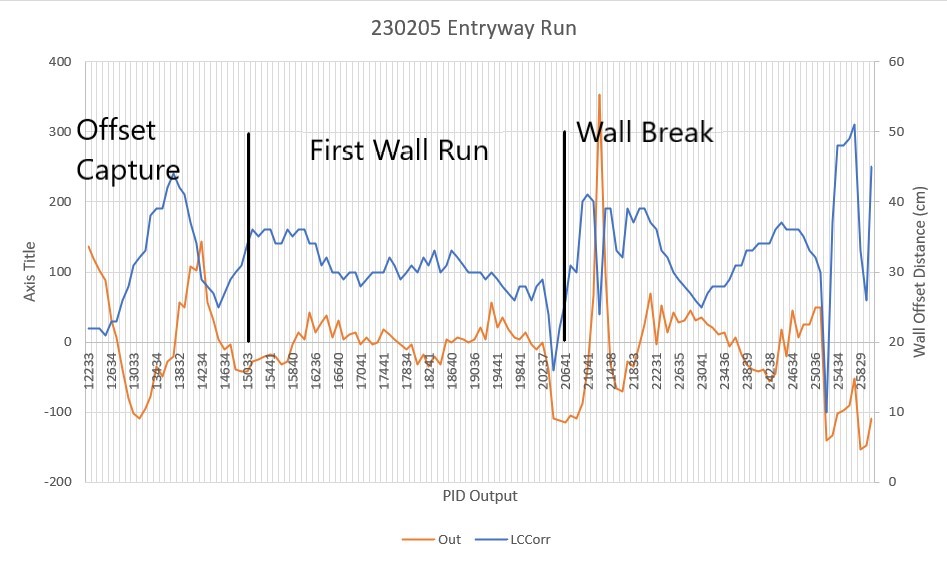

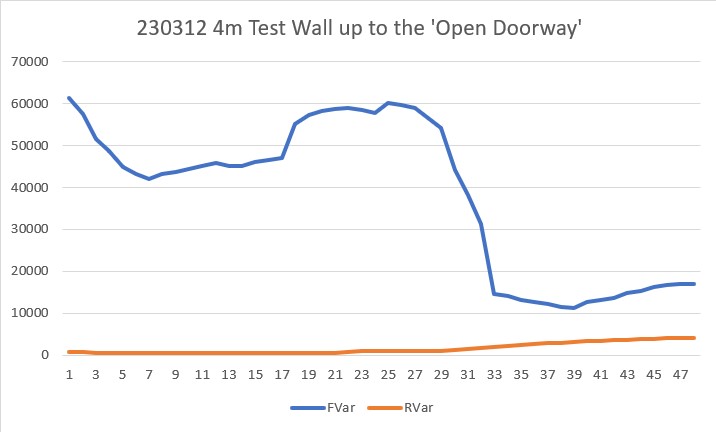

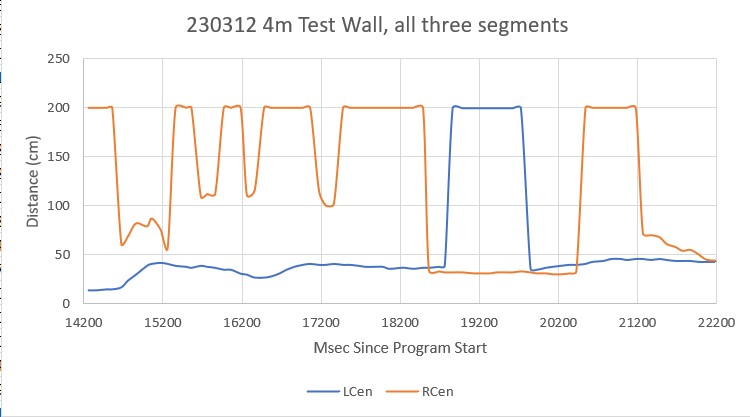

As can be seen in the video, WallE3 handles the oblique turn to the left and the two open doorways perfectly, but then loses its way, I think when the right-hand wall disappears entirely. I’m not sure what happened there. Here’s the telemetry from the entire run:

```
Msec LCen RCen Front Rear FVar RVar
14216 36.0 84.0 459 31.0 61316.5 713.9
14316 36.0 85.0 459 36.0 57641.1 677.7
14435 37.0 82.0 455 38.0 51058.0 643.3
14516 36.0 79.0 456 37.0 48133.6 612.2
14675 36.0 90.0 450 43.0 43071.8 580.7
14716 36.0 90.0 452 49.0 40894.0 549.9
14816 35.0 97.0 450 56.0 38961.2 520.6
14944 35.0 89.0 434 62.0 35959.5 494.0
15016 36.0 84.0 439 61.0 34760.5 468.6
15137 36.0 87.0 425 65.0 33153.4 445.7
15216 37.0 90.0 429 76.0 32610.9 429.6
15316 37.0 117.0 426 82.0 32262.8 418.8
15456 37.0 200.0 408 89.0 32288.2 415.1
15516 37.0 200.0 414 83.0 32460.1 405.2
15680 38.0 200.0 398 100.0 33358.1 415.1
15716 37.0 200.0 403 105.0 33888.5 431.4
15816 37.0 114.0 399 98.0 34500.8 436.2
15981 37.0 84.0 655 107.0 34627.8 453.1
16016 37.0 84.0 569 117.0 34634.2 485.9
16180 37.0 86.0 1000 126.0 41132.9 534.7
16216 37.0 95.0 856 117.0 42355.1 562.4
16316 36.0 200.0 952 200.0 44625.4 868.5
16444 36.0 200.0 511 200.0 44771.2 1158.2
16516 35.0 200.0 658 200.0 44567.3 1431.9
16647 34.0 200.0 499 200.0 44093.8 1690.3
16716 35.0 200.0 552 200.0 43435.6 1933.8
16816 33.0 200.0 516 200.0 42648.3 2163.0
16948 33.0 200.0 488 200.0 40456.0 2378.5
17016 33.0 200.0 497 200.0 38859.6 2580.8
17144 31.0 200.0 472 200.0 34694.3 2770.4
17216 31.0 200.0 480 200.0 31945.2 2947.7
17316 30.0 200.0 474 200.0 28734.2 3113.3
17480 30.0 200.0 529 200.0 20677.9 3267.7
17516 30.0 200.0 510 200.0 20615.4 3411.3
17679 30.0 200.0 684 200.0 21575.8 3544.7
17716 30.0 200.0 626 200.0 21700.8 3668.1
17816 30.0 200.0 664 200.0 21976.4 3782.2
17931 30.0 200.0 281 200.0 24192.7 3887.3
18016 32.0 200.0 408 200.0 24363.6 3983.9
18126 31.0 200.0 277 200.0 26523.7 4072.4
18216 32.0 200.0 320 200.0 27192.5 4153.1
18316 33.0 200.0 291 200.0 28089.1 4226.5
18426 33.0 200.0 258 200.0 30374.3 4293.0
18516 34.0 200.0 269 200.0 31355.0 4352.9
18629 34.0 200.0 247 200.0 33650.6 4406.7
18716 34.0 200.0 254 200.0 34675.4 4454.6
18816 34.0 200.0 249 200.0 35732.5 4497.0
18980 34.0 200.0 1000 200.0 45655.2 4534.3
19016 33.0 200.0 749 200.0 46579.3 4369.1
19180 33.0 200.0 381 200.0 46817.1 4163.8
19216 33.0 200.0 503 200.0 46549.5 3935.7
19316 33.0 200.0 421 200.0 46475.0 3708.8
19465 32.0 200.0 384 200.0 46474.7 3470.1
19516 32.0 200.0 396 200.0 46286.2 3205.4
19670 30.0 200.0 370 200.0 41748.6 2949.3
19716 31.0 200.0 378 200.0 36596.5 2702.4
19816 30.0 112.0 372 200.0 33825.3 2470.2
19981 29.0 99.0 368 200.0 29234.2 2248.6
20016 29.0 99.0 369 200.0 29291.3 2007.3
20180 29.0 105.0 960 200.0 38590.7 1768.8
20216 30.0 105.0 763 200.0 40327.4 1564.3
20316 29.0 109.0 894 200.0 43752.9 1372.4
20480 30.0 118.0 1000 200.0 54303.4 1197.2
20516 30.0 117.0 964 200.0 58593.1 987.9
20656 29.0 200.0 147 200.0 63526.8 834.3
20716 31.0 200.0 419 200.0 63624.2 690.5
20816 30.0 200.0 237 200.0 64887.5 513.7
20925 31.0 200.0 133 200.0 69615.9 362.0
21016 31.0 200.0 167 200.0 71348.9 237.4
21124 32.0 200.0 121 200.0 74684.3 135.0
21216 32.0 200.0 136 200.0 75331.8 0.0
21316 33.0 200.0 126 200.0 76393.0 0.0
21427 36.0 200.0 106 200.0 78663.8 0.0
21516 42.0 200.0 112 200.0 80091.6 0.0
21680 44.0 100.0 874 200.0 88066.8 0.0
21716 44.0 95.0 620 200.0 88310.9 0.0
21816 44.0 84.0 789 200.0 90480.9 0.0
21950 41.0 70.0 268 200.0 90559.5 0.0
22016 40.0 67.0 441 200.0 89859.8 0.0
22180 42.0 63.0 842 200.0 94125.1 0.0
22216 40.0 64.0 708 200.0 94173.2 0.0
22316 40.0 63.0 797 200.0 95054.4 0.0
22480 42.0 63.0 975 200.0 97893.1 0.0
22516 41.0 63.0 915 200.0 96349.6 0.0
22679 41.0 62.0 804 200.0 98380.4 0.0
22716 42.0 62.0 841 200.0 100113.0 0.0
22816 42.0 61.0 816 200.0 101802.3 0.0
22925 43.0 63.0 224 200.0 104800.0 0.0
23016 42.0 200.0 421 200.0 104624.7 0.0
23124 43.0 200.0 101 200.0 110791.7 0.0
23216 40.0 200.0 207 200.0 112225.4 0.0
23316 38.0 200.0 136 200.0 114619.6 0.0
23428 33.0 200.0 266 200.0 116132.4 0.0
23516 32.0 200.0 222 200.0 117308.0 0.0
23680 33.0 200.0 798 200.0 116311.5 0.0
23716 33.0 200.0 606 200.0 112256.7 0.0
23816 34.0 83.0 734 200.0 111960.1 0.0
23971 36.0 200.0 322 200.0 104123.7 0.0
24016 37.0 200.0 459 200.0 98337.4 0.0
24175 38.0 200.0 645 200.0 92677.5 0.0
24216 38.0 200.0 583 200.0 90939.8 0.0
24316 39.0 200.0 624 200.0 91353.5 0.0
24479 39.0 200.0 823 200.0 92171.1 0.0
24516 39.0 200.0 756 200.0 90667.2 0.0
24680 40.0 200.0 796 200.0 87823.2 0.0
24716 39.0 200.0 782 200.0 85383.6 0.0
24816 40.0 200.0 791 200.0 82935.7 0.0
24967 39.0 200.0 701 200.0 75131.1 0.0
25016 39.0 200.0 731 200.0 70765.3 0.0
25161 39.0 200.0 665 200.0 64631.1 0.0
25216 39.0 200.0 687 200.0 63313.8 0.0
25316 39.0 200.0 672 200.0 63403.2 0.0
25458 39.0 200.0 621 200.0 60461.9 0.0
25516 39.0 200.0 638 200.0 58124.0 0.0
25664 38.0 200.0 584 200.0 56519.6 0.0
25716 37.0 200.0 602 200.0 55444.0 0.0
25816 37.0 200.0 590 200.0 55242.8 0.0
25957 36.0 71.0 619 200.0 51665.6 0.0
26016 35.0 72.0 609 200.0 48720.9 0.0
26165 37.0 71.0 737 200.0 46505.4 0.0
26216 200.0 71.0 694 200.0 45767.0 0.0
26316 200.0 70.0 722 200.0 44802.7 0.0
In HandleAnomalousConditions with OPEN_DOORWAY error code detected
ANOMALY_OPEN_DOORWAY case detected
26323: Top of loop() - calling UpdateAllEnvironmentParameters()
Battery Voltage = 7.99
IRHomingValTotalAvg = 91
26338: gl_LeftCenterCm = 200.00 gl_RightCenterCm = 70.00
gl_LeftCenterCm > gl_RightCenterCm --> Calling TrackRightWallOffset(70)
TrackRightWallOffset(350.0, 0.0, 0.0, 70) called
TrackRightWallOffset: Start tracking offset of 70cm
Msec
26457 200.0 68.0 711 200.0 41847.9 0.0
26557 200.0 68.0 716 200.0 39478.4 0.0
26693 200.0 69.0 597 200.0 33803.1 0.0
26757 200.0 68.0 636 200.0 28547.0 0.0
26895 200.0 68.0 586 200.0 20239.8 0.0
26957 200.0 68.0 602 200.0 17479.2 0.0
27057 200.0 68.0 591 200.0 14651.6 0.0
27196 200.0 70.0 554 200.0 10917.9 0.0
27257 200.0 70.0 566 200.0 10608.9 0.0
27397 200.0 70.0 580 200.0 10586.1 0.0
27457 94.0 70.0 575 200.0 8594.3 0.0
27557 63.0 70.0 578 200.0 6542.2 0.0
27703 45.0 68.0 714 200.0 5895.6 0.0
27757 44.0 68.0 668 200.0 5892.7 0.0
27894 45.0 67.0 720 200.0 5871.4 0.0
27957 44.0 68.0 702 200.0 5391.5 0.0
28057 46.0 66.0 714 200.0 4923.0 0.0
28204 47.0 65.0 671 200.0 4355.7 0.0
28257 47.0 65.0 685 200.0 3974.5 0.0
28400 55.0 63.0 687 200.0 3295.5 0.0
28457 59.0 63.0 686 200.0 3269.0 0.0
28557 200.0 62.0 686 200.0 3242.4 0.0
28697 200.0 61.0 644 200.0 3099.0 0.0
28757 200.0 60.0 658 200.0 3094.8 0.0
28887 200.0 59.0 584 200.0 3191.9 0.0
28957 200.0 60.0 608 200.0 3206.6 0.0
29057 200.0 59.0 592 200.0 3248.1 0.0
29179 200.0 60.0 409 200.0 5286.8 0.0
29257 200.0 59.0 470 200.0 5769.1 0.0
29375 200.0 62.0 212 200.0 12524.8 0.0
29457 200.0 62.0 298 200.0 14509.5 0.0
29557 200.0 64.0 240 200.0 17186.3 0.0
29667 200.0 68.0 141 200.0 24880.8 0.0
29757 200.0 68.0 174 200.0 27580.2 0.0
29869 200.0 70.0 154 200.0 33230.3 0.0
29957 200.0 72.0 160 200.0 35536.5 0.0
30057 200.0 71.0 156 200.0 37698.5 0.0
30173 200.0 73.0 352 200.0 37922.3 0.0
30257 40.0 73.0 286 200.0 38775.1 0.0
30384 36.0 74.0 519 200.0 38309.2 0.0
30457 38.0 74.0 441 200.0 38241.4 0.0
30557 38.0 73.0 493 200.0 38031.1 0.0
30683 41.0 72.0 578 200.0 38051.3 0.0
30757 41.0 72.0 549 200.0 38040.7 0.0
30891 41.0 70.0 423 200.0 38006.3 0.0
30957 41.0 70.0 465 200.0 37862.1 0.0
31057 42.0 69.0 437 200.0 37766.2 0.0
31181 42.0 70.0 469 200.0 36536.2 0.0
31257 43.0 70.0 458 200.0 35426.2 0.0
31385 44.0 71.0 504 200.0 33457.2 0.0
31457 45.0 72.0 488 200.0 32168.4 0.0
31557 46.0 72.0 498 200.0 31032.2 0.0
31703 50.0 73.0 532 200.0 29001.2 0.0
31757 51.0 74.0 520 200.0 28127.1 0.0
31873 54.0 73.0 250 200.0 27170.0 0.0
31957 58.0 74.0 340 200.0 25988.4 0.0
32057 59.0 77.0 280 200.0 24978.9 0.0
32172 65.0 77.0 244 200.0 23407.3 0.0
32257 70.0 78.0 256 200.0 22570.1 0.0
32374 75.0 79.0 237 114.0 21148.3 145.0
32457 80.0 79.0 243 110.0 20601.5 297.5
32557 84.0 79.0 239 116.0 19757.4 424.0
32673 90.0 80.0 246 115.0 19102.4 547.9
32757 103.0 80.0 243 106.0 19266.9 695.2
32873 107.0 82.0 219 105.0 19252.1 838.7
32957 113.0 84.0 227 115.0 19177.2 944.0
33057 113.0 82.0 221 118.0 19436.7 1035.2
33172 119.0 86.0 206 122.0 19158.8 1110.7
33257 200.0 93.0 211 200.0 18687.5 1110.7
33370 115.0 114.0 187 200.0 18370.8 1110.7
33457 118.0 96.0 195 200.0 18088.9 1110.7
33557 117.0 103.0 189 200.0 17888.8 1110.7
33666 117.0 200.0 145 200.0 18774.8 1110.7
33758 111.0 200.0 159 200.0 19435.2 1110.7
33872 108.0 200.0 355 200.0 18738.1 1110.7
33957 111.0 200.0 289 98.0 18102.6 1251.0
34057 116.0 200.0 333 94.0 17863.0 1396.6
34204 200.0 200.0 490 115.0 17123.9 1471.0
34257 200.0 200.0 437 200.0 16107.2 1471.0
34365 200.0 200.0 114 200.0 16495.4 1471.0
34457 200.0 200.0 221 200.0 16389.7 1471.0
34557 200.0 200.0 149 200.0 16348.5 1471.0
34665 200.0 200.0 97 200.0 16864.0 1471.0
34757 126.0 200.0 114 200.0 16740.3 1471.0
34865 200.0 200.0 115 200.0 15955.6 1471.0
34957 200.0 200.0 114 200.0 15202.1 1471.0
35057 200.0 85.0 114 200.0 14484.9 1471.0
35173 200.0 85.0 392 200.0 12440.0 1471.0
35257 200.0 80.0 299 200.0 10823.6 1471.0
35375 200.0 77.0 172 200.0 9361.6 1471.0
35457 200.0 80.0 214 200.0 9359.9 1471.0
35557 200.0 85.0 186 200.0 9150.2 1471.0
35665 200.0 86.0 107 200.0 9631.0 1471.0
35757 200.0 88.0 133 200.0 9771.4 1471.0
35865 200.0 90.0 93 200.0 10334.9 1471.0
35957 200.0 91.0 106 200.0 10544.7 1471.0
36057 200.0 93.0 97 200.0 10771.2 1471.0
36165 200.0 96.0 79 200.0 11337.1 1471.0
36257 200.0 97.0 85 200.0 11553.9 1471.0
36365 200.0 96.0 68 200.0 12132.4 1471.0
36457 200.0 95.0 73 200.0 12386.9 1471.0
36557 200.0 96.0 69 200.0 12630.4 1471.0
36666 200.0 97.0 72 200.0 13074.0 1471.0
36757 200.0 100.0 71 200.0 13287.0 1471.0
36866 81.0 100.0 68 200.0 13705.5 1471.0
36957 68.0 97.0 69 200.0 13904.6 1471.0
37057 69.0 100.0 68 200.0 14088.6 1471.0
37175 86.0 99.0 113 200.0 14178.9 1471.0
37257 84.0 101.0 98 200.0 14258.5 1471.0
37365 78.0 102.0 29 200.0 14162.5 1393.9
In HandleAnomalousConditions with OBSTACLE_AHEAD error code detected
```

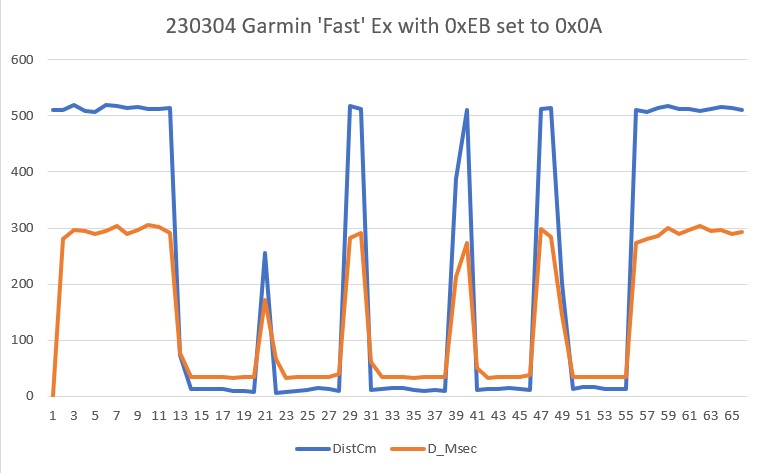

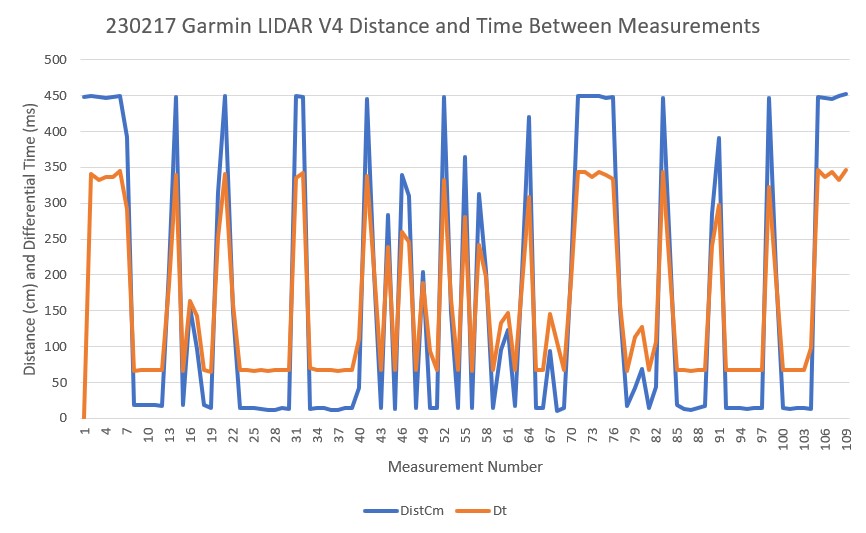

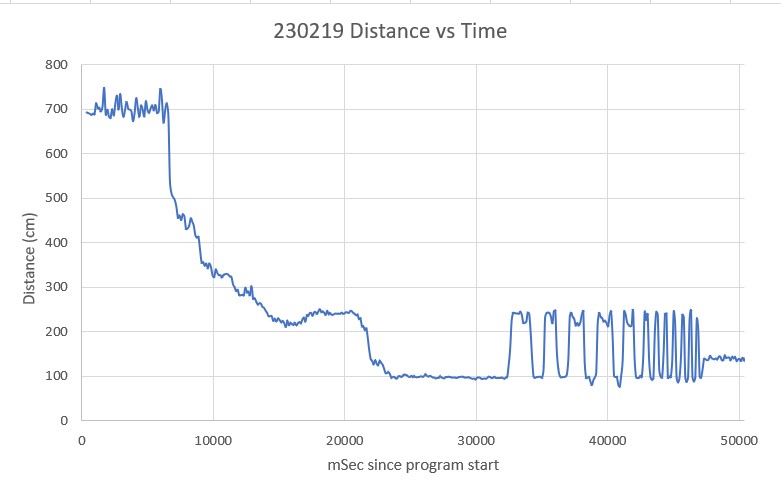

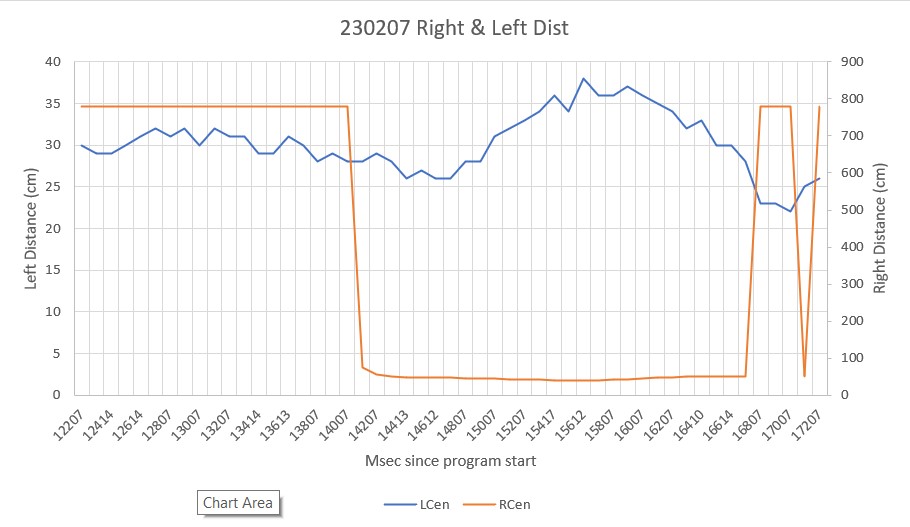

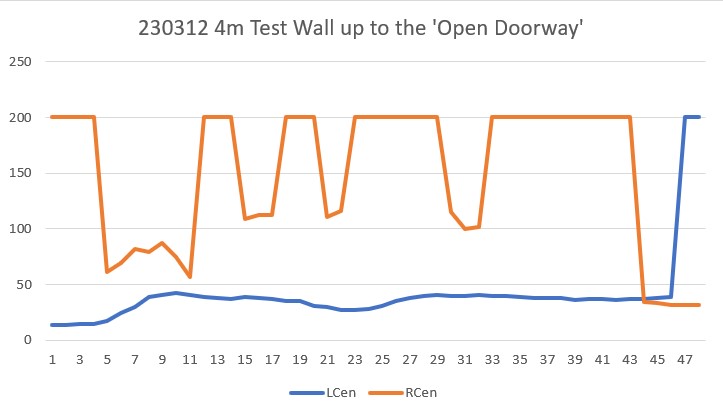

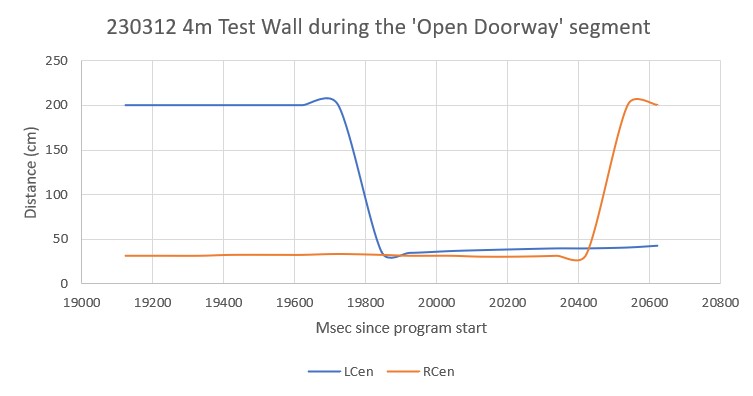

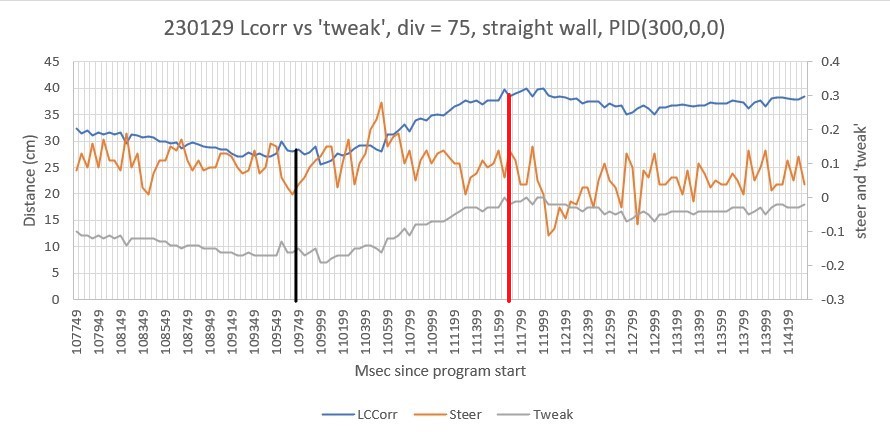

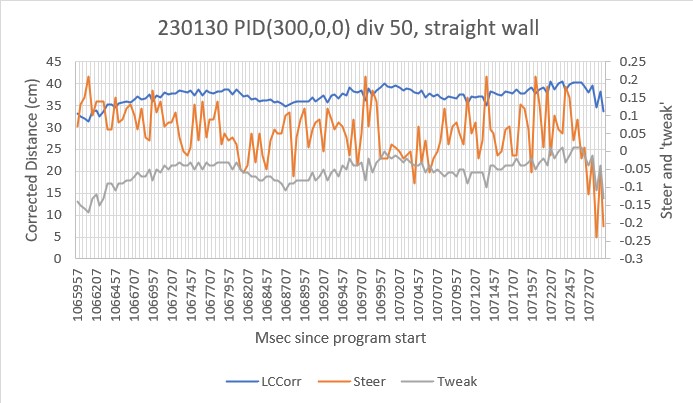

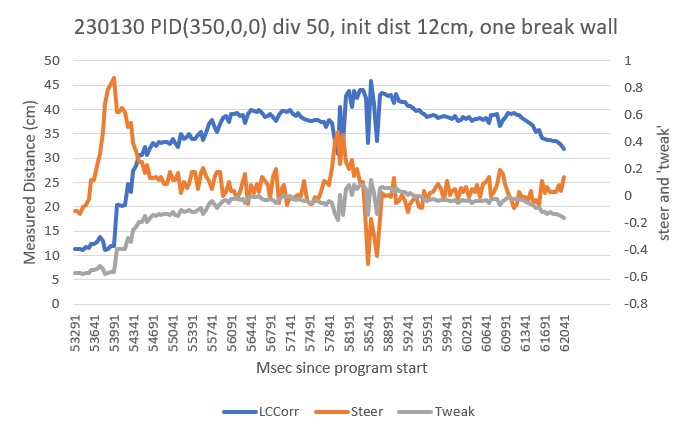

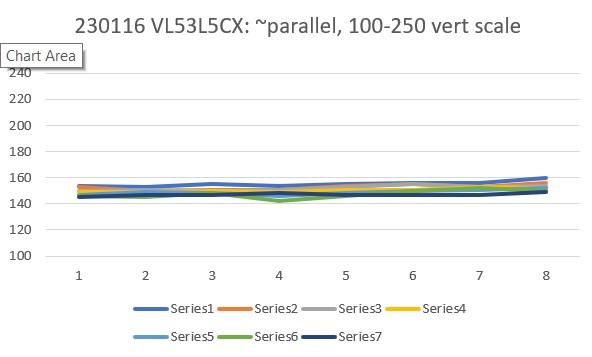

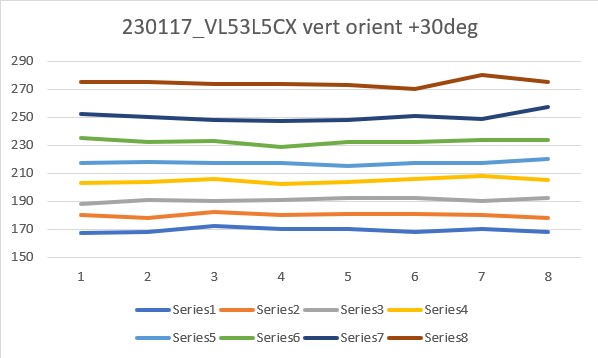

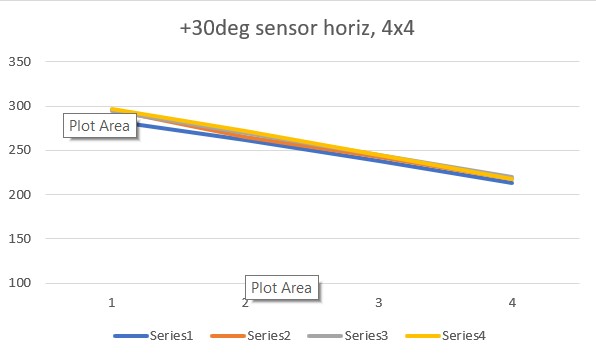

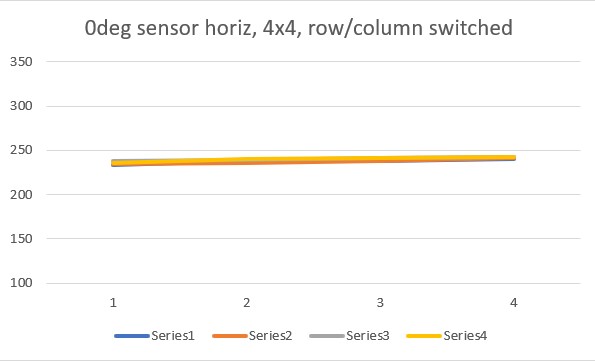

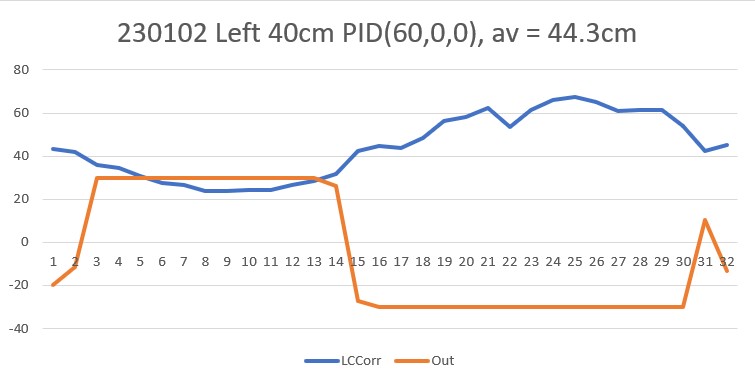

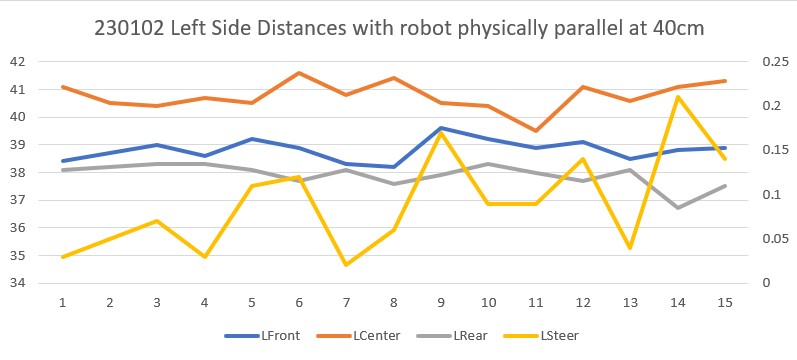

Here’s the Excel plot of the run up to the point where the first open doorway is detected. Note that this also covers the oblique turn. From the video, the oblique turn occurs about six seconds from the start, which should put it somewhere around 20,000 mSec. However, comparing the video and the plot, the turn actually appears to occur at about 21,500 – 22,000 mSec, where the distance to the right-hand wall falls from the 200 cm maximum to about 60-70 cm. The turn itself goes very smoothly, and the first open doorway condition occurs about four seconds later, at 26,200 mSec.
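To line up the video with the telemetry: assuming the video starts at the first telemetry row (14,216 mSec), ‘six seconds in’ corresponds to 14,216 + 6,000 = 20,216 mSec. The host-side C++ sketch below is an offline analysis helper, not robot code; the `Sample` struct, the `FindRightWallDrop()` function and the 80 cm threshold are my own assumptions. It searches forward from that estimate for the first row whose right-hand distance has dropped below the threshold, using a handful of (Msec, RCen) rows copied from the log above.

```cpp
#include <cassert>
#include <vector>

// One telemetry row: timestamp (mSec) and right-center distance (cm).
// Hypothetical struct for offline analysis; not part of WallE3's code.
struct Sample {
    long msec;
    double rightCm;
};

// Returns the timestamp of the first sample at or after 'fromMsec' whose
// right-hand distance is below 'thresholdCm', or -1 if there is none.
long FindRightWallDrop(const std::vector<Sample>& rows, long fromMsec,
                       double thresholdCm) {
    assert(thresholdCm > 0.0);
    for (const Sample& s : rows) {
        if (s.msec >= fromMsec && s.rightCm < thresholdCm) {
            return s.msec;
        }
    }
    return -1;
}

// (Msec, RCen) rows copied from the first run's telemetry.
const std::vector<Sample> kFirstRun = {
    {20016, 99.0},  {20180, 105.0}, {20516, 117.0}, {20656, 200.0},
    {21516, 200.0}, {21680, 100.0}, {21716, 95.0},  {21816, 84.0},
    {21950, 70.0},  {22016, 67.0},  {22180, 63.0}};
```

With the 80 cm threshold, a search starting at 20,216 mSec lands on the 21,950 mSec row (70 cm), inside the 21,500 – 22,000 mSec window read off the plot. The threshold is arbitrary; anything between the 84 cm and 70 cm readings gives the same answer.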

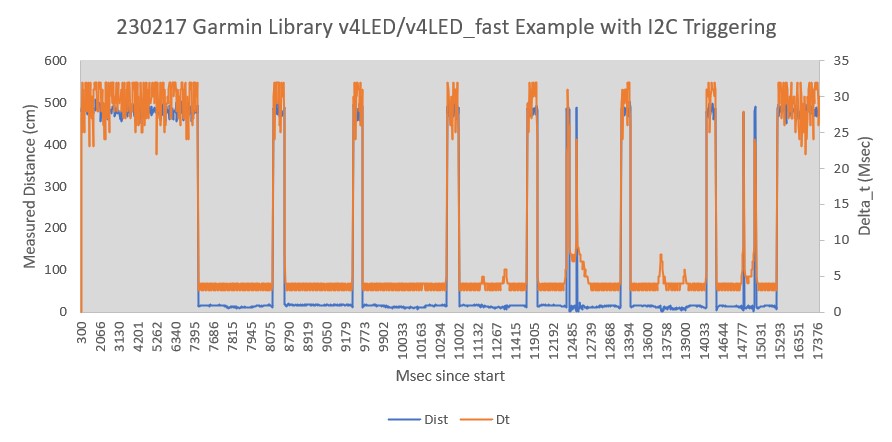

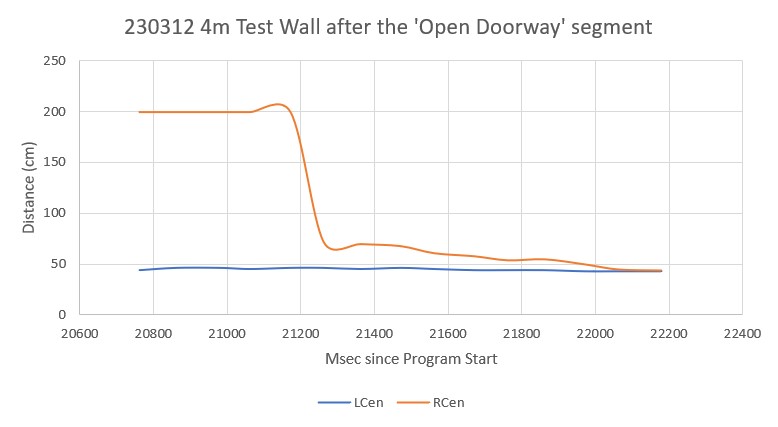

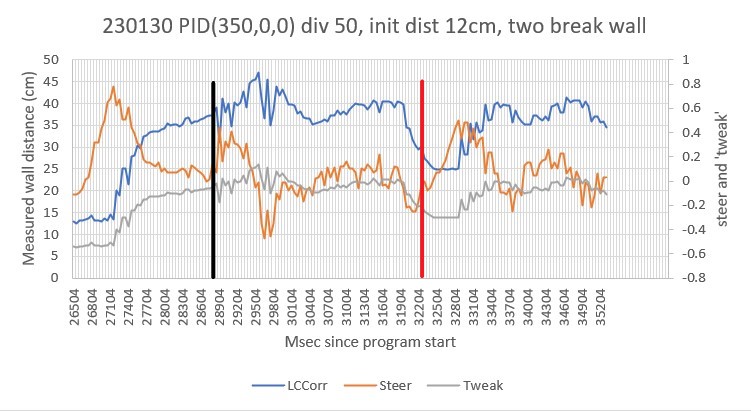

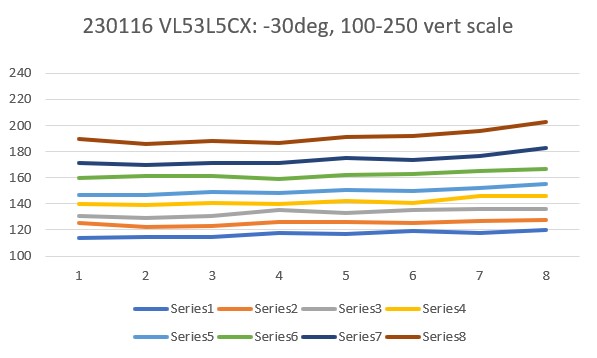

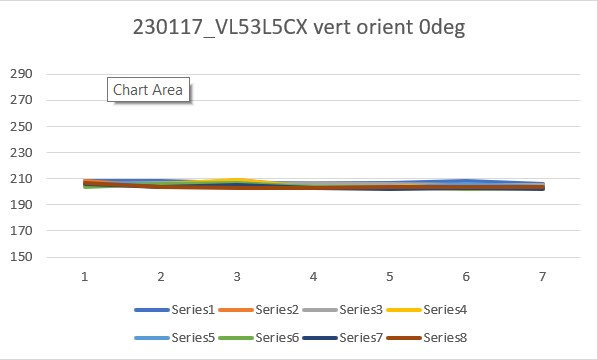

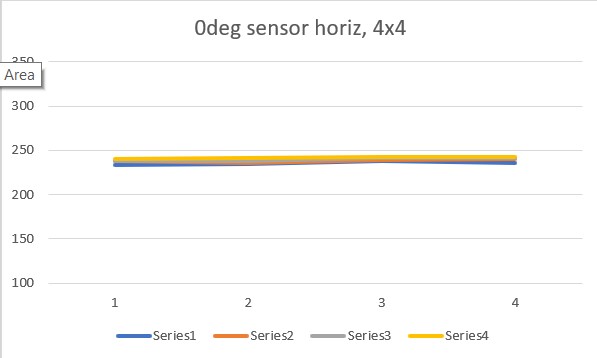

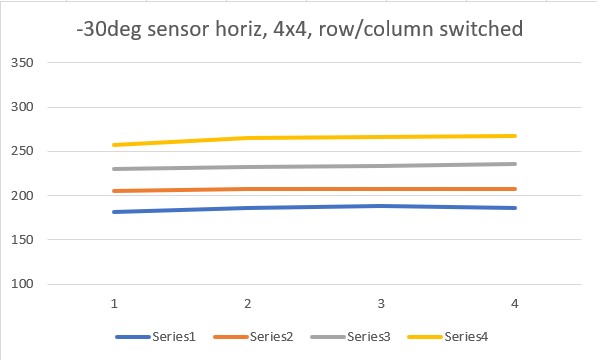

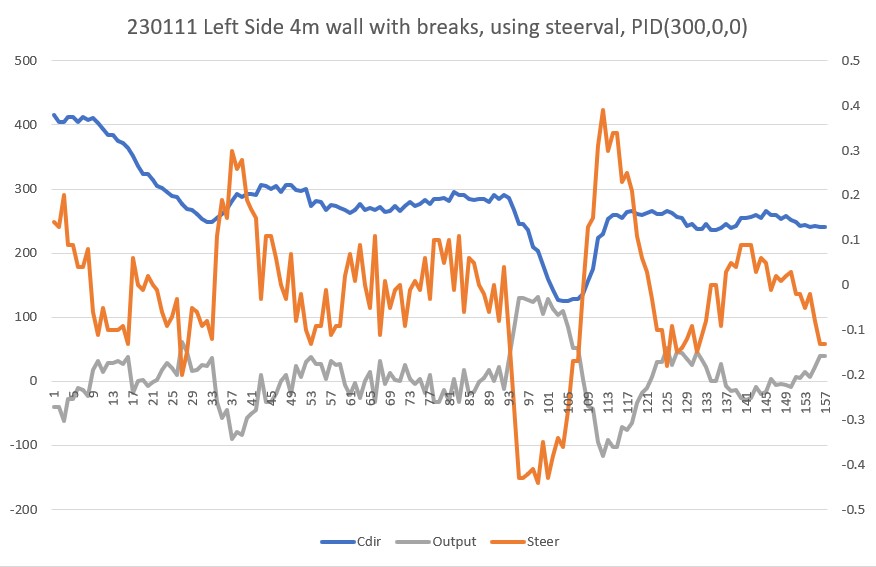

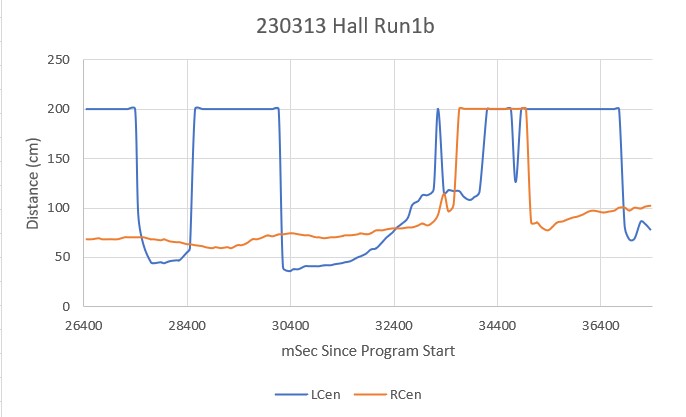

This next plot shows the period from the time of the first ‘open doorway’ detection to the end of the run, including the time where the robot passes the second open doorway (apparently without detecting it).

The left-hand wall distance starts out at 200 (max distance) because of the first open doorway. During this period the robot is tracking the right-hand wall (the kitchen counter). When the left-hand distance returns to normal at about 27,500 mSec, the robot continues to track the right-hand wall. Apparently the very short section of wall between the first and second doorways wasn’t enough to trigger the ‘open doorway’ condition. However, when the left-hand wall distance came back down again after the second open doorway (at approximately 30,400 mSec), the robot should have reverted to left-wall tracking, but it obviously didn’t. Soon thereafter both the left and right-hand distances started increasing to max values and the poor little robot lost its way – so sad!
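What is missing here is a test for the *end* of an open doorway: while following the right wall because the left wall vanished, keep watching the left distance and declare the doorway over once the left wall has been back in range for a few consecutive samples. Below is a minimal C++ sketch of that idea under stated assumptions; it is not WallE3’s actual code. The `DoorwayEndDetector` class and the three-sample requirement are hypothetical, while the 100 cm limit (MAX_TRACKING_DISTANCE_CM) and the 200 cm ‘nothing in range’ reading come from the post.

```cpp
#include <cassert>

// Hypothetical helper, not WallE3's actual code.
// A reading of 200 cm means "nothing in range"; 100 cm mirrors the post's
// MAX_TRACKING_DISTANCE_CM.
constexpr double kMaxTrackingDistanceCm = 100.0;

class DoorwayEndDetector {
public:
    explicit DoorwayEndDetector(int requiredSamples)
        : required_(requiredSamples) {
        assert(requiredSamples > 0);
    }

    // Feed one left-side reading per telemetry cycle. Returns true once the
    // wall has been in range for 'required_' consecutive readings.
    bool Update(double leftCm) {
        if (leftCm < kMaxTrackingDistanceCm) {
            ++count_;
        } else {
            count_ = 0;  // any out-of-range reading restarts the count
        }
        return count_ >= required_;
    }

private:
    int required_;
    int count_ = 0;
};
```

Fed the left-hand column of the first run, a three-sample detector would report the end of the first doorway on the 27,703 mSec row (readings of 94, 63 and 45 cm), about 0.25 sec after the wall reappears. The required sample count is a trade-off between response time and immunity to a single spurious reading.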

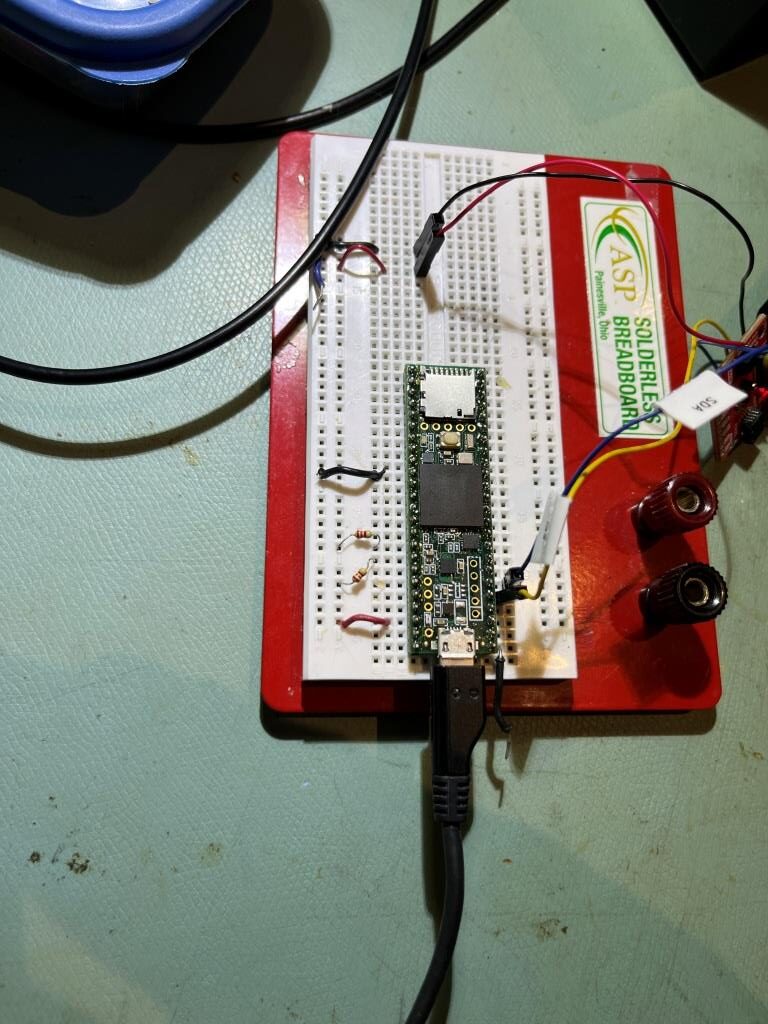

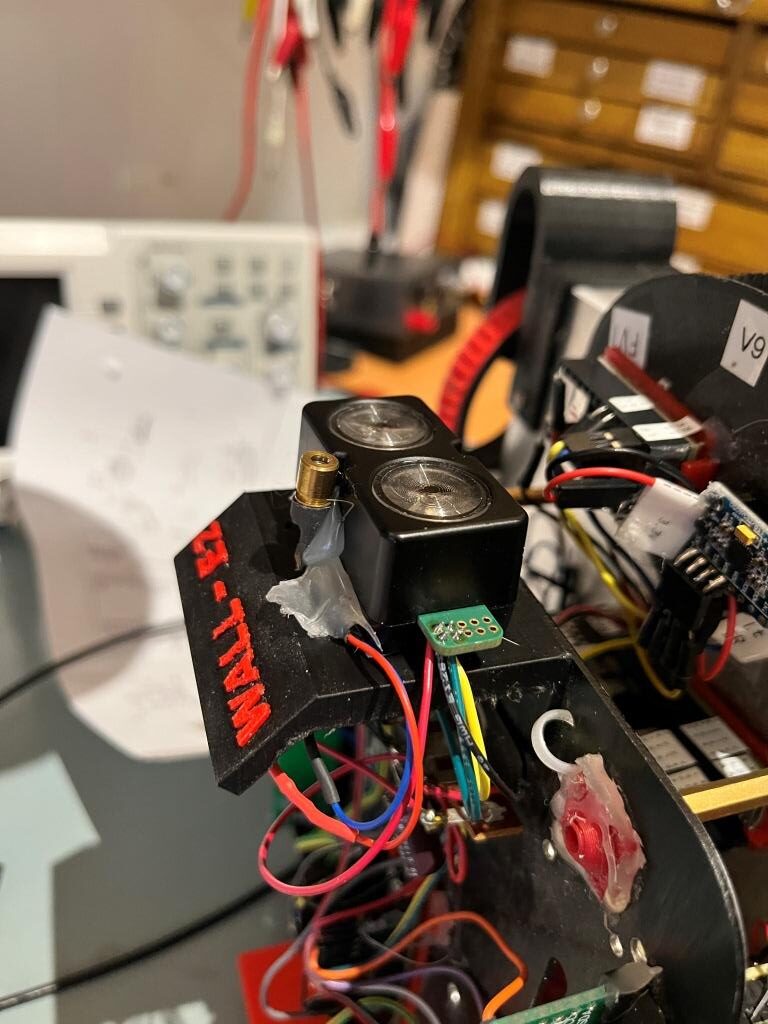

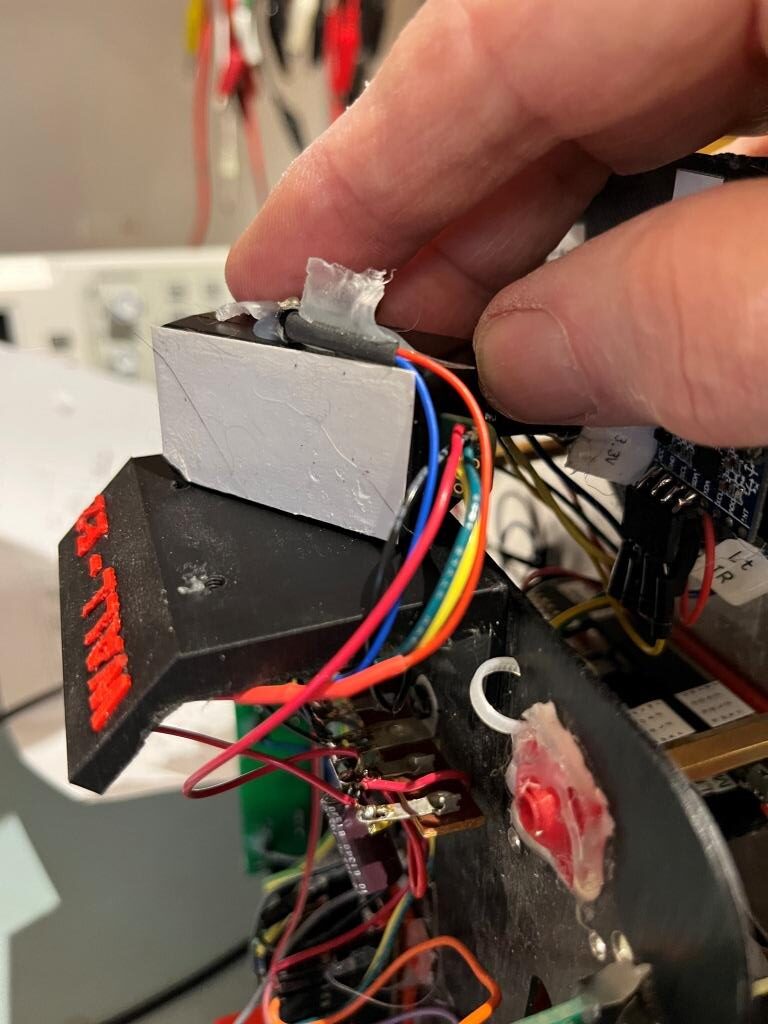

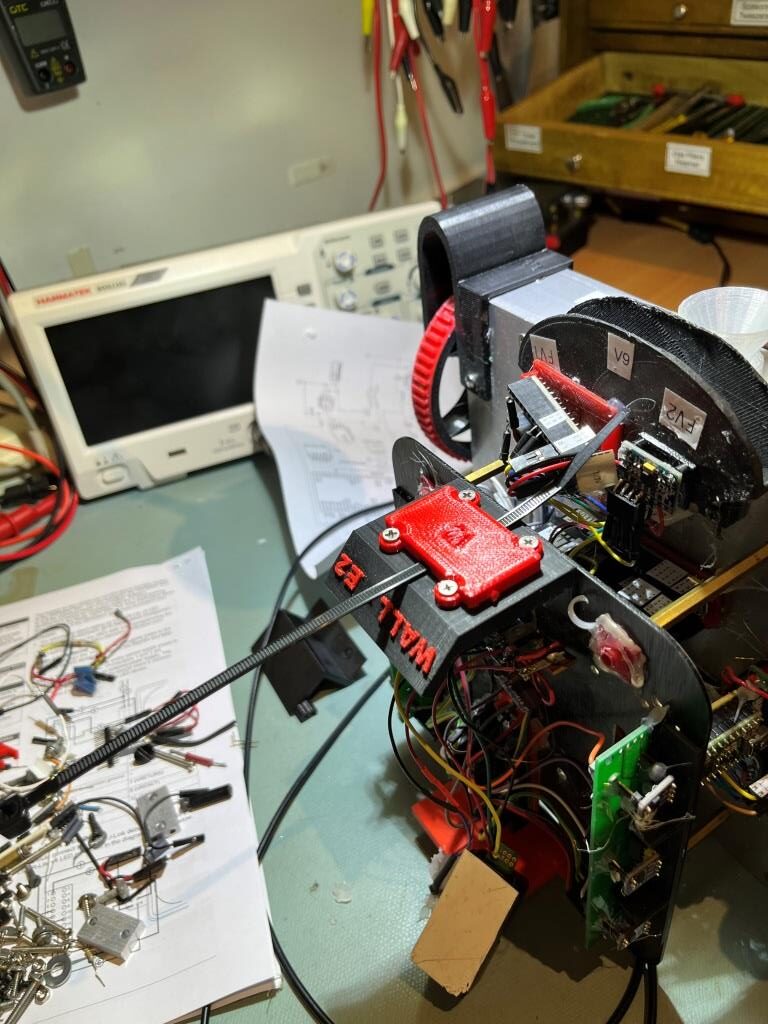

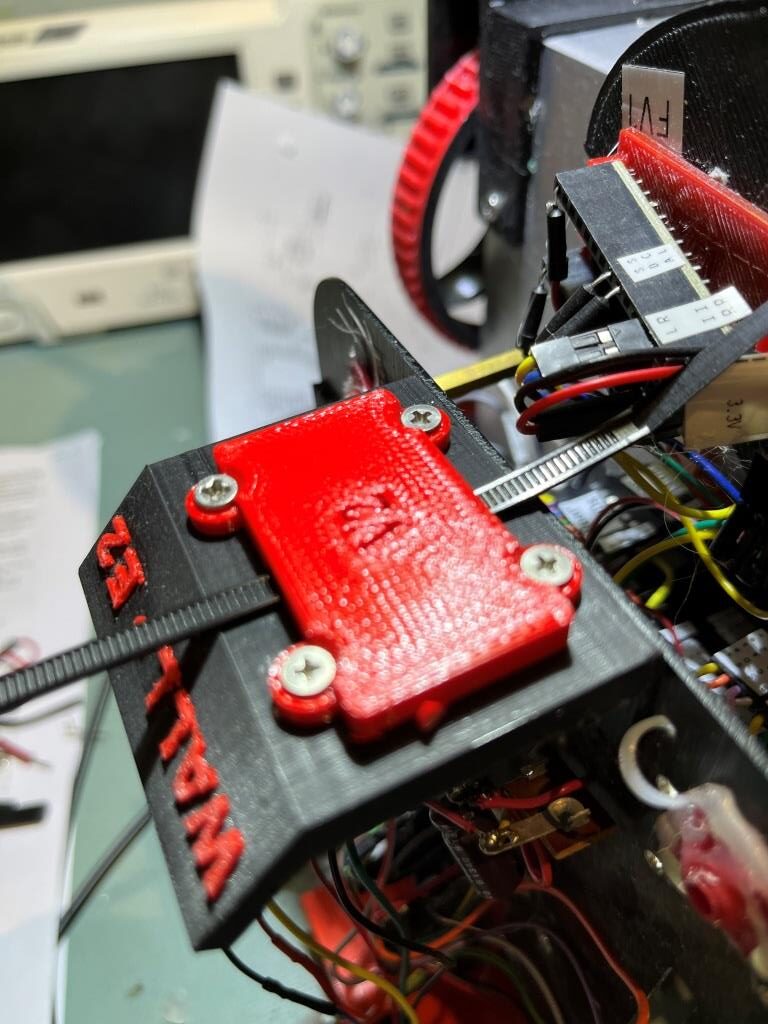

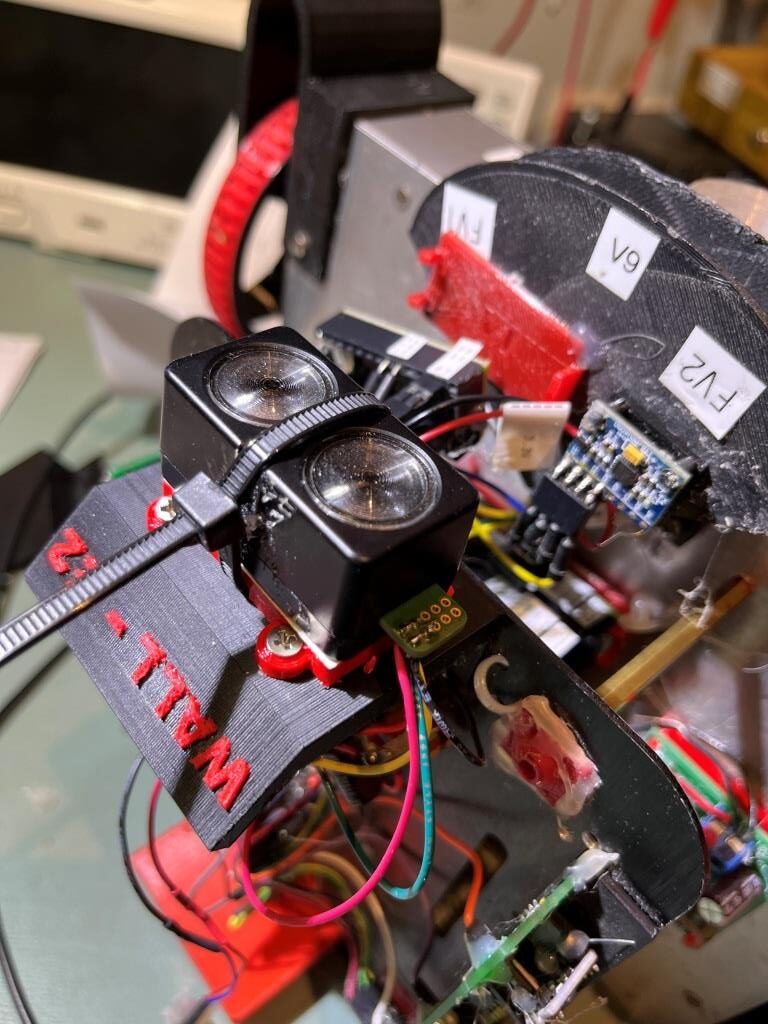

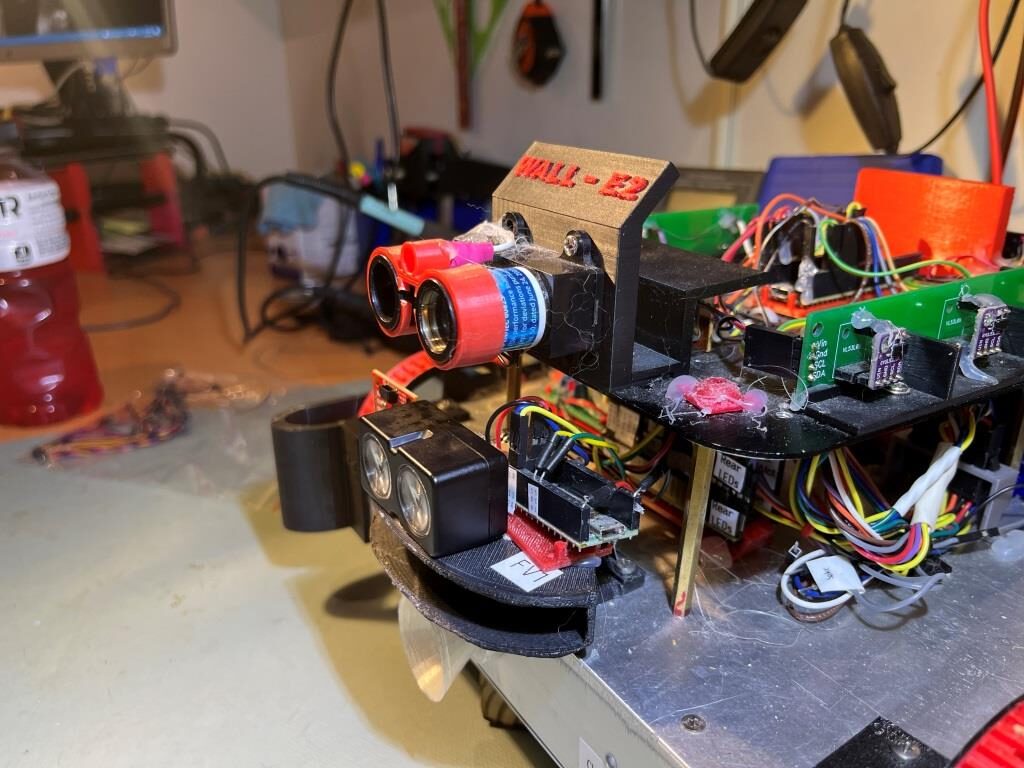

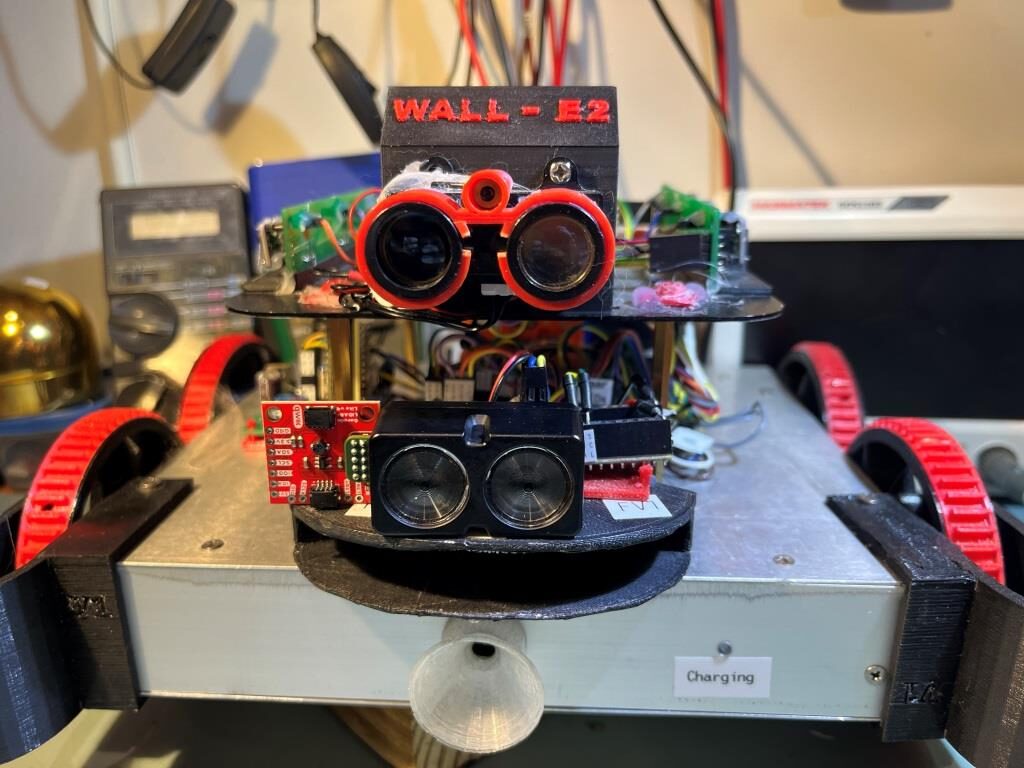

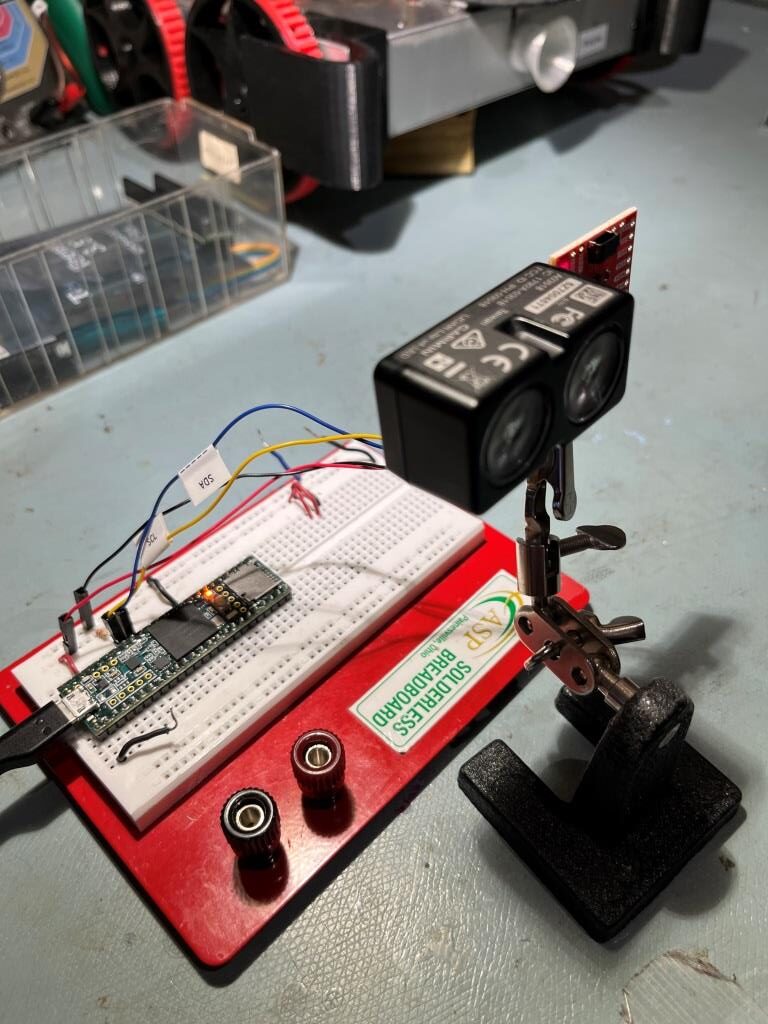

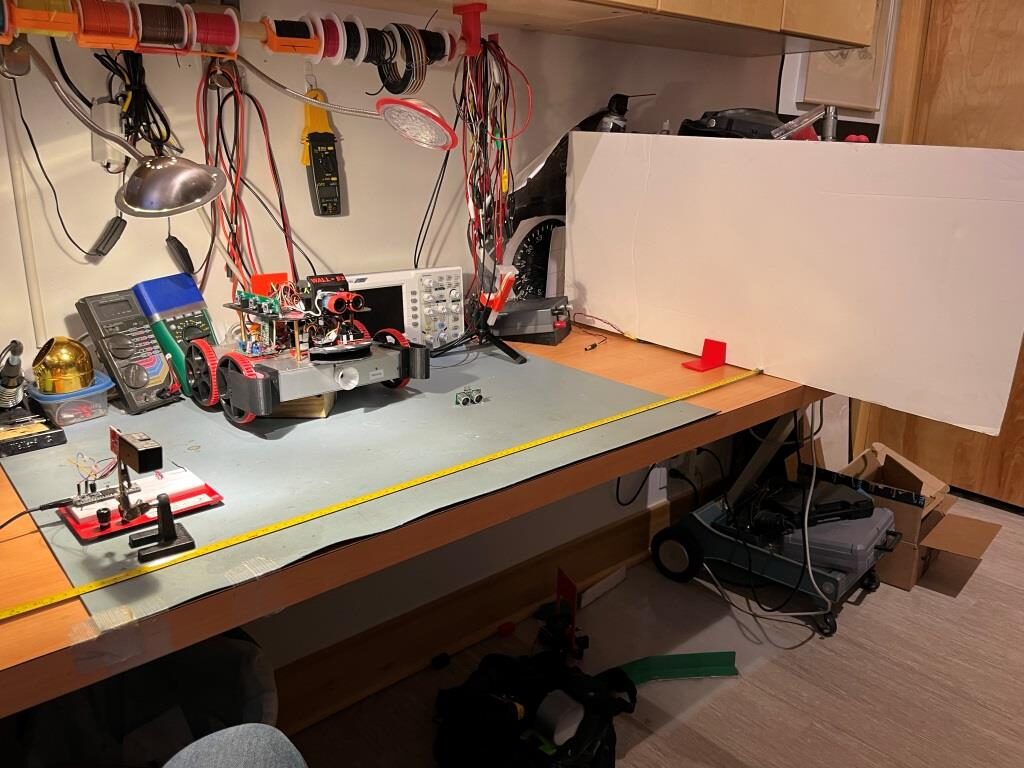

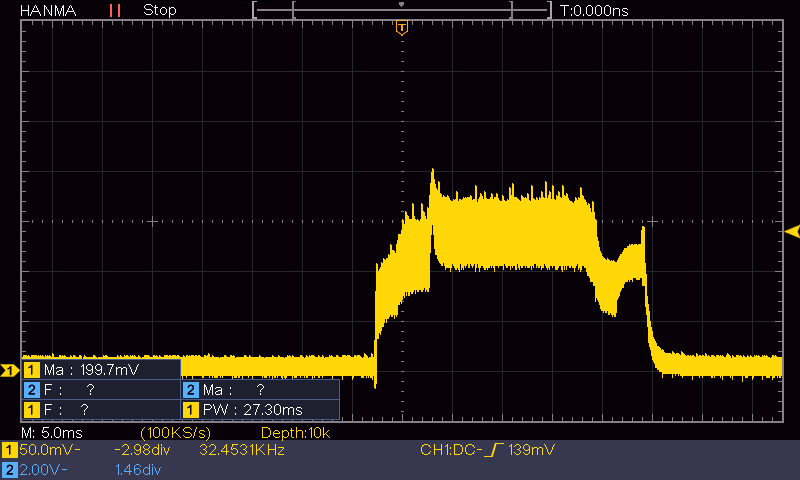

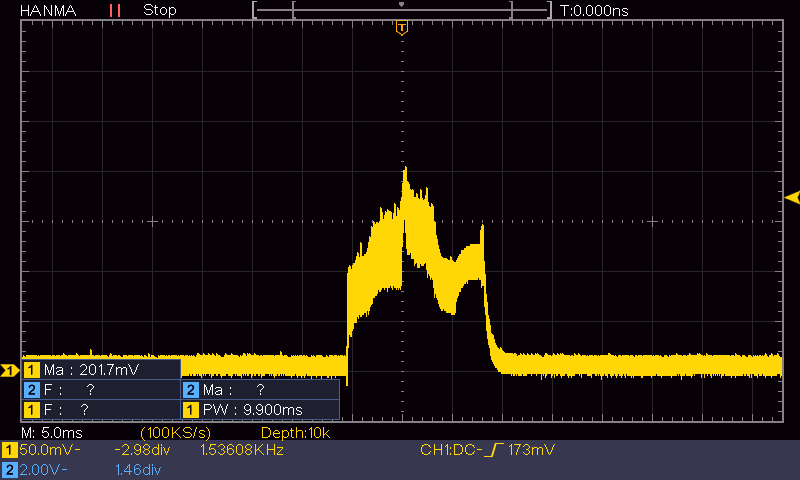

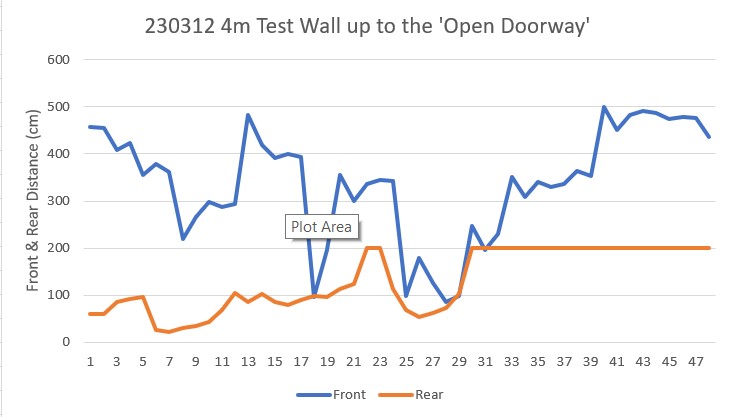

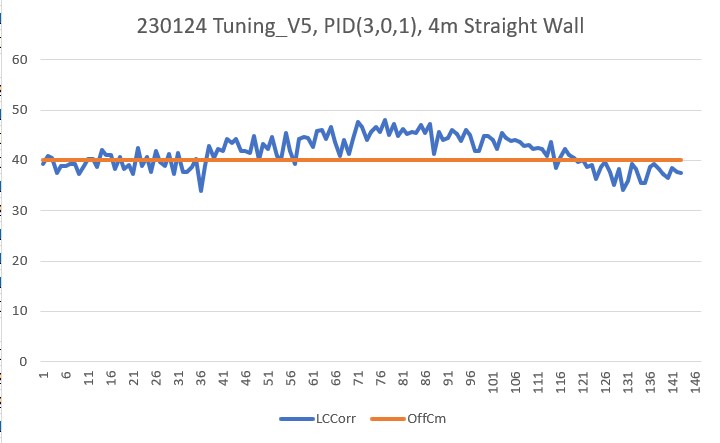

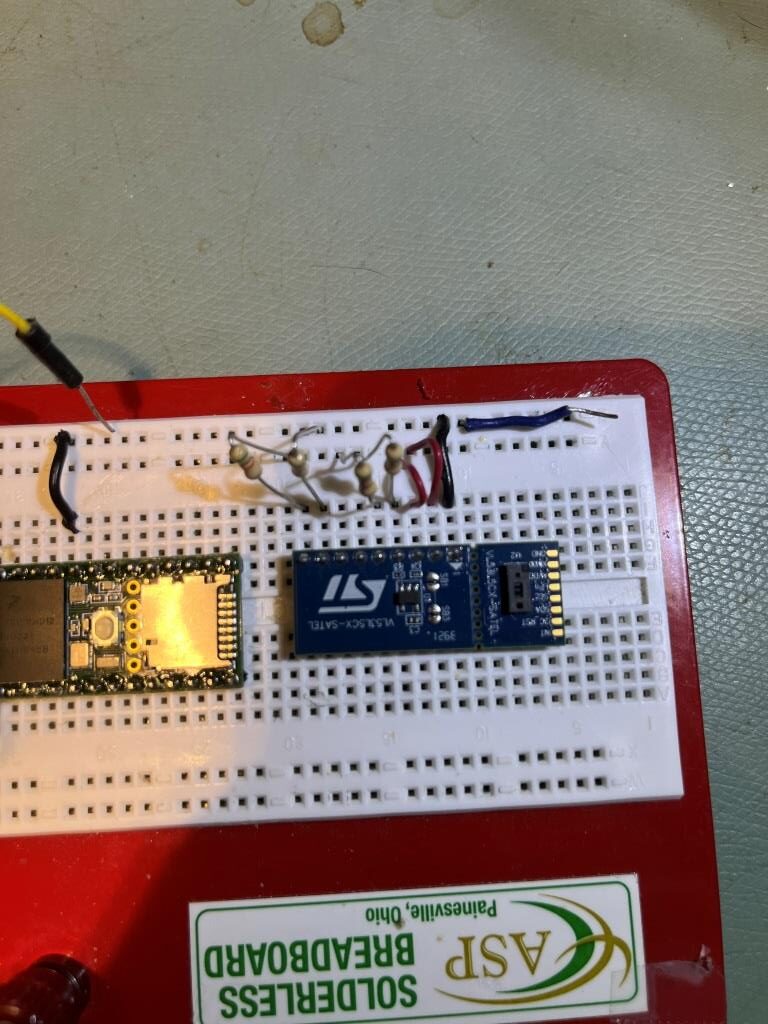

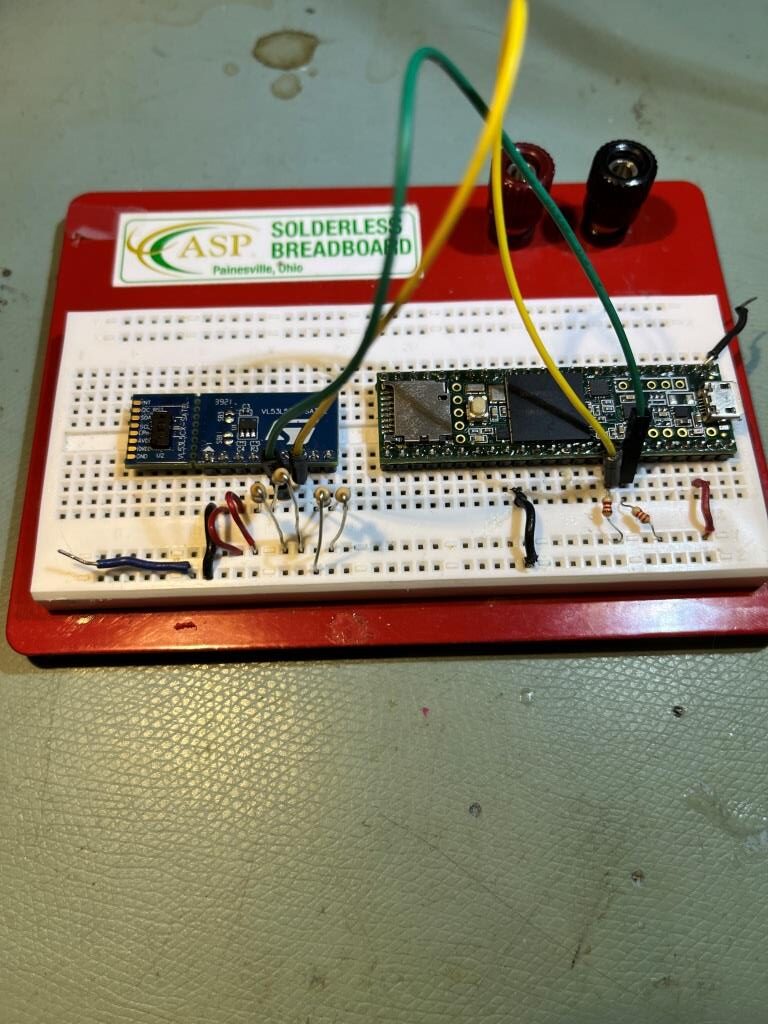

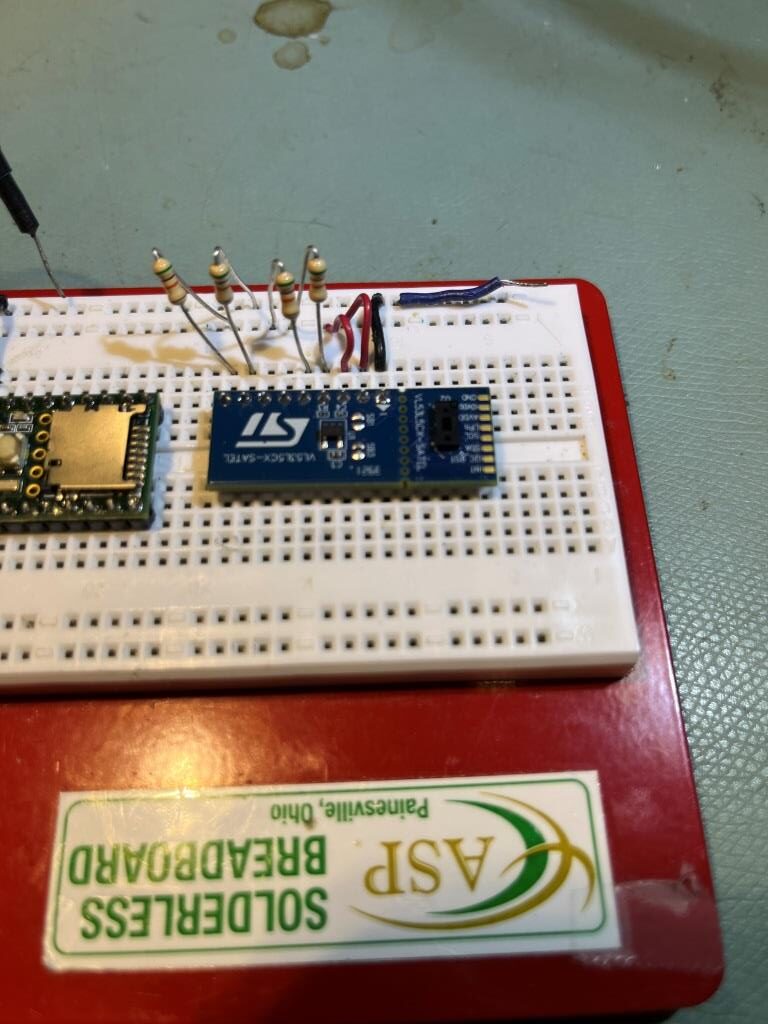

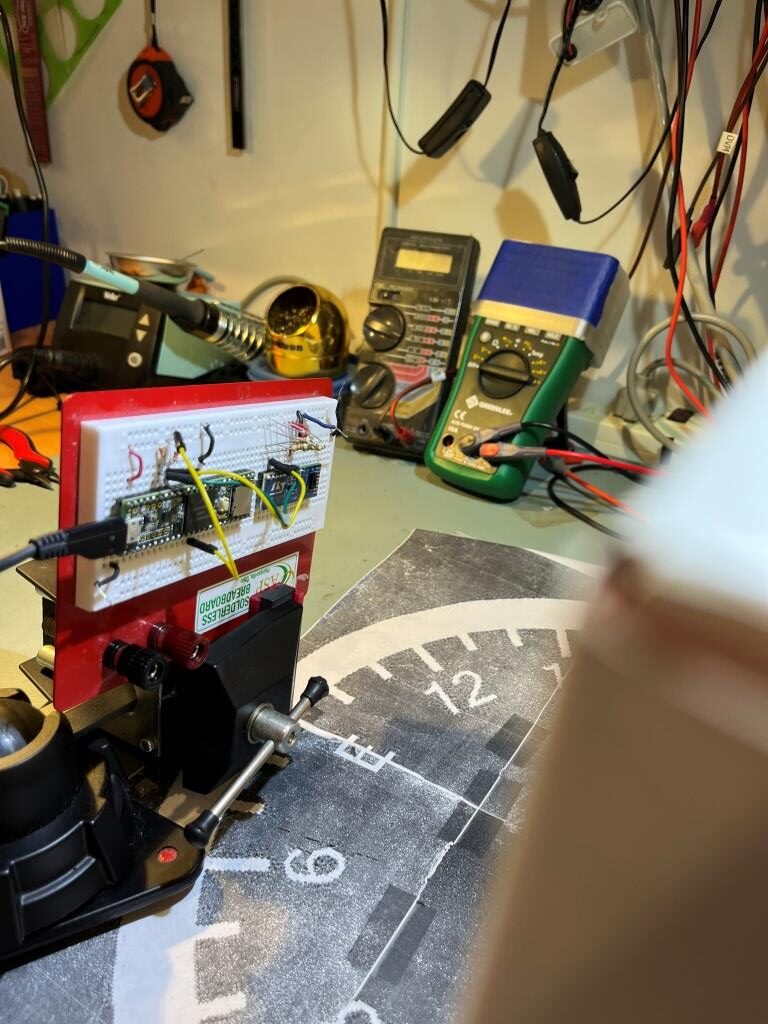

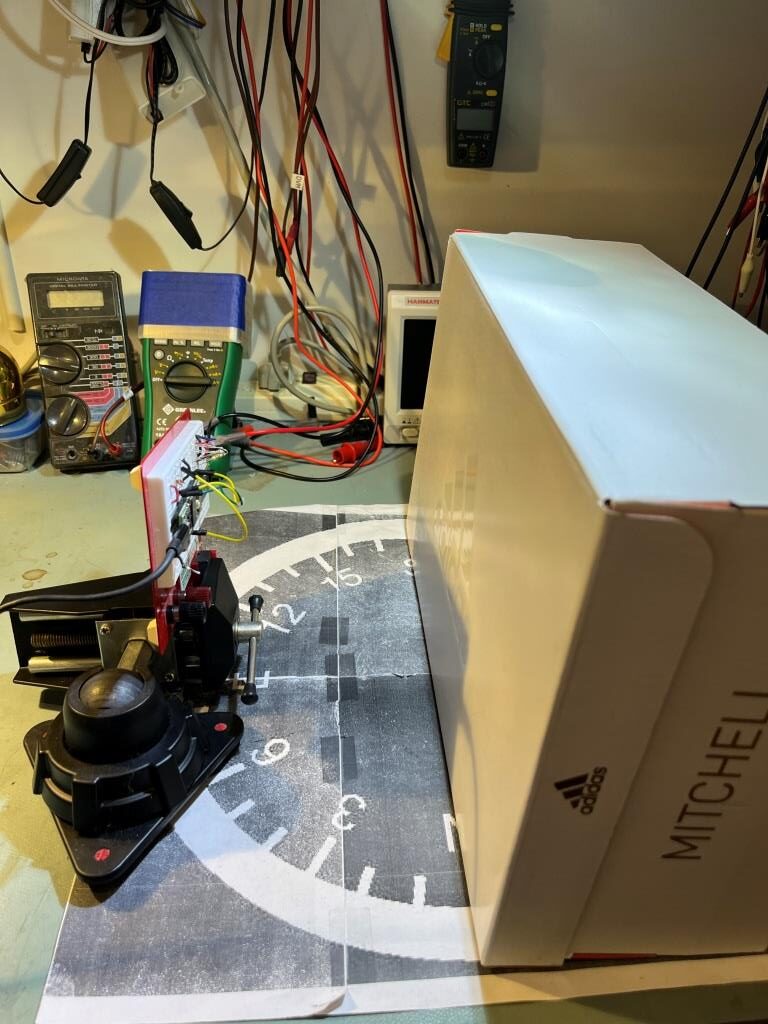

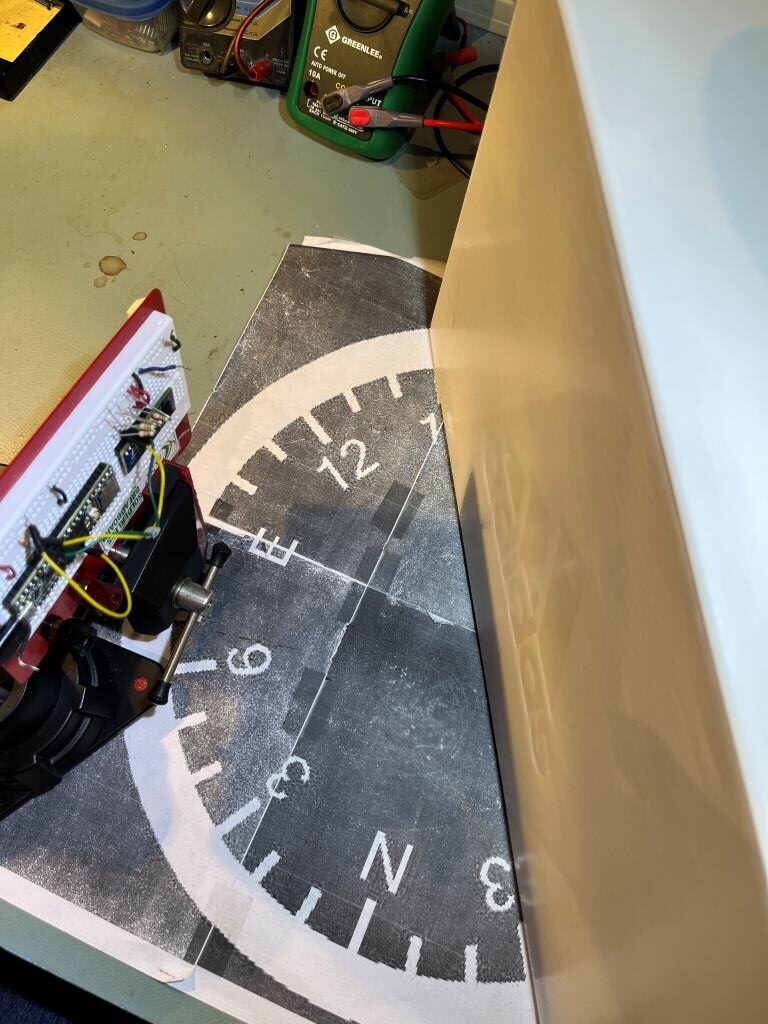

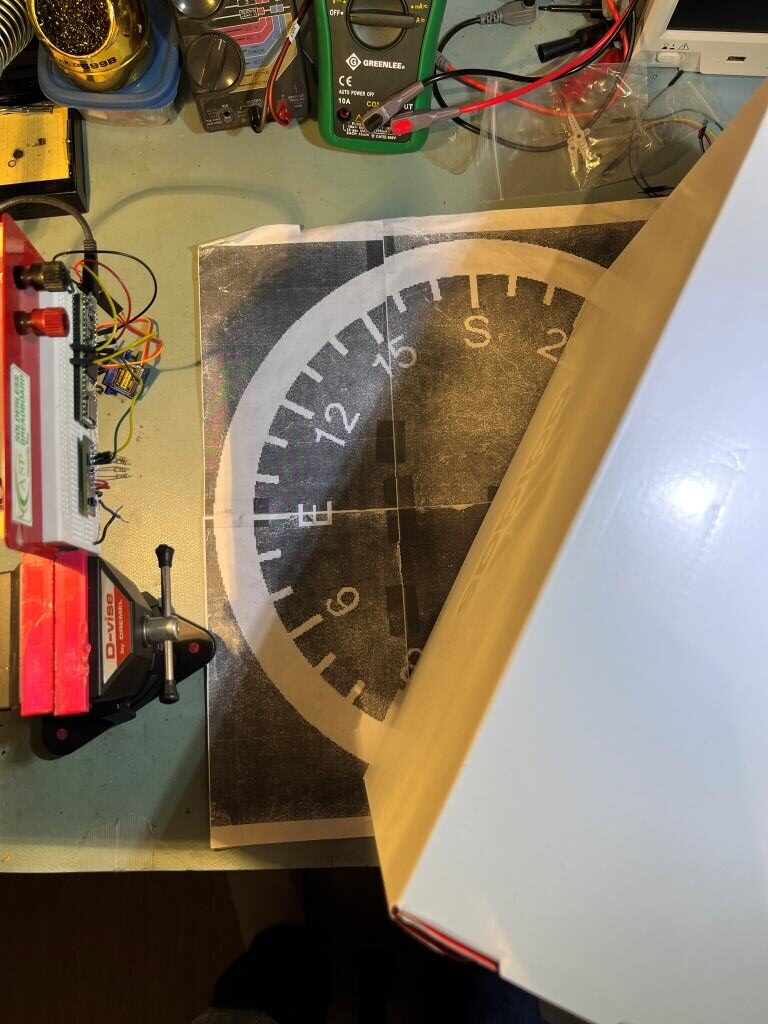

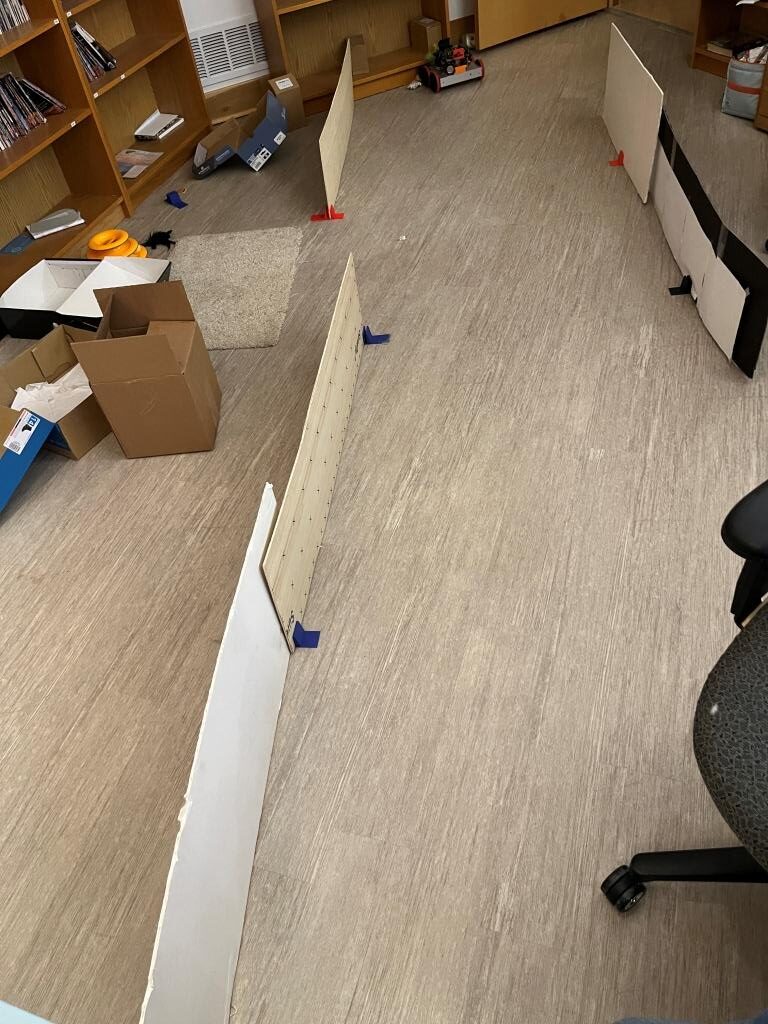

After looking through the data and the code, I began to see that although the code could detect the ‘open doorway’ condition, it wasn’t smart enough to detect the end of the ‘open doorway’, so the robot continued to track the right-hand wall – “to infinity and beyond!”. After making some changes to fix the problem, I made a run in my test range (aka my office) to check them. Here’s a photo of the setup:

The test walls are set up so that the ‘wrong side’ wall starts before the open doorway and ends after it, and the distance between the two walls is set so that the measured distance to either side is less than MAX_TRACKING_DISTANCE_CM (100 cm).
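With both walls inside MAX_TRACKING_DISTANCE_CM, one plausible way to decide that the robot is ‘tracking the wrong wall’ is: it is following the right wall, the left wall is back in range, and the left wall is no farther away than the right one. The function below is a hypothetical sketch of that rule, assuming the left wall is the preferred one; the `TrackingSide` enum and `IsTrackingWrongWall()` are my own names, and the post does not show WallE3’s actual test.

```cpp
#include <cassert>

constexpr double kMaxTrackingDistanceCm = 100.0;  // MAX_TRACKING_DISTANCE_CM

enum class TrackingSide { Left, Right };

// Hypothetical rule (assumption, not WallE3's code): following the right wall
// is "wrong" once the left wall is in range and no farther than the right one.
bool IsTrackingWrongWall(TrackingSide side, double leftCm, double rightCm) {
    assert(leftCm >= 0.0 && rightCm >= 0.0);
    if (side != TrackingSide::Right) {
        return false;  // already on the preferred (left) wall
    }
    return leftCm < kMaxTrackingDistanceCm && leftCm <= rightCm;
}
```

Checked against the telemetry from this run, the rule stays quiet at 20,389 mSec (67 cm left vs 52 cm right) and fires on the 20,890 mSec row (53 vs 56 cm), but it would also fire one row earlier at 20,781 mSec (53 vs 54 cm), so WallE3’s real condition evidently differs in some detail.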

Here’s the telemetry from the run, with an additional column added to show the current ANOMALY CODE:

```
Opening port
Port open
gl_pSerPort now points to active Serial (USB or Wixel)
7950: Starting setup() for WallE3_Complete_V2.ino
Checking for MPU6050 IMU at I2C Addr 0x68
MPU6050 connection successful
Initializing DMP...
Enabling DMP...
DMP ready! Waiting for MPU6050 drift rate to settle...
Calibrating...Retrieving Calibration Values
Msec Hdg
10531 -0.054 -0.042
MPU6050 Ready at 10.53 Sec with delta = -0.013
Checking for Teensy 3.5 VL53L0X Controller at I2C addr 0x20
Teensy available at 11632 with gl_bVL53L0X_TeensyReady = 1. Waiting for Teensy setup() to finish
11635: got 1 from VL53L0X Teensy
Teensy setup() finished at 11737 mSec
VL53L0X Teensy Ready at 11739
Checking for Garmin LIDAR at Wire2 I2C addr 0x62
LIDAR Responded to query at Wire2 address 0x62!
Setting LIDAR acquisition repeat value to 0x02 for <100mSec measurement repeat delay
Initializing Front Distance Array...Done
Initializing Rear Distance Array...Done
Checking for Teensy 3.2 IRDET Controller at I2C addr 0x8
11784: IRDET Teensy Not Avail...
IRDET Teensy Ready at 11886
Fin1/Fin2/SteeringVal = 76 60 -0.6875
11888: Initializing IR Beam Value Averaging Array...Done
11891: Initializing IR Beam Value Averaging Array...Done
Battery Voltage = 7.96
14098: Top of loop() - calling UpdateAllEnvironmentParameters()
Battery Voltage = 7.96
IRHomingValTotalAvg = 30
14127: gl_LeftCenterCm = 16.00 gl_RightCenterCm = 48.00
TrackLeftWallOffset(350.0, 0.0, 0.0, 40) called
TrackLeftWallOffset: Start tracking offset of 40cm
Msec LCen RCen Front Rear FVar RVar ANOMALYCODE
14239 16.0 50.0 448 30.0 61469.3 726.4 NONE
14339 16.0 50.0 446 28.0 57852.4 696.7 NONE
14480 16.0 45.0 315 69.0 53127.2 664.8 NONE
14539 16.0 41.0 358 80.0 50844.8 642.7 NONE
14672 24.0 45.0 272 65.0 48742.9 609.6 NONE
14739 29.0 61.0 300 49.0 47804.5 576.7 NONE
14839 36.0 117.0 281 26.0 47322.4 563.0 NONE
14954 39.0 200.0 324 34.0 46199.8 544.0 NONE
15039 39.0 41.0 309 66.0 46108.9 519.5 NONE
15151 39.0 40.0 156 92.0 50430.1 518.1 NONE
15239 37.0 200.0 207 87.0 52053.2 509.8 NONE
15339 38.0 200.0 173 59.0 54277.3 487.0 NONE
15458 37.0 89.0 411 77.0 54262.7 471.1 NONE
15539 37.0 82.0 331 109.0 54945.0 495.2 NONE
15648 37.0 94.0 105 200.0 62127.1 842.4 NONE
15739 38.0 98.0 180 62.0 64470.8 821.9 NONE
15839 36.0 200.0 130 75.0 67450.4 804.0 NONE
15948 34.0 200.0 95 114.0 74016.6 827.9 NONE
16039 32.0 200.0 106 200.0 76787.8 1144.9 NONE
16160 30.0 200.0 372 118.0 76567.9 1166.6 NONE
16239 29.0 99.0 283 93.0 76708.2 1155.7 NONE
16339 28.0 98.0 342 99.0 76245.0 1150.2 NONE
16456 27.0 101.0 405 108.0 74147.9 1154.4 NONE
16539 27.0 102.0 384 200.0 72731.9 1434.3 NONE
16655 27.0 100.0 228 200.0 70382.6 1698.8 NONE
16739 29.0 100.0 280 93.0 68130.6 1683.7 NONE
16839 31.0 100.0 245 86.0 65563.2 1668.3 NONE
16949 34.0 104.0 154 86.0 59923.0 1654.3 NONE
17039 36.0 101.0 184 88.0 55745.3 1642.0 NONE
17149 38.0 100.0 174 200.0 45111.3 1886.6 NONE
17239 38.0 98.0 177 200.0 38459.1 2118.3 NONE
17339 39.0 101.0 175 200.0 30873.0 2337.4 NONE
17455 39.0 102.0 363 200.0 12576.5 2544.4 NONE
17539 40.0 200.0 300 200.0 11969.3 2740.0 NONE
17653 39.0 200.0 332 200.0 10862.4 2924.4 NONE
17739 40.0 200.0 321 200.0 10288.4 3098.2 NONE
17839 40.0 200.0 328 200.0 9703.9 3261.8 NONE
17953 39.0 200.0 290 200.0 9010.9 3415.6 NONE
18039 39.0 59.0 302 200.0 8986.3 3560.0 NONE
18153 38.0 60.0 276 200.0 8802.8 3695.6 NONE
18239 38.0 59.0 284 200.0 8811.6 3822.7 NONE
18339 37.0 57.0 278 200.0 8785.7 3941.6 NONE
18449 36.0 57.0 277 200.0 8704.3 4052.8 NONE
18539 37.0 57.0 277 200.0 8624.2 4156.6 NONE
18649 36.0 56.0 264 200.0 8365.4 4253.5 NONE
18739 37.0 56.0 268 200.0 8151.1 4343.7 NONE
18839 37.0 54.0 265 200.0 8091.9 4427.6 NONE
18949 200.0 53.0 258 200.0 7488.6 4505.7 NONE
19039 200.0 53.0 260 200.0 7027.9 4369.0 NONE
In HandleAnomalousConditions with OPEN_DOORWAY error code detected
ANOMALY_OPEN_DOORWAY case detected
19046: Top of loop() - calling UpdateAllEnvironmentParameters()
Battery Voltage = 7.90
IRHomingValTotalAvg = 66
19062: gl_LeftCenterCm = 200.00 gl_RightCenterCm = 53.00
gl_LeftCenterCm > gl_RightCenterCm --> Calling TrackRightWallOffset(53)
TrackRightWallOffset(350.0, 0.0, 0.0, 53) called
TrackRightWallOffset: Start tracking offset of 53cm
Msec LCen RCen Front Rear FVar RVar ANOMALYCODE
19181 200.0 52.0 258 200.0 6451.5 4041.0 OPEN_DOORWAY
19281 200.0 52.0 258 200.0 5965.8 3841.7 OPEN_DOORWAY
19391 200.0 53.0 216 200.0 5559.7 3781.3 OPEN_DOORWAY
19481 200.0 53.0 230 200.0 4999.9 3740.4 OPEN_DOORWAY
19590 200.0 53.0 225 200.0 3930.4 3640.2 OPEN_DOORWAY
19681 200.0 52.0 226 200.0 3767.1 3462.4 OPEN_DOORWAY
19781 200.0 54.0 225 200.0 3588.4 3154.9 OPEN_DOORWAY
19890 200.0 53.0 199 200.0 3631.8 2865.5 OPEN_DOORWAY
19981 200.0 54.0 207 200.0 3268.5 2701.6 OPEN_DOORWAY
20090 200.0 54.0 185 200.0 2664.1 2615.2 OPEN_DOORWAY
20181 200.0 54.0 192 200.0 2720.8 2503.5 OPEN_DOORWAY
20281 200.0 53.0 187 200.0 2788.3 2270.9 OPEN_DOORWAY
20389 67.0 52.0 179 200.0 2948.3 2099.2 OPEN_DOORWAY
20481 56.0 52.0 181 200.0 2864.5 2022.7 OPEN_DOORWAY
20589 55.0 53.0 139 200.0 3073.5 2022.7 OPEN_DOORWAY
20681 54.0 52.0 153 200.0 3141.1 1764.2 OPEN_DOORWAY
20781 53.0 54.0 143 200.0 3246.5 1549.5 OPEN_DOORWAY
20890 53.0 56.0 128 200.0 3592.9 1461.6 OPEN_DOORWAY
In HandleAnomalousConditions with ANOMALY_TRACKING_WRONG_WALL error code detected
ANOMALY_TRACKING_WRONG_WALL case detected
20899: Top of loop() - calling UpdateAllEnvironmentParameters()
Battery Voltage = 7.91
IRHomingValTotalAvg = 88
20915: gl_LeftCenterCm = 54.00 gl_RightCenterCm = 56.00
TrackLeftWallOffset(350.0, 0.0, 0.0, 40) called
TrackLeftWallOffset: Start tracking offset of 40cm
Msec LCen RCen Front Rear FVar RVar ANOMALYCODE
21027 51.0 58.0 129 200.0 3400.4 1378.8 ANOMALY_TRACKING_WRONG_WALL
21127 49.0 59.0 130 200.0 3504.3 1209.2 ANOMALY_TRACKING_WRONG_WALL
21235 46.0 59.0 124 200.0 3444.7 1052.8 ANOMALY_TRACKING_WRONG_WALL
21327 44.0 59.0 126 200.0 3404.2 919.8 ANOMALY_TRACKING_WRONG_WALL
21435 43.0 60.0 124 200.0 3333.0 919.8 ANOMALY_TRACKING_WRONG_WALL
21527 43.0 61.0 124 200.0 3335.0 919.8 ANOMALY_TRACKING_WRONG_WALL
21627 47.0 62.0 124 200.0 3269.9 724.5 ANOMALY_TRACKING_WRONG_WALL
21735 49.0 60.0 132 200.0 3214.6 490.4 ANOMALY_TRACKING_WRONG_WALL
21827 49.0 60.0 129 200.0 3141.9 245.9 ANOMALY_TRACKING_WRONG_WALL
21935 49.0 55.0 100 200.0 3154.9 0.0 ANOMALY_TRACKING_WRONG_WALL
22027 48.0 53.0 109 200.0 3097.4 0.0 ANOMALY_TRACKING_WRONG_WALL
22127 49.0 65.0 103 200.0 3034.9 0.0 ANOMALY_TRACKING_WRONG_WALL
22235 49.0 200.0 80 200.0 3087.9 0.0 ANOMALY_TRACKING_WRONG_WALL
22327 51.0 200.0 87 200.0 3034.4 0.0 ANOMALY_TRACKING_WRONG_WALL
22435 49.0 200.0 78 200.0 2958.7 0.0 ANOMALY_TRACKING_WRONG_WALL
22527 46.0 200.0 81 200.0 2885.7 0.0 ANOMALY_TRACKING_WRONG_WALL
22627 44.0 200.0 79 200.0 2785.2 0.0 ANOMALY_TRACKING_WRONG_WALL
22735 42.0 200.0 46 200.0 2743.8 0.0 ANOMALY_TRACKING_WRONG_WALL
22827 40.0 200.0 57 200.0 2629.7 0.0 ANOMALY_TRACKING_WRONG_WALL
22935 40.0 200.0 76 200.0 2545.7 0.0 ANOMALY_TRACKING_WRONG_WALL
23027 50.0 200.0 69 200.0 2446.4 0.0 ANOMALY_TRACKING_WRONG_WALL
23127 60.0 200.0 73 200.0 2336.8 0.0 ANOMALY_TRACKING_WRONG_WALL
23235 65.0 200.0 55 200.0 2142.8 0.0 ANOMALY_TRACKING_WRONG_WALL
```

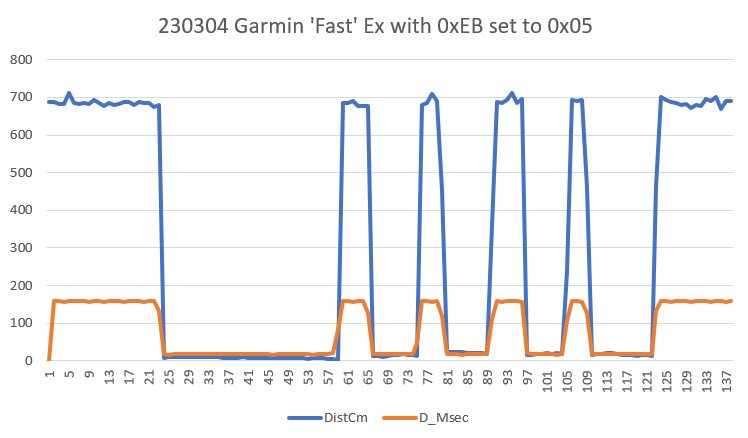

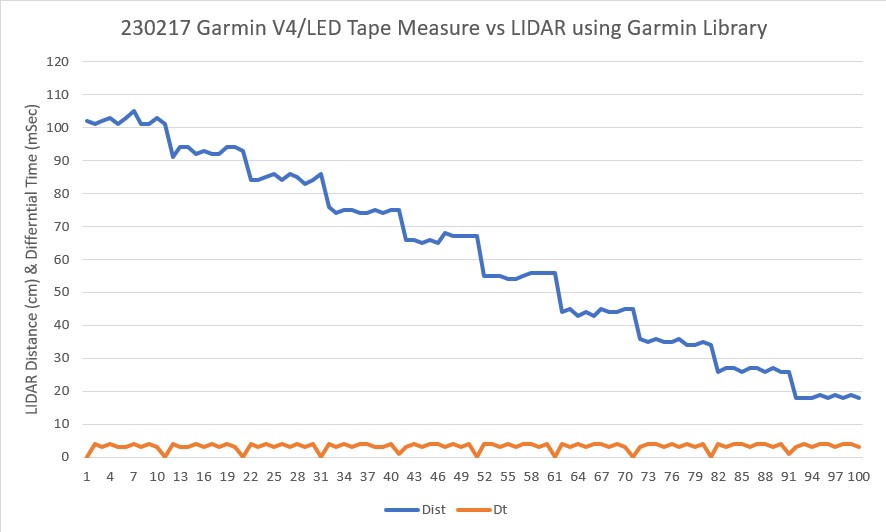

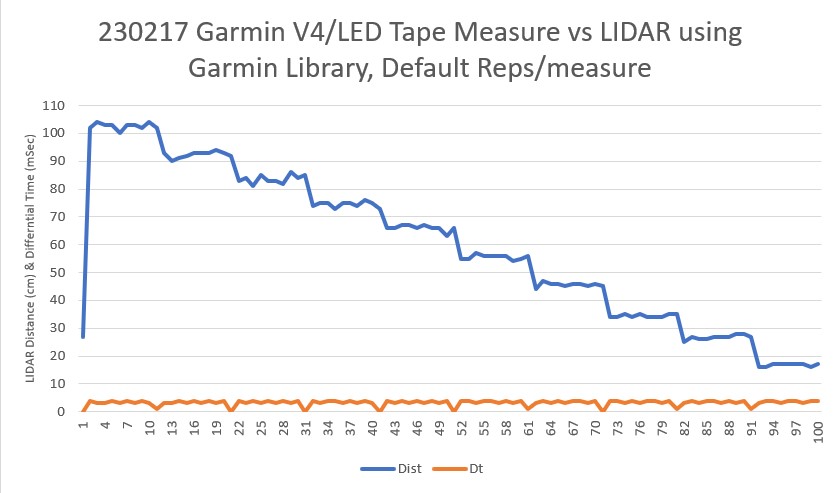

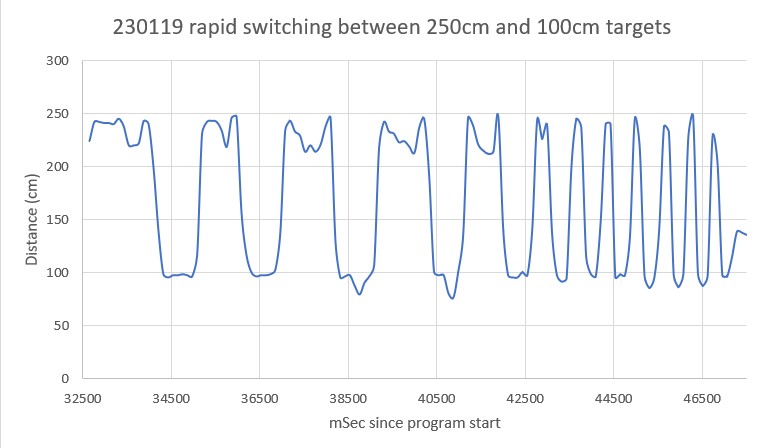

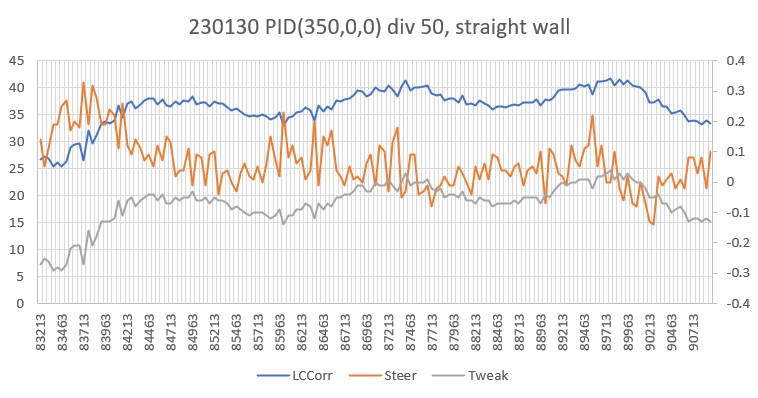

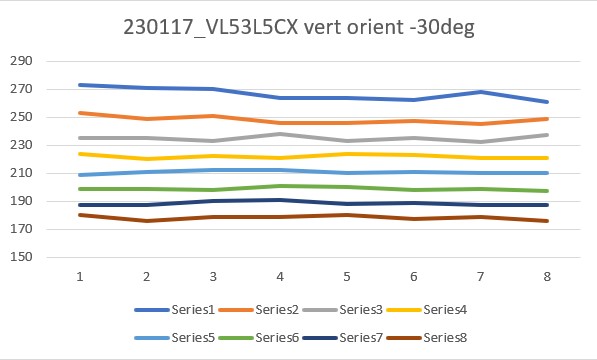

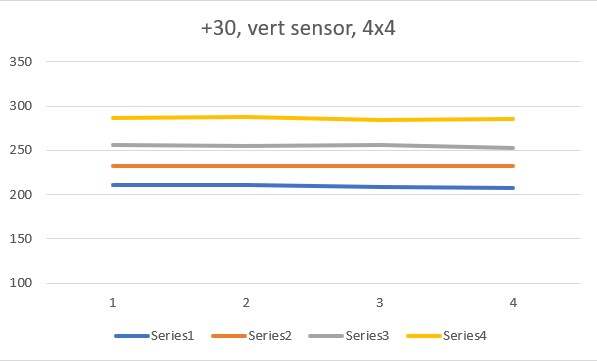

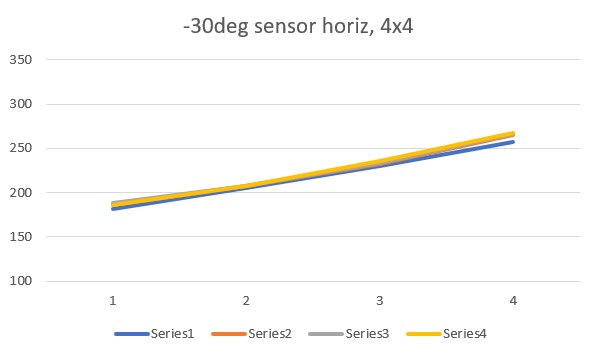

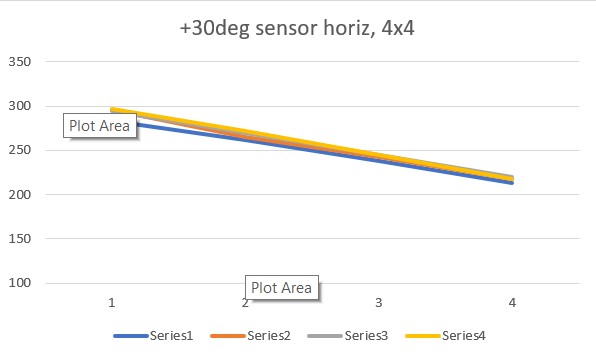

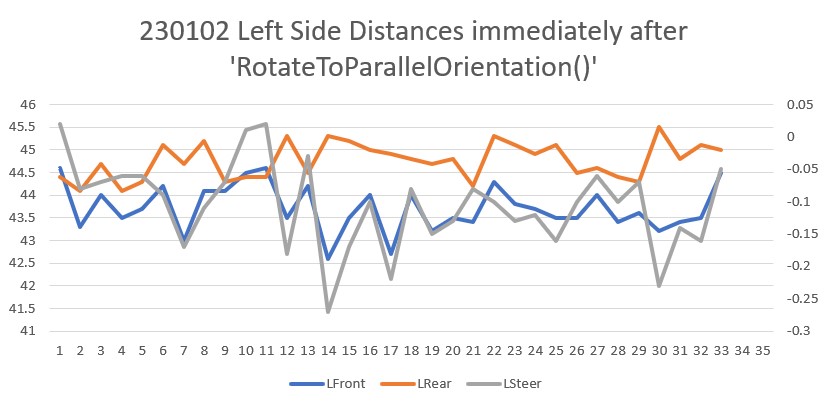

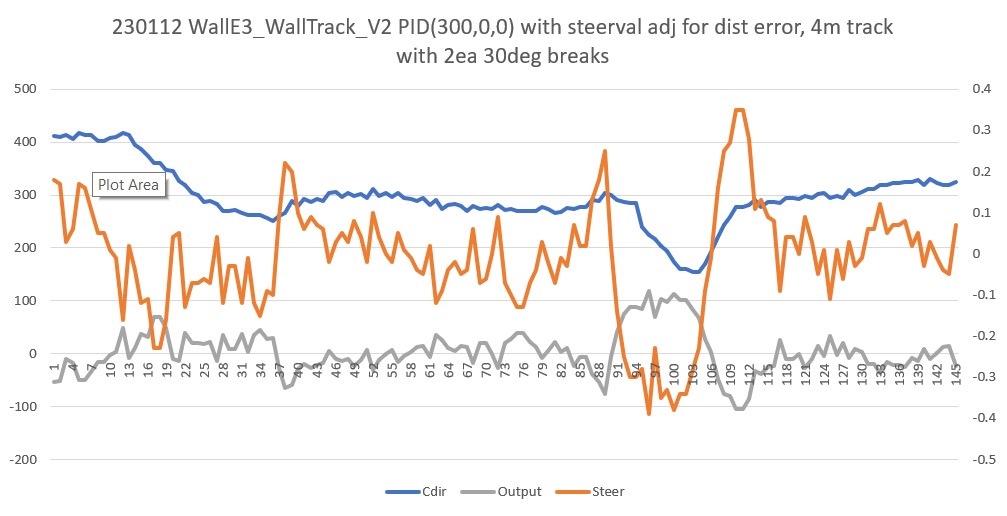

The robot starts the run tracking the left wall at the default offset (40cm), with an anomaly code of ANOMALY_NONE. This continues until the 19 sec mark (19,039 mSec), where the robot detects the ‘open doorway’ condition. This causes the code to re-assess the tracking condition, and it decides to track the right wall, starting at about 19,181 mSec.

During this segment, the AnomalyCode value is OPEN_DOORWAY. This continues until about 20,890 mSec, where the ‘Tracking Wrong Wall’ condition is detected. Note that the actual (physical) ‘open doorway’ condition ended at about 20,389 mSec, when the left-side distance changed from 200 cm (max) to 67 cm, but it took another 0.5 sec for the algorithm to catch the change.

The ‘Tracking Wrong Wall’ detection caused the robot to once again re-assess the tracking configuration, whereupon it changed back to left-wall tracking at 21,027 mSec with the new anomaly code of ‘ANOMALY_TRACKING_WRONG_WALL’. Left-wall tracking continues until the run is terminated at 23,235 mSec. Note that the right-hand wall ends at about 22,235 mSec, where the right-side distance goes to 200 cm (max) and stays there for the rest of the run.

Looking at the above, I believe the fixes I implemented were effective in addressing the ‘wandering robot syndrome’ I observed on the previous run. Next I will remove the debugging printout code, clean things up a bit, and then repeat the last ‘real-world’ run from before.

10 May 2023 Update:

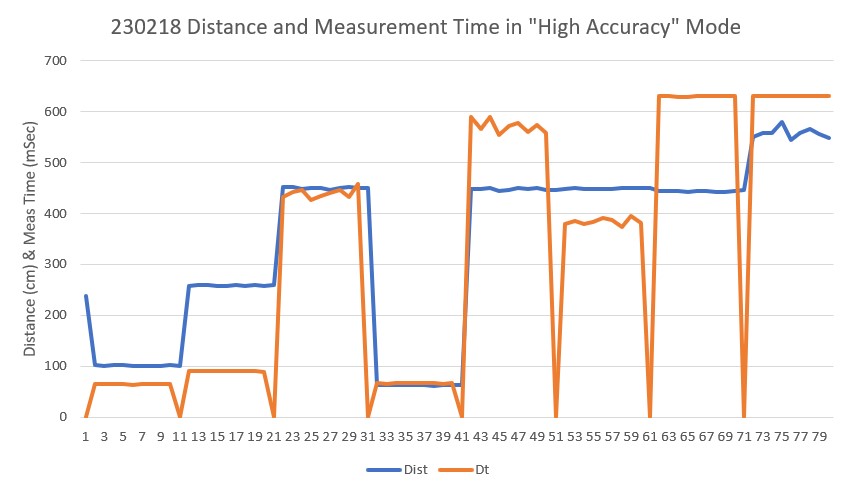

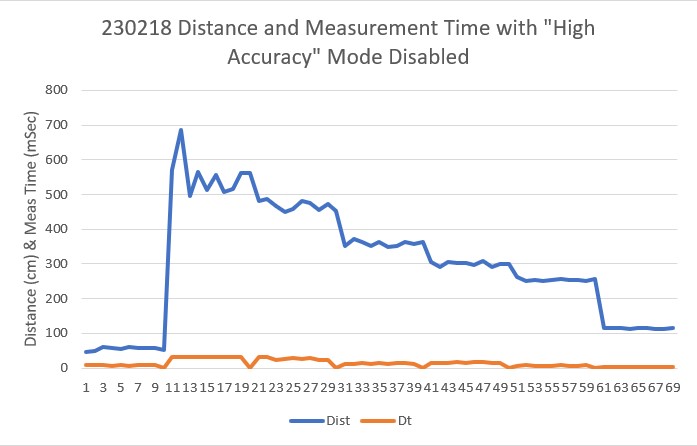

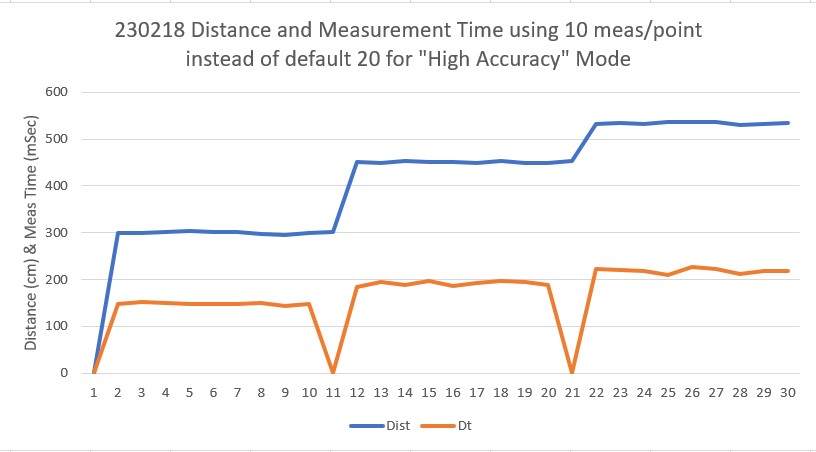

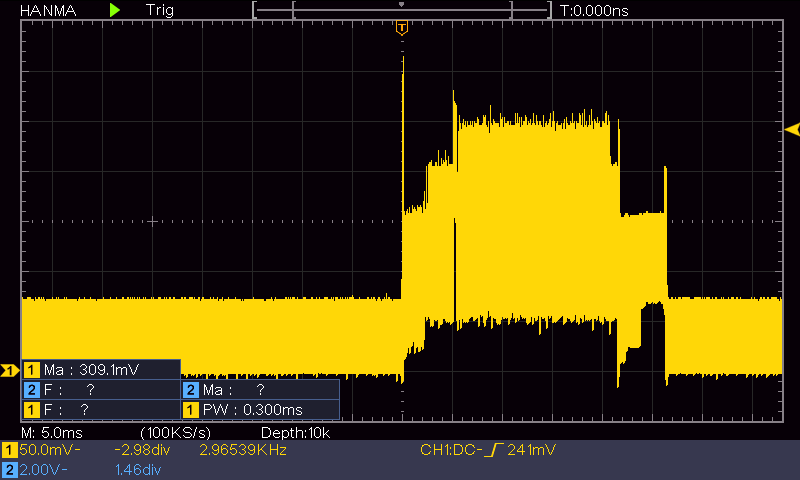

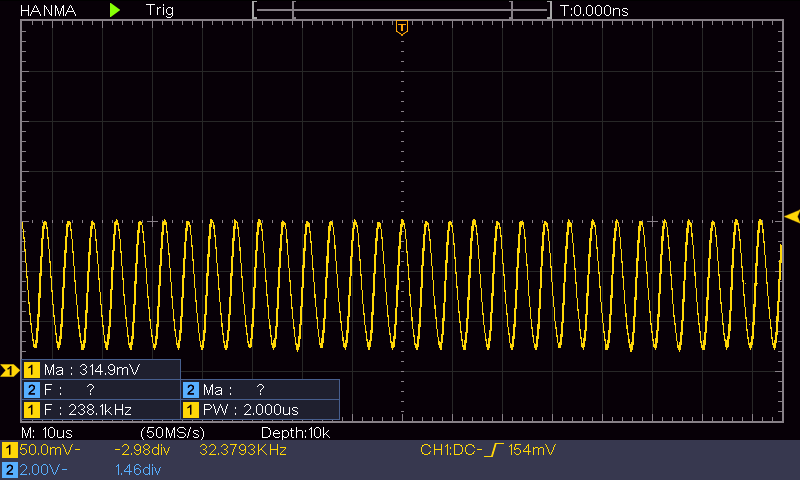

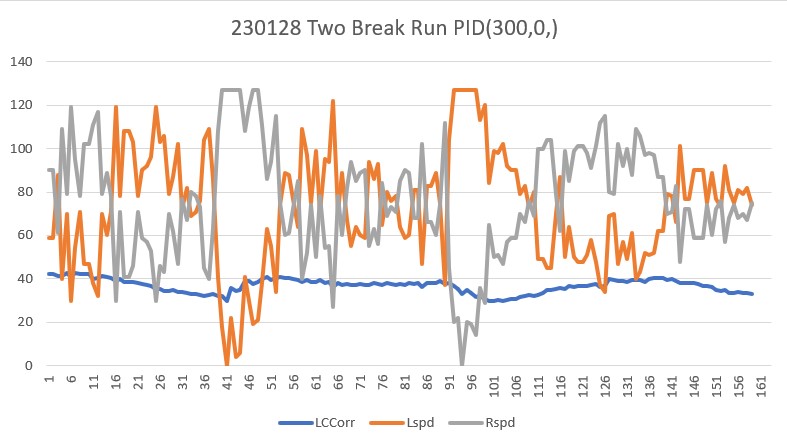

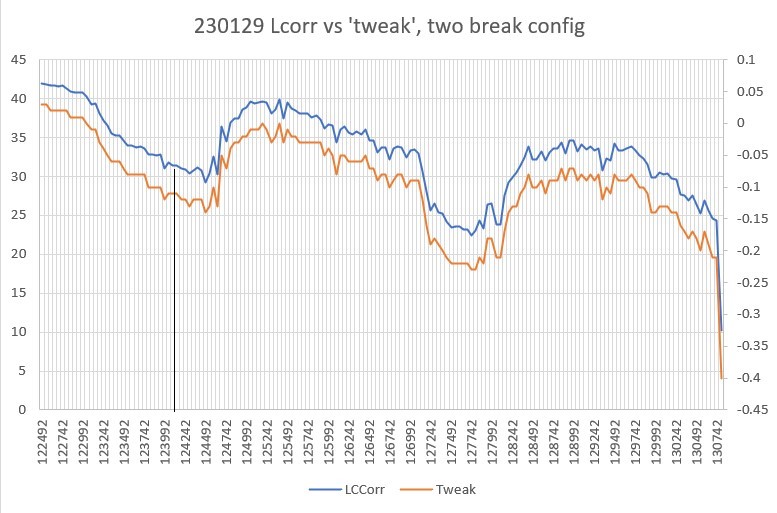

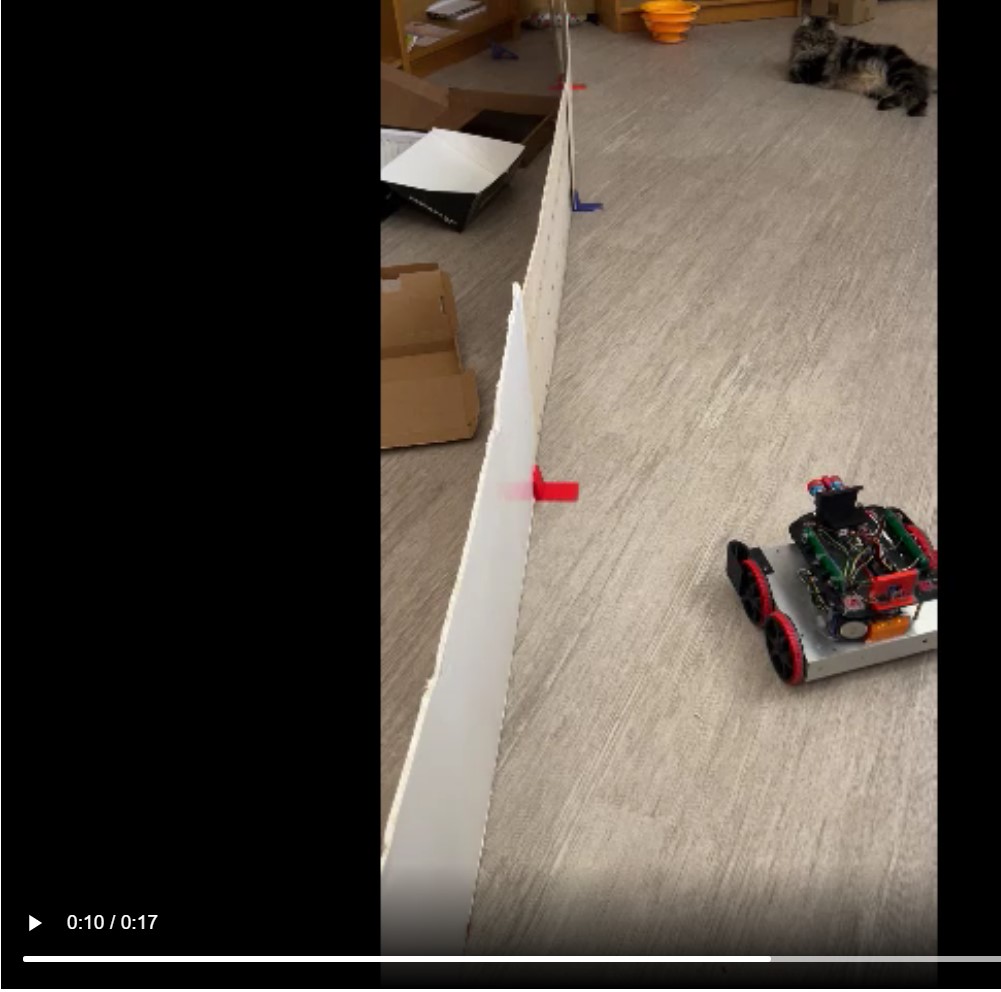

I was definitely having problems with the ‘Open Doorway’ condition, so I wound up back in my ‘indoor range’ (AKA my office) to see if I could work through the issues. It turned out I was not detecting the onset or end of the ‘Open Doorway’ condition properly. I made some changes to the code and to the telemetry output to more thoroughly describe the action, and then ran the test again. The short movie and the telemetry output show the results:

```
14104: End of setup(): Elapsed run time set to 0
0.0: Top of loop() - calling UpdateAllEnvironmentParameters()
Battery Voltage = 8.09
IRHomingValTotalAvg = 36
0.1: gl_LeftCenterCm = 18.10 gl_RightCenterCm = 103.80
TrackLeftWallOffset(350.0, 0.0, 0.0, 30) called
TrackLeftWallOffset: Start tracking offset of 30cm at 0.1
Sec LCen RCen Front Rear FVar RVar HdgDeg WWCnt ACODE TRKDIR
0.2 18.6 103.6 418 34.7 55332 12777 -0.2 0 NONE LEFT
0.3 18.0 103.2 425 34.7 49642 12710 2.4 0 NONE LEFT
0.4 18.6 120.3 422 44.7 44997 12633 9.7 0 NONE LEFT
0.5 18.4 145.2 195 84.3 45966 12393 17.2 0 NONE LEFT
0.6 19.2 148.8 168 89.1 48722 12178 21.2 0 NONE LEFT
0.7 21.6 148.7 156 93.5 52650 11992 23.0 0 NONE LEFT
0.8 22.6 151.4 148 98.4 57430 11829 24.1 0 NONE LEFT
0.9 24.6 153.8 143 103.1 62752 11688 23.6 0 NONE LEFT
1.0 27.7 149.1 145 107.3 68172 11571 21.4 0 NONE LEFT
1.1 29.1 118.0 152 110.7 73316 11480 17.6 0 NONE LEFT
1.2 30.0 93.4 183 104.9 77339 11447 12.0 0 NONE LEFT
1.3 30.2 90.9 390 79.4 77600 11558 4.5 0 NONE LEFT
1.4 31.2 93.6 377 78.9 77591 11704 -4.0 0 NONE LEFT
1.5 31.8 114.1 360 84.1 77125 11847 -12.1 0 NONE LEFT
1.6 32.5 134.0 531 65.3 75618 12108 -16.3 0 NONE LEFT
1.7 32.5 159.2 348 68.3 73482 12372 -14.4 0 NONE LEFT
1.8 31.8 156.6 351 84.5 69956 12573 -10.4 0 NONE LEFT
1.9 30.4 139.5 344 102.4 64824 12713 -5.3 0 NONE LEFT
2.0 29.9 126.4 364 109.0 57663 12837 1.5 0 NONE LEFT
2.1 30.0 123.0 362 131.2 48293 12904 7.0 0 NONE LEFT
2.2 31.4 117.7 360 145.2 36381 12946 8.1 0 NONE LEFT
2.3 32.2 121.0 351 150.5 21565 12982 6.8 0 NONE LEFT
2.4 33.3 126.3 367 154.9 12815 13012 3.0 0 NONE LEFT
2.5 33.8 158.3 318 149.7 12354 13047 -2.4 0 NONE LEFT
2.6 34.4 180.0 313 127.8 11859 13122 -7.0 0 NONE LEFT
2.7 35.3 186.3 301 127.6 11352 13184 -8.3 0 NONE LEFT
2.8 35.0 191.7 300 132.8 10732 13217 -7.1 0 NONE LEFT
2.9 34.8 190.4 297 146.0 10091 13204 -3.6 0 NONE LEFT
3.0 34.5 186.5 297 169.9 9691 13144 -0.8 0 NONE LEFT
3.1 35.5 186.5 293 187.5 9001 13059 -0.3 0 NONE LEFT
3.2 35.8 189.2 283 190.1 8137 12948 -1.7 0 NONE LEFT
3.3 36.4 65.6 282 192.2 7120 12809 -3.1 0 NONE LEFT
3.4 36.6 55.8 274 183.6 6003 12633 -4.6 0 NONE LEFT
3.5 40.5 54.0 266 182.0 4893 12418 -5.6 0 NONE LEFT
3.6 58.7 53.5 261 187.0 3862 12162 -4.7 0 NONE LEFT
3.7 153.5 52.6 259 213.6 3209 11890 0.1 0 NONE LEFT
3.8 152.9 52.7 297 221.9 3095 11585 6.8 0 OPEN_DOORWAY LEFT
3.8: Top of loop() - calling UpdateAllEnvironmentParameters()
Battery Voltage = 7.99
IRHomingValTotalAvg = 59
3.8: gl_LeftCenterCm = 149.80 gl_RightCenterCm = 53.70
TrackRightWallOffset(350.0, 0.0, 0.0, 30) called
TrackRightWallOffset: Start tracking offset of 30cm at 3.8
Sec LCen RCen Front Rear FVar RVar HdgDeg WWCnt ACODE TRKDIR
4.0 112.6 56.7 95 80.5 4015 10868 22.8 0 NONE RIGHT
4.1 109.8 61.5 77 64.7 4866 10491 28.4 0 NONE RIGHT
4.2 116.2 67.0 74 64.9 6511 10001 26.8 0 NONE RIGHT
4.2 123.6 53.8 77 72.8 7878 9365 21.5 0 NONE RIGHT
4.4 112.5 42.2 285 88.2 7594 8563 13.2 0 NONE RIGHT
4.5 111.8 36.9 264 122.8 7212 7594 4.6 0 NONE RIGHT
4.6 121.8 33.5 219 246.5 6877 6757 -6.1 0 NONE RIGHT
4.7 151.5 31.2 217 207.4 6529 5683 -17.7 0 NONE RIGHT
4.8 160.8 32.6 215 240.9 6091 4631 -30.3 0 NONE RIGHT
4.9 143.1 34.5 106 186.6 6396 3288 -36.9 0 NONE RIGHT
5.0 148.2 37.4 108 186.3 6744 3194 -35.5 0 NONE RIGHT
5.1 142.1 38.9 193 195.4 6527 3104 -30.4 0 NONE RIGHT
5.2 141.3 38.8 193 219.0 6313 3063 -22.9 0 NONE RIGHT
5.3 111.4 38.2 192 228.0 6078 3025 -13.7 0 NONE RIGHT
5.4 44.3 38.4 191 250.8 5818 3084 -2.8 0 NONE RIGHT
5.5 35.3 39.9 240 264.0 5565 3303 7.8 0 NONE RIGHT
5.6 36.4 41.8 205 115.4 5305 3257 13.1 0 NONE RIGHT
5.7 38.7 41.0 196 116.8 5061 3219 12.0 0 NONE RIGHT
5.8 39.9 38.2 225 125.1 4847 3181 7.3 0 NONE RIGHT
5.9 40.7 34.4 174 153.5 4663 3142 0.2 0 NONE RIGHT
6.0 41.9 32.1 172 283.5 4493 3460 -9.1 0 NONE RIGHT
6.1 43.5 34.1 171 263.2 4317 3665 -18.2 0 NONE RIGHT
6.2 43.2 141.7 162 264.2 3994 3843 -22.0 0 NONE RIGHT
6.2 42.5 144.0 156 277.9 3578 3988 -20.1 0 OPEN_DOORWAY RIGHT
6.3: Top of loop() - calling UpdateAllEnvironmentParameters()
Battery Voltage = 7.98
IRHomingValTotalAvg = 72
6.3: gl_LeftCenterCm = 41.80 gl_RightCenterCm = 143.50
TrackLeftWallOffset(350.0, 0.0, 0.0, 30) called
TrackLeftWallOffset: Start tracking offset of 30cm at 6.3
Sec LCen RCen Front Rear FVar RVar HdgDeg WWCnt ACODE TRKDIR
6.4 40.4 140.9 138 272.0 2838 4175 -10.6 0 NONE LEFT
6.5 39.8 143.8 138 300.8 2477 4276 -3.9 0 NONE LEFT
6.6 40.8 152.5 139 306.0 2100 4375 -0.1 0 NONE LEFT
6.7 41.6 150.7 131 305.7 1770 4493 -1.3 0 NONE LEFT
6.8 42.6 142.4 123 326.3 1510 4747 -5.4 0 NONE LEFT
6.9 44.2 138.6 126 313.7 1384 4920 -10.0 0 NONE LEFT
7.0 43.3 141.2 118 306.7 1383 5094 -11.6 0 NONE LEFT
7.1 44.2 143.9 111 310.0 1387 5294 -11.6 0 NONE LEFT
7.2 44.1 146.9 113 322.4 1359 5539 -11.0 0 NONE LEFT
7.3 44.4 152.2 108 325.1 1355 5783 -11.3 0 NONE LEFT
7.4 44.6 154.1 100 324.2 1419 5987 -11.2 0 NONE LEFT
7.5 44.5 158.5 97 328.7 1503 6119 -10.5 0 NONE LEFT
7.6 45.0 160.5 92 342.9 1582 6285 -10.5 0 NONE LEFT
7.7 45.3 162.6 86 346.5 1659 6451 -11.1 0 NONE LEFT
7.8 45.7 163.1 80 339.7 1585 6597 -11.7 0 NONE LEFT
7.9 46.9 161.1 64 345.6 1496 6806 -12.9 0 NONE LEFT
8.0 50.4 158.5 52 345.8 1553 7029 -15.2 0 NONE LEFT
8.1 58.5 161.6 48 346.2 1488 7243 -18.9 0 NONE LEFT
8.2 62.5 181.1 49 325.8 1394 7359 -24.4 0 NONE LEFT
8.3 57.7 293.4 56 230.4 1382 7299 -31.8 0 NONE LEFT
8.4 53.7 278.8 51 188.5 1356 7286 -36.8 0 NONE LEFT
8.5 55.1 96.4 46 189.5 1330 7282 -34.6 0 NONE LEFT
8.6 97.5 98.2 42 195.6 1315 7307 -29.3 0 NONE LEFT
8.7 103.3 197.4 38 292.1 1299 7356 -22.3 0 NONE LEFT
```

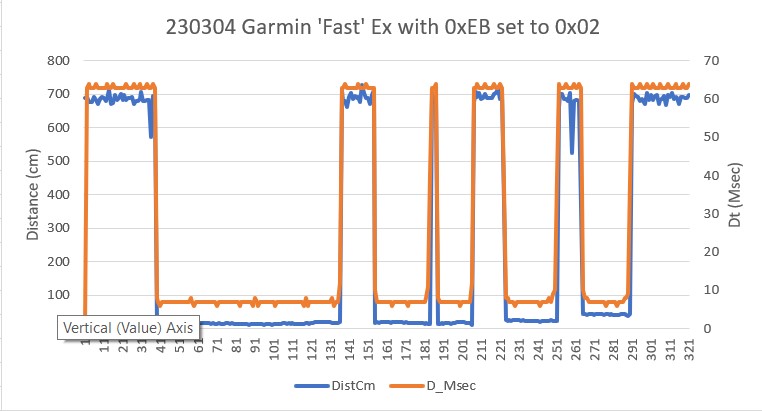

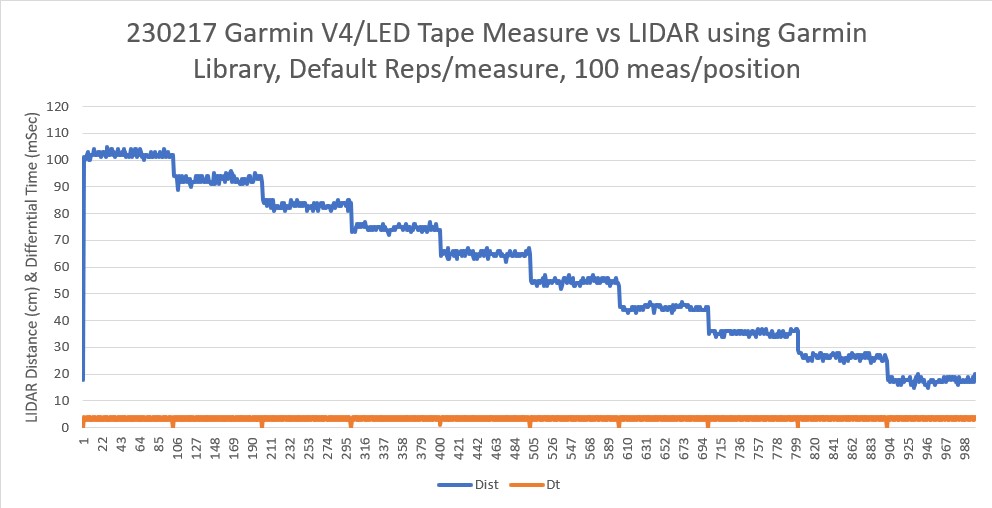

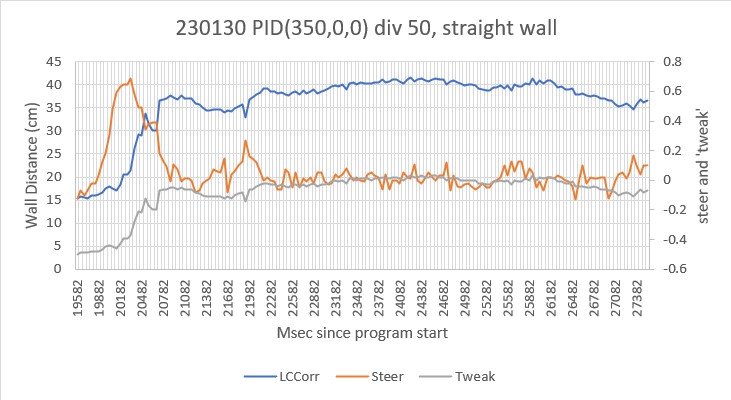

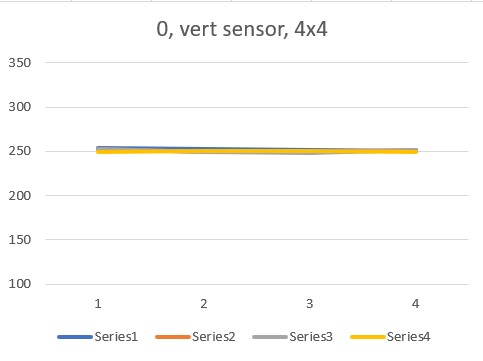

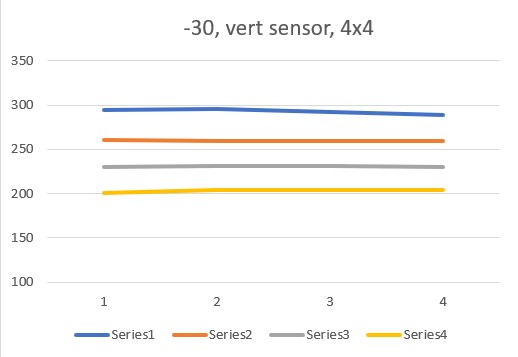

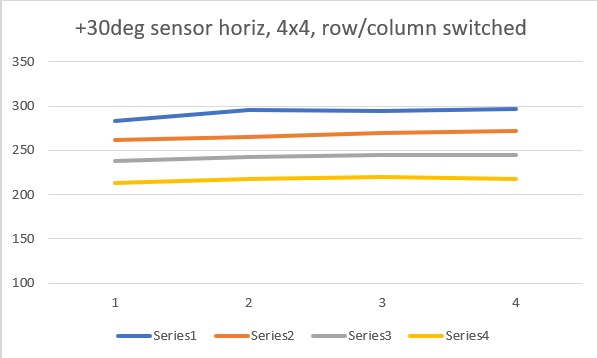

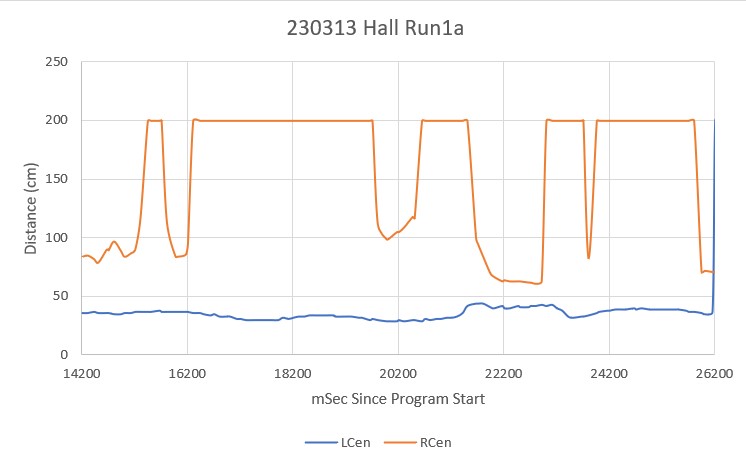

Salient points in the video and telemetry printout:

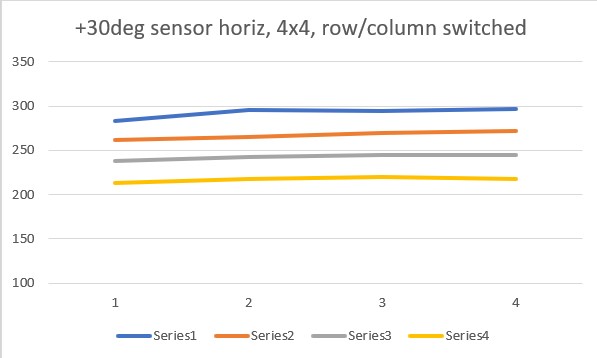

- WallE3 captures and then tracks the desired 30cm offset with pretty decent accuracy up until 3.8 sec, where it encounters the ‘open doorway’ on the left. This results in an ‘OPEN_DOORWAY’ anomaly report, which causes the TrackLeftWallOffset() function to exit, which in turn sends the program back to the top of loop().

- The ‘top of loop’ code reevaluates the tracking condition, and because the left distance is well over 100cm and the right distance is about 50cm, it decides to track the right wall instead.

- WallE3 tracks the right wall from 4.0 to 6.2 sec, where the right wall ends and the robot again detects an ‘Open Doorway’ condition, which forces loop() to start over. This time the right distance is about 143cm and the left distance is about 42cm, so the code chooses the left wall for tracking.

- Left wall tracking continues from 6.4 to 8.7sec – the end of the run.
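The ‘top of loop’ decision boils down to the log line `gl_LeftCenterCm > gl_RightCenterCm --> Calling TrackRightWallOffset(...)` seen in the earlier runs: follow whichever wall is nearer. Here is a host-side C++ sketch of just that comparison. The `Wall` enum and `ChooseWallToTrack()` are hypothetical names, and sending a tie to the left wall is my assumption. It is exercised with the distances logged at 0.1, 3.8 and 6.3 sec in this run.

```cpp
#include <cassert>

enum class Wall { Left, Right };

// Hypothetical sketch of the top-of-loop choice: track the nearer wall.
// Assumption: a tie goes to the left wall.
Wall ChooseWallToTrack(double leftCenterCm, double rightCenterCm) {
    assert(leftCenterCm >= 0.0 && rightCenterCm >= 0.0);
    return (leftCenterCm > rightCenterCm) ? Wall::Right : Wall::Left;
}
```

With the logged values this picks LEFT at 0.1 sec (18.1 vs 103.8 cm), RIGHT at 3.8 sec (149.8 vs 53.7 cm) and LEFT again at 6.3 sec (41.8 vs 143.5 cm), matching the TRKDIR column in the telemetry.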

Stay tuned,

Frank