Posted 14 February 2020

Happy (American) Valentine’s Day! In my last post, I described my plan to use Wall-E2’s new relative heading super power to find the relative heading parallel to the nearest wall. I ended that post with “…and not all that hard to program, either”. Well, this turned out to be a bit of an exaggeration, as things weren’t quite as easy as I first thought; the interaction of the physics of the robot and the time scales associated with ping measurements complicated things a bit.

Background:

For some time now I have been working on ways to enhance Wall-E2’s autonomous wall-tracking ability. Wall-E2 can track walls fairly well, but lacks the ability to track a wall at a specified stand-off distance. Currently, tracking occurs at whatever distance Wall-E2 first detects the nearest wall. While this isn’t terrible, I wanted to do better.

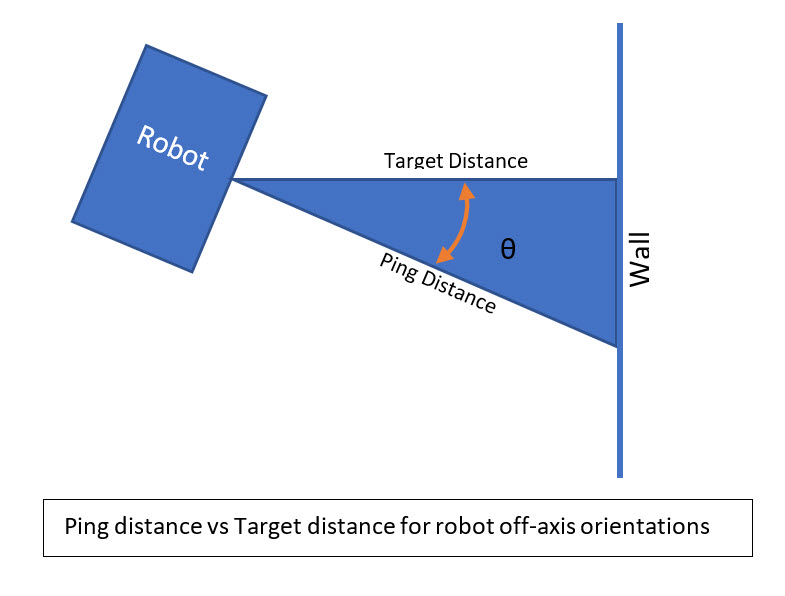

Unfortunately, the way in which the measured ‘ping’ distance to the nearest wall interacts with the relative orientation of the robot with respect to that wall makes it almost impossible to determine the actual offset distance, and therefore what to do to maintain a constant offset distance. As the diagram above shows, when the robot makes a turn, the measured distance to the wall will change just due to the orientation change, without the robot’s actual offset distance changing at all.

Without having some idea of the angle theta in the above diagram, making a judgement of where the robot is relative to the target offset distance is difficult, if not impossible. This situation was the impetus for adding the MPU6050 Inertial Measurement Unit (IMU) to Wall-E2’s list of super powers. The general idea was that knowledge of relative headings would allow Wall-E2 to make accurate heading-controlled turns without relying solely on timing. After a lot of work to eliminate RFI/EMI problems associated with the Pololu metal-geared motors on Wall-E2, I’m happy to say that the MPU6050 is now quite stable, and making turns of just a few degrees is quite possible.
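To make the orientation effect concrete, here is a minimal Python sketch of the idealized geometry. It assumes theta is the angle between the robot's heading and the wall, that the ping sensor points straight out the side of the robot, and that the sonar behaves like a thin ray (real ultrasonic sensors have a fairly wide beam, so this probably overstates the effect). The function name is hypothetical and is not part of Wall-E2's firmware.

```python
import math

def measured_ping_distance(offset_cm, theta_deg):
    """Idealized side-sensor reading for a robot rotated theta_deg away from parallel.

    Assumes a thin-ray sensor mounted perpendicular to the robot's long axis,
    so the beam meets the wall at an oblique angle whenever theta is non-zero.
    """
    return offset_cm / math.cos(math.radians(theta_deg))

if __name__ == "__main__":
    # Same true offset (30 cm), different orientations.
    for theta in (0, 10, 20, 30):
        print(f"theta={theta:2d} deg -> {measured_ping_distance(30.0, theta):.1f} cm")
```

Under these assumptions a robot sitting exactly 30 cm from the wall but rotated 30 deg would read about 34.6 cm, and the reading alone cannot say whether the robot is too far out or merely turned.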

However, acquiring and then maintaining a particular offset distance from the nearest wall is still not straightforward. Back in early December last year I demonstrated the ability to acquire and then maintain a constant offset distance, but only if the robot started out reasonably parallel to the wall. If the robot was oriented toward or away from the wall by more than a few degrees, it would not work. So I needed to find a way to first orient the robot parallel to the nearest wall at any distance, so that my current acquisition & tracking algorithm would work successfully.

The basic idea behind finding the parallel heading is that when the robot is turned through a forward arc and the measured ‘ping’ distance decreases and then starts increasing, the robot’s relative heading at this inflection point (the point of minimum distance) is the desired parallel heading. If the distance instead increases from the start of the turn, then the robot started out either parallel to or facing away from the wall. In either case, reversing the turn back toward the wall will cause the measured distance to decrease to a minimum and then increase again. As in the first case, the heading at the point at which the measured distance starts to increase is the desired parallel heading.
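The search logic described above can be sketched offline in Python. This is only an idealized illustration: it assumes a noise-free thin-ray sensor, uses a made-up sign convention (negative headings angled toward the wall, positive away, 0 deg parallel), and both function names are hypothetical. It is not the code running on the robot.

```python
import math

def simulate_sweep(offset_cm, start_deg, stop_deg, step_deg=2.0):
    """Return (relative_heading, distance) pairs for an idealized sweep.

    Headings are measured from the true parallel heading (0 deg), which the
    robot itself does not know in advance.
    """
    n = int(round(abs(stop_deg - start_deg) / step_deg))
    direction = 1.0 if stop_deg >= start_deg else -1.0
    samples = []
    for i in range(n + 1):
        heading = start_deg + direction * i * step_deg
        samples.append((heading, offset_cm / math.cos(math.radians(heading))))
    return samples

def heading_at_turnaround(samples):
    """Return the heading where distance stops decreasing and starts increasing.

    Returns None if the distance rises before it has ever fallen, which means
    the sweep started parallel to or facing away from the wall and the turn
    should be reversed.
    """
    decreasing_seen = False
    for (h_prev, d_prev), (_, d_cur) in zip(samples, samples[1:]):
        if d_cur < d_prev:
            decreasing_seen = True
        elif d_cur > d_prev:
            return h_prev if decreasing_seen else None
    return None

if __name__ == "__main__":
    print(heading_at_turnaround(simulate_sweep(30.0, -30.0, 30.0)))  # starts angled in
    print(heading_at_turnaround(simulate_sweep(30.0, 10.0, 40.0)))   # starts angled out
    print(heading_at_turnaround(simulate_sweep(30.0, 40.0, -30.0)))  # reversed turn
```

With clean data a single rising sample is enough to call the minimum. The real robot cannot be that trusting, which is where the ‘gotchas’ come in.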

Although the basic idea as described above is very straightforward, as usual there are some ‘gotchas’ in the actual implementation:

In order to minimize heading overshoot due to the robot’s mass and angular momentum, the parallel heading search turns must be performed at lower-than-normal speeds. After some experimentation I settled on a turn rate of about 60 deg/sec. With the robot starting with an angle-in orientation of about 30 deg, this means that it takes about 1 second to sweep through to an angle-out orientation of about 30 deg. With the Arduino UNO setup I’m using for the tests, I was getting distance measurements about every 30-50 mSec, so about 20 to 33 measurements/sec, or roughly one measurement for every 2-3 degrees of rotation.
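The rate arithmetic is easy to check with a few lines of Python, using the approximate turn rate and ping periods quoted above. The function name is just for illustration.

```python
def sweep_sampling(turn_rate_dps, ping_period_ms):
    """Return (measurements per second, degrees turned per measurement)."""
    per_second = 1000.0 / ping_period_ms
    return per_second, turn_rate_dps / per_second

if __name__ == "__main__":
    for period_ms in (30, 50):
        per_s, deg_per = sweep_sampling(60.0, period_ms)
        print(f"{period_ms} ms period: {per_s:.1f} measurements/s, "
              f"{deg_per:.1f} deg per measurement")
```

At 60 deg/sec this works out to between 1.8 and 3 degrees of rotation per measurement, so a 60 deg sweep yields only about 20 to 33 distance readings in total.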

The lower turn rate significantly reduces the rate at which the ‘ping’ distance changes per unit time, making it much harder to detect the distance inflection point. In effect, the lower turn rate flattens the change in distance from one measurement to the next. At 20-33 measurements/sec and only a few cm change from max on one side to max on the other, a lot of identical measurements are returned.

My initial cut at addressing the above issue was to space the ping measurements further apart in time, thereby increasing the distance change between successive measurements. After trying this (using an ‘elapsedMillisec’ variable) I realized that an equivalent method would be to simply increase the size of the inflection detection window (the number of times the ping measurement must be on the ‘other side’ of the inflection point in order to qualify as a valid inflection). After some experimentation, I arrived at a value of 20.
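One way to picture the detection window is the following Python sketch. It is a guess at the general shape of such a detector, not the actual Wall-E2 code: it tracks the running minimum and only declares an inflection once `window` readings have come in above that minimum. Treating identical readings as neither a hit nor a reset is an assumption made here to cope with the flat bottom of the curve.

```python
def detect_inflection(distances, window=20):
    """Return the index of the minimum reading once it is confirmed, else None.

    The minimum is confirmed when `window` readings above it have arrived
    since it was last updated. Readings equal to the minimum leave the count
    unchanged; a new lower reading restarts the count.
    """
    if not distances:
        return None
    min_index = 0
    hits = 0
    for i in range(1, len(distances)):
        if distances[i] < distances[min_index]:
            min_index = i   # still approaching the wall-normal direction
            hits = 0
        elif distances[i] > distances[min_index]:
            hits += 1       # reading is on the far side of the minimum
            if hits >= window:
                return min_index
    return None

if __name__ == "__main__":
    # Whole-centimeter readings, with a repeated value at the bottom.
    readings = [40, 38, 36, 34, 32, 30, 30, 31, 32, 33, 34]
    print(detect_inflection(readings, window=3))
    print(detect_inflection(readings, window=20))
```

A small window reacts quickly but is easily fooled by a single noisy reading, while a large one needs the robot to turn well past parallel before it commits, so the heading recorded at the minimum matters more than the heading at the moment of detection.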

For some reason, it was much easier to find a good parallel heading value if the robot started out pointed toward the near wall. If it started out pointed away from the wall, the robot often stopped well short of or well past the actual parallel heading. Eventually I developed a 4-turn process for this case to really nail down the parallel heading. Here are some short videos demonstrating the algorithm.

Now that I can reliably determine the relative heading that orients the robot parallel to the nearest wall, I should be able to marry this capability with my already-developed algorithm for acquiring and maintaining a specific offset distance.

20 February 2020 Update:

So I combined the ‘find parallel heading’ feature with my already-existing angle-based tracking algorithm, and this worked fairly well. Here’s a short video demonstrating the technique:

In the above video, the blue painter’s tape strips are marked every 10 cm, with a double-width mark at 30 cm (the desired offset distance). As the video shows, the robot first determines an approximate parallel heading, and from there starts the normal angle-based tracking algorithm.

Next, I tried an ‘enhancement’ to the above by having the robot move toward the wall on a 30-45 deg ‘cut’ from the parallel heading, and then turn back to parallel at the desired offset distance. As the following video shows, this didn’t turn out so well. If the robot doesn’t start out exactly parallel, then the ‘cut’ is either too steep or too shallow, resulting in a too-early or too-late turn back to the parallel heading.
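A rough Python illustration of why the ‘cut’ is so sensitive to the starting heading: if the estimated parallel heading is off by some error, the true approach angle is the cut plus that error, and the distance closed toward the wall per cm driven goes as the sine of that angle. The function name and the sign convention (positive error = estimate already angled toward the wall) are assumptions for this sketch, and it says nothing about how the turn-back point is actually decided on the robot.

```python
import math

def closing_per_cm_travelled(cut_deg, parallel_error_deg=0.0):
    """Distance closed toward the wall per cm driven along the cut.

    cut_deg is measured from the *estimated* parallel heading, which is off
    from the true parallel heading by parallel_error_deg.
    """
    return math.sin(math.radians(cut_deg + parallel_error_deg))

if __name__ == "__main__":
    for err in (-10, 0, 10):
        rate = closing_per_cm_travelled(30.0, err)
        print(f"30 deg cut, {err:+d} deg heading error: {rate:.2f} cm closed per cm driven")
```

For a 30 deg cut, a heading error of only 10 deg either way moves the closing rate from about 0.34 to about 0.64, nearly a factor of two.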

So it looks like the ‘find parallel then start tracking’ approach works pretty well, but the ‘find parallel then drive to offset on a cut then back to parallel’ approach hasn’t been very successful.

27 February 2020 Update:

After thinking about the difficulties I was encountering with my ‘FindParallel’ algorithm, I realized that the robot was often overshooting the parallel orientation because small aberrations in ‘ping’ distance measurements caused the ‘hit counter’ to reset to zero in the middle of an otherwise perfect arc of distance values. The ‘hit counter’ is incremented each time the newest ‘ping’ distance measurement continues the current trend, and is reset to zero whenever the newest ping measurement breaks the trend. When the hit counter exceeds a preset level, the parallel condition is considered to be detected. I thought I might be able to improve performance by making the algorithm a little more tolerant of such aberrations. So, rather than resetting the ‘hit counter’ to zero on a trend break, I changed the algorithm to decrement it by a set amount. This markedly improved performance, as shown in the following videos.
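The difference between the two counter policies can be sketched in Python as follows. The threshold of 20 and the penalty of 2 are illustrative assumptions (the actual decrement amount isn't given here), the function name is hypothetical, and the input sequence is made up: 15 good readings, one glitch, then more good readings.

```python
def samples_until_detect(trend_ok, threshold, penalty=None):
    """Return the 1-based sample number at which the hit counter exceeds threshold.

    trend_ok:  iterable of booleans, True when the newest ping continues the trend.
    penalty:   None resets the counter to zero on a trend break (old behavior);
               an integer subtracts that amount instead (new behavior).
    Returns None if the threshold is never exceeded.
    """
    hits = 0
    for n, ok in enumerate(trend_ok, start=1):
        if ok:
            hits += 1
        elif penalty is None:
            hits = 0
        else:
            hits = max(0, hits - penalty)
        if hits > threshold:
            return n
    return None

if __name__ == "__main__":
    # One aberrant reading in the middle of an otherwise clean run.
    readings = [True] * 15 + [False] + [True] * 30
    print("reset:    ", samples_until_detect(readings, threshold=20))
    print("decrement:", samples_until_detect(readings, threshold=20, penalty=2))
```

For this made-up sequence the reset policy doesn't trigger until sample 37, while the decrement policy triggers at sample 24. At a couple of degrees per sample that is a sizable difference in how far past parallel the robot turns.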

There are four sections in the above video. In the first clip, the robot starts out pointed away from the wall and outside the desired 30 cm offset. The ‘FindParallel’ algorithm executes, and the robot then approaches and tracks the wall at the desired 30 cm offset. The next three clips show the other starting conditions: outside the 30 cm offset and pointed toward the wall, then inside the 30 cm offset pointed away from the wall, and finally inside the offset pointed toward the wall. In each case, the robot successfully acquires a reasonably parallel heading and then acquires and tracks the 30 cm offset distance.

Stay tuned!

Frank