Posted xx April 2017

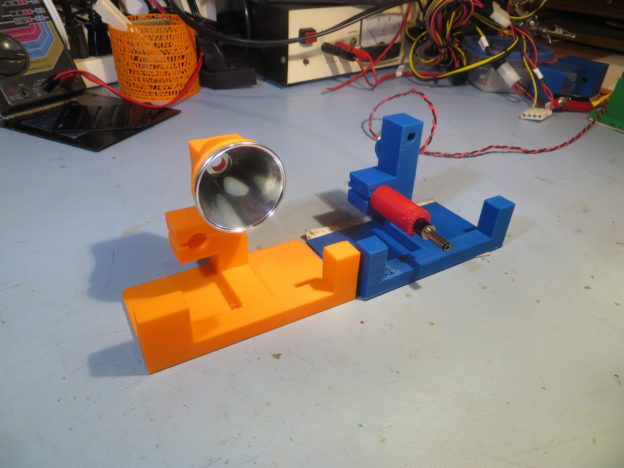

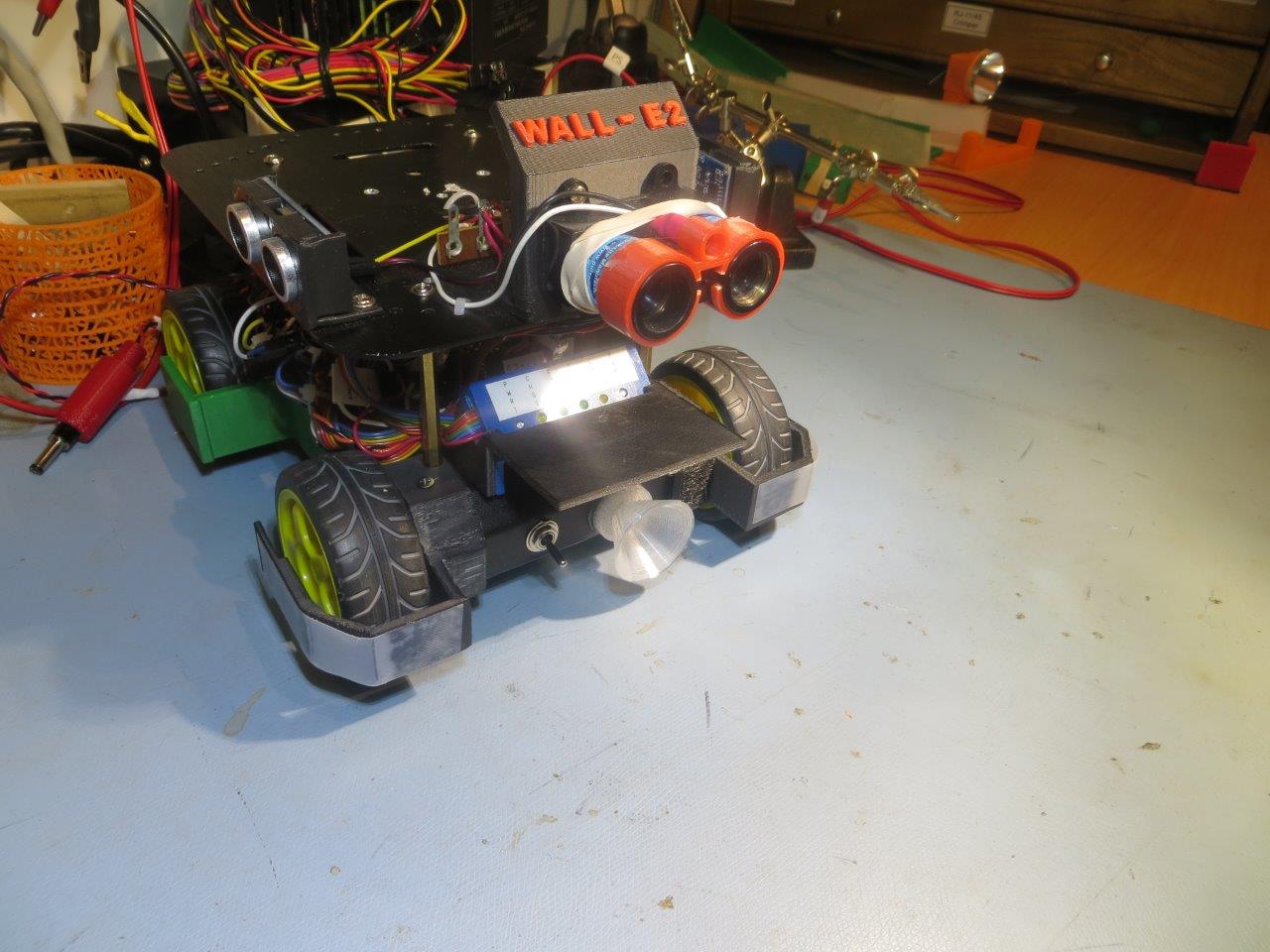

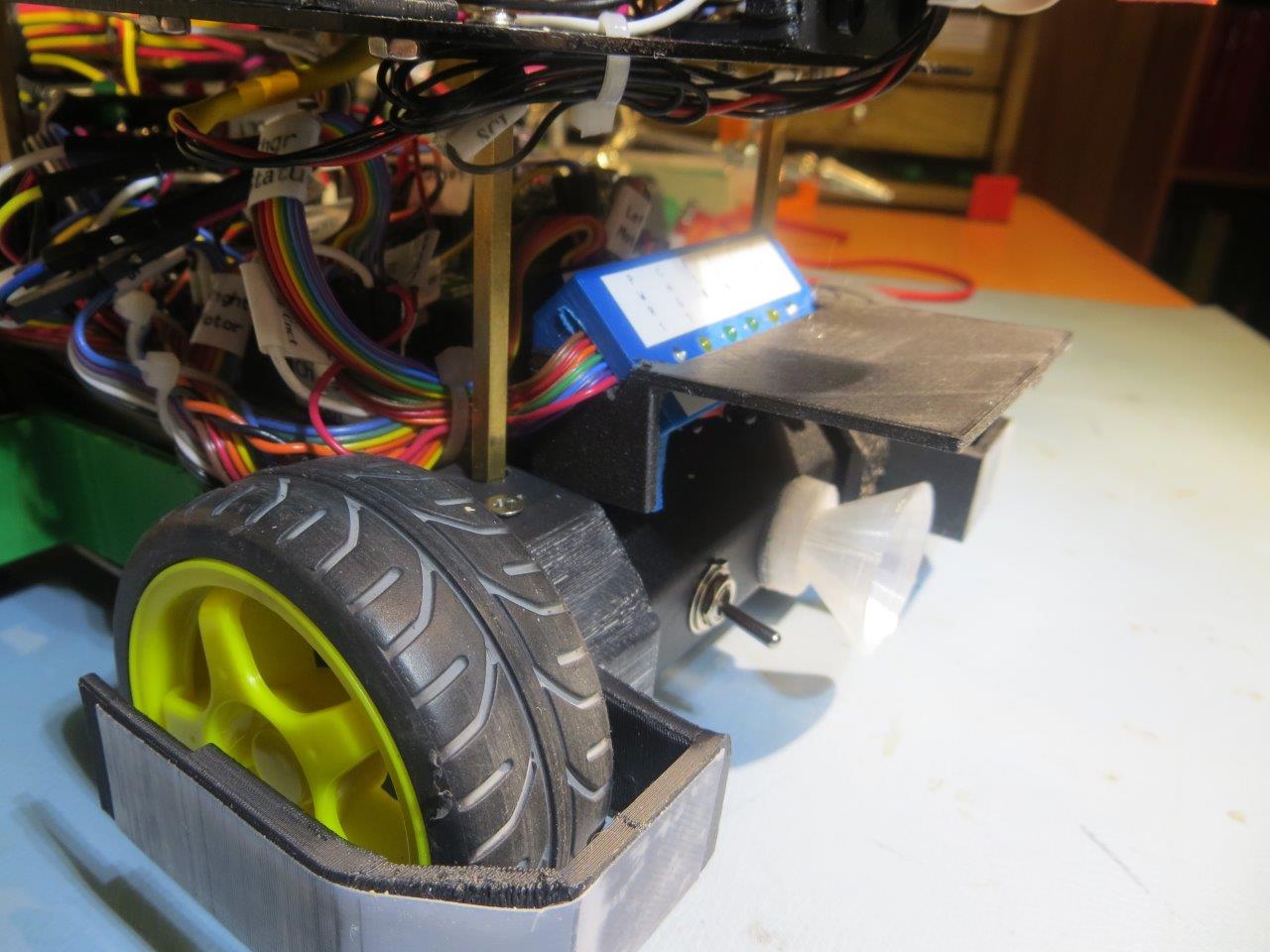

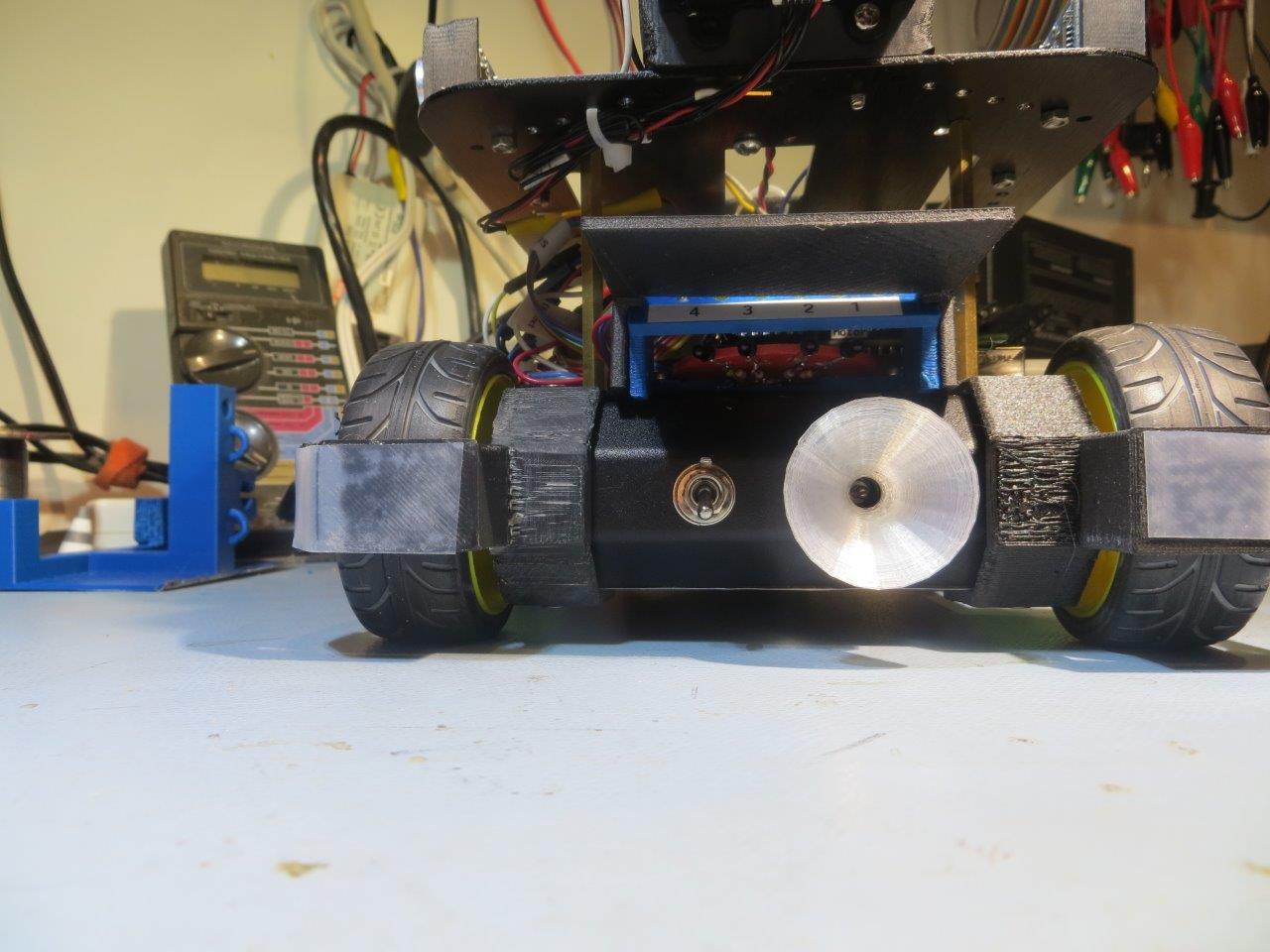

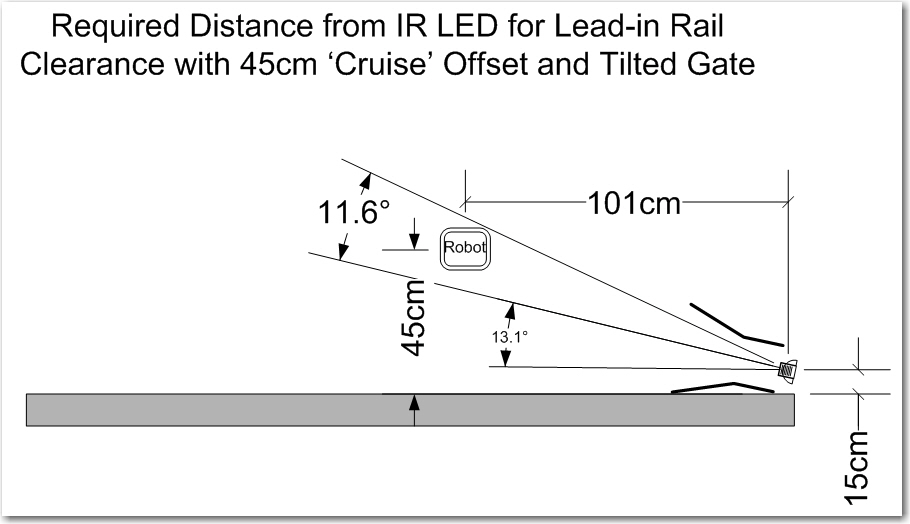

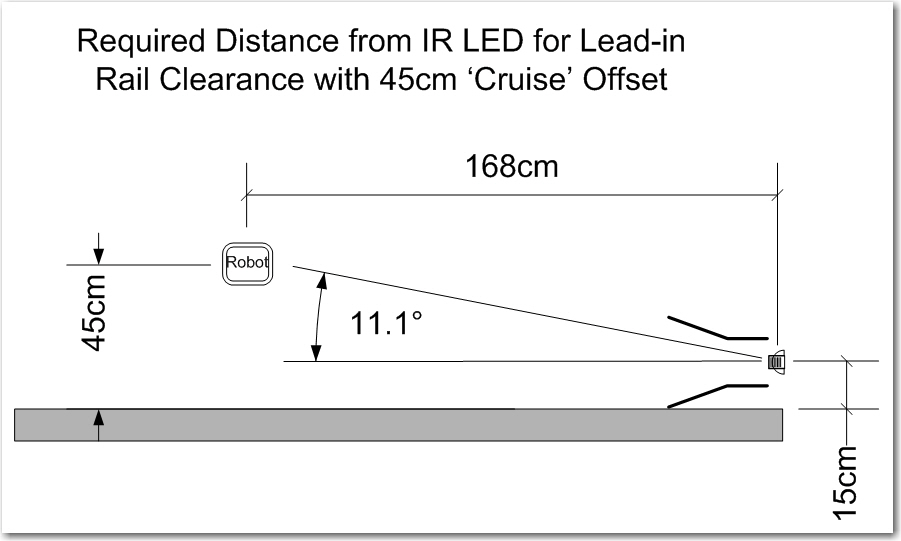

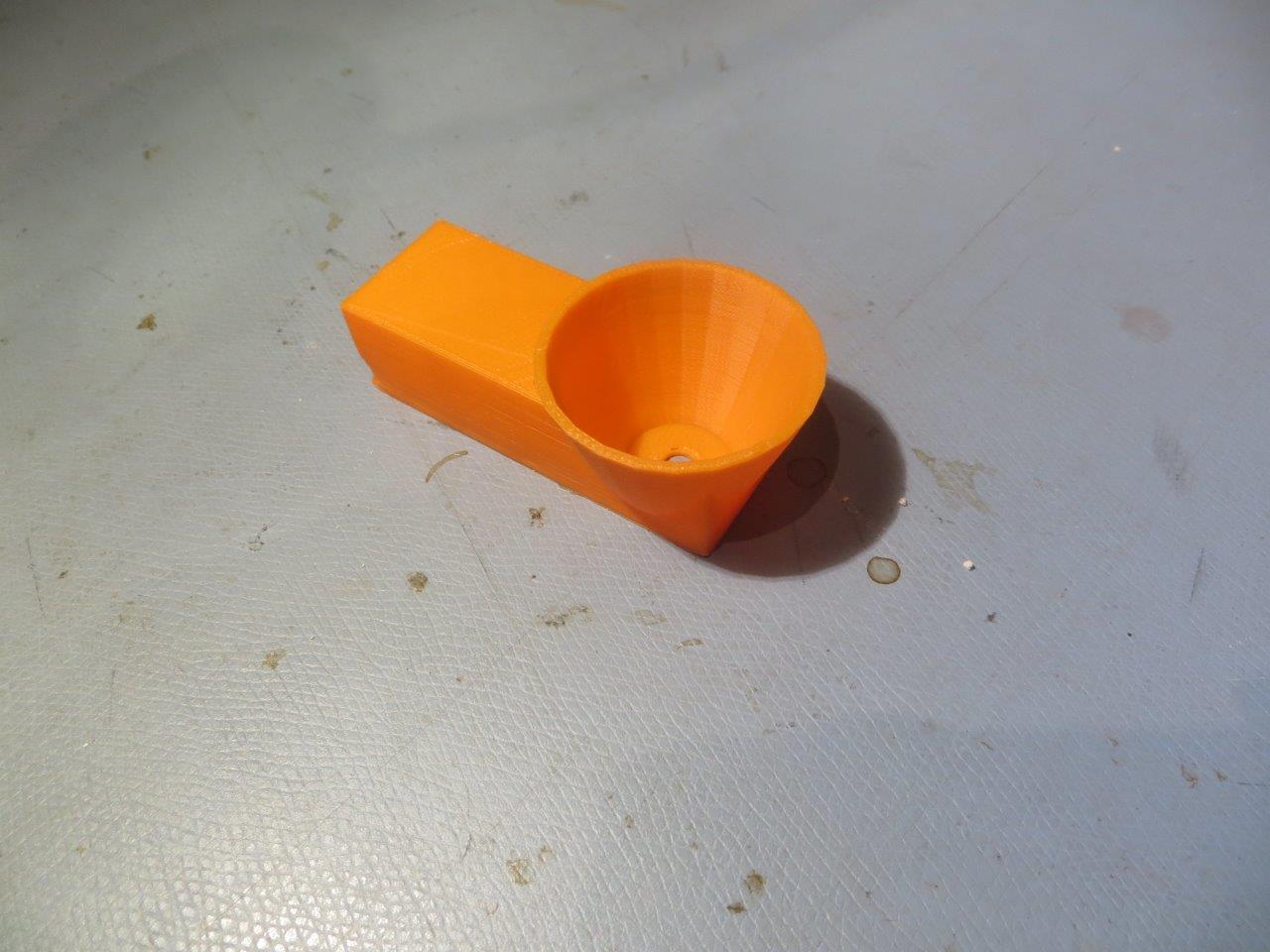

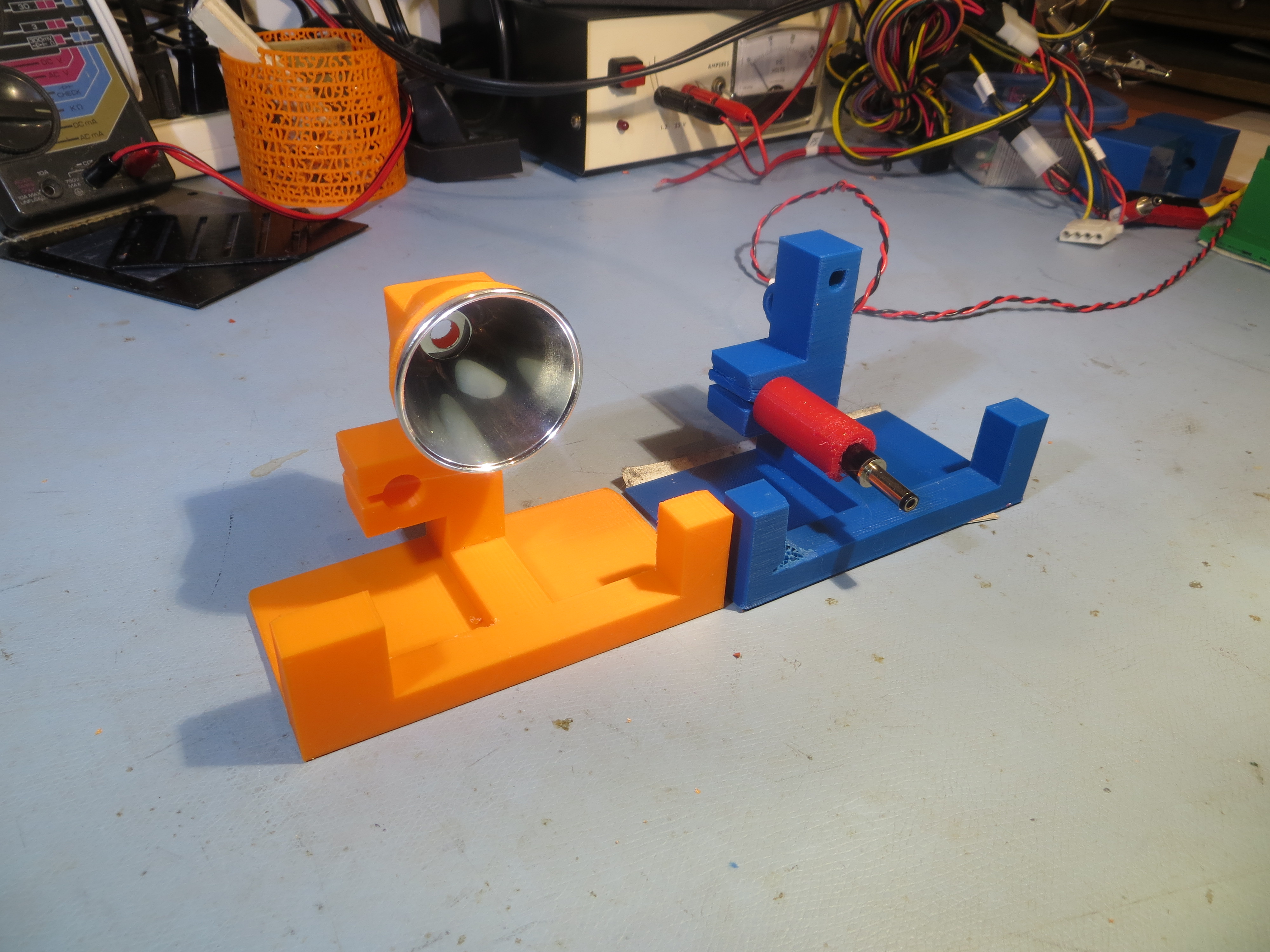

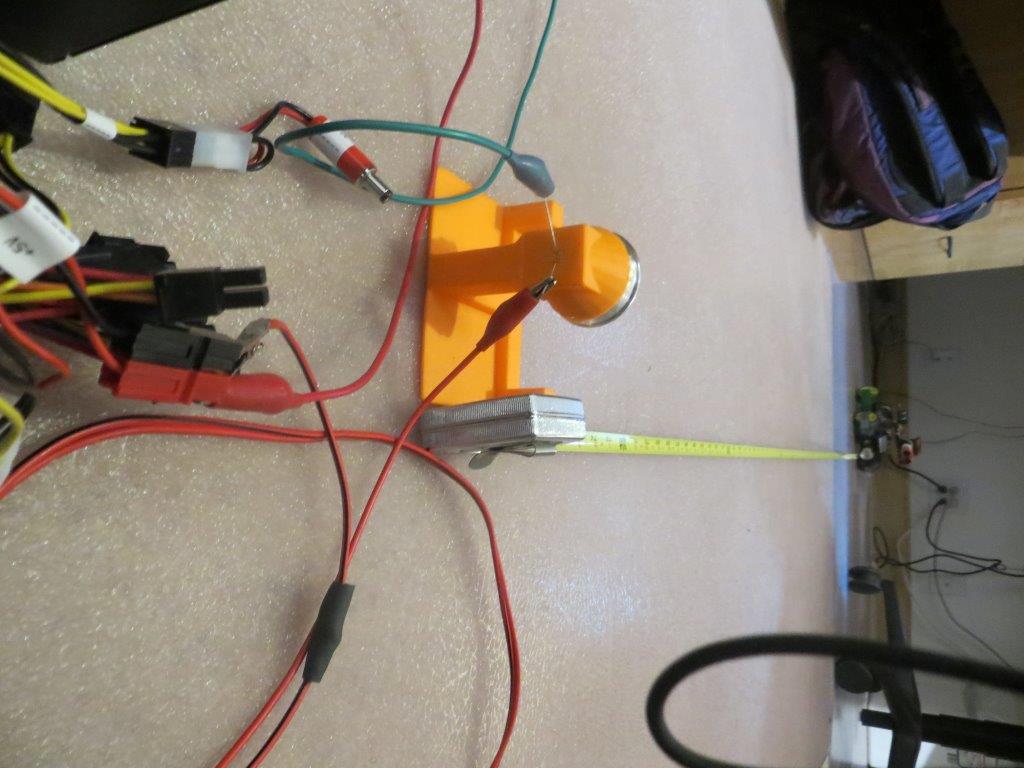

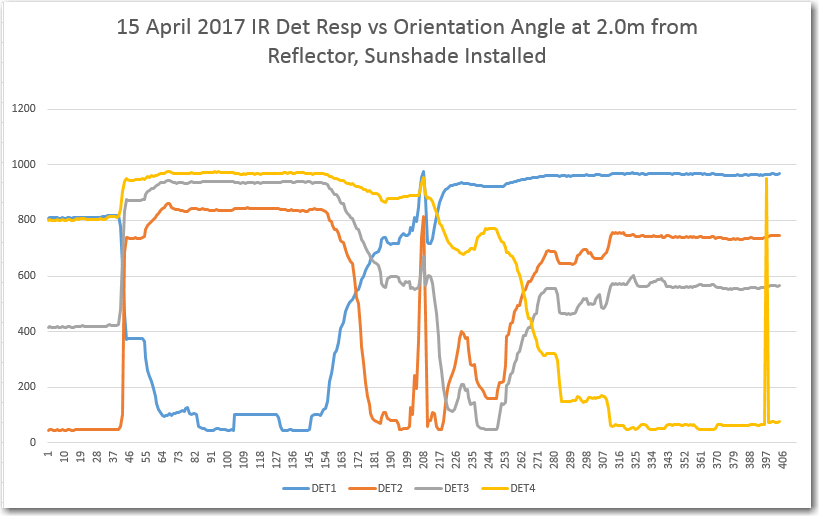

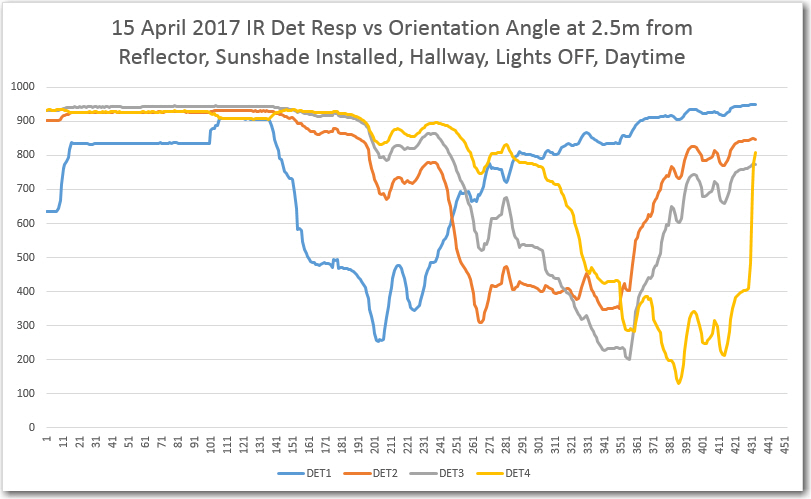

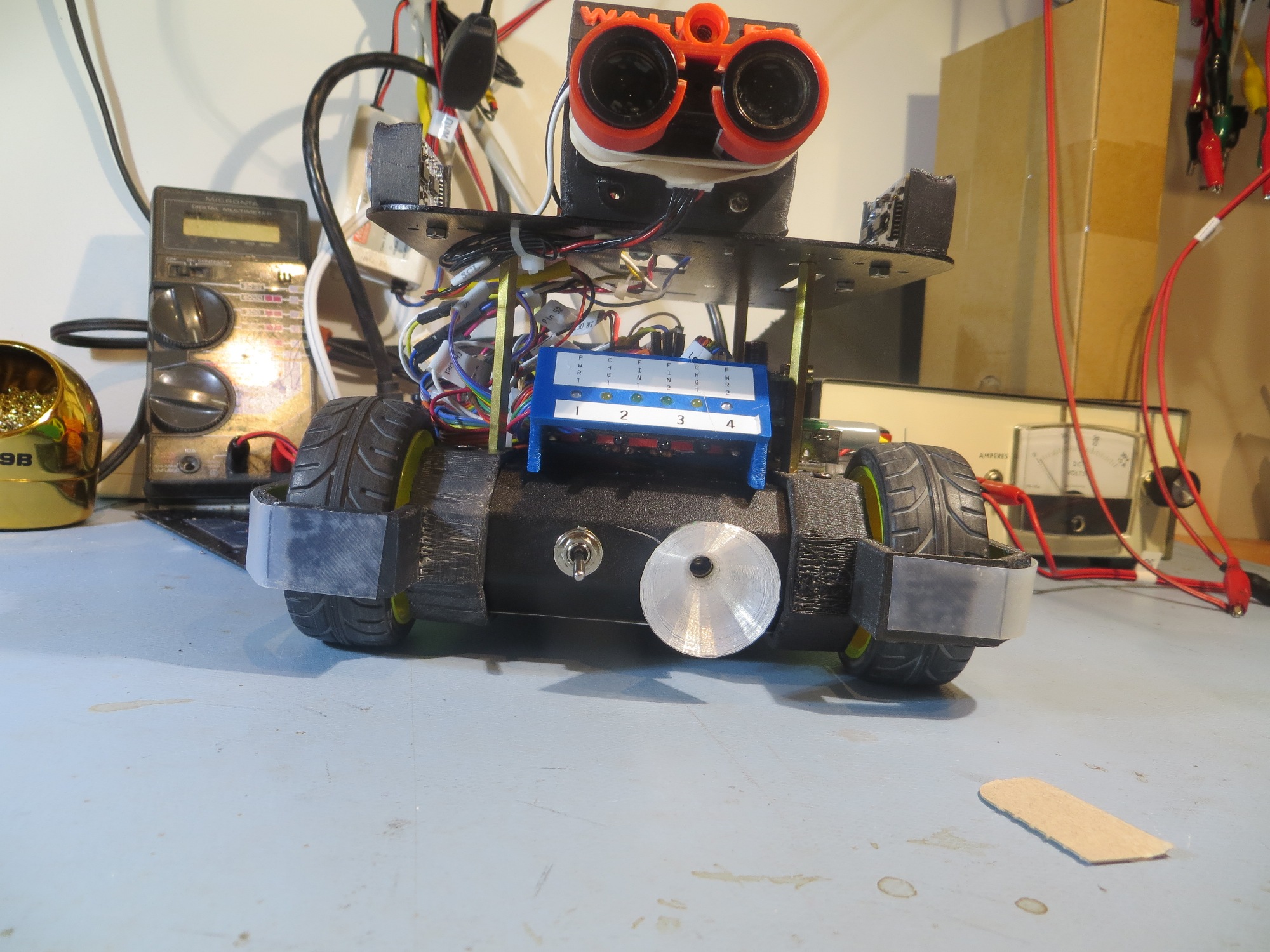

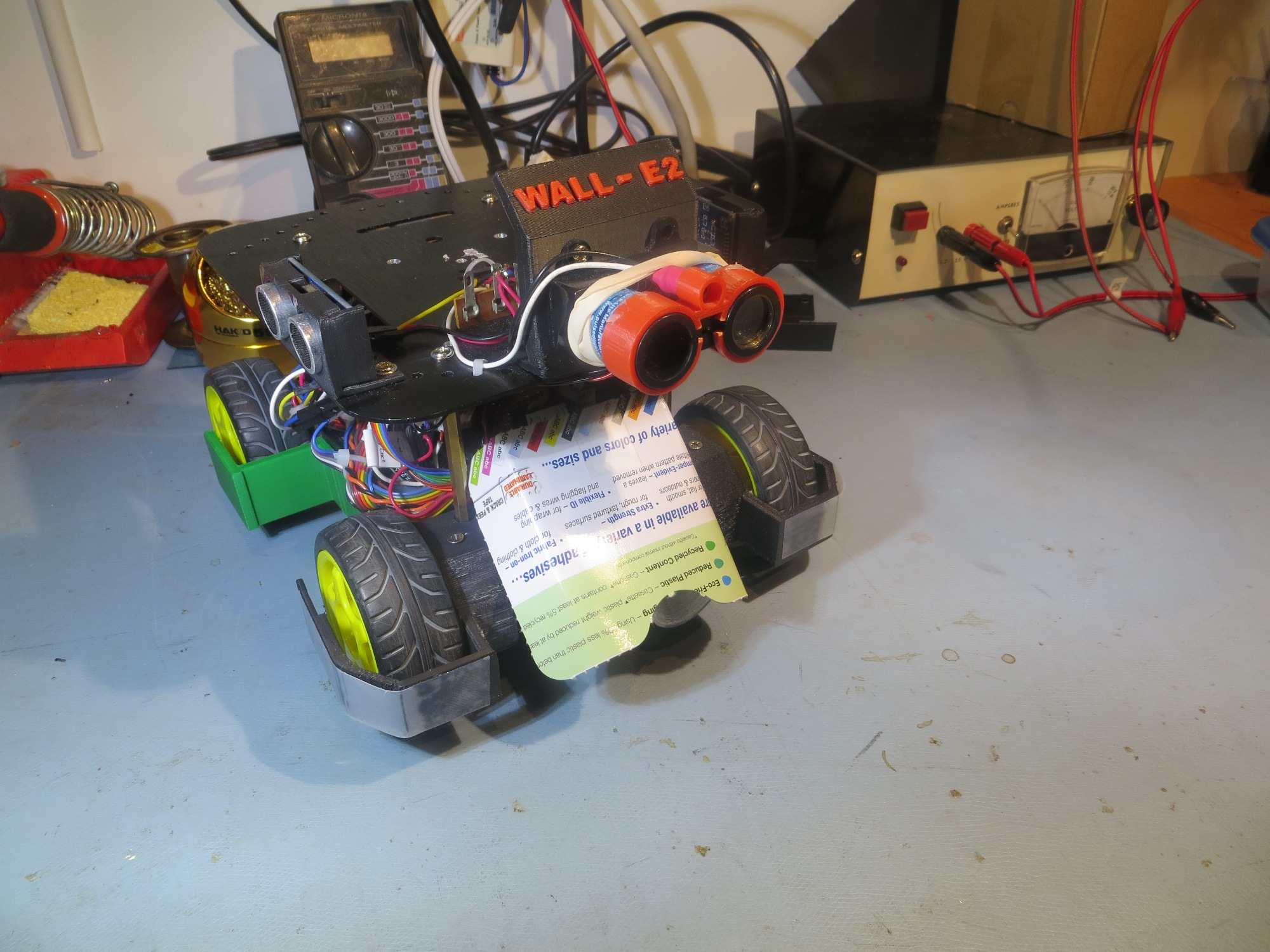

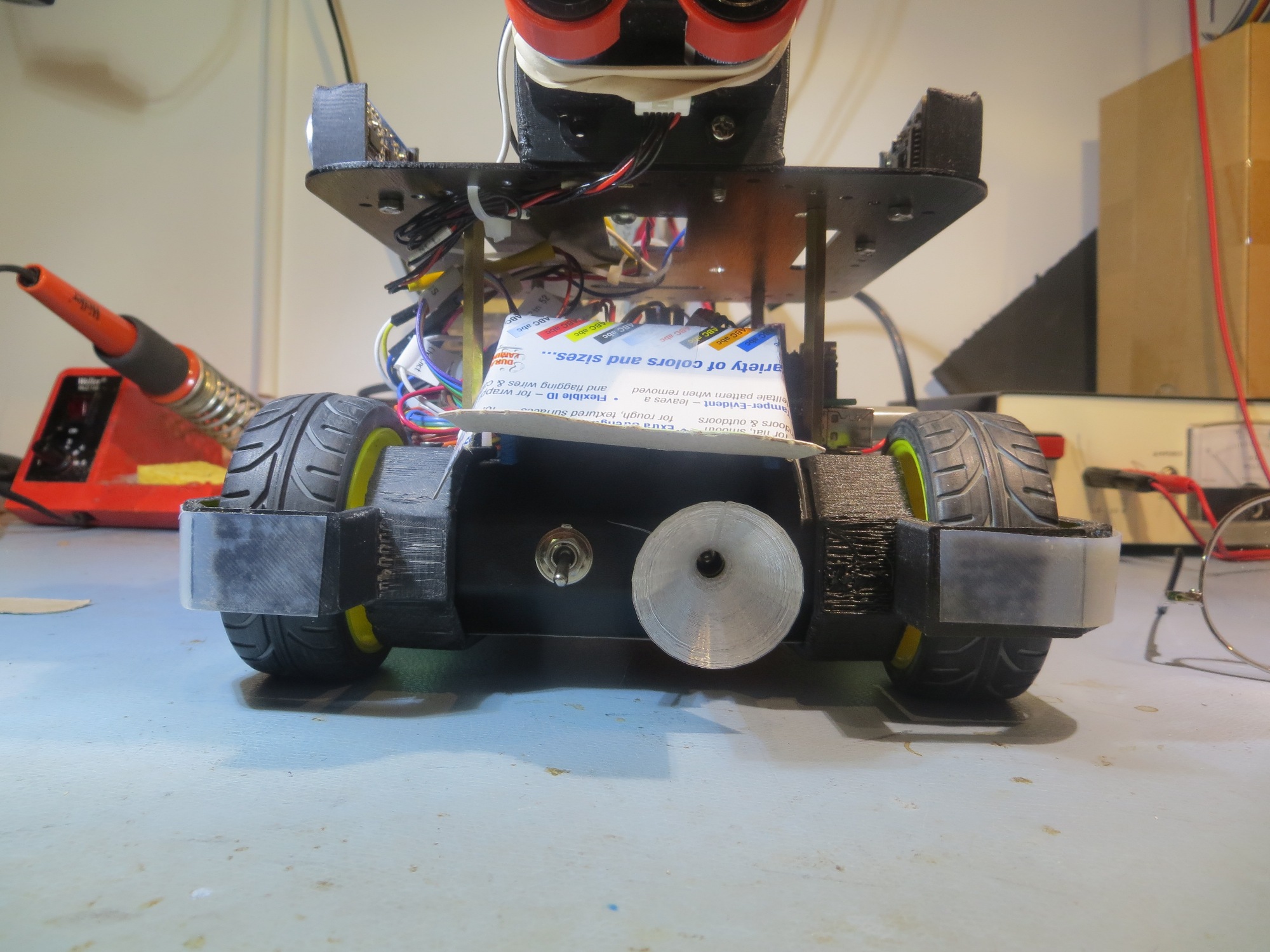

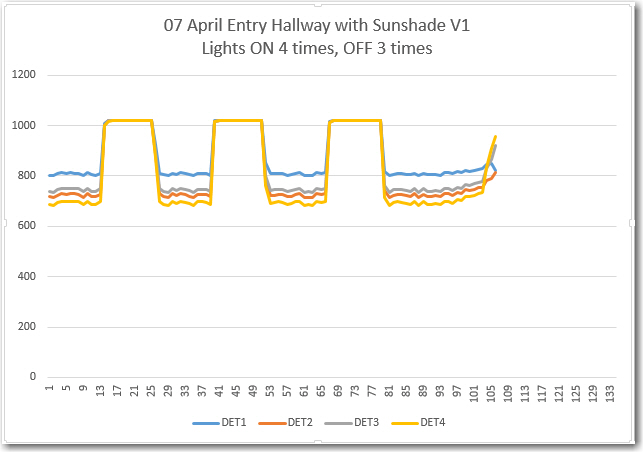

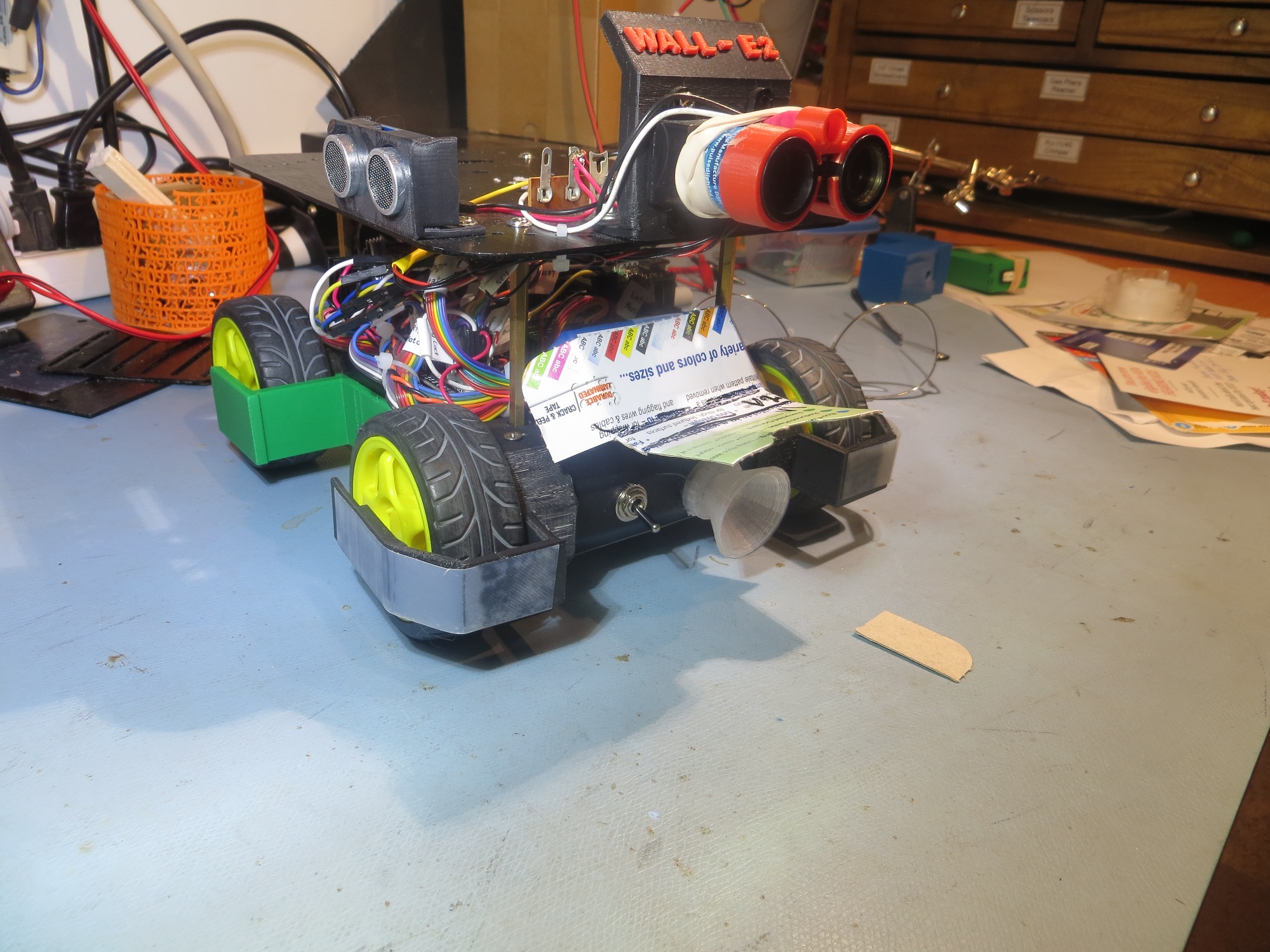

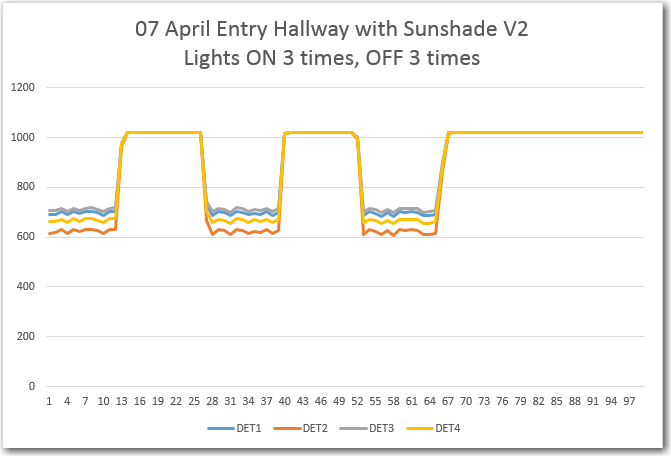

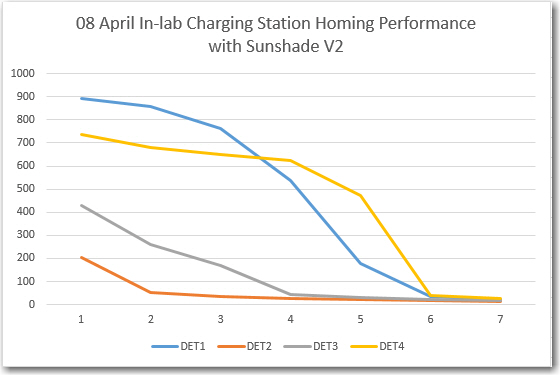

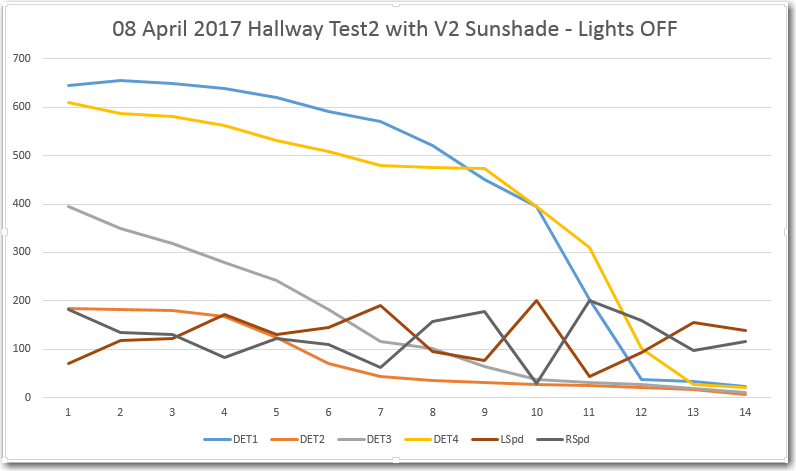

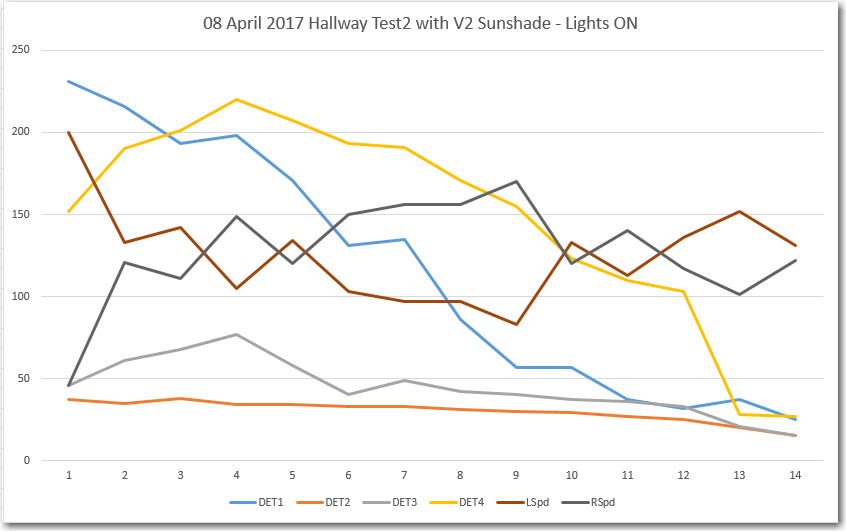

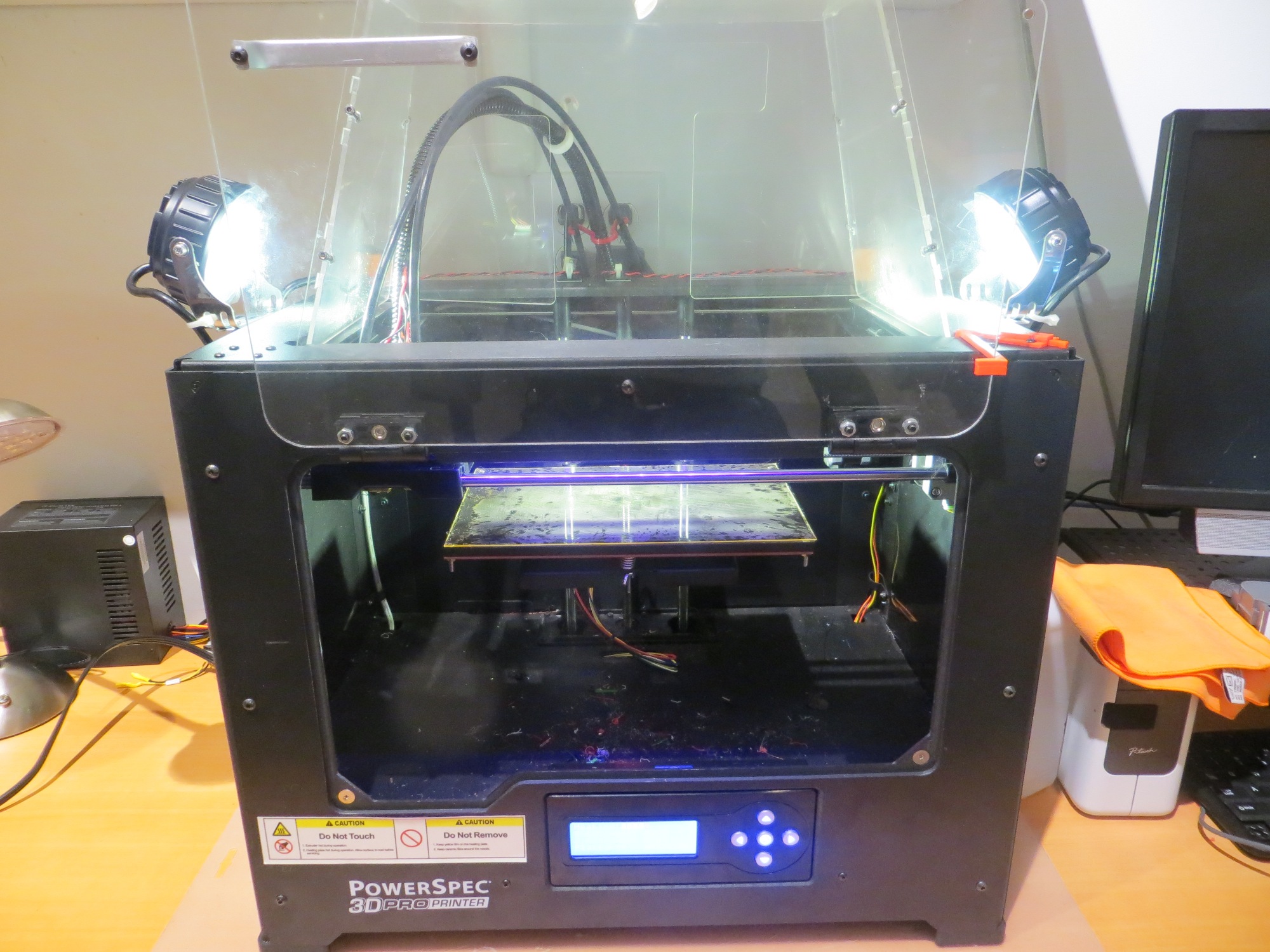

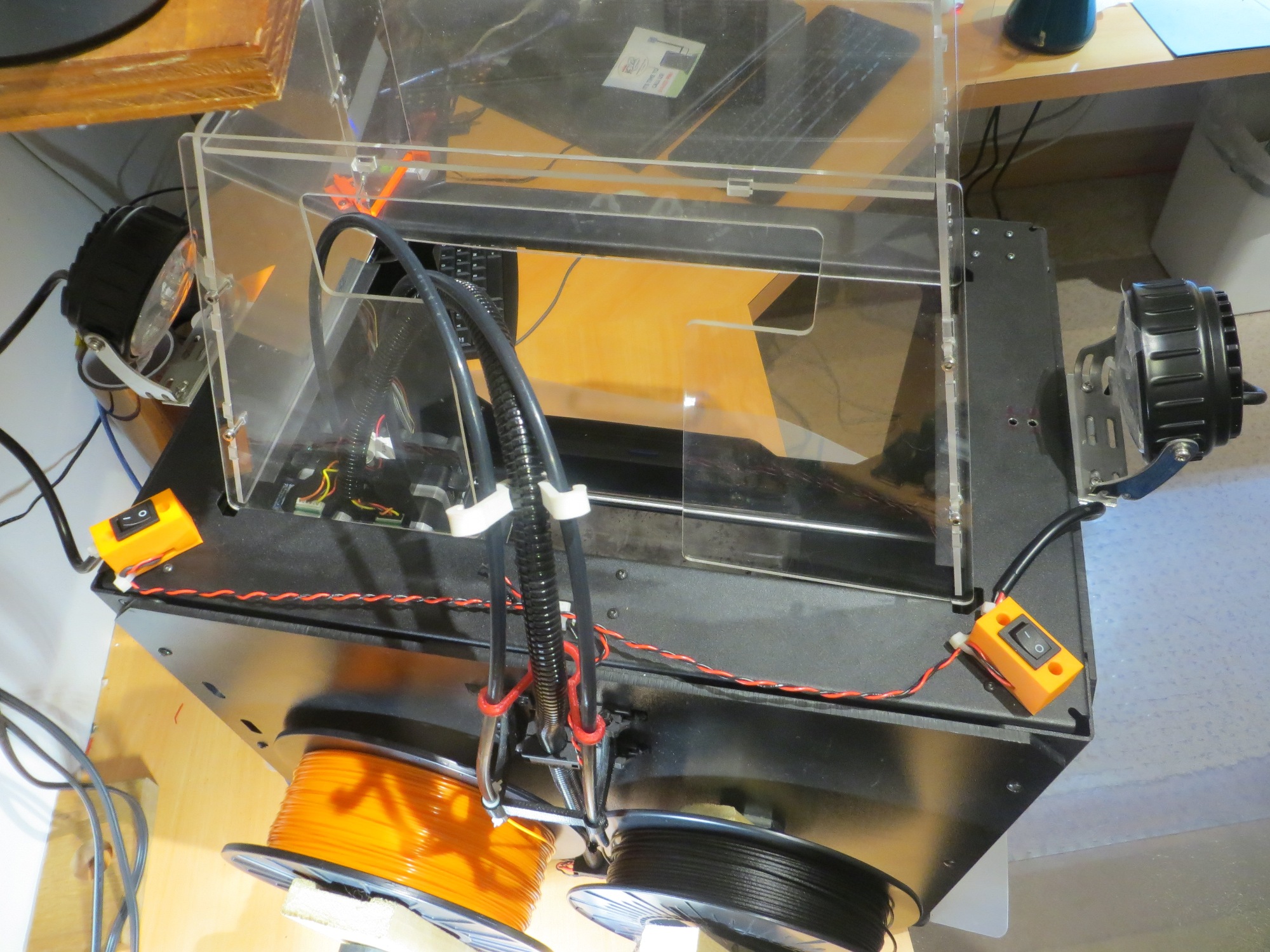

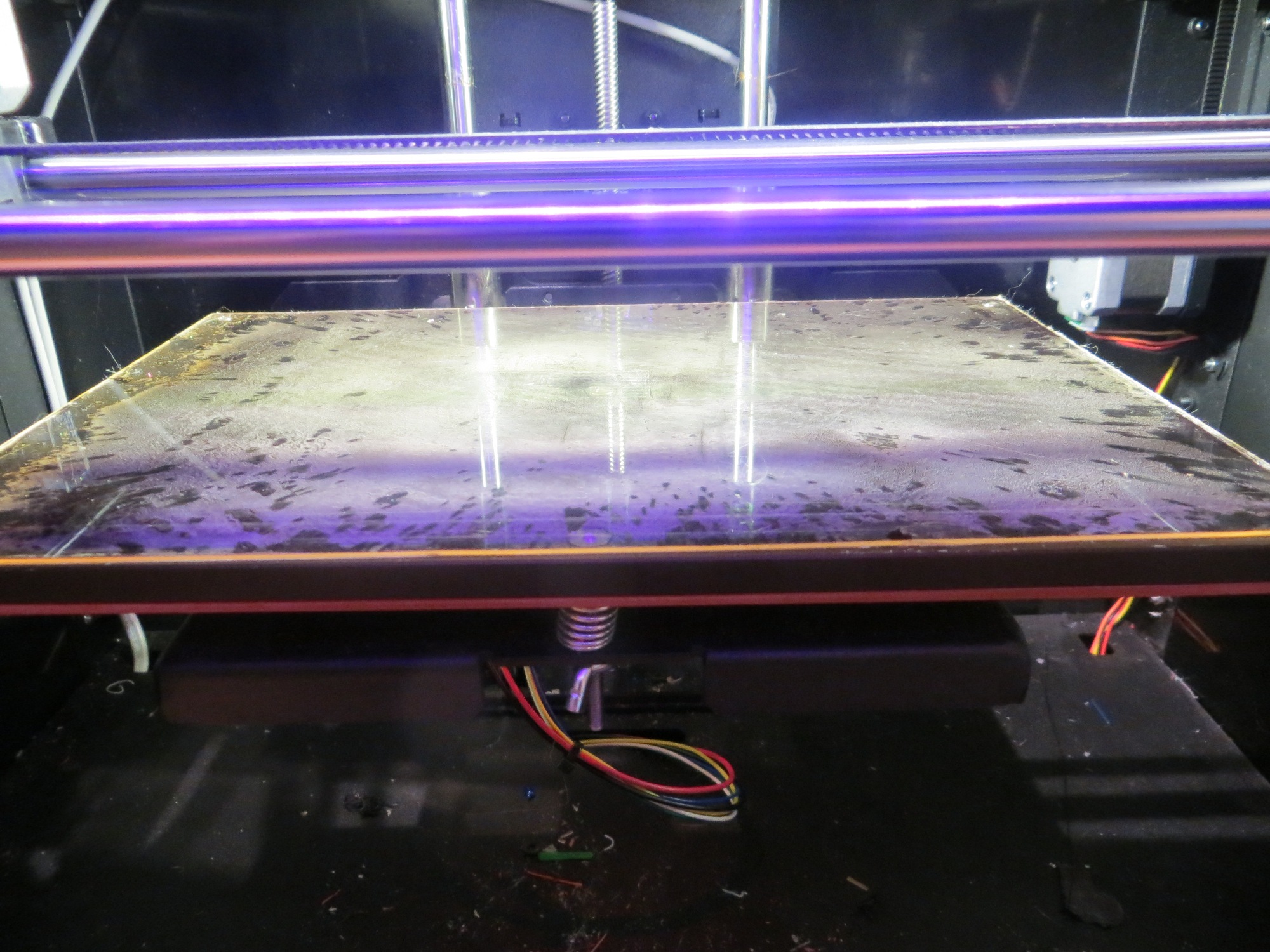

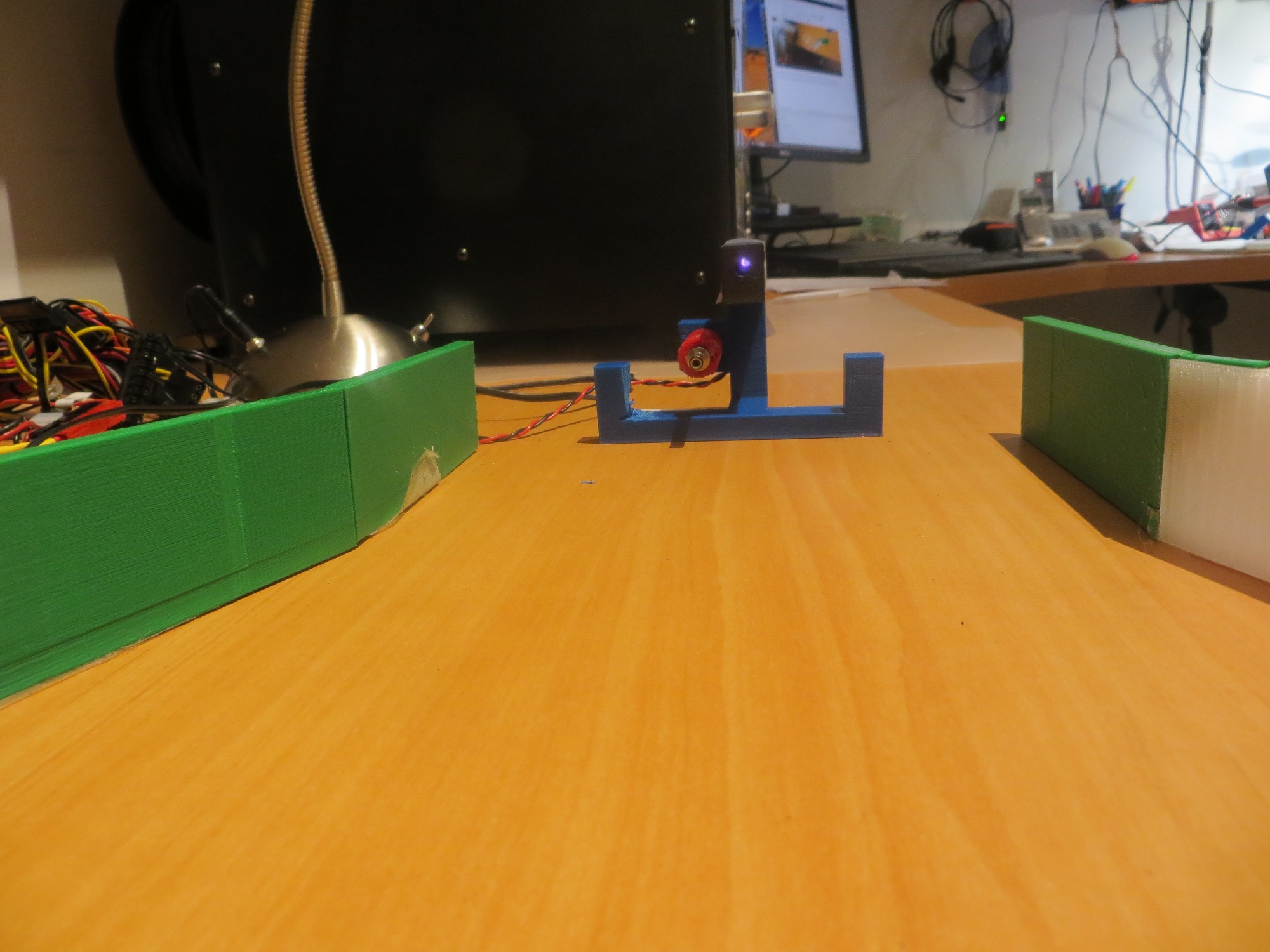

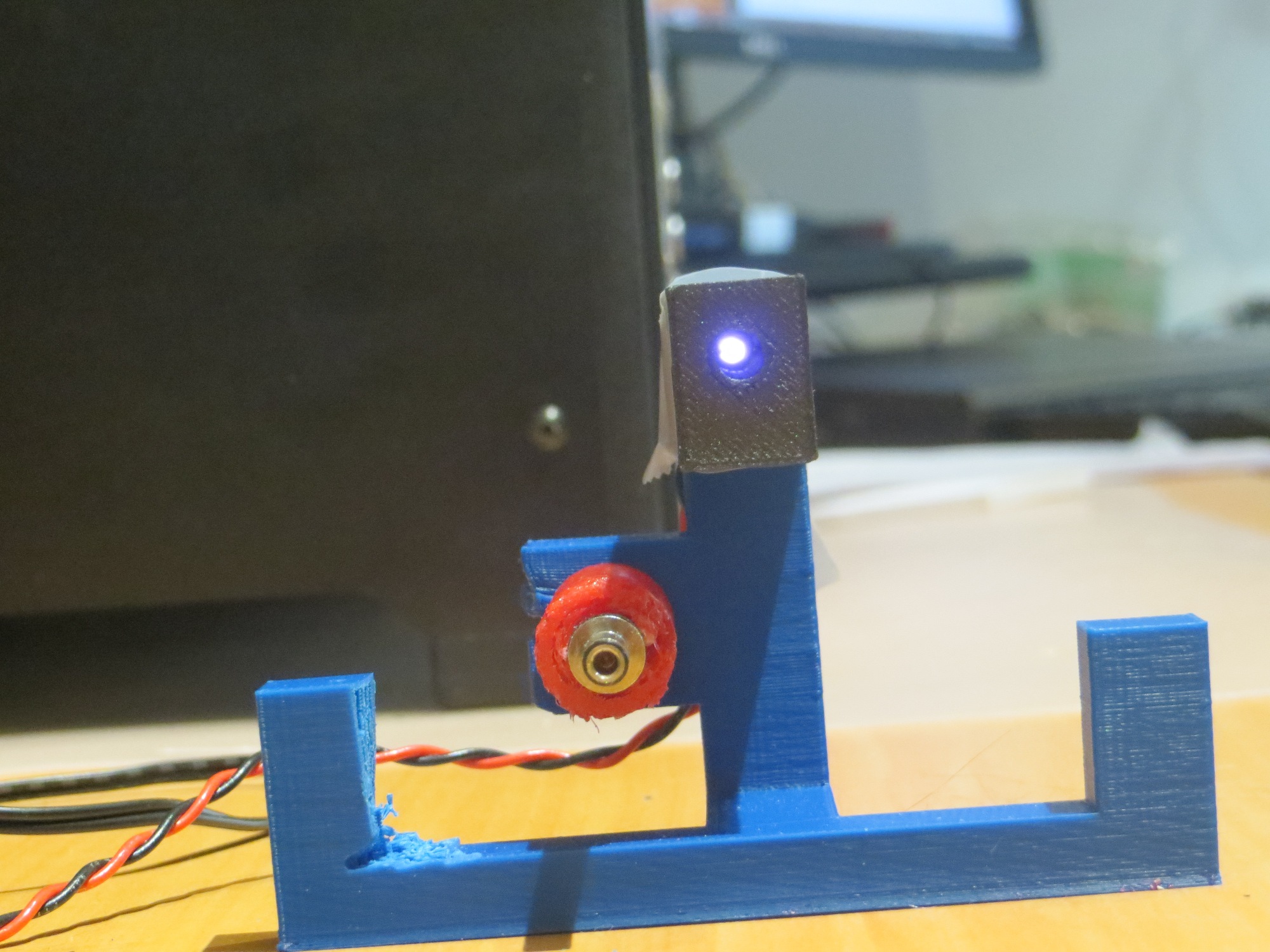

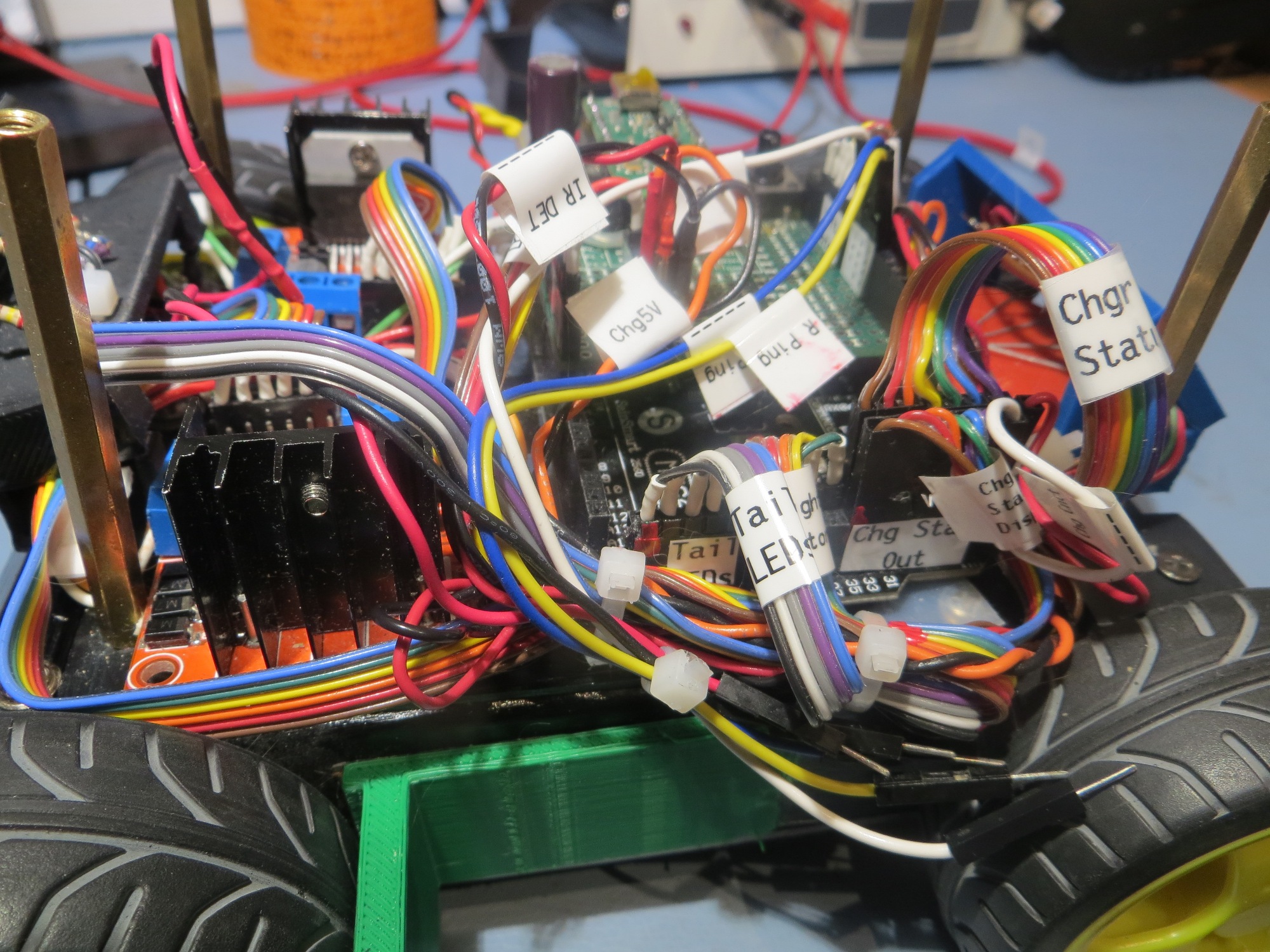

While doing the hallway testing that led to the discovery of the overhead IR ‘noise’ problem, the design of a ‘sunshade’ to suppress that noise, and the implementation of the flashlight reflector idea for the IR LED, I discovered several other problems with the operating system, as follows:

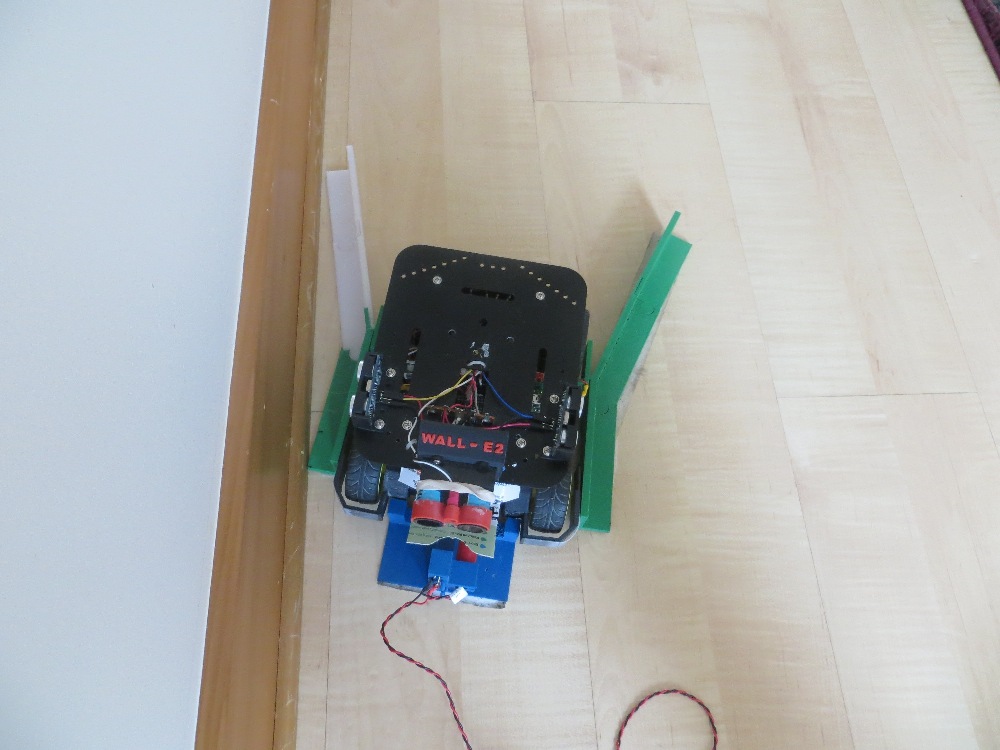

- Once the software detects an IR signal, it switches into IR homing mode, but the only ways back out again are to execute the avoidance routine (if the robot isn’t in need of a charge), or to connect to the charger and then disconnect once the battery is fully charged. There’s no provision for error cases, like getting stuck on one of the lead-in rails.

- The charging station disconnect maneuver needs work – it doesn’t back far enough away, and doesn’t turn far enough to actually get away from the charging station.

- The routine that monitors the charging state needs work. On several occasions it detected ‘end of charge’ when one of the chargers momentarily switched from ‘charging’ to the ‘finished’ state and back to ‘charging’. The software should not declare ‘end of charge’ until both chargers’ status is ‘finished’, and the software should employ integration to handle momentary state changes.

Item 1 – Exit provisions for IR homing code

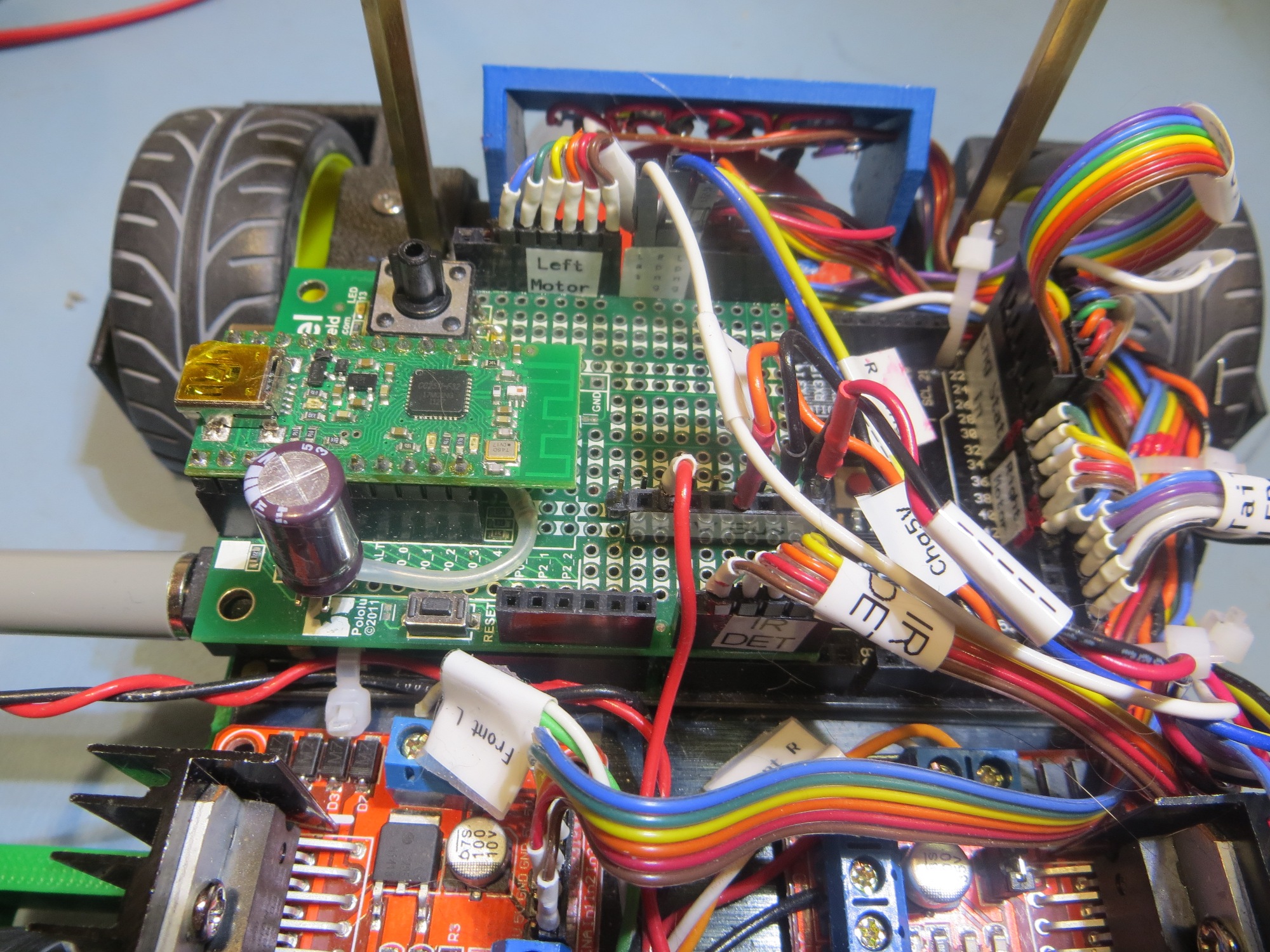

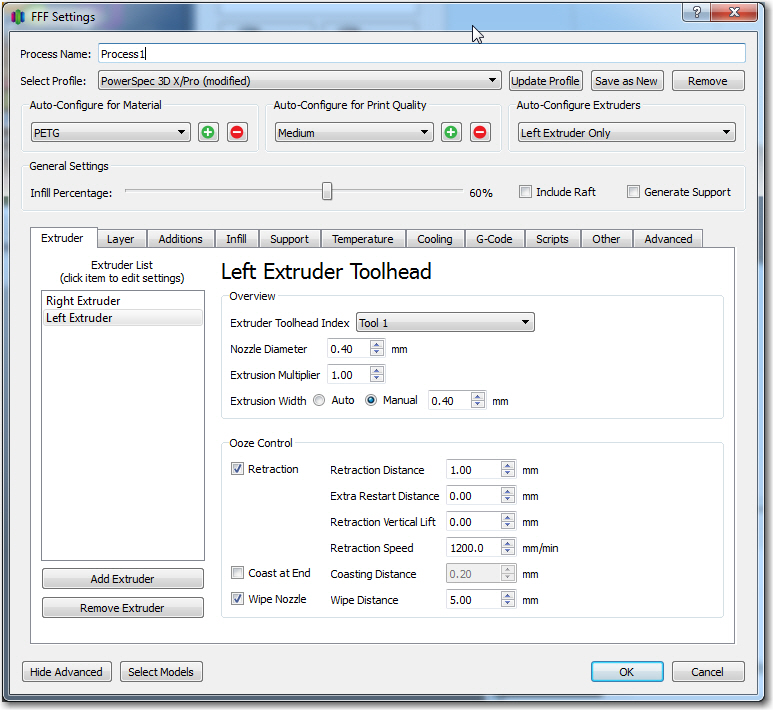

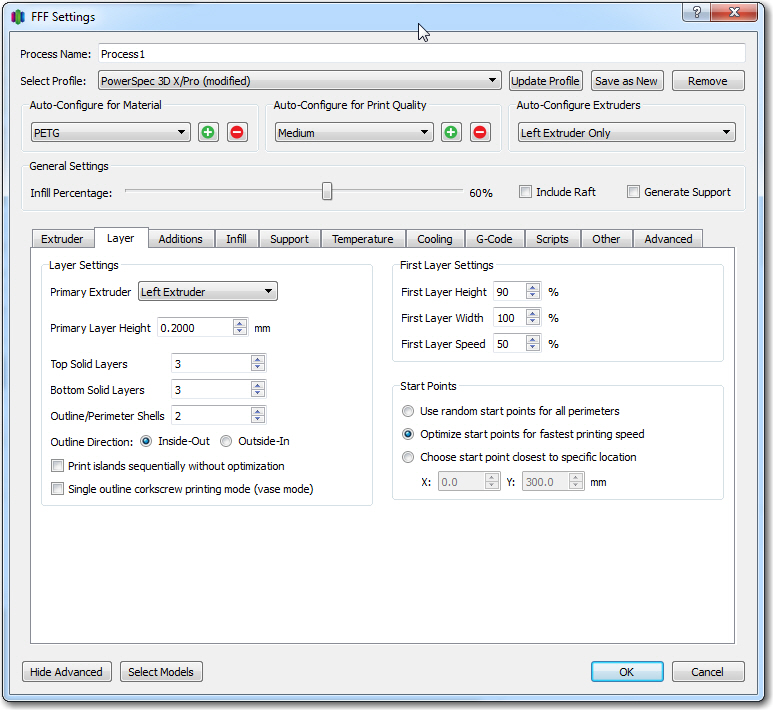

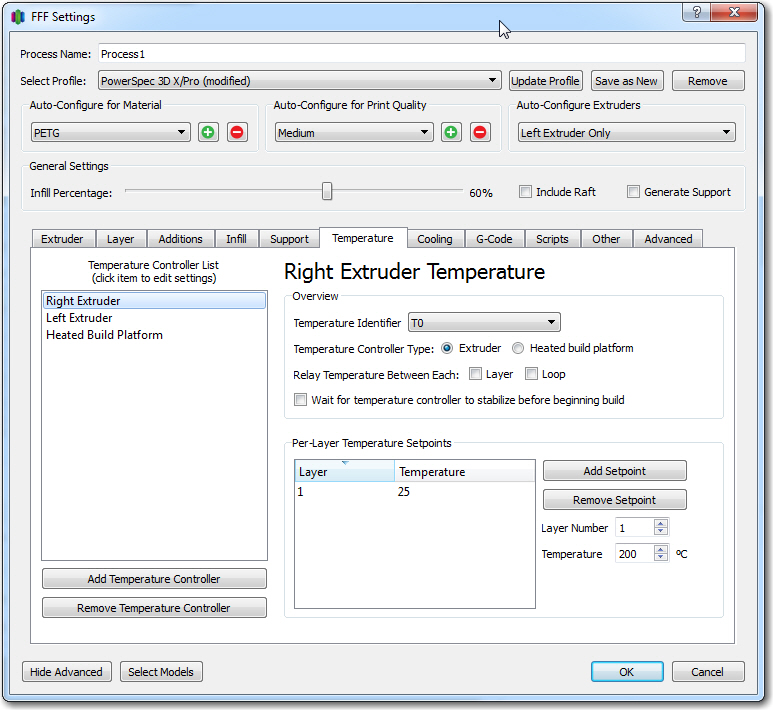

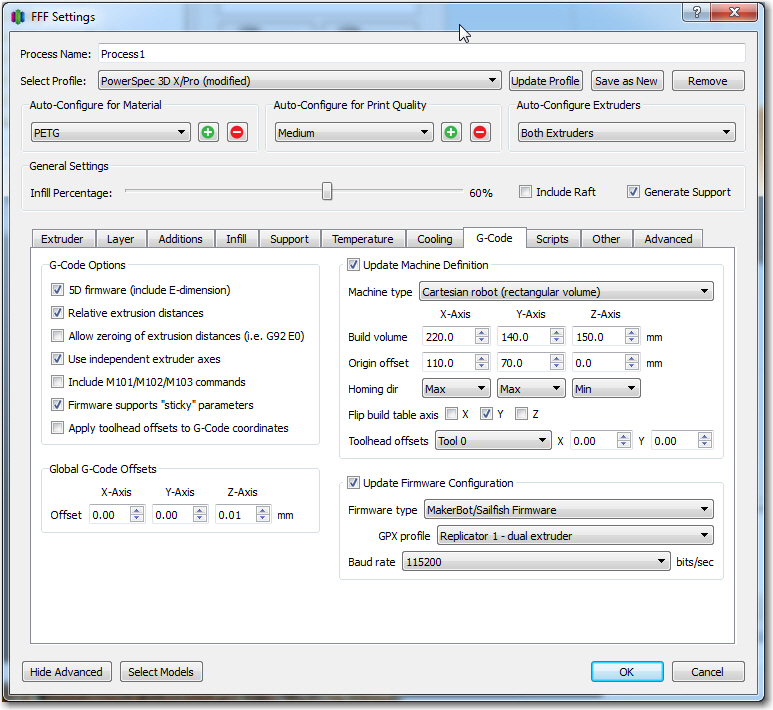

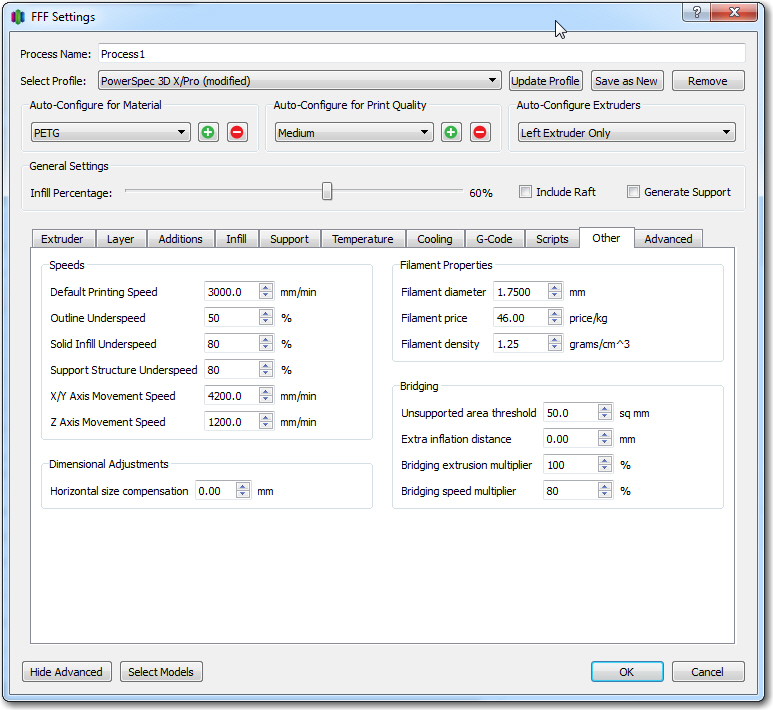

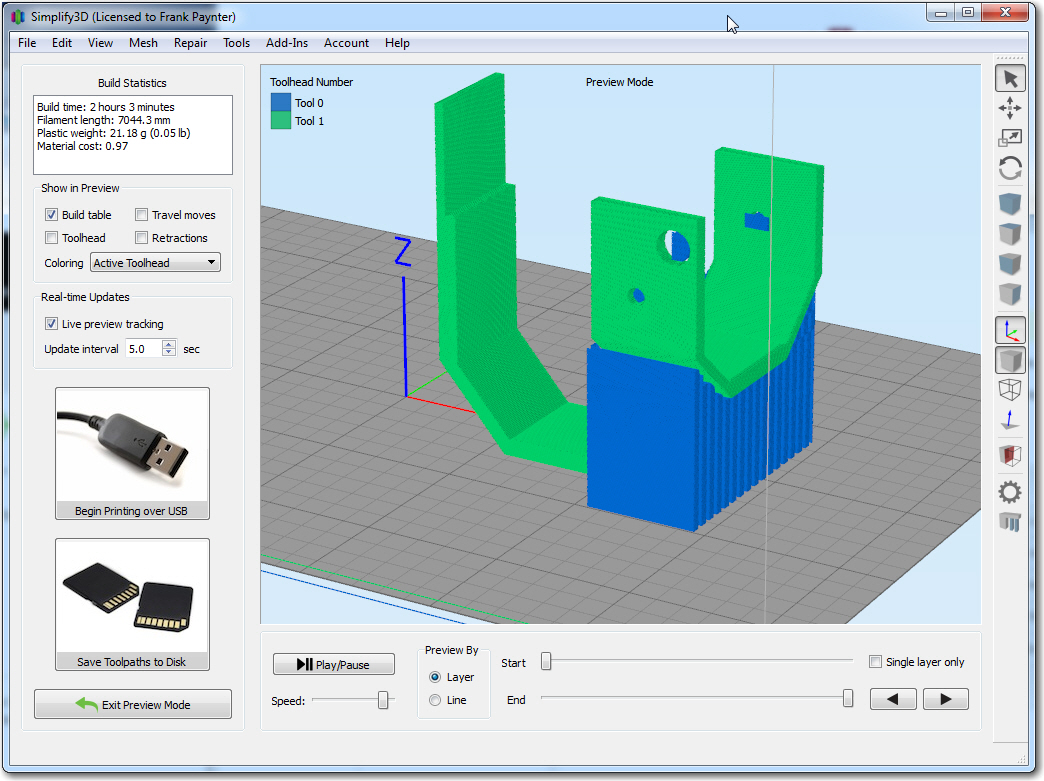

This item actually turned into a full-blown rewrite of Wall-E2’s operating system software structure. As I looked at the code to determine the best way to implement the required error case detection/responses, it became apparent that the problem was ‘baked-in’ to the software system design, and would have to be addressed at that level. So, I went ‘back to the drawing board’ (in this case to Microsoft Visio) and reworked the system design to allow Wall-E2 to detect and respond appropriately to error conditions in any mode, not just the normal wall-following one. The revised structure charts are shown in the following PDF document:

[Embedded PDF: revised Wall-E2 structure charts]

In the revised structure, the system always returns to the ‘Determine Op Mode’ block after each pass through the system, without getting stuck anywhere. This means that the ‘GetOpMode()’ routine has the opportunity on each pass through loop() to detect error situations (like the ‘stuck’ condition) and respond by switching to the appropriate branch of the structure tree.
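As a rough illustration of this ‘always return to Determine Op Mode’ idea, here is a minimal sketch, not Wall-E2’s actual code. The ‘RobotState’ struct, the ‘RunOnePass()’ helper, the ‘MODE_IR_HOMING’ and ‘MODE_STUCK_RECOVERY’ names, and the priority order of the checks are all assumptions made for the example; only ‘GetOpMode()’, ‘MODE_CHARGING’ and ‘MODE_WALL_FOLLOWING’ come from the real design.

```cpp
// Mode names: MODE_IR_HOMING and MODE_STUCK_RECOVERY are hypothetical.
enum OpMode {
    MODE_WALL_FOLLOWING,
    MODE_IR_HOMING,
    MODE_CHARGING,
    MODE_STUCK_RECOVERY
};

// Hypothetical snapshot of the sensor readings taken at the top of each pass.
struct RobotState {
    bool irBeamDetected;    // charging-station IR beacon is visible
    bool chargerConnected;  // robot is plugged into the charging station
    bool stuck;             // 'stuck' condition detected
};

// Decide the mode from scratch on every pass, so no mode can trap the robot.
// The priority order here is an assumption: a connected charger wins (the
// robot is supposed to sit still there), then the 'stuck' error case, then
// IR homing, with wall following as the default.
OpMode GetOpMode(const RobotState& s) {
    if (s.chargerConnected) return MODE_CHARGING;
    if (s.stuck)            return MODE_STUCK_RECOVERY;
    if (s.irBeamDetected)   return MODE_IR_HOMING;
    return MODE_WALL_FOLLOWING;
}

// One pass through loop(): pick the mode, do ONE short step of that mode's
// work, and return. There are no inner loops that wait for something to happen.
OpMode RunOnePass(const RobotState& s) {
    OpMode mode = GetOpMode(s);
    switch (mode) {
        case MODE_CHARGING:       /* one charge-monitoring step */ break;
        case MODE_STUCK_RECOVERY: /* one recovery step */          break;
        case MODE_IR_HOMING:      /* one homing step */            break;
        case MODE_WALL_FOLLOWING: /* one wall-following step */    break;
    }
    return mode;
}
```

Because the mode is recomputed on every pass, a robot that gets stuck while homing on the IR beam drops into the recovery branch on the very next pass, instead of staying in homing mode forever.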

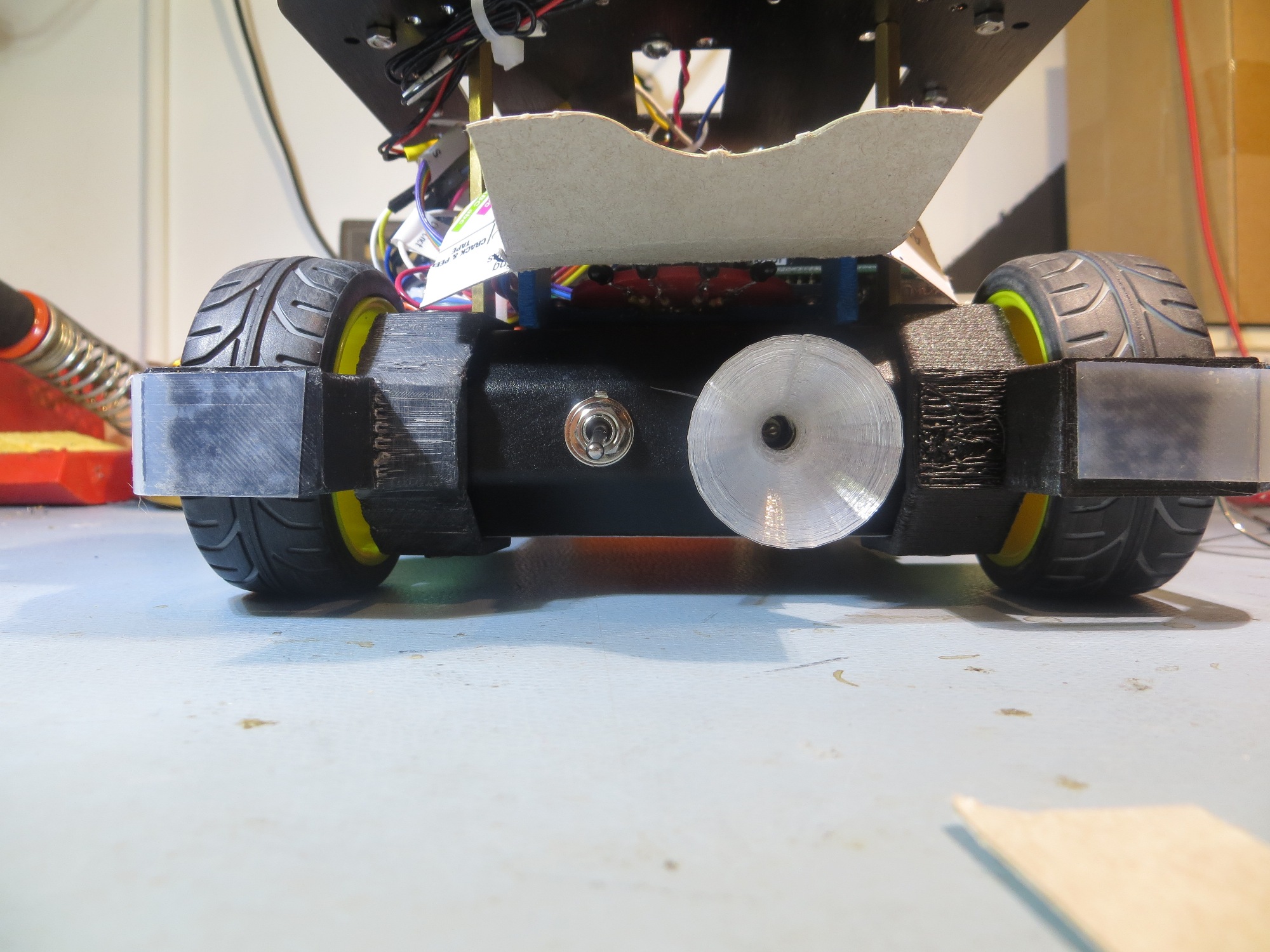

Item 2 – Charging Station Disconnect Maneuver

This one was just a matter of tweaking the ‘degrees’ parameter to the ‘RotateCWDeg(bool bRotateCW, int degrees_to_rotate)’ function. I started out with degrees_to_rotate = 90, but then tweaked it to 120 for better disconnect performance.

Item 3 – Charge State Monitoring

In the current operating system, a function called ‘MonitorChargeUntilDone()’ is called when the robot detects that it is connected to the charging plug. This function goes into an infinite loop, waiting for the charging status to change from ‘charging’ to ‘finished’. While in this loop, the charger 1 and charger 2 status lines are read about once per second, until either both ‘finished’ outputs are TRUE, or the BATT_CHG_TIMEOUT_SEC backup timer elapses. This logic seems OK, but I have observed several inappropriate disconnect instances where only one of the two ‘finished’ outputs was TRUE. I suspect that there is a period of time where these two status lines oscillate from FALSE (not yet finished) to TRUE (finished) and back again, before finally settling down on ‘finished’.
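One way to ride out that kind of oscillation is a simple up/down counter that ‘integrates’ the two status lines over several reads. The sketch below is only an illustration of the idea; the ‘ChargeDoneIntegrator’ name and the threshold value used in the example are assumptions, not something already in Wall-E2’s code.

```cpp
// Hypothetical up/down integrator for the two charger 'finished' lines.
// The count goes up by one on each read where BOTH lines say 'finished',
// and down by one otherwise, so a brief flicker only costs a sample or two
// instead of triggering (or completely resetting) the end-of-charge decision.
struct ChargeDoneIntegrator {
    int count;      // current integrator value, kept within 0..threshold
    int threshold;  // number of net 'both finished' reads required

    explicit ChargeDoneIntegrator(int thresholdSamples)
        : count(0), threshold(thresholdSamples) {}

    // Call once per status read (e.g. about once per second).
    // Returns true only once the integrator has reached the threshold.
    bool update(bool chg1Finished, bool chg2Finished) {
        if (chg1Finished && chg2Finished) {
            if (count < threshold) ++count;
        } else if (count > 0) {
            --count;
        }
        return count >= threshold;
    }
};
```

With a threshold of, say, 5 and one read per second, end of charge would only be declared after roughly five more ‘both finished’ reads than ‘not finished’ reads, and a single charger reporting ‘finished’ never counts toward the total.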

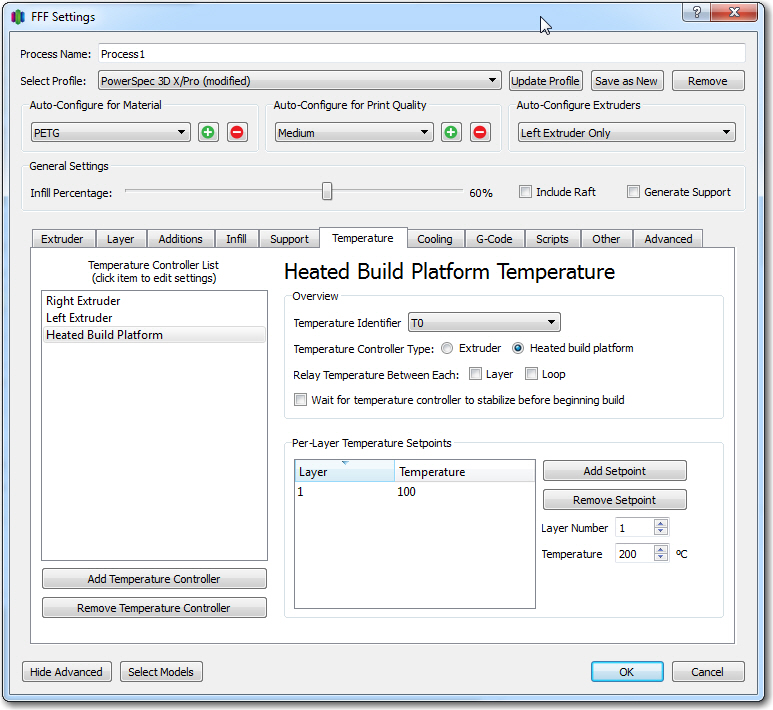

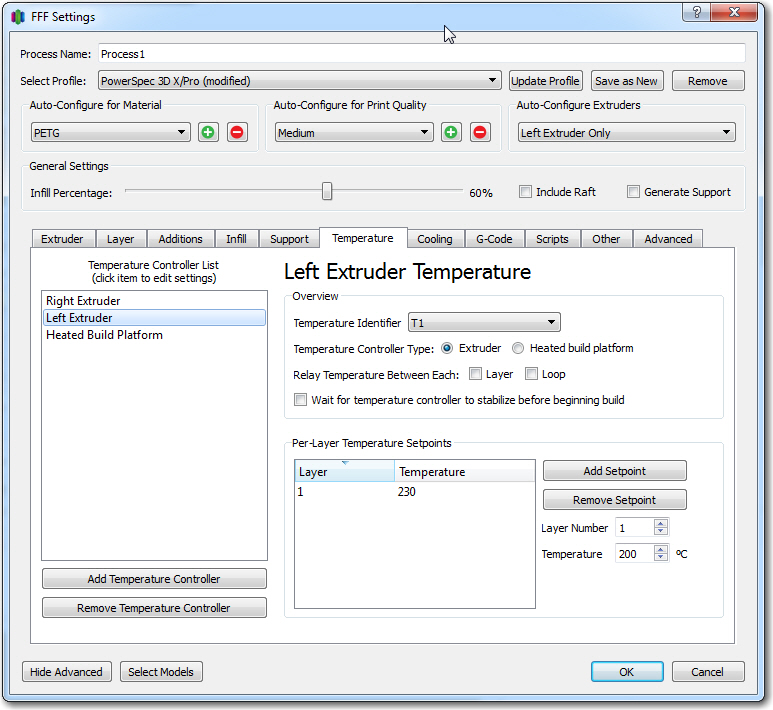

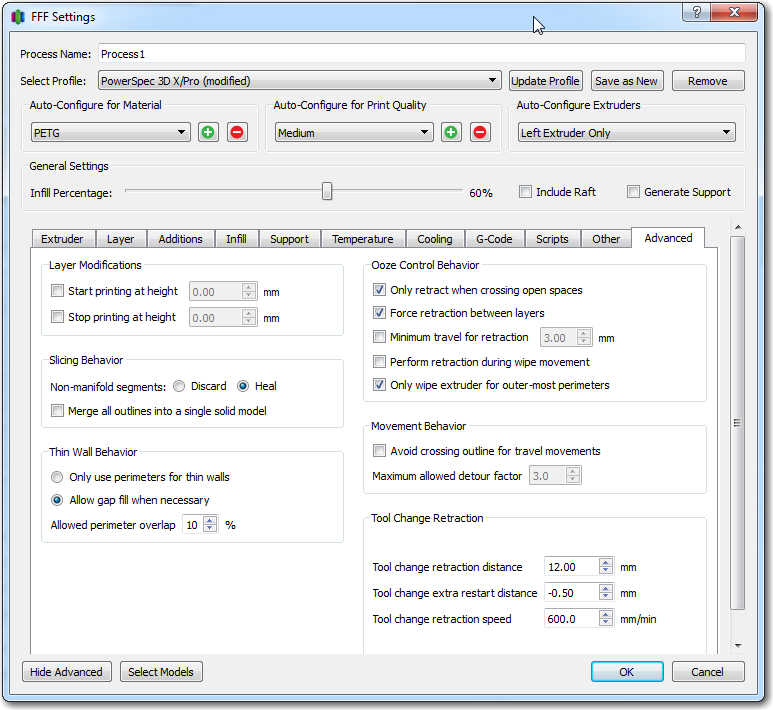

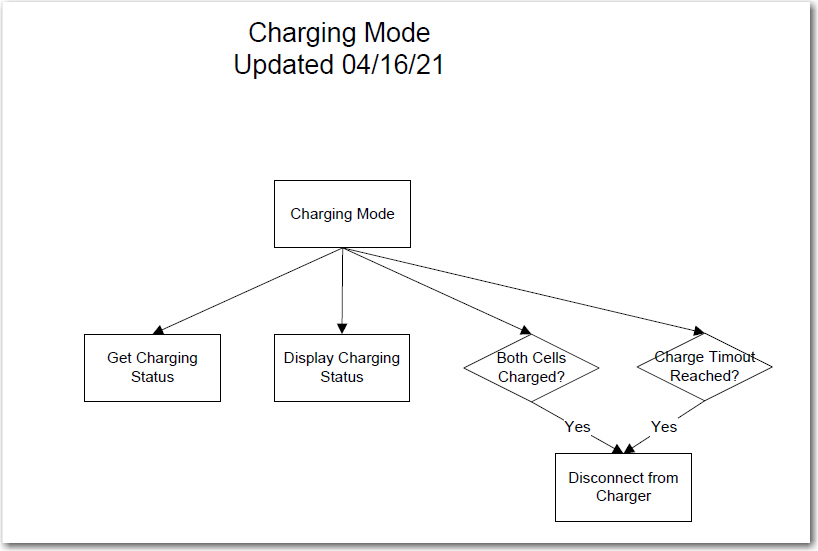

In my new strategy of eliminating all inner loops, the ‘MonitorChargeUntilDone()’ function will no longer be used. Instead, the ‘Charge Mode’ block of the new software structure chart (shown below) will be implemented.

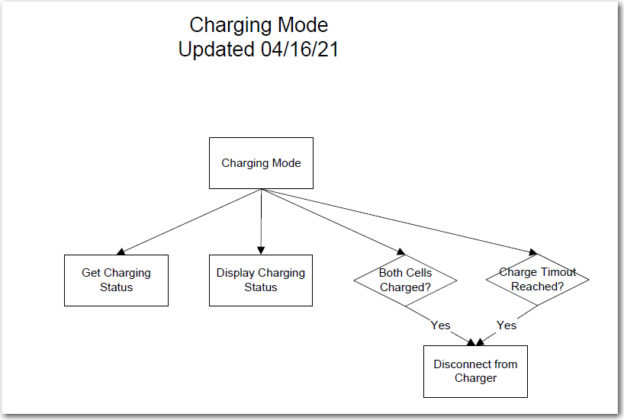

Updated Charge Mode Structure Chart Detail

This block will be executed on each pass through the loop() function, as long as the ‘Determine Op Mode’ block returns with the current mode set to ‘MODE_CHARGING’. If and when either the ‘Both Cells Charged’ test or the ‘Charger Timeout’ reached test returns TRUE, the ‘Disconnect’ function will be triggered, causing the robot to immediately disconnect and back away from the charging station. This in turn will cause the ‘Determine Op Mode’ block to output a different mode value (assumed to be, but not necessarily, ‘MODE_WALL_FOLLOWING’), and the appropriate portion of the overall structure diagram will be executed.
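The decision at the heart of that block can be sketched as a small function that is called once per pass and returns immediately. This is a hedged example only: ‘ChargeSession’ and ‘ShouldDisconnect()’ are hypothetical names, and the timeout is passed in as a value because the real BATT_CHG_TIMEOUT_SEC setting isn’t given here. On the robot, ‘nowMs’ would presumably come from Arduino’s millis().

```cpp
#include <cstdint>

// Hypothetical record created when the robot first connects to the charger.
struct ChargeSession {
    uint32_t startMs;    // timestamp (in ms) when charging began
    uint32_t timeoutMs;  // backup timeout, converted to milliseconds
};

// One non-blocking check per pass through loop(). Returns true when the
// robot should disconnect: either both cells report 'finished', or the
// backup timer has elapsed. Unsigned subtraction keeps the elapsed-time
// calculation correct even if the 32-bit millisecond counter rolls over.
bool ShouldDisconnect(const ChargeSession& cs, uint32_t nowMs,
                      bool cell1Finished, bool cell2Finished) {
    bool bothCellsCharged = cell1Finished && cell2Finished;
    uint32_t elapsedMs = nowMs - cs.startMs;
    bool chargerTimeout = elapsedMs >= cs.timeoutMs;
    return bothCellsCharged || chargerTimeout;
}
```

Since the function never waits, the rest of the loop (including the ‘Determine Op Mode’ block) keeps running between checks.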

I’m away from my robot at the moment, at a bridge tournament in Gatlinburg, Tennessee, so I can’t immediately implement and test the above changes. However, I will be back home next week and hope to have everything running by the end of May. If everything works out, I may have a fully functioning charge station operational by then, and a ‘more-or-less’ fully autonomous Wall-E2 robot. It will be interesting to see what kind of trouble Wall-E2 can get into with the much longer run times that should be possible with autonomous charging capability.

Stay tuned!! 😉

Frank