As described in the previous post on this topic, my plan was to use about 1 second’s worth of stored data to (hopefully) detect the ‘stuck’ condition, where Wall-E has managed to get himself stuck without triggering the normal obstacle avoidance routine. As I mentioned before, this happens when it hits an obstacle that is too low to register on the front-facing ping sensor (like the legs on our coat rack), or too acoustically soft to return a good distance reading (like my wife’s slippers).

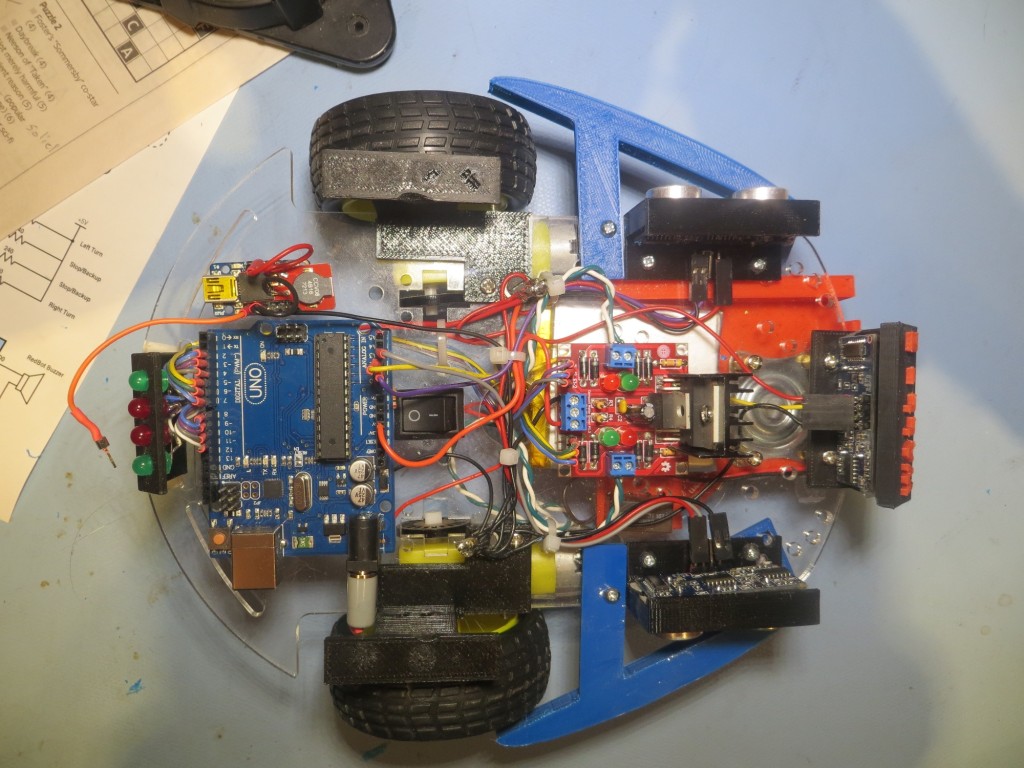

So, I implemented three byte arrays, each K bytes long, to hold 1-2 seconds’ worth of data (K was set to 50 initially). Each array is loaded from the ‘top’ (position K-1), and older data is shifted down to make room; the oldest reading gets dumped off the bottom into the bit bucket. Then, on each pass through the movement loop, the function IsStuck() is called to assess the presence or absence of the ‘stuck’ condition. For each array, the maximum and minimum readings are found, and the minimum is subtracted from the maximum to give the maximum distance deviation for that sensor over that period. If the maximum distance deviation for all three sensors is below some arbitrary limit (initially 5 cm), the ‘stuck’ condition is declared and the movement loop is terminated, causing a return to the main program loop. This in turn causes the ‘RecoverFromStuck’ routine (which does the backup-and-turn trick) to run.
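A minimal sketch of this scheme follows. It assumes plain C++ so the logic can be checked off-board; on an AVR Arduino the C headers (`<string.h>`, `<stdint.h>`, `<assert.h>`) would stand in for the C++ ones. Only `K`, `IsStuck()` and the 5 cm limit come from the description above. `history`, `PushReading()`, `MaxDeviation()` and the `pushCount` warm-up guard are hypothetical names and assumptions, not Wall-E's actual code.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical sketch; only K, IsStuck() and the 5 cm limit come from the post.
constexpr int K = 50;                     // readings kept per sensor (initial value)
constexpr int kNumSensors = 3;            // left, right and front ping sensors
constexpr uint8_t kStuckThresholdCm = 5;  // deviation below this on ALL sensors => stuck

// One byte array per sensor; index K-1 is the 'top' (newest), index 0 the oldest.
// A byte is enough because readings are capped at 200 cm.
uint8_t history[kNumSensors][K];

// Assumption (not described in the post): count pushes so that the
// zero-filled arrays at power-up don't look like a 'stuck' robot.
int pushCount[kNumSensors];

// Shift everything down one slot (the oldest reading at index 0 falls off
// into the bit bucket) and load the new reading at the top.
void PushReading(int sensor, uint8_t distanceCm) {
  assert(sensor >= 0 && sensor < kNumSensors);
  std::memmove(&history[sensor][0], &history[sensor][1], K - 1);
  history[sensor][K - 1] = distanceCm;
  if (pushCount[sensor] < K) ++pushCount[sensor];
}

// Maximum distance deviation for one sensor: max reading minus min reading.
uint8_t MaxDeviation(int sensor) {
  uint8_t lo = history[sensor][0];
  uint8_t hi = history[sensor][0];
  for (int i = 1; i < K; ++i) {
    uint8_t v = history[sensor][i];
    if (v < lo) lo = v;
    if (v > hi) hi = v;
  }
  return hi - lo;
}

// 'Stuck' only if every sensor's deviation is below the threshold.
bool IsStuck() {
  for (int s = 0; s < kNumSensors; ++s) {
    if (pushCount[s] < K) return false;                      // not enough history yet
    if (MaxDeviation(s) >= kStuckThresholdCm) return false;  // this sensor saw movement
  }
  return true;
}
```

Each pass through the movement loop would push one reading per sensor and then call `IsStuck()`. The warm-up guard is there because freshly zeroed arrays have zero deviation and would otherwise report ‘stuck’ at power-up.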

I was a bit worried about the amount of RAM consumed by the arrays, but it turned out to be negligible. I was also worried about the amount of time it would take to manage the arrays and make the deviation computations, but this too turned out to be a non-problem.

However, what did turn out to be a problem is that the darned thing didn’t work! Well, the coding was OK, and the algorithm worked just the way I had hoped, but Wall-E was still getting hung up on the coat rack and on the wife’s slippers. Wall-E would occasionally recover from the coat rack, but never from the slipper trap. Apparently the fuzz on the outside of the slippers makes them pretty much invisible at the ultrasonic frequency used by the ping sensors. I played around with the array length and distance deviation threshold parameters, but if I tightened everything down to the point where Wall-E would reliably un-stick itself, it would also reliably trigger the ‘stuck’ condition repeatedly during a normal wall-following run. This led to a condition where Wall-E was going backwards more often than it was going forward; amusing for a while, but definitely not what I had in mind!

So, Wall-E was stuck on slippers, and I was stuck for a way around/over/through the problem. Often when I come across a seemingly insurmountable problem, I beat my head against it for way too long (I am an engineer, after all). However, I have also learned that if I drop the issue for a while, I often come up with an answer (or at least another approach) ‘out of the blue’ while doing something entirely unrelated.

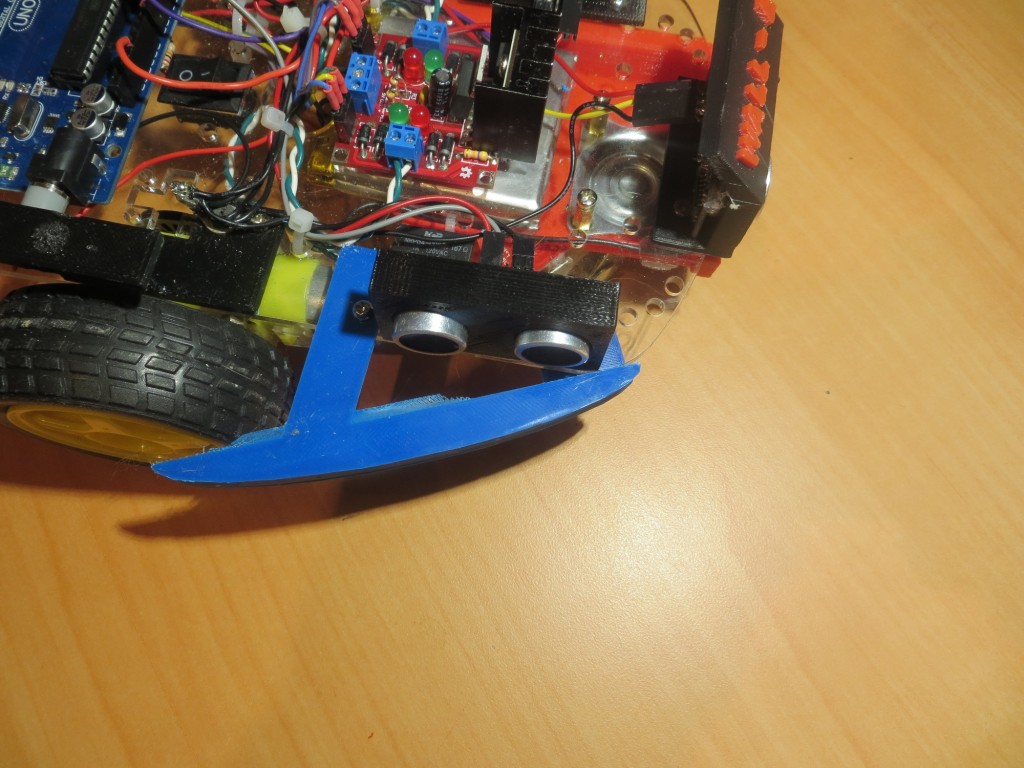

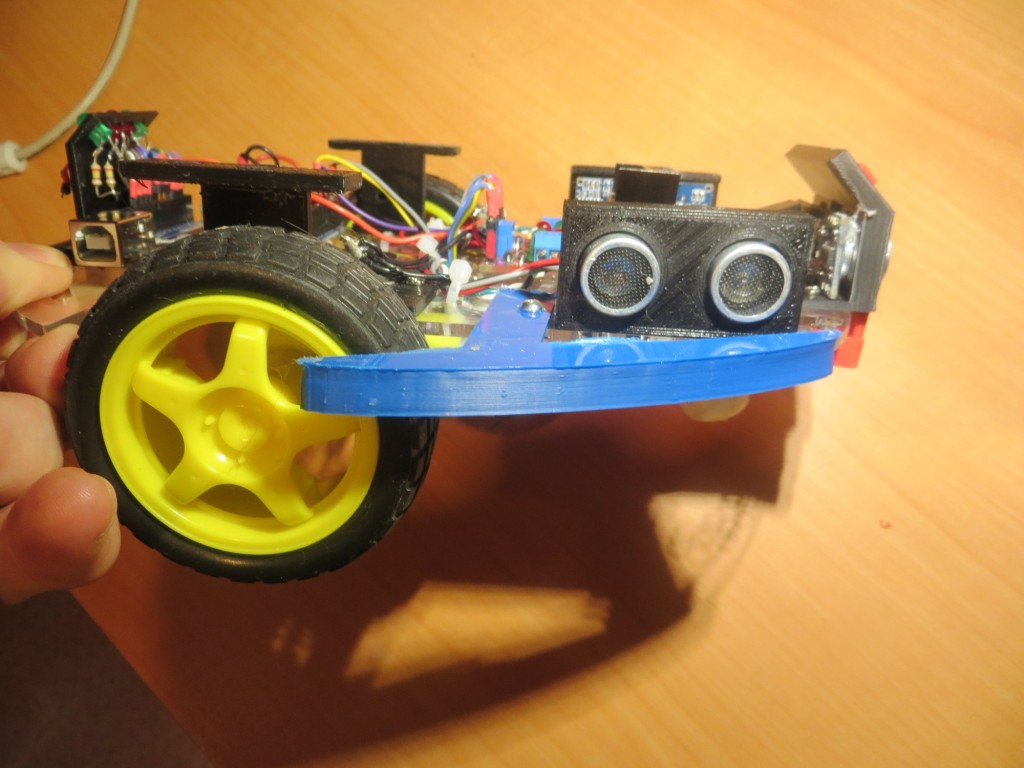

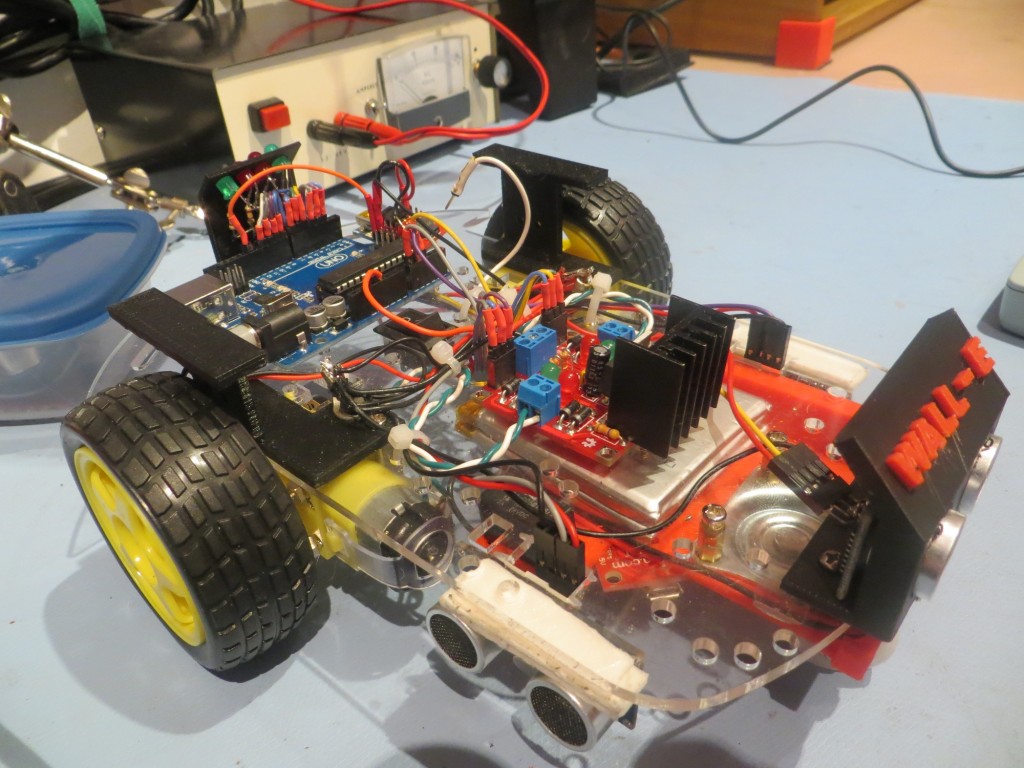

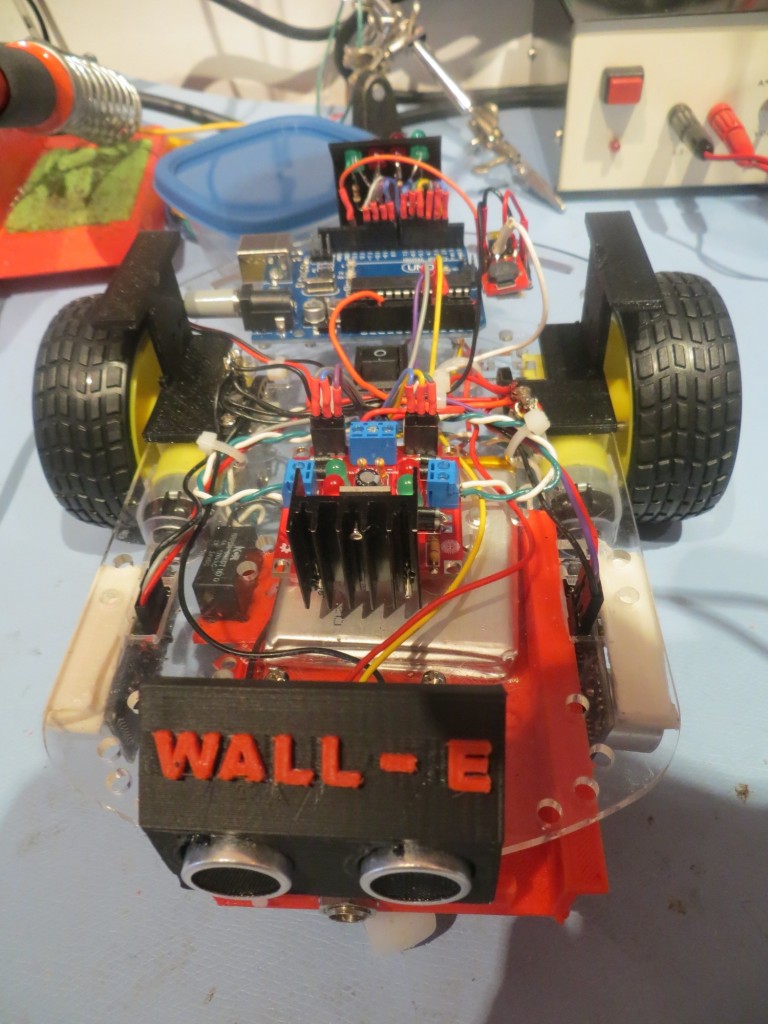

In this case I was driving to a bridge game at the local club and musing idly about Wall-E’s slipper fetish. I had done some fairly careful bench tests in debug mode and had discovered that the real issue wasn’t that the slippers were acoustically invisible; it was that they weren’t quite invisible, and the front ping sensor distance readings varied from 7 cm to infinity (infinity here being 200 cm). This is what was causing the ‘stuck’ detection scheme to fail: there was enough variability over the 1-2 second time frame that the detection threshold was never met. So, I’m thinking about this, and it suddenly occurred to me that the solution was to add a second forward-looking ping sensor above the current one, so that when Wall-E snuggled up against one of my wife’s slippers, the top sensor would still have a clear line of sight (and would hopefully either report a real distance to the next obstacle or report 0 for ‘clear’). In fact, I might even be able to exploit the variability of the bottom sensor in ‘slipper fetish’ mode by comparing the top and bottom sensor readings. A ‘clear’ (or constant real distance) reading from the top sensor and a varying one from the bottom sensor might be a definitive ‘stuck on a slipper’ determinant.
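The bench-test numbers show why the max/min scheme misses the slipper trap, and they suggest how the top/bottom comparison might be coded. The sketch below is hypothetical: `Deviation()`, `IsStuckOnSlipper()` and both thresholds are assumptions, and the 50 cm ‘erratic’ limit in particular is an untested guess rather than a value from the bench tests.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical thresholds: 5 cm mirrors the original 'stuck' limit; the 50 cm
// 'erratic' limit is an untested guess.
constexpr uint8_t kSteadyCm = 5;
constexpr uint8_t kErraticCm = 50;

// Max minus min over n readings (the same statistic the IsStuck() test uses).
uint8_t Deviation(const uint8_t* readings, int n) {
  assert(n > 0);
  uint8_t lo = readings[0];
  uint8_t hi = readings[0];
  for (int i = 1; i < n; ++i) {
    if (readings[i] < lo) lo = readings[i];
    if (readings[i] > hi) hi = readings[i];
  }
  return hi - lo;
}

// Proposed 'stuck on a slipper' test: the top sensor is steady (a constant
// 0 = 'clear', or a constant real distance) while the bottom sensor, its view
// partly blocked by fuzz, bounces between short readings and 'infinity'.
bool IsStuckOnSlipper(const uint8_t* top, const uint8_t* bottom, int n) {
  return Deviation(top, n) < kSteadyCm && Deviation(bottom, n) >= kErraticCm;
}
```

For example, a bottom sensor bouncing between 7 cm and 200 cm has a deviation of 200 - 7 = 193 cm, far above the 5 cm limit, so the original all-sensors test can never fire. With the top sensor holding a steady 0 (‘clear’), the proposed rule would.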

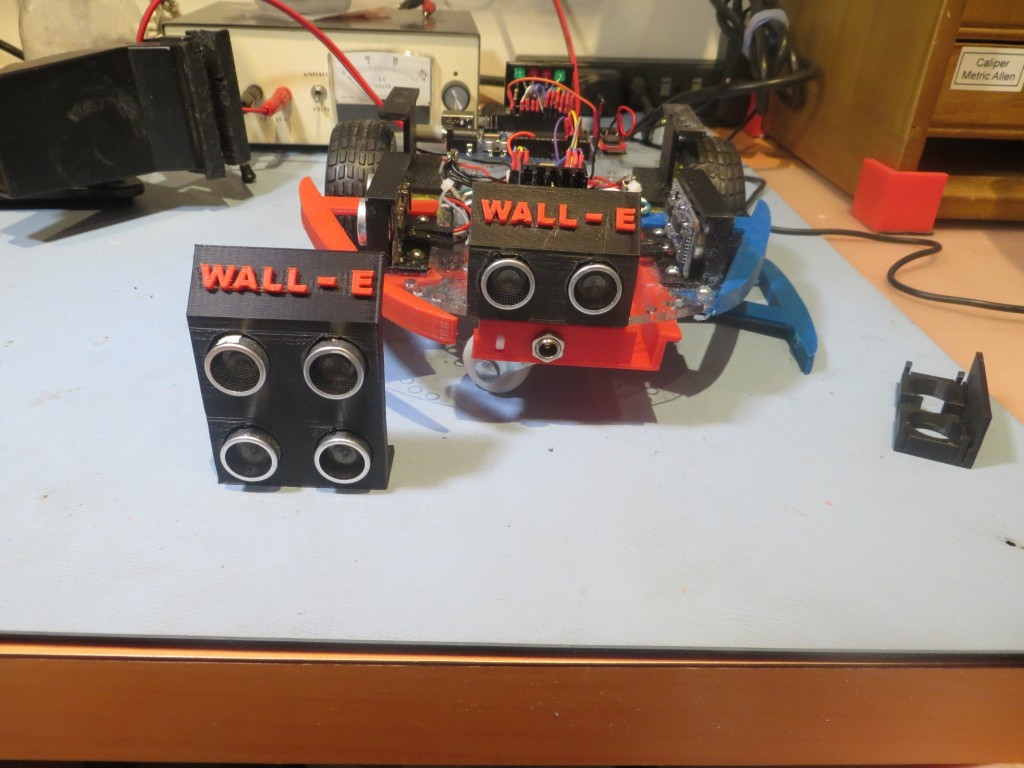

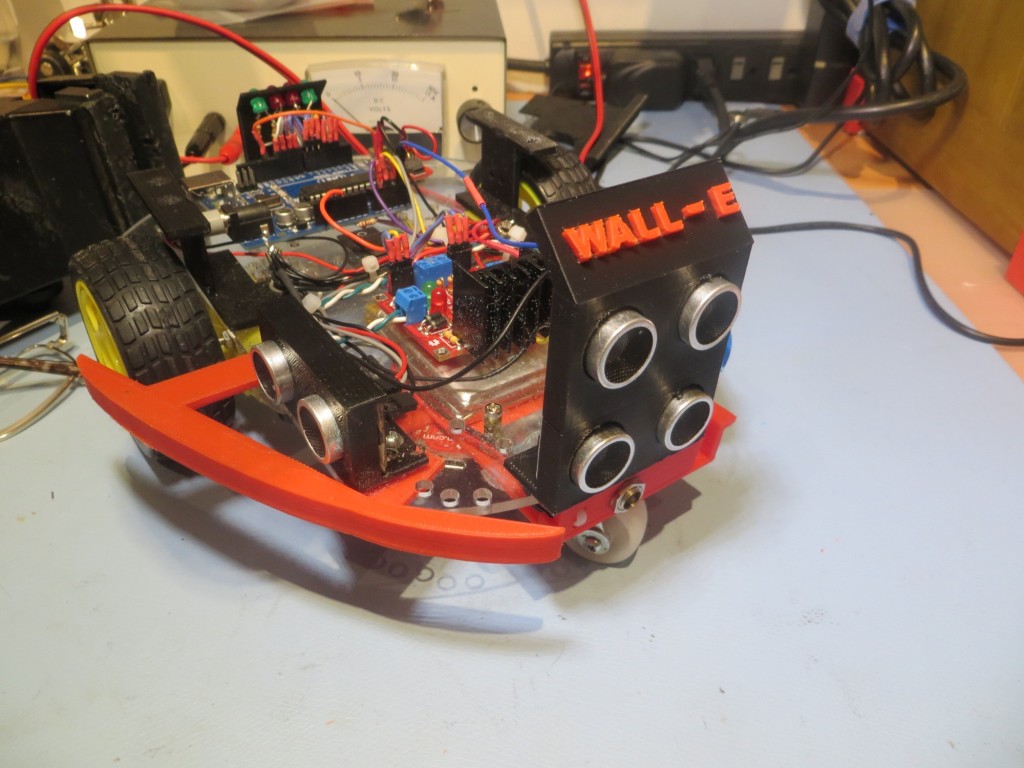

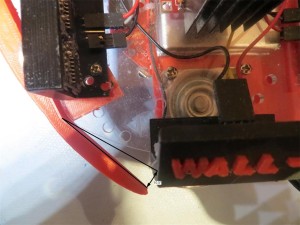

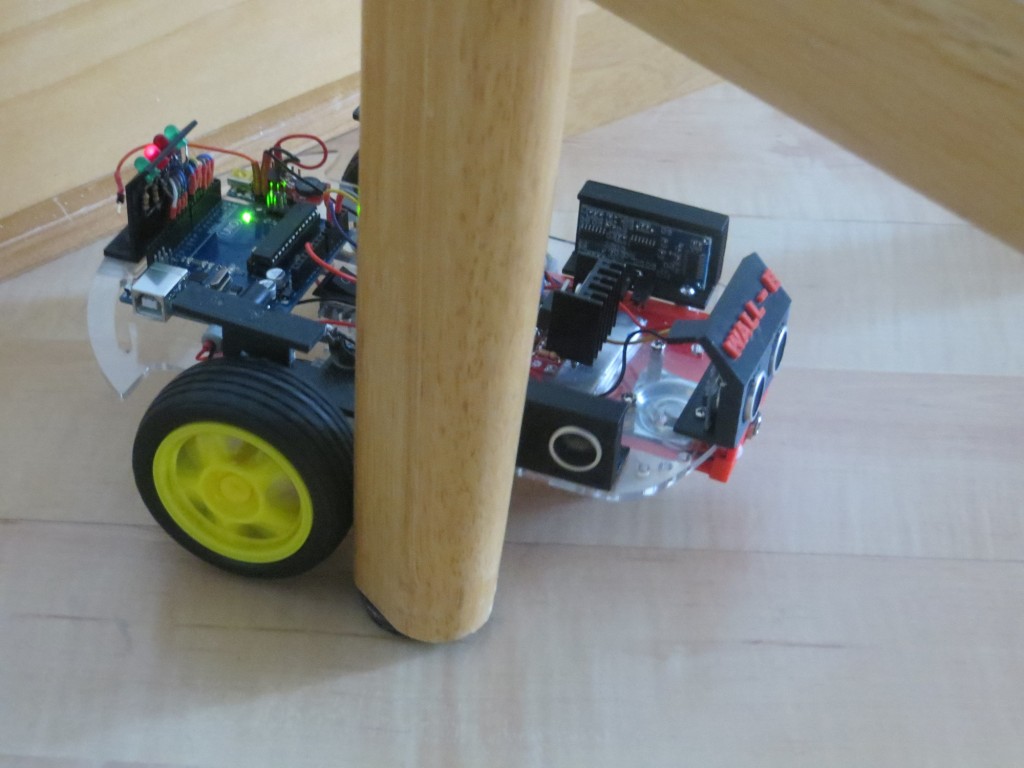

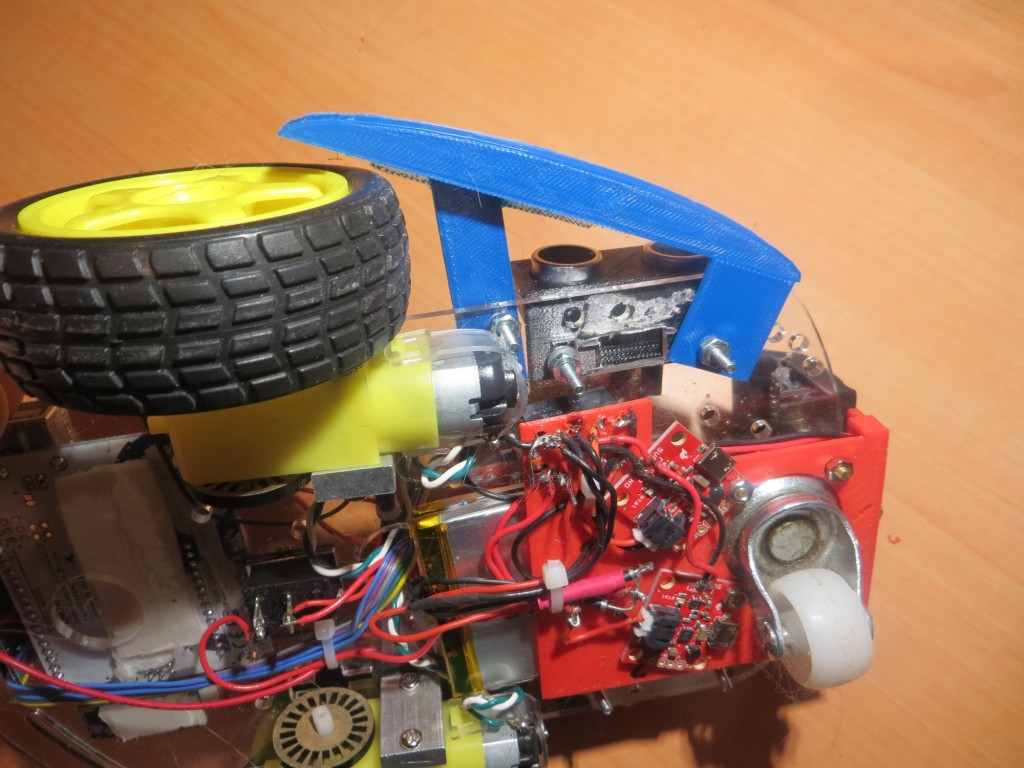

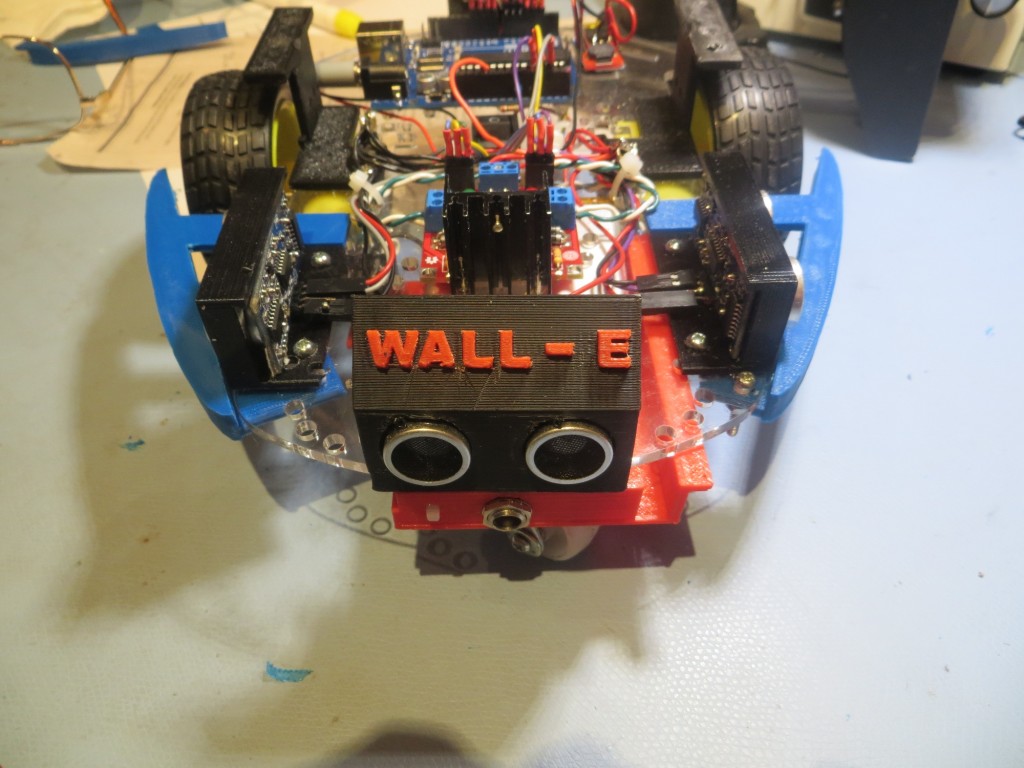

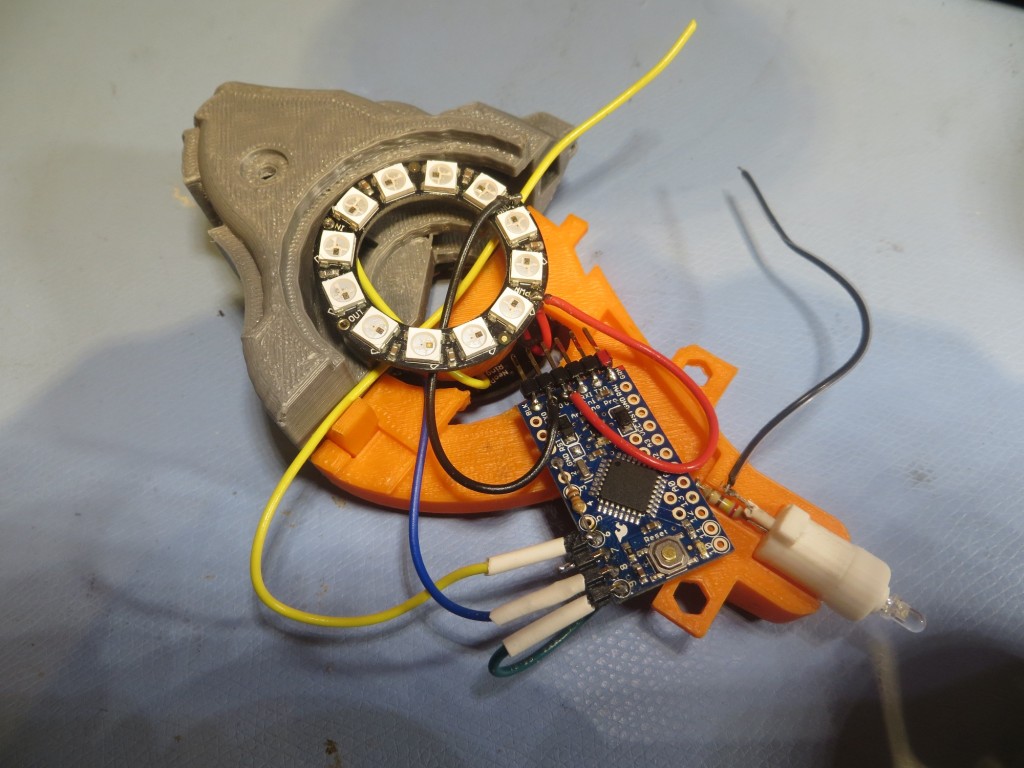

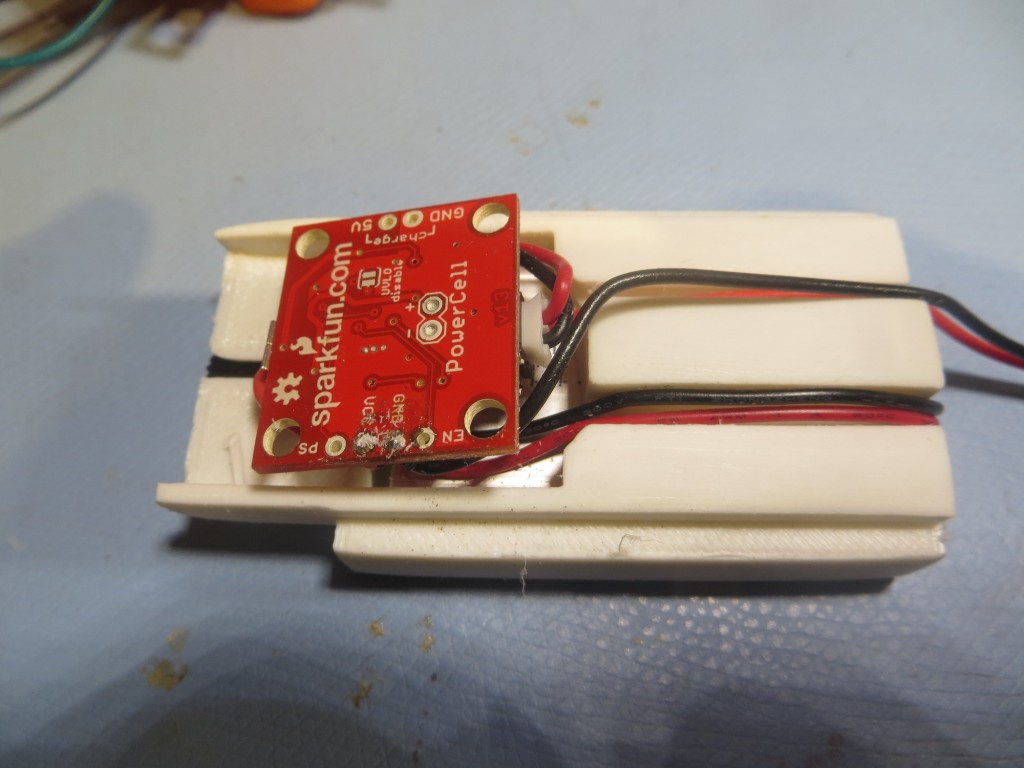

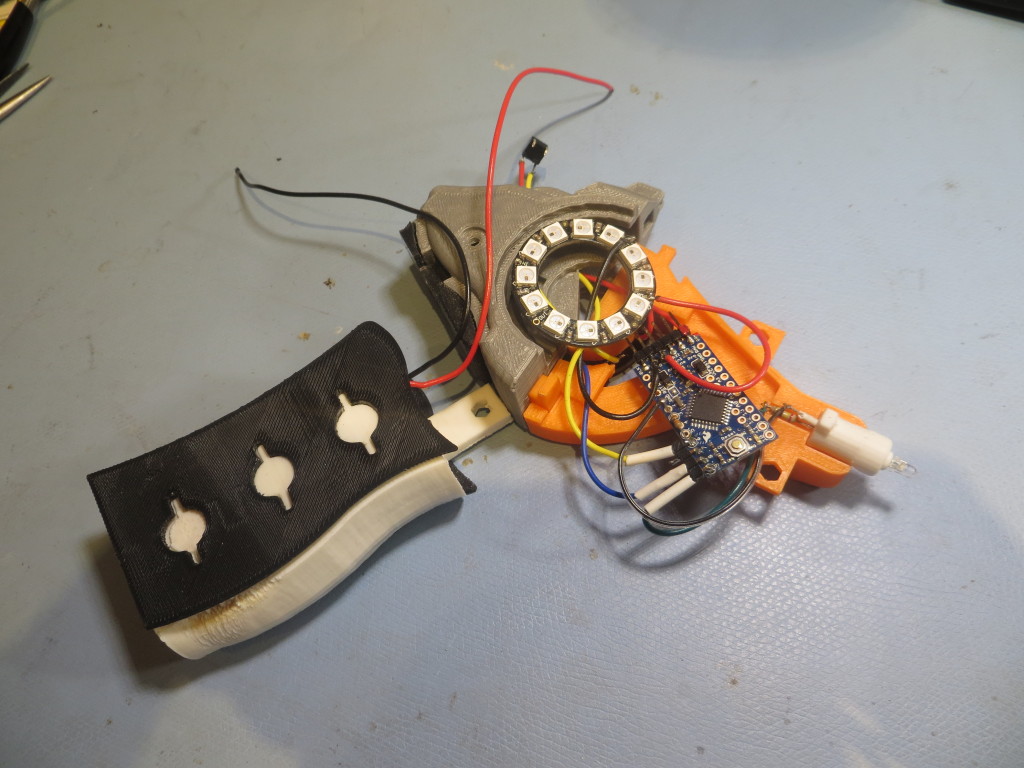

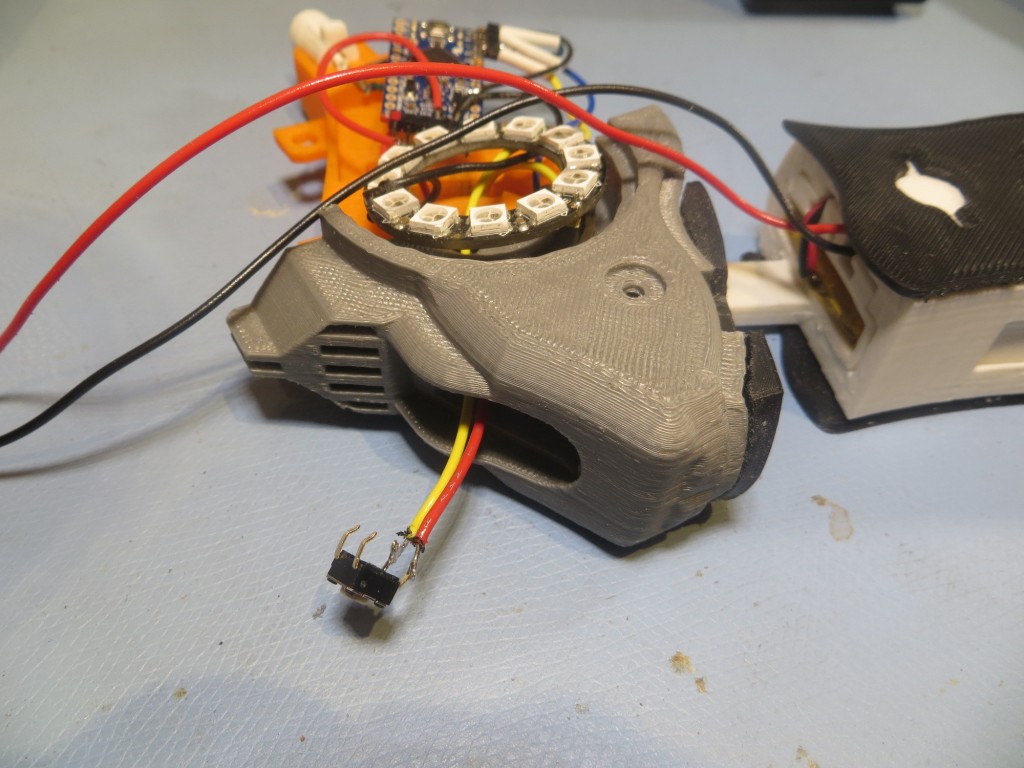

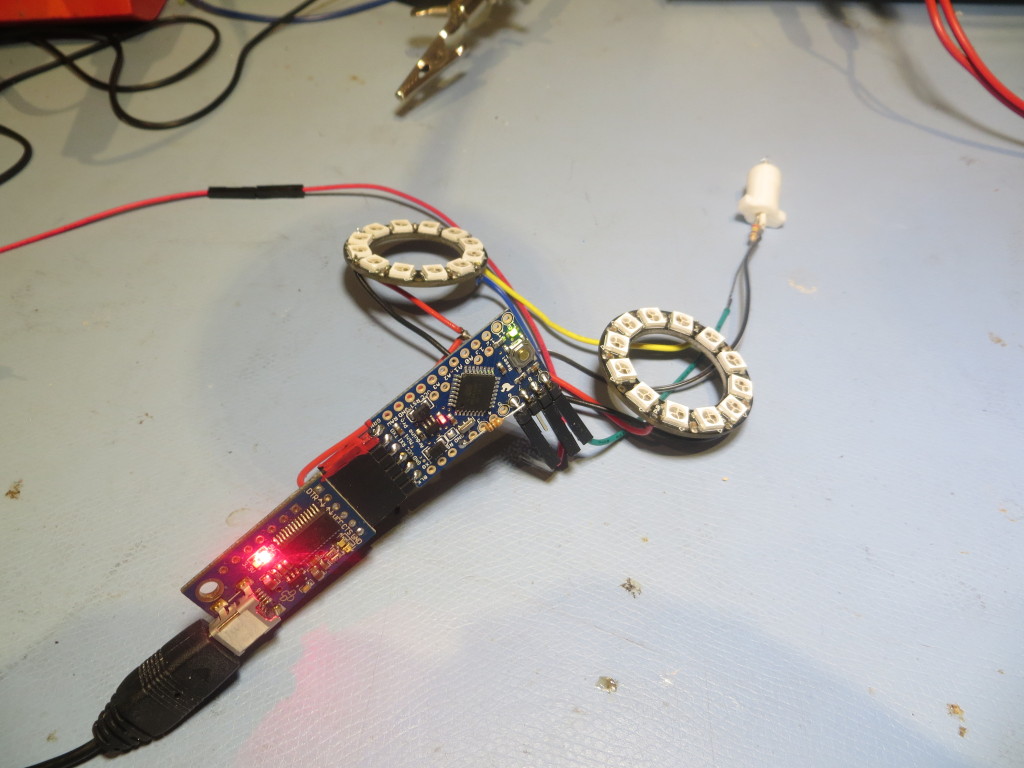

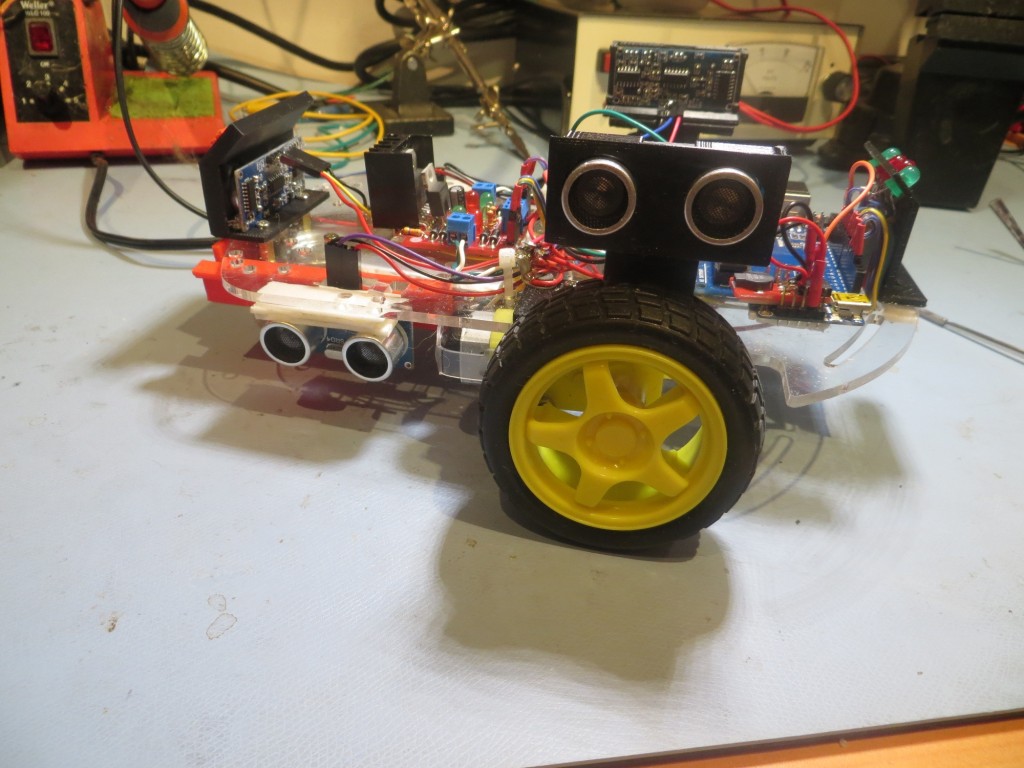

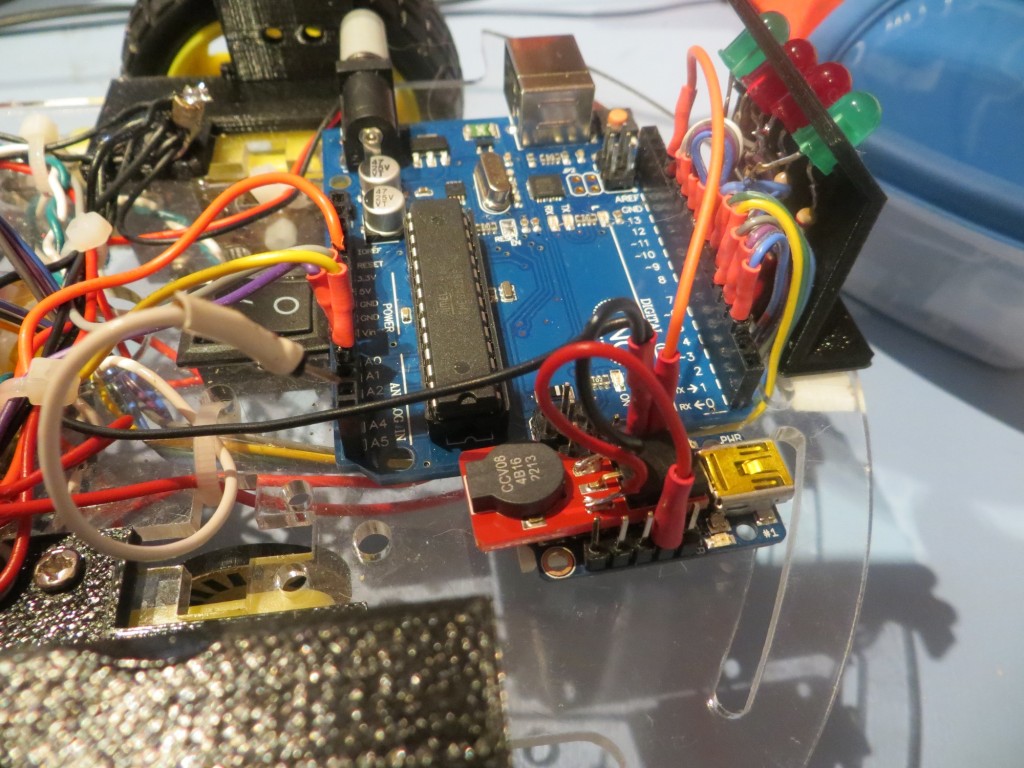

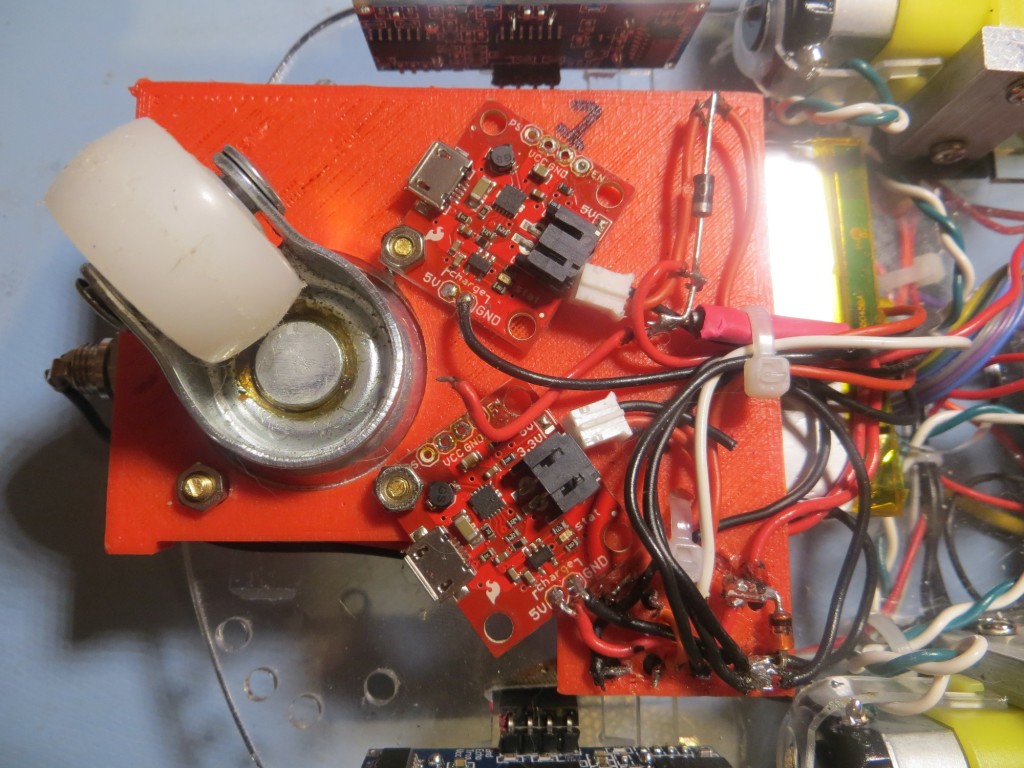

And, because I ‘have the (3D printing) technology’, I was able to modify the front sensor bracket design to add another ping sensor location above the existing one, and print it out on my PowerSpec 3D Pro printer. So, within a day of my drive-time ‘aha’, I had a new dual ping sensor bracket installed on Wall-E, and the second sensor wired into a spare analog input on the Arduino board. Next I’ll have to add a 4th array to the setup, but coding should be more or less copy and paste. I will have to figure out whether I can simply compare the top and bottom sensor data to determine the ‘stuck on a slipper’ condition, or whether I’ll have to include data from the left/right ping sensors as well, but I’m very optimistic that this is a winner!
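Rather than copying and pasting a 4th array, the histories could be indexed by sensor, so that adding a sensor is one more enum entry. This hypothetical sketch shows both options under consideration: comparing only the top and bottom sensors, or also requiring the left/right sensors to be steady. The sensor names, helper functions and the 50 cm ‘erratic’ threshold are all assumptions.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical sensor indices; adding the 4th sensor is one more enum entry.
enum Sensor { kLeft, kRight, kFrontLow, kFrontHigh, kNumSensors };

constexpr int K = 50;
constexpr uint8_t kSteadyCm = 5;    // same limit as the original 'stuck' test
constexpr uint8_t kErraticCm = 50;  // hypothetical, untested

uint8_t history[kNumSensors][K];    // one K-byte array per sensor, newest at K-1

// Shift the sensor's array down one slot (dropping the oldest reading)
// and store the new reading at the top.
void PushReading(Sensor s, uint8_t cm) {
  assert(s < kNumSensors);
  std::memmove(history[s], history[s] + 1, K - 1);
  history[s][K - 1] = cm;
}

// Max minus min over the stored readings for one sensor.
uint8_t Deviation(Sensor s) {
  uint8_t lo = history[s][0];
  uint8_t hi = history[s][0];
  for (int i = 1; i < K; ++i) {
    if (history[s][i] < lo) lo = history[s][i];
    if (history[s][i] > hi) hi = history[s][i];
  }
  return hi - lo;
}

// requireSidesSteady = false: compare top and bottom sensors only.
// requireSidesSteady = true: also require the left/right sensors to be steady,
// i.e. evidence that the robot is not actually moving.
bool IsStuckOnSlipper(bool requireSidesSteady) {
  bool topSteady = Deviation(kFrontHigh) < kSteadyCm;
  bool bottomErratic = Deviation(kFrontLow) >= kErraticCm;
  if (!topSteady || !bottomErratic) return false;
  if (!requireSidesSteady) return true;
  return Deviation(kLeft) < kSteadyCm && Deviation(kRight) < kSteadyCm;
}
```

Requiring the side sensors to be steady might reduce false alarms during a normal wall-following run, since those readings change while Wall-E is actually moving; whether that holds up on the real robot is something only testing would show.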