Posted 04/17/15

I haven’t had much time to work on the ‘stuck detection’ problem lately, as I have been playing duplicate bridge every day at the ACBL regional tournament here in Gatlinburg, Tennessee. However, my pick-up partner for the morning session didn’t show, so I’m using the free time to think about the problem some more.

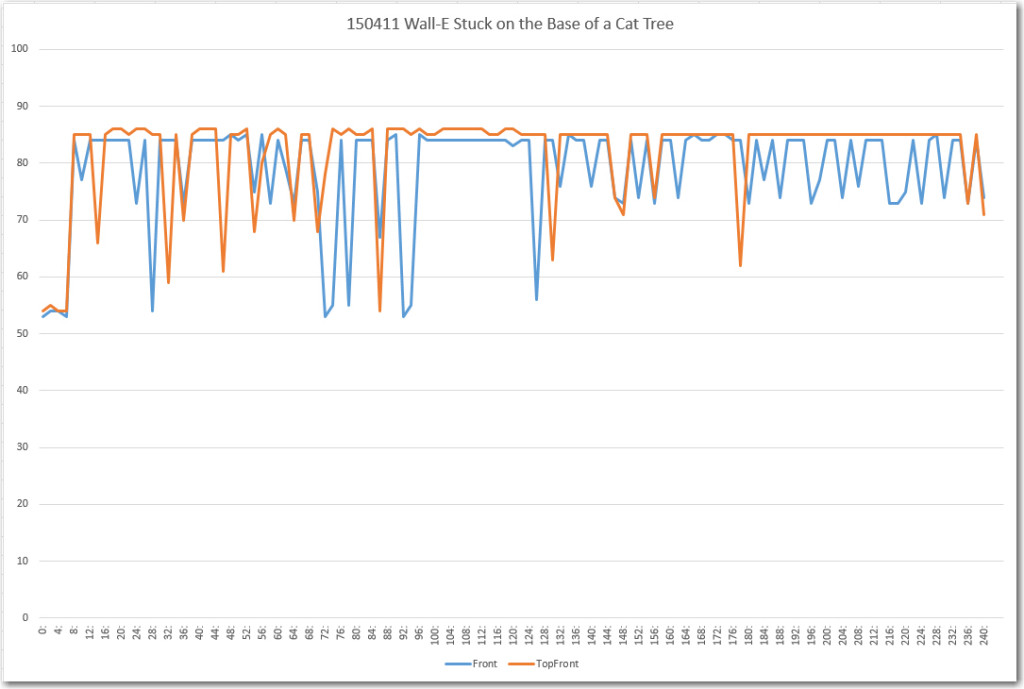

In my last post I showed that Wall-E gets confused in certain circumstances when what I believe to be multipath effects corrupt the data collected by the two front-facing ultrasonic sonar sensors. My current ‘stuck detection’ algorithm relies on at least the top sensor getting good, clean distance data, even if the lower one is completely or partially blocked (the ‘stealth slipper’ scenario). When the data from both sensors is corrupted, I’m screwed. The data I collected from a ‘stuck’ scenario using Wall-E’s on-board EEPROM, also shown in that post, made it crystal clear that both sensors were getting bad data. So, what to do? Time to go back to the drawing board and devise a new, improved ‘stuck detection’ algorithm that takes into account the new information.

Here’s what we have:

- The ‘stealth slipper’ scenario, where the lower sensor is partially or completely blocked while the upper one is not. In this case, the upper sensor may report a real distance if there is an obstacle within the MAX_DISTANCE_CM limit, or zero if there isn’t. The lower sensor can also sometimes report zero when it is blocked by the fuzzy slippers, because the slippers absorb or deflect enough of the ultrasonic energy to make the sensor think there’s nothing there. The ‘stuck’ detection algorithm handles this case with two tests, either of which declares a ‘stuck’ condition. First, if both sensors report very little variation over time (meaning the distance to the next obstacle isn’t changing), Wall-E is declared stuck. Second, if the variation over time of the bottom sensor differs significantly from that of the top sensor (the bottom sensor is partially blocked by the slipper and reports widely varying distances), Wall-E is also declared stuck. A potential flaw in this scheme is that either or both sensors can report a constant zero even when Wall-E is moving normally, simply because there isn’t anything within MAX_DISTANCE_CM. The current algorithm addresses this by setting MAX_DISTANCE_CM to 400 cm for the front sensors, on the theory that in a normal wall-following scenario there is always something within 4 meters (about 13 feet).

- The normal wall-following scenario, where (hopefully) the front sensors report a steadily declining distance, with enough variation over time to avoid triggering a ‘stuck’ detection. In this mode, the side sensor reporting the smaller distance will be used for primary left/right guidance.

- The multipath scenario, where the physical geometry is such that the front sensors report widely varying distances, sometimes the same and sometimes different. The present ‘stuck detection’ algorithm fails completely here: the top sensor reports enough variation over time to avoid triggering the first test, and the difference in variation between the top and bottom sensors isn’t large enough to trigger the second. I don’t think anything can be done about the first test, as variation over time is the only reliable indicator of movement. However, it seems to me that the second test (differential variation between the two sensors) can be improved. It essentially compares the average variation in the top sensor to the average variation in the bottom one, and that clearly doesn’t work in the multipath case. But if I compared the two sensor readings point by point over a few seconds, I should be able to detect the multipath case. Maybe keep a running count of differences that exceed a set threshold (from the plot, a 10 cm threshold looks like it would work), and declare a ‘stuck’ condition if the count per second exceeds some other threshold?
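To make the three cases concrete, here is a minimal C++ sketch of how the two existing tests and the proposed point-by-point test might fit together. It is an illustration under assumptions, not Wall-E’s actual code: the class name `FrontSonarWindow`, the window length, the use of standard deviation as the measure of "variation", and every threshold except the roughly 10 cm mismatch value read off the plot are hypothetical. It uses `std::deque` so it can be compiled and tested on a desktop; the AVR Arduino toolchain typically doesn’t ship the standard containers, so on the robot a fixed-size circular buffer would be the likely substitute. With a fixed sample rate, a mismatch count per window is equivalent to the count-per-second idea above.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdlib>
#include <deque>

// Hypothetical thresholds (assumptions), except the 10 cm mismatch
// threshold, which is the value suggested by the plot.
constexpr double kMinMovingStdDevCm   = 2.0;  // below this, a sensor "isn't changing"
constexpr double kMaxStdDevRatio      = 3.0;  // spread ratio that counts as "different"
constexpr int    kMismatchThresholdCm = 10;   // point-by-point disagreement threshold
constexpr double kMaxMismatchFraction = 0.5;  // fraction of pairs allowed to disagree

// Sliding window of paired top/bottom front-sonar readings, in cm.
class FrontSonarWindow {
 public:
  explicit FrontSonarWindow(std::size_t capacity) : capacity_(capacity) {
    assert(capacity > 1);
  }

  void Add(int topCm, int bottomCm) {
    top_.push_back(topCm);
    bottom_.push_back(bottomCm);
    if (top_.size() > capacity_) {  // drop the oldest pair
      top_.pop_front();
      bottom_.pop_front();
    }
  }

  bool Full() const { return top_.size() == capacity_; }

  // Test 1: neither sensor's readings vary much, so the distance to the
  // next obstacle isn't changing.
  bool NoVariation() const {
    return StdDev(top_) < kMinMovingStdDevCm &&
           StdDev(bottom_) < kMinMovingStdDevCm;
  }

  // Test 2: one sensor's overall spread is much larger than the other's
  // (e.g. the bottom sensor partially blocked by a slipper). The smaller
  // spread is floored at 1 cm so a perfectly steady sensor isn't compared
  // against zero.
  bool VariationMismatch() const {
    const double a = StdDev(top_);
    const double b = StdDev(bottom_);
    return std::fmax(a, b) > kMaxStdDevRatio * std::fmax(std::fmin(a, b), 1.0);
  }

  // Proposed test 3: compare the sensors sample by sample and count the
  // pairs that disagree by more than kMismatchThresholdCm.
  bool MultipathSuspected() const {
    std::size_t mismatches = 0;
    for (std::size_t i = 0; i < top_.size(); ++i) {
      if (std::abs(top_[i] - bottom_[i]) > kMismatchThresholdCm) ++mismatches;
    }
    return static_cast<double>(mismatches) >
           kMaxMismatchFraction * static_cast<double>(top_.size());
  }

  // Declare 'stuck' only once the window is full and any test fires.
  bool Stuck() const {
    return Full() && (NoVariation() || VariationMismatch() || MultipathSuspected());
  }

 private:
  // Population standard deviation; 0 for an empty window.
  static double StdDev(const std::deque<int>& v) {
    if (v.empty()) return 0.0;
    double mean = 0.0;
    for (int x : v) mean += x;
    mean /= static_cast<double>(v.size());
    double var = 0.0;
    for (int x : v) var += (x - mean) * (x - mean);
    return std::sqrt(var / static_cast<double>(v.size()));
  }

  std::size_t capacity_;
  std::deque<int> top_;
  std::deque<int> bottom_;
};
```

For example, feeding a 10-sample window a steadily declining distance on the top sensor and the same trend with a ±30 cm alternating error on the bottom sensor leaves tests 1 and 2 quiet (the two spreads differ by only about a factor of two), while `MultipathSuspected()` fires because every pair disagrees by more than 10 cm. One thing the logged data should reveal is whether the two front sensors ever disagree by more than 10 cm during normal driving, since this sketch would flag that as stuck too.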

Anyway, I’m starting to think there may be some hope for Wall-E after all; maybe he won’t wind up stuck forever under a cat tree somewhere. Still, it would be wise to use my newfound EEPROM powers to collect some more ‘real-world’ data before I jump to conclusions. I might even include the side sensors in the logging, so I can see what actually happens during a typical wall-following sequence as well as when Wall-E is stuck. I can probably capture at least 10-20 seconds of data without running out of EEPROM space, so we’ll see.
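To get a feel for the 10-20 second estimate, here is a back-of-the-envelope sketch in C++. The record layout is an assumption: four readings per sample (top and bottom front, left and right side), a hypothetical sampling rate, and either two bytes per reading or a hypothetical one-byte encoding at 2 cm resolution. The EEPROM sizes used in the example are the datasheet values for two common Arduino chips (1024 bytes on the ATmega328P, 4096 bytes on the ATmega2560); the sketch doesn’t assume which one Wall-E uses, and the function names are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Rough capacity estimate: how many seconds of readings fit in EEPROM?
// Ignores any header, timestamp, or write-pointer bytes a real logger needs.
inline double LoggingSeconds(std::size_t eepromBytes, std::size_t sensorsPerRecord,
                             std::size_t bytesPerReading, double samplesPerSecond) {
  assert(sensorsPerRecord > 0 && bytesPerReading > 0 && samplesPerSecond > 0.0);
  // Whole records only; a partial record at the end is wasted space.
  const std::size_t records = eepromBytes / (sensorsPerRecord * bytesPerReading);
  return static_cast<double>(records) / samplesPerSecond;
}

// Hypothetical one-byte encoding: 2 cm resolution covers 0..510 cm,
// which includes the 400 cm MAX_DISTANCE_CM used for the front sensors.
inline std::uint8_t EncodeCm(int cm) {
  if (cm < 0) cm = 0;
  if (cm > 510) cm = 510;
  return static_cast<std::uint8_t>(cm / 2);
}

// Inverse of EncodeCm (odd distances come back rounded down to even).
inline int DecodeCm(std::uint8_t code) { return 2 * code; }
```

At an assumed 20 samples per second, `LoggingSeconds(4096, 4, 2, 20.0)` works out to 25.6 seconds, while `LoggingSeconds(1024, 4, 2, 20.0)` gives only 6.4 seconds; packing each reading into one byte doubles the latter to 12.8 seconds, at the cost of 2 cm resolution.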

Stay tuned,

Frank