Posted 04/01/2015 (not an April fool’s joke!)

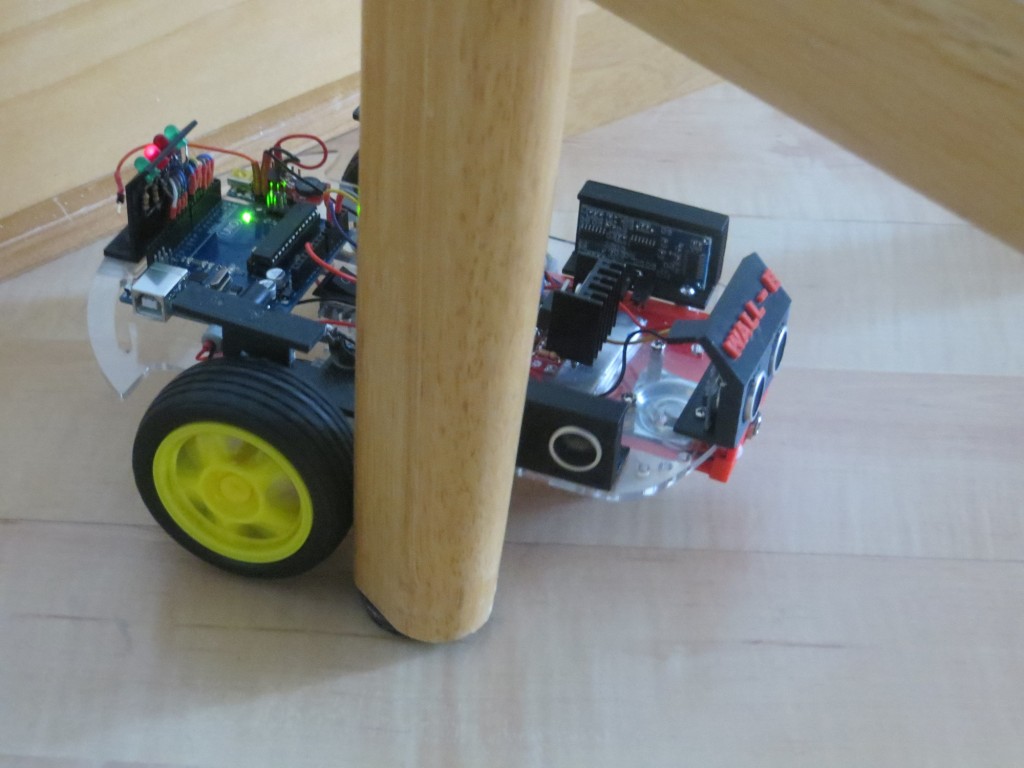

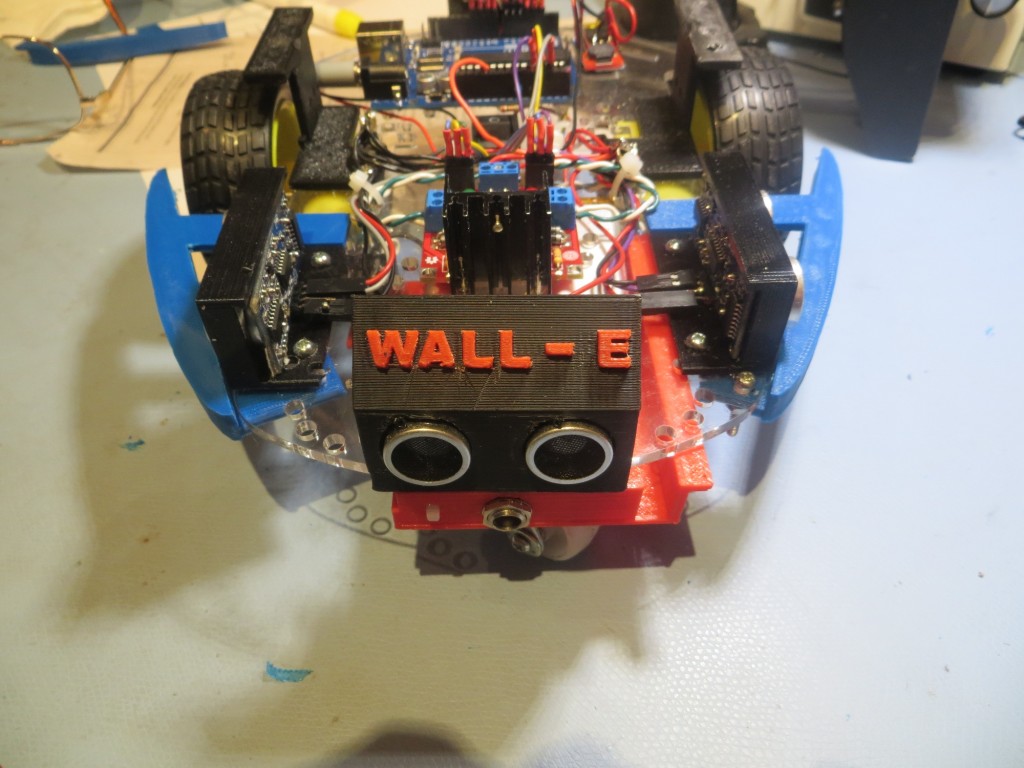

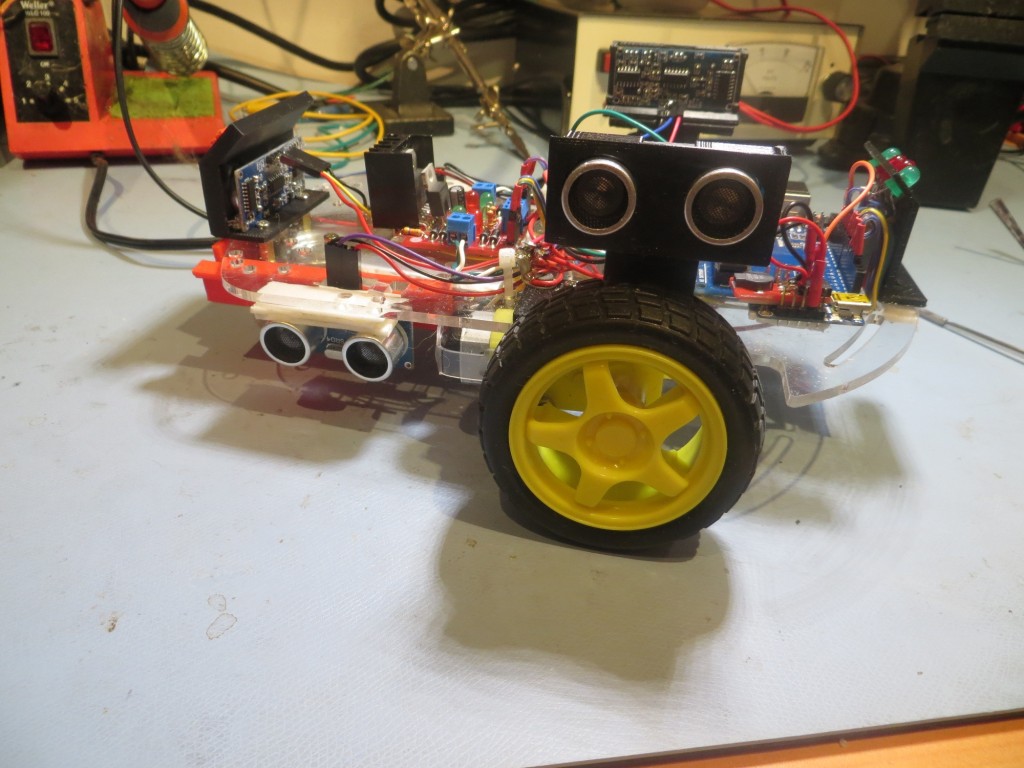

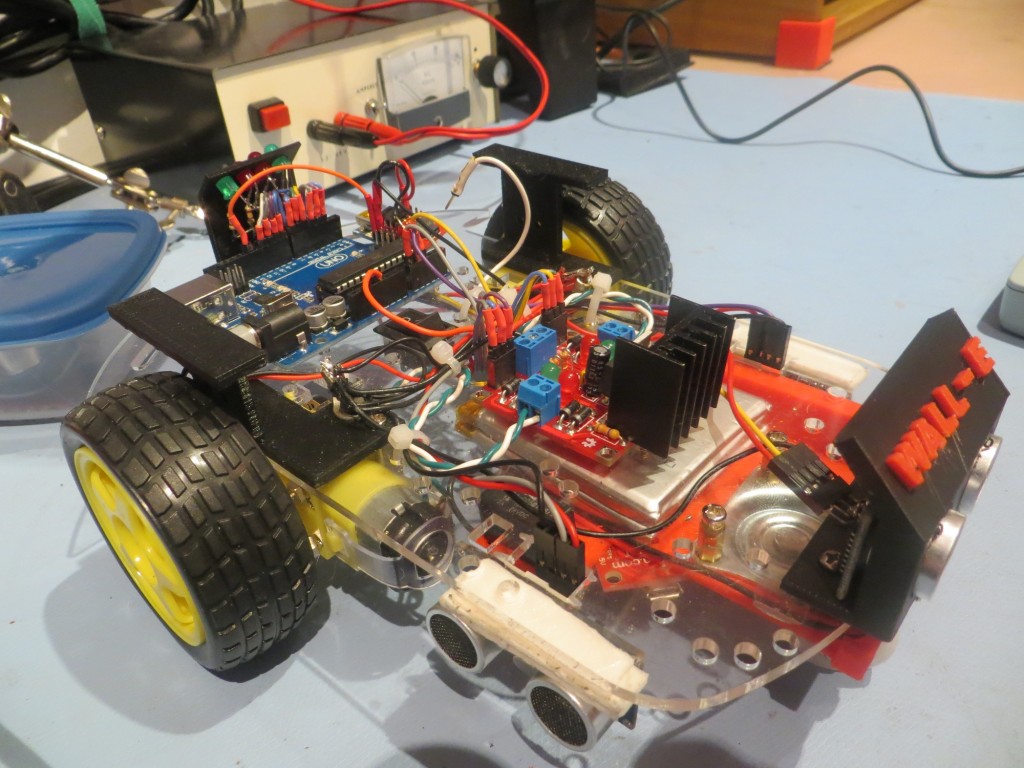

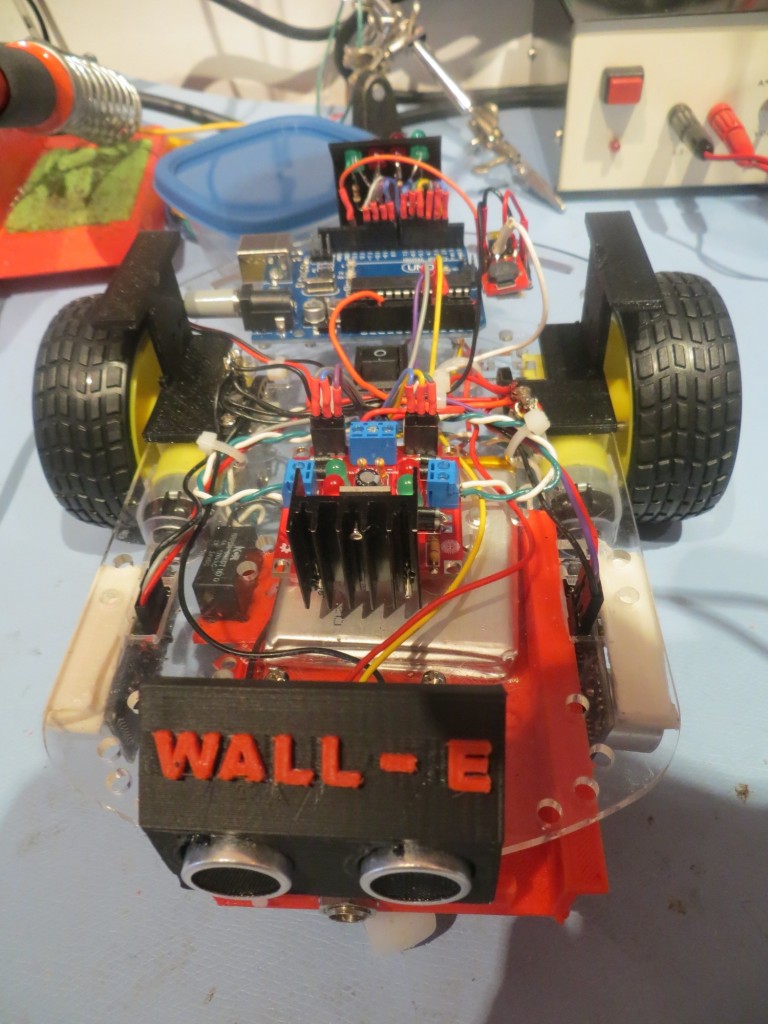

As I mentioned in my last post (Baby Gets a New Bumper) one of the outstanding issues with the robot is that it still gets stuck when it runs into something that is too low (like the feet of our coat rack or my wife’s slippers) to trigger the front sensor obstacle avoidance routine. It then sits there, spinning it’s wheels fruitlessly, forever (or until someone takes pity on it and waves a foot in front of its front ping sensor).

The current obstacle detection scheme is simply to monitor the front ping sensor distance readings and trigger the avoidance routine whenever the front distance falls below a set threshold. Unfortunately there are some configurations like the ones mentioned above where this detection scheme fails.

So, the idea is to enhance this with an algorithm that detects the situation where the wheels are still engaged, but the robot isn’t moving. So, how to detect “isn’t moving”? If the robot isn’t moving, then subsequent distances reported by any of the ping sensors will be the same, so that might work. I actually used this algorithm initially for obstacle detection avoidance by comparing adjacent front ping sensor distance measurements. The idea was that if adjacent measurements matched, the robot was stuck. However, I soon found at least two significant issues with this algorithm:

- Ping sensors report a distance of zero whenever the actual distance is beyond the max detection range (set to 200 cm for my robot), so the obstacle detection scheme would trigger when there was nothing ahead at all.

- It is quite possible for the reported distance to change 1 or 2 units either way, just due to round-off/truncation errors, so just comparing two adjacent measurements will be error-prone.

I can address the first of the issues above by using all three sensors, on the theory that the robot can’t be 200 cm from all three sensors at the same time (and if it is, it needs to go somewhere else anyway!). Unfortunately, this will require three times the work, but oh well ;-).

The second issue can be addressed with some sort of filtering/averaging scheme, which pretty much by definition implies some sort of multi-reading state memory and associated management code for each of the three sensors. Fortunately my recent timing study revealed that the time required for the current movement/detection/movement code is insignificant compared to the delays inherent in the ping sensors themselves, so the additional time required to implement a more sophisticated ‘stuck’ detection scheme shouldn’t be a problem.

So, what about ‘stuck’ detection and filtering? Assume we want to detect the ‘stuck’ condition within a few seconds of its occurrence (say 5?), and that each sensor is reporting about 20-50 readings per second. So, 5 seconds worth of readings at 50 readings/sec is 250 readings. Times 3 sensors is 750 readings, assuming I want to store the entire 5 seconds for all three sensors.

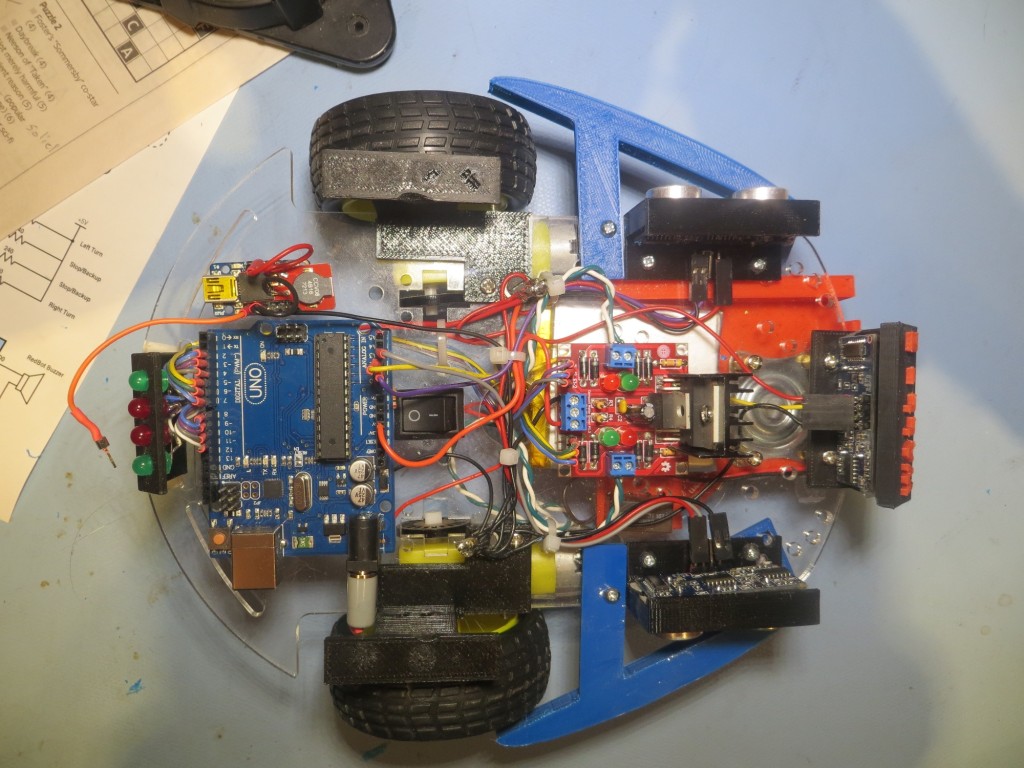

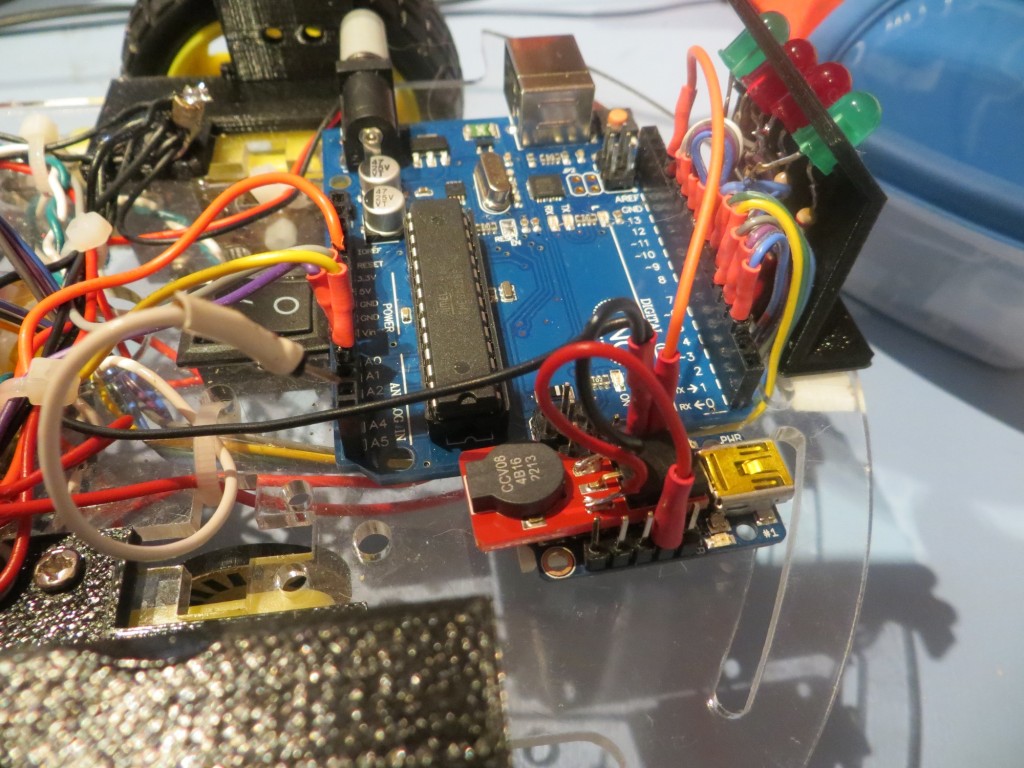

750 readings means 750 short integers (the distance range is 0-200 cm, which I should be able to store in an 8-bit unsigned short int), which should translate to just 750 bytes in RAM. The Arduino Uno I’m using has 2048 bytes of RAM, of which I am currently using only 246 bytes. So, I should have plenty of RAM space, assuming nothing in my code makes the stack grow too much.

Another way to skin the cat might be to just store the 5 second running average differential for each sensor, on the theory that the average differential would be some non-zero value while the robot is actually moving, but would trend to zero if it grinds to a halt somewhere. Have to think about how I would actually implement that…..

The central problem here is how to differentiate the ‘stuck’ condition from one where the robot is following a wall as normal, with no other wall or forward obstacle within range, or no forward obstacle but the ‘other’ wall is a constant distance away (a hallway, for example). In both of these cases, all three ping sensors could, conceivably, report constant values (distance to left wall, distance to right wall, distance to forward obstacle). IOW, holding a constant position with respect to both walls and having no forward obstacle would be indistinguishable from the ‘stuck’ condition. The good news here is that even the best that Wall-E can do in terms of wall following involves a lot of back-and-forth ‘hunting’ movements, so there should be enough short term variation in the left/right distances to distinguish active wall following from the ‘stuck’ state. The bad news is that since the variation is cyclic, it is possible to select a period such that the short term variation gets aliased (or averaged) out – resulting in false positives.

Looking at the most recent video of Wall-E in action (see below), it appears the ‘hunting’ motion has a period of about 1 second, which would indicate the best time frame for distinguishing ‘stuck’ from ‘active’. So, if I store measurements for about 1 second (i.e. about 20 – 50 measurements) from all three sensors, then the ‘active’ condition should show a full cycle of ‘hunting’ behavior from the left and/or right sensors, while the ‘stuck’ condition should have little/no variation across that time frame. The forward sensor won’t show the ‘hunting’ behavior in ‘active’ mode, but it should show either 0 (no obstacle within range) or a constantly declining value as an obstacle is approached. For the forward sensor, there is no way to distinguish the ‘stuck’ condition from the ‘active’ condition with no obstacle in range- oh well.

So, I think I’m getting a feel for the algorithm. Set up an array of distance readings for the left, right and front sensors sufficient to hold about one second of readings each. Each time through the movement loop, calculate the max deviation from one end of each array to the other. If the deviation for all three arrays is below some threshold, declare the ‘stuck’ condition.

Next up – implementation and testing.

Frank